Abhishek/IMS Gsoc2014Proposal

Personal Details

- Name : Abhishek Kumar Singh

- Location(Time Zone): India, IST(UTC + 5:30)

- Education : B.Tech in Computer Science and Engineering

- Email : abhishekkumarsingh.cse@gmail.com

- Blog URL : http://abhisheksingh01.wordpress.com/

Full body and hand gesture tracking

Abstract

This project integrates whole-body motion and hand gesture tracking of astronauts into the ERAS (European MaRs Analogue Station for Advanced Technologies Integration) virtual station. Skeleton-tracking-based feature extraction will be used to track whole-body movements and hand gestures, which will be represented visibly by an astronaut avatar moving in the virtual ERAS station environment.

Benefits to ERAS

“By failing to prepare, you are preparing to fail.” ― Benjamin Franklin

It will help astronauts become familiar with their habitat/station, the procedures for entering and leaving it, communication with other astronauts and rovers, and so on. Training beforehand in the virtual environment will boost their confidence and greatly reduce the chance of failure, ultimately increasing the success rate of the mission.

Project Details

INTRODUCTION

The idea of integrating full body and hand gesture tracking was proposed after a thorough discussion with the ERAS community. The proposed method uses 3D skeleton tracking with a depth camera, the Kinect sensor (Kinect Xbox 360 in this case), to capture approximate human poses, reconstruct them and display a 3D skeleton in the virtual scene using OpenNI, PrimeSense NITE and the Blender game engine. The technique will detect bone joint movements in real time with correct position tracking and display a 3D skeleton in a virtual environment, with the ability to control 3D character movements. The idea is to dig deeper into skeleton tracking features in order to track whole body movements and capture hand gestures. The software should also maintain the long-term robustness and quality of the tracker. The code should be simple and efficient, with automated behavior and little or no boilerplate. It should also follow the coding style set by the IMS (Italian Mars Society) coding guidelines.

Another important feature of the tracker software is long-term sustainability, so that it can support future improvements. In other words, the code and tests must be easy to modify when the core tracker code changes, to minimize the time needed to fix them after architectural changes to the tracker software. This would let developers refactor the software with more confidence. The details of the project and the proposed plan of action follow.

REQUIREMENTS DURING DEVELOPMENT

- Hardware Requirements

- Kinect Sensor(Kinect Xbox 360)

- A modern PC/Laptop

- Software Requirements

- OpenNI/NITE library

- Blender game engine

- Tango server

- Python 2.7.x

- Python Unit-testing framework

- Coverage

- Pep8

- Pyflakes

- Vim (IDE)

THE OUTLINE OF WORK PLAN

Skeleton tracking will be done using the Kinect sensor and the OpenNI/NITE framework. The Kinect sensor generates a depth map in real time, where each pixel corresponds to an estimate of the distance between the Kinect sensor and the closest object in the scene at that pixel's location. Based on this map, an application will be developed to accurately track different parts of the human body in three dimensions.
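To make the depth map idea concrete, here is a minimal sketch of how a single depth pixel could be turned into a 3D point with a simple pinhole camera model. The focal length and image centre below are placeholder values assumed for a 640x480 depth image, not calibrated Kinect parameters, and `depth_pixel_to_point` is a hypothetical helper name. In the actual project this conversion would normally be left to OpenNI/NITE.

```python
from collections import namedtuple

Point3D = namedtuple("Point3D", ["x", "y", "z"])

# Placeholder intrinsics (assumed, not calibrated) for a 640x480 depth image.
FOCAL_LENGTH_PX = 575.0
CENTER_X = 320.0
CENTER_Y = 240.0


def depth_pixel_to_point(u, v, depth_mm):
    """Back-project pixel (u, v) with a depth in millimetres to a 3D point.

    Returns None when the depth is not positive, which this sketch
    treats as "no reading" for that pixel (an assumption).
    """
    if depth_mm <= 0:
        return None
    x = (u - CENTER_X) * depth_mm / FOCAL_LENGTH_PX
    # Image rows grow downward, so flip the sign to get Y pointing up.
    y = (CENTER_Y - v) * depth_mm / FOCAL_LENGTH_PX
    return Point3D(x, y, float(depth_mm))
```

A pixel at the image centre maps to a point straight in front of the sensor, and pixels further from the centre map to points further off-axis in proportion to their depth.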

OpenNI allows applications to be written independently of the specific middleware, so code can interface directly with OpenNI while using the functionality of the PrimeSense NITE middleware. The main purpose of the NITE middleware is image processing, which enables both hand-point tracking and skeleton tracking. The whole skeleton can be tracked with this technique; however, the main focus of the project will be on developing a framework for full body motion and hand gesture tracking that can later be integrated with the ERAS virtual station. The following flow chart gives a pictorial view of the working steps.

The whole work is divided into three phases:

- Phase I : Skeleton Tracking

- Phase II : Integrating tracker with Tango server and prototype development of a glue object

- Phase III : Displaying 3D Skeleton in 3D virtual scene

Phase I : Skeleton Tracking

This phase covers tracking of full body movements and capturing of hand gestures. RGB and depth stream data are taken from the Kinect sensor and passed to the PSDK (Prime Sensor Development Kit) for skeleton calibration.

Skeleton Calibration : Calibration is done to gain control over the controlling device.

Skeleton calibration can be done :

- Manually, or

- Automatically

Manual Calibration :

- For manual calibration the user is required to stand in front of the Kinect with the whole body visible and hold both hands in the air (the 'psi' position) for a few seconds. This process might take 10 seconds or more, depending on the position of the Kinect sensor.

Automatic Calibration :

- It enables NITE to start tracking a user without requiring a calibration pose, and creates a skeleton shortly after the user enters the scene. Although the skeleton appears immediately, auto-calibration takes several seconds to settle at accurate measurements. Initially the skeleton might be noisy and less accurate, but once auto-calibration determines stable measurements the skeleton output becomes smooth and accurate.

Both methods have drawbacks and limitations, so the proposed application will let the user choose between the two calibration methods. A user can go out of view only if the training session is interrupted. So we will ask the user (who will always occupy the same VR station) to do a manual calibration at the beginning of the week, and an automatic recalibration can then happen every time a simulation restarts in the same training rotation.
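The weekly policy described above could be sketched as a small decision function. This is only an illustration: `choose_calibration` and the two mode constants are hypothetical names, and the sketch assumes that a "training rotation" corresponds to one ISO calendar week.

```python
from datetime import date

MANUAL = "manual"
AUTOMATIC = "automatic"


def choose_calibration(today, last_manual_calibration):
    """Pick the calibration mode to run when a simulation (re)starts.

    today and last_manual_calibration are datetime.date objects;
    last_manual_calibration is None if the user was never calibrated.
    """
    if last_manual_calibration is None:
        return MANUAL
    # Compare (ISO year, ISO week number) of both dates.
    this_week = tuple(today.isocalendar())[:2]
    calibrated_week = tuple(last_manual_calibration.isocalendar())[:2]
    return AUTOMATIC if this_week == calibrated_week else MANUAL
```

For example, a restart on Wednesday after a manual calibration on Monday of the same week would use automatic recalibration, while the first session of the following week would ask for the manual pose again.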

Skeleton Tracking :

Once calibration is done, OpenNI/NITE will start the algorithm for tracking the user's skeleton. If the person goes out of the frame but comes back quickly, tracking continues. However, if the person stays out of the frame for too long, Kinect recognizes that person as a new user once she/he comes back, and the calibration needs to be done again. One advantage here is that Kinect does not need to see the whole body if tracking is configured as upper-body only.
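The re-entry behaviour described above is handled by the tracking middleware, but the application may want to mirror it in order to prompt the user for recalibration. A minimal sketch follows, assuming a hypothetical grace period of 10 seconds (the real threshold is not stated here) and a hypothetical `UserTrackState` class.

```python
class UserTrackState(object):
    """Track whether a returning user may resume or must recalibrate."""

    def __init__(self, grace_period_s=10.0):
        # Assumed threshold; the middleware's real value may differ.
        self.grace_period_s = grace_period_s
        self.lost_at = None

    def user_lost(self, timestamp_s):
        """Record the moment the user left the frame (first loss only)."""
        if self.lost_at is None:
            self.lost_at = timestamp_s

    def user_returned(self, timestamp_s):
        """Return True if tracking can continue, False if the user
        stayed away too long and calibration must be repeated."""
        if self.lost_at is None:
            return True
        away_s = timestamp_s - self.lost_at
        self.lost_at = None
        return away_s <= self.grace_period_s
```

Timestamps are plain seconds here, so the class can be driven by any clock, including a fake one in unit tests.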

output : NITE APIs will return the positions and orientations of the skeleton joints.

Phase II : Integrating tracker with Tango server and prototype development of a glue object

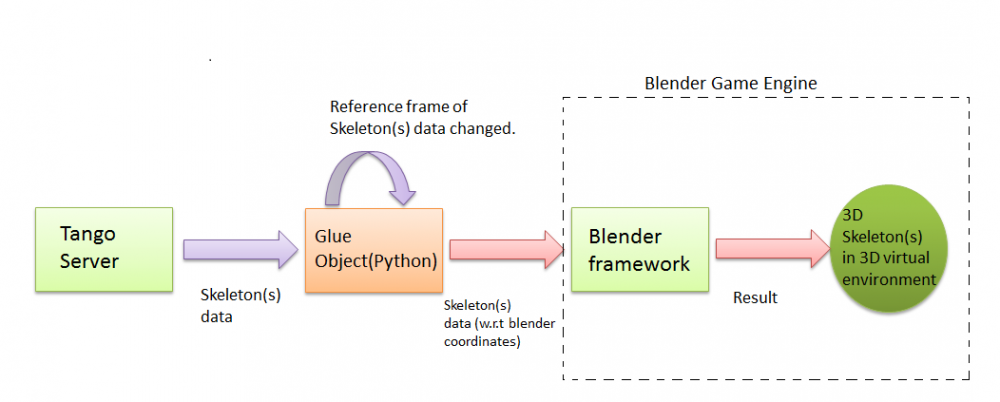

Whatever we build here must be ready to support multiple players (a crew of 4/6 astronauts), so there will be 4/6 Kinect sensors and 4/6 computers, each supporting a virtual station. The application must be able to populate each astronaut's environment with the avatars of all crew members. The idea is that Tango will pass around the skeleton data of all crew members for cross visualization. Skeleton data obtained from each instance of the tracker will be published to the Tango server as Tango parameters. A prototype will be developed to change the reference frame of the tracked data from the NITE framework to the Blender reference frame and send it to Blender for further processing. It is called a glue object since it acts as an interface between NITE and Blender. Since Blender supports Python bindings, this glue object will be written in Python.
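The reference frame change performed by the glue object might look roughly like the sketch below. The axis conventions are assumptions that must be checked against the real sensor output and the Blender scene: the tracker is assumed to report millimetres with X to the right, Y up and Z away from the sensor, and the Blender scene is assumed to use metres with Z up. The function names are hypothetical.

```python
MM_PER_M = 1000.0


def nite_to_blender(position_mm):
    """Convert one joint position (x, y, z) from the assumed tracker
    frame (millimetres, Y up, Z = depth) to the assumed Blender frame
    (metres, Z up, Y = depth)."""
    x_mm, y_mm, z_mm = position_mm
    return (x_mm / MM_PER_M, z_mm / MM_PER_M, y_mm / MM_PER_M)


def convert_skeleton(joints_mm):
    """Convert a dict of joint name -> position for a whole skeleton."""
    return dict((name, nite_to_blender(pos))
                for name, pos in joints_mm.items())
```

Swapping two axes also flips the handedness of the frame, so depending on the actual conventions one axis may additionally need its sign inverted. Keeping the mapping in one small function makes that easy to adjust and to cover with a unit test.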

Phase III : Displaying 3D Skeleton in 3D virtual scene

In this step, the data for all skeletons is taken from the glue object and transferred to Blender, where the 3D skeleton will be displayed in the 3D virtual scene driven by the Blender game engine. This provides a simulation of the user in the virtual environment: the 3D skeleton inside the virtual environment will mimic the gestures and behavior performed by the user in the real world.

Deliverables

An application that tracks full body movement and hand gestures for effective control of the astronaut's avatar movement, with the following features:

- The application will detect movement and display the user's skeleton in the 3D virtual environment in real time, with the positions of the joints presented accurately

- It can detect many users’ movements simultaneously

- Bones and joints can be displayed in the 3D model in different colors, with the name of the user on top of the head joint

- It can display the RGB and depth video during the user's movement

- Users can interact with the 3D virtual scene through rotation and zoom functions, and can also see the avatar from a variety of perspectives

- It can display the 3D virtual environment in a variety of formats (3DS and OBJ). Also, the virtual environment can be adjusted without interruption of the motion tracking

- Proper automated test support for the application, with automated unit tests for each module

- Proper documentation on the work for developers and users

Project Schedule

1. Requirements gathering..........................................................................................1 week

- 1. Writing down requirements of the user.

- 2. Setting up test standards.

- 3. Writing test specification to test deployed product.

- 4. Start writing developer documentation.

2. Implement skeleton calibration................................................................................1 week

- 1. Writing code to support both automatic and manual calibration method.

- 2. Writing automated unit test to test written code.

- 3. Adding to developer documentation

3. Implement skeleton tracking....................................................................................2 weeks

- 1. Adding code for full body & hand gesture tracking.

- 2. Writing automated unit test to test working of code.

- 3. Adding to developer documentation.

4. Integrate with Tango server and implement glue object.............................................3 weeks

- 1. Writing code to integrate and support blender reference frame.

- 2. Writing automated unit test to test working of glue object.

- 3. Adding to developer documentation.

5. Integrating tracker with Blender game engine........................................................3 weeks

- 1. Implement integration mechanism & create avatar from processed data.

- 2. Adding automated unit test to test working of code.

- 3. Adding to developer documentation.

6. Buffer time.............................................................................................................2 weeks

- 1. Prepare user documentation.

- 2. Implement nice-to-have features.

- 3. Improve code readability and documentation.

Tasks to be done before midterm [19th May, 2014 - 23rd June, 2014]

1. Requirements gathering

2. Implement skeleton calibration

3. Implement skeleton tracking

Tasks to be done after midterm [28th June, 2014 - 18th August, 2014]

4. Integrate with Tango server and implement glue object

5. Integrating tracker with blender game engine

6. Buffer Time

Time

I will be spending 45 hours per week for this project.

Summer Plans

I am a final year student and my final exams will be over by 20th May, 2014. As I am free until August, I will be spending most of my time on the project.

Motivation

I am the kind of person who always aspires to learn more. I am also an open source enthusiast who likes exploring new technology. GSoC (Google Summer of Code) provides a very good platform for students like me to learn and showcase their talents by coming up with a cool application at the end of the summer. Apart from computers, physics has always been my favorite subject. I have always had a keen interest in research organizations like IMS, ERAS, NASA and CERN. Since IMS is a participating organization in GSoC 2014, it is a golden opportunity for me to spend my summer working with one of my dream organizations.

Bio

I am a final year B.Tech Computer Science and Engineering student at Amrita School of Engineering, Amritapuri, India. I am passionate about machine learning and HCI (Human Computer Interaction) and have a keen interest in open source software.

Experiences

My final year project involves the Kinect Xbox 360 and the Kinect programming languages and SDKs. I am building an application based on 3D face recognition that uses a new kind of algorithm (being used for the first time), solves many of the problems of 2D face recognition and can also identify partial scans. It is a research project, so my mentor does not allow me to disclose the code.

Along the way I have learned a lot about Kinect and have captured color and depth images with the device. In the process I also learned some basics of skeleton tracking and of using PCL (Point Cloud Library) with OpenNI.

I have sound knowledge of the Python programming language, including advanced concepts like descriptors, decorators, metaclasses, generators and iterators, along with other OOP concepts. I have also contributed to some open source organizations before.

Details of some of my contributions can be found here

Github : https://github.com/AbhishekKumarSingh

Bitbucket : https://bitbucket.org/abhisheksingh