Browser History

Introduction

The places history system is a redesign of the Firefox global history system using the new SQLite-based mozStorage APIs. This system provides additional performance, flexibility, and querying capabilities.

See also:

Places history features

The major places history views will be queries over places visited by time. The user will be able to select any date range and see when they visited each page. Actually showing which pages were visited during a given time period means we will have to store every time the user visits any page, rather than just the last visit time. This fixes some problems with the old history system where items would move around if you visited them again.

These view should be as organized and as concise as possible. The old history system includes many redirect pages in the view, and doesn't have things like favicons and good grouping that might make it easier to locate pages of interest. As a result, favicons for every page will be stored for viewing in history, and we will provide better domain-style grouping and a calendar for picking days rather than a tree view.

The second most user-visible history feature will be keyword searching. This will be essentially unchanged from the old system, which is too bad because the current system sucks. Because there is no word index, we do a brute-force search over all titles for the keyword in question. While sometimes useful, this is not always what you want, resulting in a number of third-party history indexing products. Long term, it would be nice to index pages you visit. For the coming release it might be nice to provide a hook for third-party products so they can provide text searching capabilities to the places system.

The last major change will be the ability to define complex queries over the history and bookmark systems. Most users will not be generating such queries, but they can be used to provide a variety of nice features. For example, a query folder can be created for "My favorite pages" which contains the 20 most frequently visited URLs in history. A query for the most frequently visited pages that are not bookmarked provides a good candidate list for suggesting bookmarks.

Example

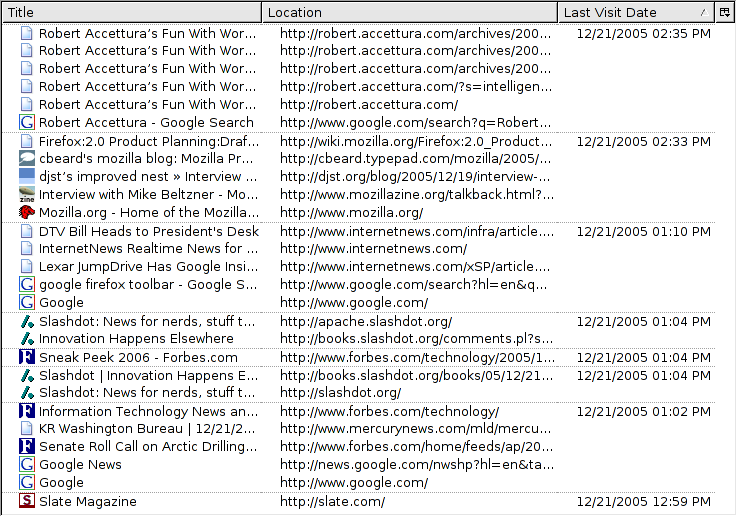

This screenshot shows some of these ideas:

Image credit: Brett Wilson, http://maxradi.us/

All pages have favicons for better visual differentiation and grouping. Pages are arranged into "sessions" consisting of followed links. Typing a new URL or following a bookmark gives you a new "session" which appears as a dotted line in this image. This is an actual screenshot, but the ugly redirect pages have been manually removed. Proper tracking of redirect pages is pending, see Browser_History:Redirects.

An informal goal is to save and expose enough information for a variety of interesting visualization or suggestion extensions to be created. For example:

- A graph of the user's browsing behavior, showing which pages were visited and when and how the user navigated between them.

- Suggesting links. By looking at what link the user frequently visits from the current page, perhaps some sort of suggestion or prefetching could take place. For some pages, this indication would be very strong. Other AI-like things could potentially be done. For example, many people visit the same set of sites when they open the browser in the morning. It would be nice if something could be done to make this nicer.

Bookmark pages and notes

Firefox 2 will likely have some kind of bookmark button for easy bookmarking of pages. This button will be illuminated whenever the user is on a bookmarked page, but redirects provide an extra problem. If the bookmark page is moved, or more commonly, if the user manually sets a bookmark, for example "amazon.com", they will be redirected. This destination page won't be bookmarked, so the button will not be illuminated. The old history system also had problems with redirected bookmarks because the favicons would never get set properly. Therefore, we need to know more about redirects to handle these cases correctly.

If other annotations are stored with pages, redirects could also pose problems. For example, if I have notes on a page and it is redirected, my notes will not be available to me. There should be a way for services using annotation functionality to see if the current page was ever redirected from somewhere else, and to also check those pages for annotations of interest. More on redirects can be read on Browser_History:Redirects.

Required information

It will be necessary to collect and store much more information than is done in the old history system. To extract information about sequences of page views, we need to know exactly which page generated each navigation. Because the same page could be opened in more than one tab/window at once, the URL is not sufficient for this task.

Database design

The history will be stored in two SQL tables — "moz_history" and "moz_historyvisit".

The "history" table essentially duplicates the functionality of the current Mork history table: It contains:

- Unique ID (primary key)

- URL

- Title

- User-defined title

- Visit count

- Last visit date

- Host name (see below)

- Hidden (bool)

- Typed (bool)

Note that the host name is stored backwards, unlike the current table. This is done so that it can be indexed alphabetically and we can quickly pull out all pages within any "mozilla.org" domain by asking for hostname fields that begin with "gro.allizom." A period is always appended to the reversed hostname.

The second table stores transitions between pages, which is information unavailable now.

- Source *visit* ID

- Destination page ID

- Time

- Transition type

Transition type will hopefully contain info about whether the link was a redirect clicked, opened in new tab/window, typed, just an image on the page, etc.

Because the source for each visit is another visit, not just a URL, we can track transitions between specific viewings of pages.

Performance

The SQLite database system does file-level locking to manage access to the database. This means that for every transaction (even read), the database file must be checked for changes to update the cache, and locked. Most systems can do this quickly, although this operation is clearly not good for performance. Some Linux/Unix users may have their home directories and profiles stored on a network-mounted drive. In these cases, individual database accesses can be relatively expensive.

Therefore, it is very important that database access be minimized and grouped in transactions. A new query/result format will be used instead of RDF. RDF requires many extra transactions, to retrieve the ID of a URL, then to retrieve the title, then to retrieve the visit date, etc. This makes performance, especially for network users, unacceptably slow. The new query system will retrieve one set of results for one query, meaning that there will be only one database access or the accesses can be internally grouped in a transaction. Initial tests of this system show that is is plenty fast enough over a good network and virtually instant for local users (the majority).

Link coloring

Link coloring is perhaps the most important performance issue, since this directly affects page load time. A naive approach requires querying the database for each link that comes in. Even when the history database is local, asking the OS for a file lock for each link on a page will be very bad for performance.

To solve this problem, URLs will be loaded into an in-memory database when the application starts (or, perhaps, the first time they are required). These links can be sorted so they can be accessed very quickly, and require no OS calls or file locks. This list will only contain URLs, and none of the extra information such as visit dates, visit counts, and titles.

Because these data will reside in memory, it is important to not take too much space. If we succeed in the goal of allowing users to keep large amounts of history, size could be significant. We will, therefore, want to limit the number of URLs that we bring into memory, either allowing, for example, the past 10,000 URLs, or the past 30 days of visits.

A two-level approach may improve perceived size. Most URLs in the table are advertisements and random images from Web sites to which the user will never see a link, and probably wouldn't even care about if they did. Then there are the top-level pages (with hidden=false) that are the top-level URLs that the user actually visited. They are much more likely to care about these top-level links. To save space and speed things up, we could keep the past X URLs in memory, plus the previous Y top-level URLs. It is unlikely that users will even notice that most of their history links are not being used for link coloring.

Database size

I just imported my Mork history database file into the equivalent SQLite format. The SQLite file size was one-tenth of the original Mork file. So, even though we will be storing more information (every time a page is visited) overall database size will probably be smaller.