
Test Infrastructure Requirements

The Problem

The problem we are currently facing in our testing environments is that each group has its own infrastructure, built around varying concepts of dev, beta, and staging environments.

These environments all push and release code on different schedules, so it is not possible to guarantee which versions of the Marketplace, BrowserID, Apps, and Sync have gone through a level of integration testing.

It is also not entirely clear to each group what the benefits of moving to a shared environment would be. The current release schedules are as follows:

Sync Service - https://intranet.mozilla.org/QA/Server_Weekly_Trains_Staging#Schedules

- Weekly push
- Wednesday: push to staging
- Monday: release to production

Marketplace - https://mail.mozilla.com/home/wclouser@mozilla.com/AMO%20Schedule.html

- Infrastructure Diagram
- Release Infra
- addons.allizom is the staging server, but it is only updated as needed
- Release happens at 2pm Thursday
- DBA: Sheeri / MPressman

BrowserID - https://wiki.mozilla.org/QA/BrowserID/TestPlan#Weekly_Test_Schedules

- Thursdays: deployment to Production, Stage (QA), and Dev
- Thursdays/Fridays: open testing and experimentation by Dev, QA, and community
- Following week, Monday - Wednesday: QA testing and sign-off of the current deployment
- DBA: petef

Apps - Web Services (HTML / JS / Dashboard)

As needed

The Purpose

Define an apps test environment that allows for testing the JavaScript APIs, the Firefox browser, and the Android Soup app.

QA should be able to qualify a build and know with certainty that those are the bits being shipped. Production releases should be done by using the Jenkins server to push the files to production.

Minimum Requirements

Initial Hardware Requirements

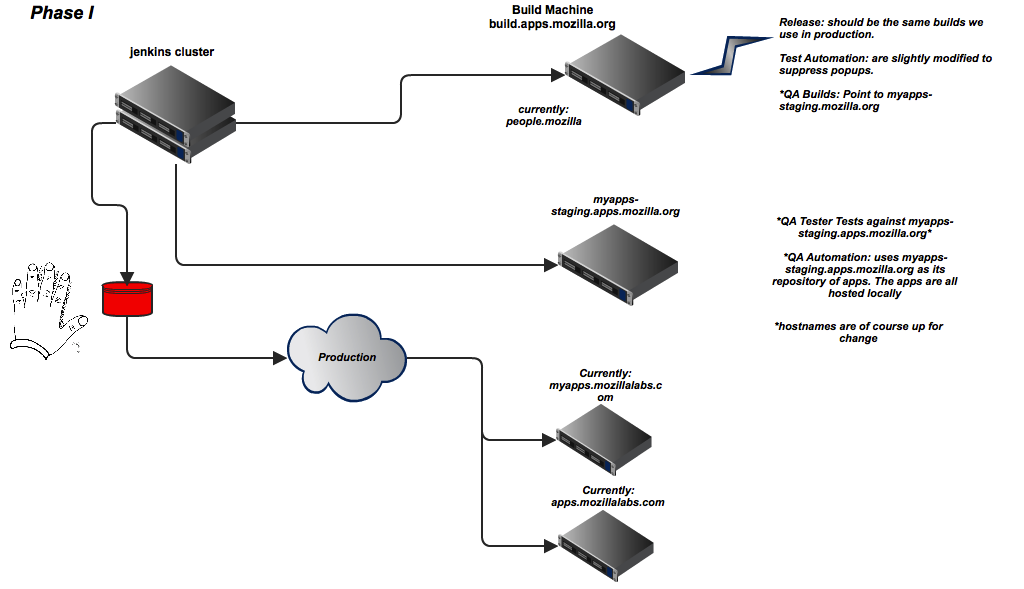

- build.apps.mozilla.com
- apps-staging.apps.mozilla.com
- myapps-staging.apps.mozilla.com

Concept

Reasoning

Jenkins Cluster

The brains of our Jenkins infrastructure reside on:

openwebapps-test1.vm1.labs.sjc1.mozilla.com

The Jenkins machine is to be snapshotted monthly, and its configuration is to be backed up to GitHub.
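The following is a minimal sketch of the configuration half of that backup. It assumes the GitHub backup repository is a git checkout rooted at the Jenkins home directory; the page does not say how the backup is laid out. Jenkins keeps its global and plugin settings in XML files at the top of `JENKINS_HOME` and each job's settings in `jobs/<job>/config.xml`, so the sketch collects only those files and skips build history. The function names are hypothetical.

```python
from pathlib import Path
from typing import List


def config_files(jenkins_home: Path) -> List[Path]:
    """List Jenkins configuration files, relative to jenkins_home.

    Includes the top-level *.xml files (global and plugin settings) and each
    job's config.xml; build records under jobs/*/builds are left out.
    """
    files = sorted(jenkins_home.glob("*.xml"))
    files += sorted(jenkins_home.glob("jobs/*/config.xml"))
    return [path.relative_to(jenkins_home) for path in files]


def backup_commands(paths: List[Path],
                    message: str = "Jenkins config backup") -> List[List[str]]:
    """Build (but do not run) the git commands that record the config files.

    Assumption: jenkins_home is itself a git checkout whose remote is the
    GitHub backup repository. `git commit` exits non-zero when nothing
    changed, so a scheduler running these should treat that case as success.
    """
    return [
        ["git", "add", "--", *(str(path) for path in paths)],
        ["git", "commit", "-m", message],
        ["git", "push"],
    ]
```

The sketch backs up plain XML rather than the whole home directory. That keeps the repository small and diffable, and the monthly VM snapshot still covers everything else.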

Slaves will be deployed as needed to support additional builds and jobs. Current machine load is minimal, so the intention is to add slaves only as the demands of the project require.

There is an open request for a Windows virtual machine, which will also function as a Jenkins slave.

The requirements are detailed in the bug at the bottom of the page.

High Availability

High availability concerns are addressed using the following two solutions.

I have also checked with the Automation Services team, and these appear to be standard mechanisms for achieving the required result.

- PeriodicBackup plugin: https://wiki.jenkins-ci.org/display/JENKINS/PeriodicBackup+Plugin
- Snapshotting: https://bugzilla.mozilla.org/show_bug.cgi?id=726173

Multiple Build Targets

Within each build we have hardcoded values for myapps.mozillalabs.com and apps.mozillalabs.com. Ideally these should be parameterized inputs to the build process.

The hardcoded values currently appear in:

- `lib/api.js: "127.0.0.1:60172":"", "myapps.mozillalabs.com":"",`
- `lib/api.js: "stage.myapps.mozillalabs.com":"", "apps.mozillalabs.com":"",`
- `lib/main.js:const APP_SYNC_URL = "https://myapps.mozillalabs.com";`
- `lib/main.js: "https?://myapps.mozillalabs.com",`
- `lib/main.js: "*.myapps.mozillalabs.com",`
- `lib/main.js: "https?://apps.mozillalabs.com",`
- `lib/main.js: * openwebapps@mozillalabs.com should be got from self.data`
- `lib/main.js: let button = win.document.getElementById("widget:openwebapps@mozillalabs.com-" + TOOLBAR_ID);`
- `lib/main.js: let dboard = "https://myapps.mozillalabs.com";`
- `lib/main.js: * library, hosted at https://myapps.mozillalabs.com/`
- `lib/main.js: contentURL: "https://myapps.mozillalabs.com",`
- `lib/main.js: if (origin == "https://myapps.mozillalabs.com") {`
- `lib/main.js: if (!found) tabs.open("https://myapps.mozillalabs.com");`
- `lib/nativeshell.js: "http://apps.mozillalabs.com");`
- `lib/nativeshell.js: "http://apps.mozillalabs.com");`
- `lib/nativeshell.js: subKey.writeStringValue("Contact", "http://apps.mozillalabs.com");`
- `lib/nativeshell.js: //subKey.writeStringValue("Readme", "http://apps.mozillalabs.com");`
- `package.json: "id": "openwebapps@mozillalabs.com",`

The same is true for Android. It is important to be able to modify these parameters for QA, release, and automation builds, so that clicking the Apps icon in the lower right of the browser launches the staging myapps instead of the production myapps.mozillalabs.com.
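One way to parameterize these values is to replace each hardcoded host in the source with a placeholder and substitute per-target values at build time. This sketch makes several assumptions that do not reflect the current build scripts:

- Placeholders are written as `@NAME@`.
- The target names `production` and `staging` are hypothetical.
- The staging URLs are taken from the staging servers proposed on this page.

It covers the add-on's JavaScript sources only; the Android build would need an equivalent step.

```python
import re

# Hypothetical per-target settings. The staging hosts are assumptions based on
# the staging servers proposed on this page.
TARGETS = {
    "production": {
        "MYAPPS_URL": "https://myapps.mozillalabs.com",
        "APPS_URL": "https://apps.mozillalabs.com",
    },
    "staging": {
        "MYAPPS_URL": "https://myapps-staging.apps.mozilla.com",
        "APPS_URL": "https://apps-staging.apps.mozilla.com",
    },
}

# Placeholders look like @NAME@: an upper-case letter, then upper-case letters,
# digits, or underscores. Lower-case text such as "openwebapps@mozillalabs.com"
# therefore never matches.
PLACEHOLDER = re.compile(r"@([A-Z][A-Z0-9_]*)@")


def render(source: str, target: str) -> str:
    """Replace every @NAME@ placeholder in source with the value for target.

    Raises KeyError for an unknown target or an undefined placeholder, so a
    typo fails the build instead of shipping a half-configured add-on.
    """
    values = TARGETS[target]

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"placeholder @{name}@ is not defined for {target!r}")
        return values[name]

    return PLACEHOLDER.sub(substitute, source)


if __name__ == "__main__":
    line = 'const APP_SYNC_URL = "@MYAPPS_URL@";'
    print(render(line, "staging"))
    # prints: const APP_SYNC_URL = "https://myapps-staging.apps.mozilla.com";
```

Explicit placeholders are safer than a find-and-replace over the existing host names. apps.mozillalabs.com is a substring of myapps.mozillalabs.com, so a naive replacement would rewrite both.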

We will probably need to generate our own nightlies from a GitHub repository build of Firefox, so that QA can test what is checked in to GitHub rather than waiting until patches are reviewed.

Staging Servers

myapps-staging.apps.mozilla.com

- This is where the dashboard will be hosted. It gives us the ability to test dashboard changes before they go live.

- Serve test apps that are staged for QA. Currently many of the tests we run depend on external apps, whereas local apps would provide a more reliable alternative.

- apps-staging will also host a staging version of the HTML/JS implementation of the mozApps APIs.

Server Rearrangement

Some thoughts from Ian:

I think we can move everything to a single server (we'll call it myapps-staging): the HTML shim, the dashboard, and the test appdir. We should be able to generate an XPI there as well, and it could also host future Sync deployments.

If we can automate this well, then we can potentially base it all on a manifest of repositories. A manifest might look like:

OPENWEBAPPS=https://github.com/mozilla/openwebapps.git
OPENWEBAPPS_BRANCH=update-api
OPENWEBAPPS_REV=99a844f
BROWSERID_SITE=https://diresworb.org

We would do the same for each component, since we have multiple repositories. If we can build that on a brand-new VM (perhaps an externally hosted cloud VM), we can let people create their own manifests to test other combinations.
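The following is a minimal sketch of reading such a manifest. It assumes that:

- Entries are whitespace-separated `KEY=VALUE` pairs.
- Any value ending in `.git` names a repository.
- `<KEY>_BRANCH` and `<KEY>_REV` optionally pin that repository's branch and revision.

The `master` default branch and all function names are assumptions. The sketch only builds the git commands; running them on a fresh VM would be the provisioning script's job.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Component:
    name: str           # manifest key, e.g. "OPENWEBAPPS"
    repo: str           # git URL
    branch: str         # <NAME>_BRANCH, or an assumed default
    rev: Optional[str]  # <NAME>_REV, if the revision is pinned


def parse_manifest(text: str) -> Dict[str, str]:
    """Parse whitespace-separated KEY=VALUE pairs (one per line or all on one line)."""
    entries: Dict[str, str] = {}
    for token in text.split():
        key, sep, value = token.partition("=")
        if not sep or not key:
            raise ValueError(f"not a KEY=VALUE pair: {token!r}")
        entries[key] = value
    return entries


def components(entries: Dict[str, str], default_branch: str = "master") -> List[Component]:
    """Treat every key whose value ends in .git as a repository to check out."""
    result = []
    for key, value in entries.items():
        if value.endswith(".git"):
            result.append(Component(
                name=key,
                repo=value,
                branch=entries.get(f"{key}_BRANCH", default_branch),
                rev=entries.get(f"{key}_REV"),
            ))
    return result


def checkout_commands(component: Component) -> List[List[str]]:
    """Build (but do not run) the git commands for one component."""
    directory = component.name.lower()
    commands = [["git", "clone", "--branch", component.branch, component.repo, directory]]
    if component.rev:
        # Pin to the exact revision that was qualified.
        commands.append(["git", "-C", directory, "checkout", component.rev])
    return commands


if __name__ == "__main__":
    manifest = """
    OPENWEBAPPS=https://github.com/mozilla/openwebapps.git
    OPENWEBAPPS_BRANCH=update-api
    OPENWEBAPPS_REV=99a844f
    BROWSERID_SITE=https://diresworb.org
    """
    for component in components(parse_manifest(manifest)):
        for command in checkout_commands(component):
            print(" ".join(command))
    # prints:
    # git clone --branch update-api https://github.com/mozilla/openwebapps.git openwebapps
    # git -C openwebapps checkout 99a844f
```

Keys that are not repositories, such as BROWSERID_SITE, stay in the parsed dictionary, so the build can pass them on as settings.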

Open Questions and Answers

- (jsmith) We should consider building out a community landing page on the build machine (similar to how Firefox has Aurora, Beta, and Nightly builds).

  - +1 by David and Jason

- (jsmith) Jenkins: how could we address fault tolerance in our continuous integration?

  - (dclarke) Snapshots. The internet connection was lost due to a NAT / firewall issue that affected all the virtual machines.

  - (dclarke) I can see a benefit to having a backup process, but full fault tolerance would imply that we cannot tolerate any faults. For a CI server, especially at this point in the release process, that time could be better spent elsewhere.

- (jsmith) With the apps hosting server (apps-staging.apps.mozilla.org), how will it address the one-app-per-origin problem?

  - (dclarke) Single app per origin problem: you can host different apps on different ports, so it's not a problem.

  - (jsmith) Right now, the system only supports hosting one app on a single origin.

  - (jsmith) How do we get around that issue?

- (jsmith) For developers: how would they test their own implementations for their specific work? Is the plan to do it all locally on their machines, or is there a way to deploy remotely to specific areas for individual testing?

- (jsmith) How does the test infrastructure support separation of concerns between different build needs (building different builds, running unit tests, running system tests)?

  - (dclarke) Good point. Ian brought up a scheme that would allow us to kick off jobs based on branches, so developers can kick off their own jobs and view the reports.

- (jsmith) Right now I see one server handling all of this. Could that be too much load on one system?

  - (jsmith) We might want to consider using Jenkins' master-slave support to reduce the centralized load on the infrastructure.

  - (dclarke) Master-slave is fine, and probably the direction we will go for labor-intensive builds, e.g. building Firefox or running test automation. For now, the build process can be kept local because it is so lightweight.
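dclarke's per-port answer works because an origin is the scheme, host, and port taken together. So `http://host:8001` and `http://host:8002` are different origins even on one server. The sketch below makes a few assumptions:

- The test apps are served from apps-staging.apps.mozilla.com.
- The ports are consecutive, starting at a hypothetical 8001.
- The server can listen on and expose those ports.

The app names in the usage are made up.

```python
from typing import Dict, Iterable, Tuple
from urllib.parse import urlsplit

# Ports implied when a URL does not spell one out.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, int]:
    """Return the (scheme, host, port) triple that defines a web origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]
    return (parts.scheme, parts.hostname, port)


def assign_ports(app_names: Iterable[str],
                 host: str = "apps-staging.apps.mozilla.com",
                 first_port: int = 8001) -> Dict[str, str]:
    """Give each test app its own port so that every app gets a distinct origin."""
    return {name: f"http://{host}:{first_port + i}/"
            for i, name in enumerate(app_names)}


if __name__ == "__main__":
    urls = assign_ports(["todo", "calculator"])
    for name, url in urls.items():
        print(name, url, origin(url))
    # Same host, different ports: two separate origins.
    assert origin(urls["todo"]) != origin(urls["calculator"])
```

Paths on a single port would not help here: `http://host:8001/todo/` and `http://host:8001/calc/` share one origin.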

Project Tracking

- Infrastructure Phase I Tracker Bug: https://bugzilla.mozilla.org/show_bug.cgi?id=726952
- Linux Build Hosting Machine: https://bugzilla.mozilla.org/show_bug.cgi?id=726954
- Windows Jenkins Virtual Machine: https://bugzilla.mozilla.org/show_bug.cgi?id=726878
- Linux Myapps Staging Machine: