Loop/Data Collection

To monitor the performance and other aspects of the Loop service, we will be gathering information independent of the platform metrics. The list of collected metrics will be expanded in later phases of the project.

Mozilla has a number of data collection services, including:

- Telemetry

- Log Collection

- Crash Reporting

- Input

The collected data is broken down into these categories below.

Telemetry Metrics

For telemetry that can be expressed as a simple scalar or boolean, we will use the nsITelemetry interface.

For custom collected telemetry, the basic steps to be performed are:

- Create a report object as detailed below, setting the "report" field to indicate which kind of report is being submitted

- Create a new UUID-based report ID ("slug")

- Save the created report to a file in {PROFILE_DIRECTORY}/saved-telemetry-pings, in a file named after the report ID.

In general, this report will look something like the following:

{

"reason": "loop",

"slug": "75342750-c96c-11e3-9c1a-0800200c9a66",

"payload": {

"ver": 1,

"info": {

"appUpdateChannel": "default",

"appBuildID": "20140421104955",

"appName": "Firefox",

"appVersion": "32.0",

"reason": "loop",

"OS":"Darwin",

"version":"12.5.0"

},

"report": "...",

// Fields unique to the report type are included here.

}

}
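The steps and report shape above can be sketched as follows. This is a minimal illustration in Python rather than the client's actual code: the function names are hypothetical, and the use of a random (version 4) UUID for the slug is an assumption, since the text only requires that the ID be UUID-based.

```python
import json
import uuid
from pathlib import Path


def build_report(report_type, info, extra_fields=None):
    """Build a custom telemetry report with a fresh UUID-based slug."""
    payload = {"ver": 1, "info": info, "report": report_type}
    # Fields unique to the report type sit alongside "report" in the payload.
    payload.update(extra_fields or {})
    # Assumption: a random UUID is acceptable as the report ID ("slug").
    return {"reason": "loop", "slug": str(uuid.uuid4()), "payload": payload}


def save_report(report, profile_dir):
    """Write the report into saved-telemetry-pings, in a file named after its slug."""
    pings_dir = Path(profile_dir) / "saved-telemetry-pings"
    pings_dir.mkdir(parents=True, exist_ok=True)
    path = pings_dir / report["slug"]
    path.write_text(json.dumps(report), encoding="utf-8")
    return path
```

A caller would build the report once, then pass it and the profile directory to save_report; the returned path has the slug as its filename.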

ICE Failures

Tracked in bug 998989

One of the first and most important bits of information we need to gather is ICE failure information. When we initially launch the MLP, it is quite likely that we will see non-trivial connection failures, and we will want to be in a position to rapidly diagnose (and, where possible, fix) the causes of these failures. To that end, we need to be able to get to the ICE statistics; and, if a call fails to set up (i.e., ICE connection state transitions to "failed" or "disconnected"), upload ICE logging information.

Details

- When a PeerConnection is instantiated, begin listening for the iceconnectionstatechange event

- This may require adding code in PeerConnection.js that allows retrieval of all of the PCs for a given WindowID, using the _globalPCList

- If the state changes to "failed" or "disconnected", retrieve the ICE logging information and upload it to the telemetry server:

- Instantiate a WebrtcGlobalInformation object (see http://dxr.mozilla.org/mozilla-central/source/dom/webidl/WebrtcGlobalInformation.webidl)

- Call "getLogging" on the WGI object

- In the callback from getLogging (which takes an array of log lines as its argument):

- Consolidate the log file into a single string

- Submit the report as detailed above
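The control flow of these steps can be modeled as below. This is only a sketch of the logic in Python, not the browser code: get_logging and submit_report are hypothetical callables standing in for WebrtcGlobalInformation.getLogging and the telemetry submission step, and the report field names are placeholders (the real payload is the one attached to the bug).

```python
FAILURE_STATES = {"failed", "disconnected"}


def on_ice_state_change(state, get_logging, submit_report):
    """Upload the ICE log when the connection state indicates a failure.

    Returns True if a log upload was triggered, False otherwise.
    """
    if state not in FAILURE_STATES:
        return False

    def on_log_lines(lines):
        # Consolidate the array of log lines into a single string.
        log_text = "\n".join(lines)
        # Hypothetical field names; see the example payload in the bug.
        submit_report({"report": "ice failure", "state": state, "log": log_text})

    get_logging(on_log_lines)
    return True
```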

Example payload

See https://bug998989.bugzilla.mozilla.org/attachment.cgi?id=8446879

Nature of Data

- Uncompressed, these logs are on the order of 50-1000 kB, but they contain significant redundancy: when run through gzip, they reduce to something more in the 3-60 kB range.

- We anticipate that these logs will be generated for something on the order of 5% to 20% of total call volume initially, shrinking as we troubleshoot and eliminate the issues they help us discover.

- When the feature lands in nightly, we are optimistically hoping for on the order of 10,000 users, probably making no more than 1 or 2 calls a day (i.e., we would anticipate initial worst-case load to be approximately 4,000 logs per day). After we get some experience with the feature, we should be able to refine this to be more accurate.

- The logs will necessarily contain users' IP addresses (and will be nearly useless without them). IP address information will be fuzzed; but information in the SDP extends the fingerprinting surface, so access control will be important.

- Eventually, we will want to develop fingerprinting heuristics for grouping logs together that are likely to share common root causes, but this is not required for the initial deployment.

- For initial analysis, we could probably do with something as simple as a report that says "on date, there were x failures, broken down as follows: failed: failed count, disconnected: disconnected count," and then lets us list all the failures for a given date/reason pair, ultimately allowing us to download the log to analyze. As we get experience with how things tend to break, we might want to refine this some, but it's a good start.
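The worst-case load figure above follows from multiplying the upper bounds quoted in this list. The short calculation below reproduces it and extends it to a rough daily storage volume; the volume figures are derived here from the stated size ranges and are not numbers from the original estimate.

```python
def worst_case_logs_per_day(users, calls_per_user_per_day, failure_percent):
    """Upper bound on ICE failure logs per day (integer arithmetic)."""
    return users * calls_per_user_per_day * failure_percent // 100


# 10,000 users, 2 calls a day, 20% of calls producing a log.
logs_per_day = worst_case_logs_per_day(10_000, 2, 20)

# Upper ends of the size ranges quoted above, in kB per log.
compressed_kb_per_day = logs_per_day * 60
uncompressed_kb_per_day = logs_per_day * 1000

print(logs_per_day)             # 4000
print(compressed_kb_per_day)    # 240000 (about 240 MB)
print(uncompressed_kb_per_day)  # 4000000 (about 4 GB)
```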

Call Quality Metrics

This covers collection of the following information:

- Call setup latency

- Media metrics (RTT, jitter, loss)

Tracked in bug 1003163

URL Sharing

Tracked in bug 1015988

This will use normal telemetry peg counts. Details for the data to be collected are in the bug.

Input Metrics

The API for submitting data to input.mozilla.org is described here. We will be using a "product" value of "Hello" (cf. Bug 1003180, comment 29).

User Satisfaction

Based on discussions with the input folks, we're going to make a few changes here very soon: (1) All reports will add the locale field; (2) The standalone client will use a product name other than Loop (perhaps "Loop Standalone"); and (3) The FFxOS client will use a product name other than "Loop".

Tracked in bug 1003180 (meta) and:

- bug 972992 (built-in client).

- bug 974873 (standalone client).

- bug 1060412 (firefox os client).

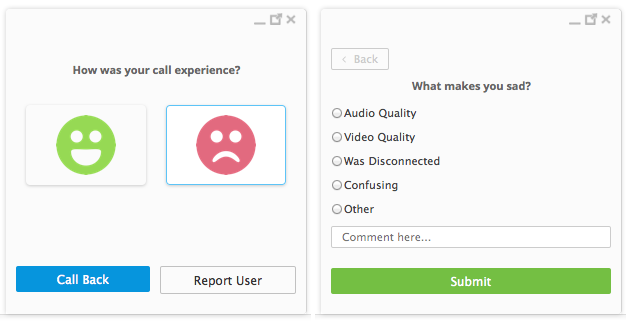

The built-in client will have a screen similar to this pop up when the user finishes a call:

The link-clicker client will have a similar screen for call completion.

Based on bug 974873, the current feedback proposal is as follows:

- Screen with Happy or Sad displayed at the end of the call in the conversation window

- If Happy is clicked, thank the user for the feedback and close the conversation window

- If Sad is clicked, display another screen with the following options:

- Audio Quality (category: "audio")

- Video Quality (category: "video")

- Was Disconnected (category: "disconnected")

- Confusing (category: "confusing")

- Other (w/ text field) (category: "other")

For the standalone client, we will report the "happy" boolean; and, if false, indicate the reason using the "category" field and the user-provided input in the "description" field. We will also include the URL that the user clicked on to initiate the call (without the call token) in the "url" field. The key reason for doing so is that it allows us to distinguish standalone feedback from built-in client feedback. Secondarily, if we change URL endpoints (say, due to versioning), it allows us to distinguish reports from two different kinds of server. Because of the shortcomings of the individual fields in the standard userAgent object, we will report the entire user agent string for the standalone client (i.e., the value in navigator.userAgent).

For the built-in client, we will report the "happy" boolean; and, if false, indicate the reason using the "category" field and the user-provided input in the "description" field. We will also report channel, version, and platform; see the fjord API documentation for more information about these fields.

Example Payloads

An example payload for a standalone ("link-clicker") client:

POST https://input.allizom.org/api/v1/feedback HTTP/1.1

Accept: application/json

Content-type: application/json

{

"happy": true,

"description": "",

"product": "Loop",

"url": "http://call.mozilla.org/", // designates this as a link-clicker

"user_agent": "Mozilla/5.0 (Mobile; rv:18.0) Gecko/18.0 Firefox/18.0"

}

An example payload for a built-in client:

POST https://input.allizom.org/api/v1/feedback HTTP/1.1

Accept: application/json

Content-type: application/json

{

"happy": false,

"category": "other",

"description": "Could not move video window out of the way",

"product": "Loop",

"channel": "aurora",

"version": "33.0a1",

"platform": "Windows 8"

}
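Both payload shapes can be produced by one small helper, sketched below in Python for illustration. The function name is hypothetical; the category values are the ones listed in the feedback proposal above, and the default product value of "Loop" simply mirrors the example payloads.

```python
SAD_CATEGORIES = {"audio", "video", "disconnected", "confusing", "other"}


def build_feedback(happy, category=None, description="", product="Loop",
                   **client_fields):
    """Build a feedback payload for either client.

    client_fields carries the client-specific fields: "url" and "user_agent"
    for the standalone client, or "channel", "version", and "platform" for
    the built-in client.
    """
    if happy:
        payload = {"happy": True, "description": ""}
    else:
        if category not in SAD_CATEGORIES:
            raise ValueError("unknown category: %r" % (category,))
        payload = {"happy": False, "category": category,
                   "description": description}
    payload["product"] = product
    payload.update(client_fields)
    return payload
```

For example, build_feedback(False, "other", "Could not move video window out of the way", channel="aurora", version="33.0a1", platform="Windows 8") yields the built-in payload shown above.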

Other Metrics

The following data types don't clearly match existing data collection systems. They may be able to leverage existing systems, possibly with modifications; or they may require entirely new data ingestion servers.

User Issue Reports

Tracked in bug 1024568

Note to self: we also are going to want to collect information about graphics card drivers, sound cards, webcam drivers, etc. This is TBD.

Use Cases

This data collection is intended to handle the following use cases:

- "I was expecting a call, but never got alerted, even though the other guy said he tried to call." For this use case, the user needs to be able to click on something, probably in the Loop panel, to proactively indicate a problem.

- "I am in the middle of a call right now, and this is really bad." Basically, if the media experience becomes unsatisfactory, we want the user to be able to tell the client while we still have the media context available, so we can grab metrics before they go away.

- "I was in the middle of a call and it ended unexpectedly."

- "I just tried to make a call but it failed to set up."

- "I just received a call alerting and tried to answer, but it failed to set up."

Data to Collect

When the user indicates an issue, the Loop client will create a ZIP file containing information that potentially pertains to the failure. For the initial set of reports, it will include the following files:

- index.json: Simple text file containing a JSON object. This JSON object contains indexable meta-information relating to the failure report. The format is described in #Index_Format, below.

- sdk_log.txt: All entries in the Browser Console emitted by chrome://browser/content/loop/*

- stats.txt: JSON object containing JSON serialization of stats object

- local_sdp.txt: SDP body for local end of connection

- remote_sdp.txt: SDP body for remote end of connection

- ice_log.txt: Contents of ICE log ring-buffer

- WebRTC.log: Contents of WebRTC log; only applicable for reports submitted mid-call. This will require activation of the WebRTC debugging mode, collection of data for several seconds, and then termination of WebRTC debugging mode. See the "Start/Stop Debug Mode" buttons on the about:webrtc panel.

- push_log.txt: Logging from the Loop Simple Push module (note: this isn't currently implemented, but should be added as part of this data collection).
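Assembling the archive is straightforward; the sketch below shows one way to do it in Python, purely as an illustration of the layout. The function name is hypothetical, and the caller is assumed to have already gathered the text of each log file.

```python
import io
import json
import zipfile


def build_issue_zip(index, files):
    """Return the bytes of a ZIP archive for one issue report.

    index is the JSON-serializable index object; files maps filenames
    (e.g. "ice_log.txt") to their text contents.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("index.json", json.dumps(index))
        for name, text in files.items():
            archive.writestr(name, text)
    return buffer.getvalue()
```

Files that do not apply to a given report (for instance WebRTC.log outside of a call) are simply left out of the files mapping.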

Index Format

The report IDs will be formed by appending a UUID to the string "le-".

The index file is a JSON object with the following fields:

- id: ID of report (must match ZIP filename)

- timestamp: Date of report submission, in seconds past the epoch

- phase: Indicates what state the call was in when the user indicated an issue.

- noalert - The user proactively indicated that a call should have arrived, but did not.

- setup - The call was in the process of being set up when the user indicated an issue.

- midcall - The call was set up and in progress when the user indicated an issue.

- postcall - The call was already finished when the user indicated an issue. The call may have never set up successfully.

- setupState: call setup state; see Loop/Architecture/MVP#Call_Setup_States (only if phase == setup)

- init

- alerting

- connecting

- half-connected

- type: Indicates whether the user was receiving or placing a call:

- incoming

- outgoing

- client: Indicates which client is generating the report. Currently, only the builtin client has the ability to collect the necessary information; this field is included to allow for expansion if the interfaces to collect this information are made available to other clients in the future.

- builtin

- channel: Browser channel (nightly, aurora, beta, release, esr)

- version: Browser version

- callId: (for correlating to the other side, if they also made a report)

- apiKey: Copied from the field provided during call setup.

- sessionId: Copied from the field provided during call setup (to correlate to TB servers)

- sessionToken: Copied from the field provided during call setup (to correlate to TB servers)

- simplePushUrl: Copied from the field provided during user registration

- loopServer: Value of the "loop.server" pref

- callerId: Firefox accounts ID or MSISDN of calling party, if direct

- calleeId: Firefox accounts ID or MSISDN of called party

- callUrl: URL that initiated the call, if applicable

- dndMode: Indication of user "availability" state; one of the following values:

- available

- contactsOnly

- doNotDisturb

- disconnected

- termination: If the call was terminated during setup, the "reason" code from the call progress signaling (see Loop/Architecture/MVP#Termination_Reasons).

- reason: User-selected value, one of the following:

- quality

- failure

- comment: User-provided description of problem.

- okToContact: Set to "true" only if the user opts in to letting developers contact them about this report

- contactEmail: If "okToContact" is true, preferred email address for contact

These objects will look roughly like the following:

{

"id": "le-4b42e9ff-5406-4839-90f5-3ccb121ec1a7",

"timestamp": "1407784618",

"phase": "midcall",

"type": "incoming",

"client": "builtin",

"channel": "aurora",

"version": "33.0a1",

"callId": "35e7c3a511f424d3b1d6fba442b3a9a5",

"apiKey": "44669102",

"sessionId": "1_MX40NDY2OTEwMn5-V2VkIEp1bCAxNiAwNjo",

"sessionToken": "T1==cGFydG5lcl9pZD00NDY2OTEwMiZzaW",

"simplePushUrl": "https://push.services.mozilla.com/update/MGlYke2SrEmYE8ceyu",

"loopServer": "https://loop.services.mozilla.com",

"callerId": "adam@example.com",

"callUrl": "http://hello.firefox.com/nxD4V4FflQ",

"dndMode": "available",

"reason": "quality",

"comment": "The video is showing up about one second after the audio",

"okToContact": "true",

"contactEmail": "adam@example.org"

}
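A client could build and sanity-check such an object along the following lines. This is a hedged Python sketch with hypothetical function names; the enumerated values come from the field list above, the timestamp is emitted as a string because the example shows it that way, and treating setupState as valid only during the setup phase is one reading of the "only if phase == setup" note.

```python
import time
import uuid

PHASES = {"noalert", "setup", "midcall", "postcall"}
SETUP_STATES = {"init", "alerting", "connecting", "half-connected"}
CALL_TYPES = {"incoming", "outgoing"}
REASONS = {"quality", "failure"}


def new_report_id():
    """Report IDs are the string "le-" followed by a UUID."""
    return "le-" + str(uuid.uuid4())


def build_index(phase, call_type, reason, setup_state=None, now=None,
                **other_fields):
    """Build an index object, rejecting values outside the documented sets."""
    if phase not in PHASES:
        raise ValueError("bad phase: %r" % (phase,))
    if call_type not in CALL_TYPES:
        raise ValueError("bad type: %r" % (call_type,))
    if reason not in REASONS:
        raise ValueError("bad reason: %r" % (reason,))
    if setup_state is not None:
        if phase != "setup" or setup_state not in SETUP_STATES:
            raise ValueError("bad setupState: %r" % (setup_state,))
    seconds = int(time.time() if now is None else now)
    index = {"id": new_report_id(), "timestamp": str(seconds),
             "phase": phase, "type": call_type, "client": "builtin",
             "reason": reason}
    if setup_state is not None:
        index["setupState"] = setup_state
    # Remaining fields (channel, version, callId, ...) are passed through.
    index.update(other_fields)
    return index
```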

Uploading a User Issue Report

No existing Mozilla data ingestion systems provide a perfect fit for the data that these user reports will contain. Instead, we will create a very simple system that has the ability to grow more complex as needs evolve.

To that end, we will be using Microsoft Azure blob storage for the report upload and storage. The Loop client will perform PUT requests directly against a blob container. Azure includes an access control mechanism that allows servers to hand out time-limited signed URLs that can then be used to access the indicated resource.

When a user indicates an issue, the Loop client collects the data from the index.json file (including a unique issue ID selected by the client), and contacts the Loop server asking for a new URL to store the issue report in:

POST /issue-report HTTP/1.1

Accept: application/json

Content-Type: application/json; charset=utf-8

Authorization: <authentication information>

{

"id": "le-4b42e9ff-5406-4839-90f5-3ccb121ec1a7",

"timestamp": "1407784618",

"phase": "midcall",

"type": "incoming",

"client": "builtin",

"channel": "aurora",

"version": "33.0a1",

"callId": "35e7c3a511f424d3b1d6fba442b3a9a5",

"apiKey": "44669102",

"sessionId": "1_MX40NDY2OTEwMn5-V2VkIEp1bCAxNiAwNjo",

"sessionToken": "T1==cGFydG5lcl9pZD00NDY2OTEwMiZzaW",

"simplePushUrl": "https://push.services.mozilla.com/update/MGlYke2SrEmYE8ceyu",

"loopServer": "https://loop.services.mozilla.com",

"callerId": "adam@example.com",

"callUrl": "http://hello.firefox.com/nxD4V4FflQ",

"dndMode": "available",

"reason": "quality",

"comment": "The video is showing up about one second after the audio",

"okToContact": "true",

"contactEmail": "adam@example.org"

}

Note: the ID is client-provided rather than server-generated to allow for the "retry-after" throttling behavior described below.

Upon receiving such a request, the Loop server performs the following steps:

- Checks that the client does not need to be throttled,

- Stores the received fields in an Azure table, and

- Forms a blob storage URL to upload the information to

Report Throttling

To mitigate potential abuse, the Loop server needs to throttle handing out issue URLs on a per-IP basis. If a Loop client attempts to send a request more frequently than the throttle allows, then the Loop server will send an HTTP 429 response indicating how long the client must wait before submitting the report. The client will then re-attempt sending the report once that period has passed.

HTTP/1.1 429 Too Many Requests

Access-Control-Allow-Methods: GET,POST

Access-Control-Allow-Origin: https://localhost:3000

Content-Type: application/json; charset=utf-8

Retry-After: 3600

{

"code": "429",

"errno": "114", // or whatever is allocated for this use

"error": "Too Many Requests",

"retryAfter": "3600"

}

This means that, upon startup, the Loop client code needs to check for outstanding (not-yet-uploaded) reports, and attempt to send them. If a report is over 30 days old and has not been successfully uploaded, clients will delete the report. The Loop server will similarly check that the issueDate field is no older than 30 days, and will reject requests for an upload URL for reports that are older.
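The startup check can be expressed as a simple partition of the outstanding reports. The Python sketch below is illustrative only: the function name is hypothetical, and it assumes the age of a report is measured from the "timestamp" field of its index object.

```python
MAX_REPORT_AGE_SECONDS = 30 * 24 * 60 * 60  # 30 days


def sweep_outstanding(reports, now):
    """Split not-yet-uploaded reports into those to resend and those to delete.

    reports is a list of index objects; now is the current time in seconds
    past the epoch. Returns (ids_to_send, ids_to_delete).
    """
    to_send, to_delete = [], []
    for report in reports:
        age = now - int(report["timestamp"])
        if age > MAX_REPORT_AGE_SECONDS:
            to_delete.append(report["id"])
        else:
            to_send.append(report["id"])
    return to_send, to_delete
```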

Data Index Storage

(TBD: basically, the Loop server stores the JSON index object in an Azure table so that developers can search for reports by various criteria. If the client-provided report ID already exists and a file exists in blob storage, the client gets an error)

HTTP/1.1 409 Conflict

Access-Control-Allow-Methods: GET,POST

Access-Control-Allow-Origin: https://localhost:3000

Content-Type: application/json; charset=utf-8

{

"code": "409",

"errno": "115", // or whatever is allocated for this use

"error": "Conflict",

"message": "Report ID already in use"

}

If the client receives such an error, it should assume that the report was previously uploaded successfully without being removed from its own local storage, and delete the local file.
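Taken together, the client's handling of the server's reply to POST /issue-report reduces to a small decision table. The sketch below is a Python illustration with a hypothetical function name and action labels; the "retryAfter" and "issueURL" body fields are the ones used in this document's example responses.

```python
def next_action(status, body):
    """Decide what the client does with the server's reply.

    Returns an (action, detail) pair:
      ("upload", url)        PUT the ZIP file to the returned URL
      ("retry", seconds)     wait, then ask for an upload URL again
      ("delete_local", None) report was already uploaded; drop the local copy
      ("fail", None)         anything else; keep the report for a later attempt
    """
    if status == 200:
        return ("upload", body["issueURL"])
    if status == 429:
        return ("retry", int(body["retryAfter"]))
    if status == 409:
        return ("delete_local", None)
    return ("fail", None)
```

Because the report ID is chosen by the client, a retry after a 429 response resubmits the same ID, which is what allows the 409 case to be detected.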

Upload URL Generation

The blob URL fields are constructed as follows:

- The host is the Azure instance assigned to Mozilla's account

- The container is "loop-" followed by a four-digit year and two-digit week number (e.g., if the report date sent by the client in its POST request falls in Week 33 of 2014, UTC, then the container would be named "loop-201433"). For clarity, we will be using the ISO-8601 definition of "week number." (See the Wikipedia article on week numbers for a good explanation of the ISO-8601 week numbering system).

- The filename is {id}.zip, using the id field provided by the client.

- The "signedversion" field (sv) is the Azure API version we're currently using

- The "signedexpiry" field (se) is the current time plus five minutes (this simply needs to be long enough to upload the report)

- The "signedresource" field (sr) is "b" (blob storage)

- The "signedpermissions" field (sp) is "w" (write only)

- The "signature" field (sig) is computed with our Azure shared key, as described by the Azure SAS documentation

This URL is then returned to the user:

HTTP/1.1 200 OK

Access-Control-Allow-Methods: GET,POST

Access-Control-Allow-Origin: https://localhost:3000

Content-Type: application/json; charset=utf-8

{

"issueURL": "https://mozilla.blob.core.windows.net/loop-201433/le-13b09e3f-0839-495e-a9b0-1e917d983766.zip?sv=2012-02-12&se=2014-08-13T08%3a49Z&sr=b&sp=w&sig=Rcp6gQRfV7WDlURdVTqCa%2bqEArnfJxDgE%2bKH3TCChIs%3d"

}
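The container naming and URL assembly can be sketched as follows. This Python illustration deliberately does not compute the signature: it takes it as an input, since the string-to-sign is defined by the Azure SAS documentation. The function names are hypothetical, and using the ISO-8601 week-numbering year (rather than the calendar year) for the four-digit year is an assumption that only matters for dates near the new year.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode


def container_name(report_timestamp):
    """Return "loop-" plus the ISO-8601 year and week of the report date (UTC)."""
    moment = datetime.fromtimestamp(int(report_timestamp), tz=timezone.utc)
    iso_year, iso_week, _ = moment.isocalendar()
    return "loop-%04d%02d" % (iso_year, iso_week)


def upload_url(host, report_id, report_timestamp, api_version, now, signature):
    """Assemble the signed blob URL; signature is computed elsewhere."""
    expiry = (now + timedelta(minutes=5)).strftime("%Y-%m-%dT%H:%MZ")
    query = urlencode({
        "sv": api_version,  # signedversion
        "se": expiry,       # signedexpiry: now plus five minutes
        "sr": "b",          # signedresource: blob
        "sp": "w",          # signedpermissions: write only
        "sig": signature,   # signature, supplied by the caller
    })
    return "https://%s/%s/%s.zip?%s" % (
        host, container_name(report_timestamp), report_id, query)


# The example report timestamp 1407784618 is Monday 2014-08-11 (UTC),
# which falls in ISO week 33 of 2014.
print(container_name("1407784618"))  # loop-201433
```

Note that urlencode emits uppercase percent-escapes (for example %3A), which are equivalent to the lowercase form shown in the example response.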

Once it acquires an issue upload URL, the Loop client then performs a PUT to the supplied URL to upload the report ZIP file. The Azure REST API documentation contains more detailed information about this operation.
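As a rough illustration of that upload, the Python sketch below prepares the PUT request without sending it. The function name is hypothetical. Azure's Put Blob operation requires the x-ms-blob-type header; the application/zip content type is an assumption made here, not something this document specifies.

```python
import urllib.request


def build_upload_request(issue_url, zip_bytes):
    """Prepare (but do not send) the PUT that uploads the report ZIP file."""
    return urllib.request.Request(
        issue_url,
        data=zip_bytes,
        method="PUT",
        headers={
            "x-ms-blob-type": "BlockBlob",      # required by Put Blob
            "Content-Type": "application/zip",  # assumed content type
        },
    )
```

Passing the returned object to urllib.request.urlopen would perform the upload; any authentication is carried entirely by the signed query string in the URL.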

Data Retrieval

To retrieve reports, authorized developers will need to be able to perform the following operations:

- Given a report ID, retrieve the corresponding report ZIP file

- Given a set of criteria selected from the fields described above, list the values of the other index fields, including the report ID (which should be a hyperlink to retrieve the report ZIP file itself)

Aside from satisfying those two use cases, and enforcing that only authenticated and authorized developers have access, this interface can be very rudimentary.

Data Purging

At the end of each week, a data retention job will locate containers with a name indicating that they exceed the data retention policy for these reports (five weeks), and remove the containers (including all contained reports). Note that this means that raw data will be kept for between 28 and 35 days, depending on the day of the week on which they are submitted.

This job also removes data from the index table older than its retention policy (which may be somewhat longer than the retention policy for the raw data; this is still TBD). (TODO: table removal can probably be optimized through the use of partitions -- I need to look into Azure table storage a bit more).
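The container selection for the weekly job might look like the sketch below. It is written in Python for illustration, with hypothetical function names, and it encodes one reading of the policy: when the job runs at the end of ISO week N, it removes every container whose week is N-4 or earlier, which reproduces the 28 to 35 day range described above. It requires Python 3.8 or later for date.fromisocalendar.

```python
from datetime import date


def container_week_start(name):
    """Return the Monday of the ISO week encoded in a "loop-YYYYWW" name."""
    digits = name[len("loop-"):]
    return date.fromisocalendar(int(digits[:4]), int(digits[4:]), 1)


def containers_to_purge(names, today, keep_weeks=5):
    """List containers that exceed the retention policy as of today.

    today is expected to be the last day of the week on which the job runs.
    """
    iso_year, iso_week, _ = today.isocalendar()
    current_week_start = date.fromisocalendar(iso_year, iso_week, 1)
    expired = []
    for name in names:
        age_weeks = (current_week_start - container_week_start(name)).days // 7
        # The container's own week counts as the first of the keep_weeks.
        if age_weeks >= keep_weeks - 1:
            expired.append(name)
    return expired
```

For instance, a run on Sunday 2014-09-14 (the end of week 37) would remove "loop-201433": its newest reports, from Sunday 2014-08-17, are then 28 days old.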