Stephanie Ouillon, Christiane Ruetten

Introduction to Firefox OS App Security Reviewing

This article is part of the security training for Firefox OS app reviewers who want to learn how to conduct meaningful security reviews. The Marketplace already has a few automated ways of spotting security issues in submitted apps, and more are being developed. For the foreseeable future, however, human reviewers will remain the sensors that are hardest to fool when it comes to spotting security problems in code.

A functional review of a Firefox OS application is a significant amount of work in itself, and the time available per review is already tight, but the review of packaged apps shouldn't stop there. Most security issues fall into just a few major categories, and developers keep making the same mistakes. Once you learn what to look for and where, a quick security review adds little overhead and has a huge impact on the overall security of the Firefox OS ecosystem.

The security model of Firefox OS relies on code review as heavily as it relies on privilege separation. In most scenarios, malicious code that is embedded in an app or injected through a backdoor or vulnerability is limited by the permissions of its host app. To break out of its sandbox it will need to exploit security vulnerabilities in other applications with higher privileges or within the OS itself.

While we cannot completely prevent malware and other malicious code from making it onto Firefox OS devices, we can greatly limit the damage it can do by ensuring good code quality in the app ecosystem, especially in the important apps that require potentially harmful permissions.

You’ll often hear mobile users say: “Why would someone want to access my phone? There’s nothing important in there!” Well, why would they? Even if there really are no online accounts connected to the device that can be plundered, and no text messages with banking PINs to snatch, every modern smartphone is a fully connected and powerful computer that can dial premium services, display unsolicited ads, attack other devices on the net, and turn user behavior into a pattern gold mine for advertisers and the surveillance-happy. And rest assured: the bad guys are ready to turn this to their profit.

In an effort to make their life harder and the life of our users more care-free, this series of security review tutorials will walk you through the review process from the perspective of an experienced reviewer. It will hopefully help you to build the knowledge and intuition it takes to quickly assess whether things smell funny, so you can swiftly decide to investigate further or escalate to a senior reviewer.

Meet The Damn Vulnerable FxOS App

To keep this introduction simple, we are using Rob Fletcher’s DVFA demo app, which he created to highlight the most common security-relevant programming errors in Firefox OS applications and how bad guys would try to exploit them. With its extensive documentation, it kindly hands you its vulnerabilities on a platter, so it probably offers the best opportunity to dive right into the basics of FxOS app security.

If you have access to the Marketplace reviewer backend and want to click along with this tutorial, you will find the app in the review queue. Please make sure that you don’t accidentally publish it. For everyone else, we provide screenshots so you can follow the tutorial nonetheless.

Understanding what the code is doing – or at least supposed to do – already wins you half the game in a security review, so let’s start with the description provided by the developer. When you open the app in the review queue and read the description, it should already give you a fairly good idea of what the app is supposed to do. If it doesn’t, Marketplace users won’t understand it either, and that alone is reason enough for a rejection.

Sometimes the privacy policy gives important clues about what the app does with the data it collects, so skimming the relevant portions of the privacy policy is also a good idea. If you are re-reviewing an updated app version, you might want to take a moment to click through any abuse reports. Users tend to notice when apps turn bad on them.

When it comes to app security, most issues you find are honest mistakes that, when things go really wrong, expose data or functionality to unprivileged apps. But every now and then you’ll encounter developers who try to sneak in unsolicited functionality to collect data or make a quick buck without user consent.

Hardcore security folks tend to assume the smartest possible attacker and tell you how futile it is to try to defend against them, but you’ll be surprised how crude and unsophisticated most of these attempts in mobile apps are. In the Android world we’ve seen developers build up quite a history of questionable apps, so clicking through a developer’s other apps on the Marketplace, or even a quick Google search for their name, can give you valuable clues about their intent and reputation.

Reading through DVFA’s description (“do not install this app”) and privacy policy (“don’t expect any”) makes it pretty clear that you don’t want this app on your phone, so this one is easy. It should raise a red flag, however, if at this point you’re still scratching your head about what the app is really all about, or if clues about crucial app activity are hidden deep inside the privacy statement.

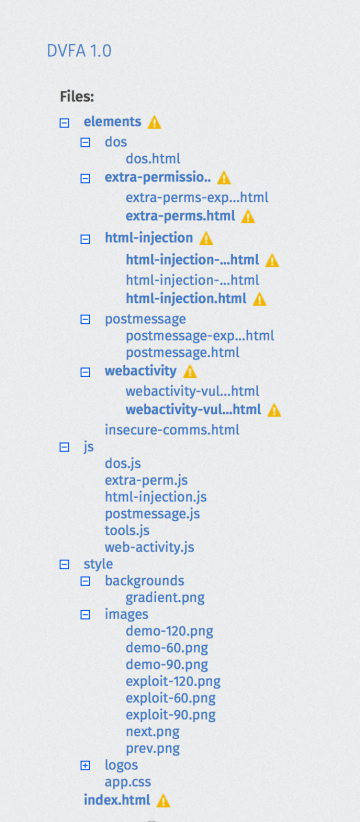

Content counts

App security is all about data flow, and the most important source of data is the content of the app’s Zip file itself. See the orange warning signs next to some entries? Those are app-validator warnings. We’re working on an extension that will tag unaltered files of known frameworks like jQuery and RequireJS so that reviewers can easily dismiss them. For now, it’s a good start to note which frameworks and versions are used, so you can quickly search for known vulnerabilities.

If the app merits an extensive security review, you’ll want to download the app to your local machine and check the framework files’ checksums against their official counterparts to rule out backdoors hidden in them.
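The checksum comparison is easy to script. The following is a minimal Node.js sketch, assuming you have unpacked the app’s Zip file and downloaded the official release of the same framework version; the function names and paths are hypothetical.

```javascript
const crypto = require('crypto');
const fs = require('fs');

// Hex-encoded SHA-256 digest of a string or Buffer.
function sha256Hex(data) {
  return crypto.createHash('sha256').update(data).digest('hex');
}

// True if the app's bundled copy is byte-for-byte identical to the official
// release of the same version. Any difference, even whitespace, means the
// file was altered and deserves a manual diff.
function isUnalteredCopy(bundledContent, officialContent) {
  return sha256Hex(bundledContent) === sha256Hex(officialContent);
}

// Convenience wrapper for two files on disk.
function compareFiles(bundledPath, officialPath) {
  return isUnalteredCopy(fs.readFileSync(bundledPath), fs.readFileSync(officialPath));
}
```

For example, `compareFiles('unpacked/js/jquery.min.js', 'reference/jquery.min.js')`, where both paths are placeholders. Compare like with like: official distributions usually ship several builds (minified and unminified), and only the matching build will produce the same hash.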

Most apps keep all their JavaScript code inside a single directory. In the case of DVFA, all the code lives in /js. If you spot JS code in unusual places or hidden deep inside a directory tree, take a second look.

Also be aware that nothing in Firefox OS restricts JavaScript files to a .js extension. When skimming .html files, any script tag referencing a .gif file or similar oddity should put you on red alert, as this is a common technique for evading security scanners.
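On apps with many HTML files, a rough scan can list every external script reference that does not point to a .js file or that lives outside the app’s usual script directory. This is a regex-based heuristic, not an HTML parser, and the default `js/` directory is an assumption taken from DVFA’s layout; the function name is hypothetical.

```javascript
// Match src attributes of <script> tags (single or double quotes).
const SCRIPT_SRC = /<script\b[^>]*\bsrc\s*=\s*["']([^"']+)["']/gi;

// Return suspicious script references found in one HTML document.
function findSuspiciousScripts(html, expectedDir = 'js/') {
  const findings = [];
  for (const match of html.matchAll(SCRIPT_SRC)) {
    const src = match[1];
    // Ignore any query string or fragment when checking the extension.
    const path = src.split(/[?#]/)[0];
    const reasons = [];
    if (!path.toLowerCase().endsWith('.js')) {
      reasons.push('non-.js extension');
    }
    // Strip leading "../", "./" or "/" before comparing directories.
    if (!path.replace(/^(\.\.\/|\.\/|\/)+/, '').startsWith(expectedDir)) {
      reasons.push(`outside ${expectedDir}`);
    }
    if (reasons.length > 0) {
      findings.push({ src, reasons });
    }
  }
  return findings;
}
```

Anything this reports still needs a human look: a hit may be harmless, and scripts injected at runtime will not show up at all.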

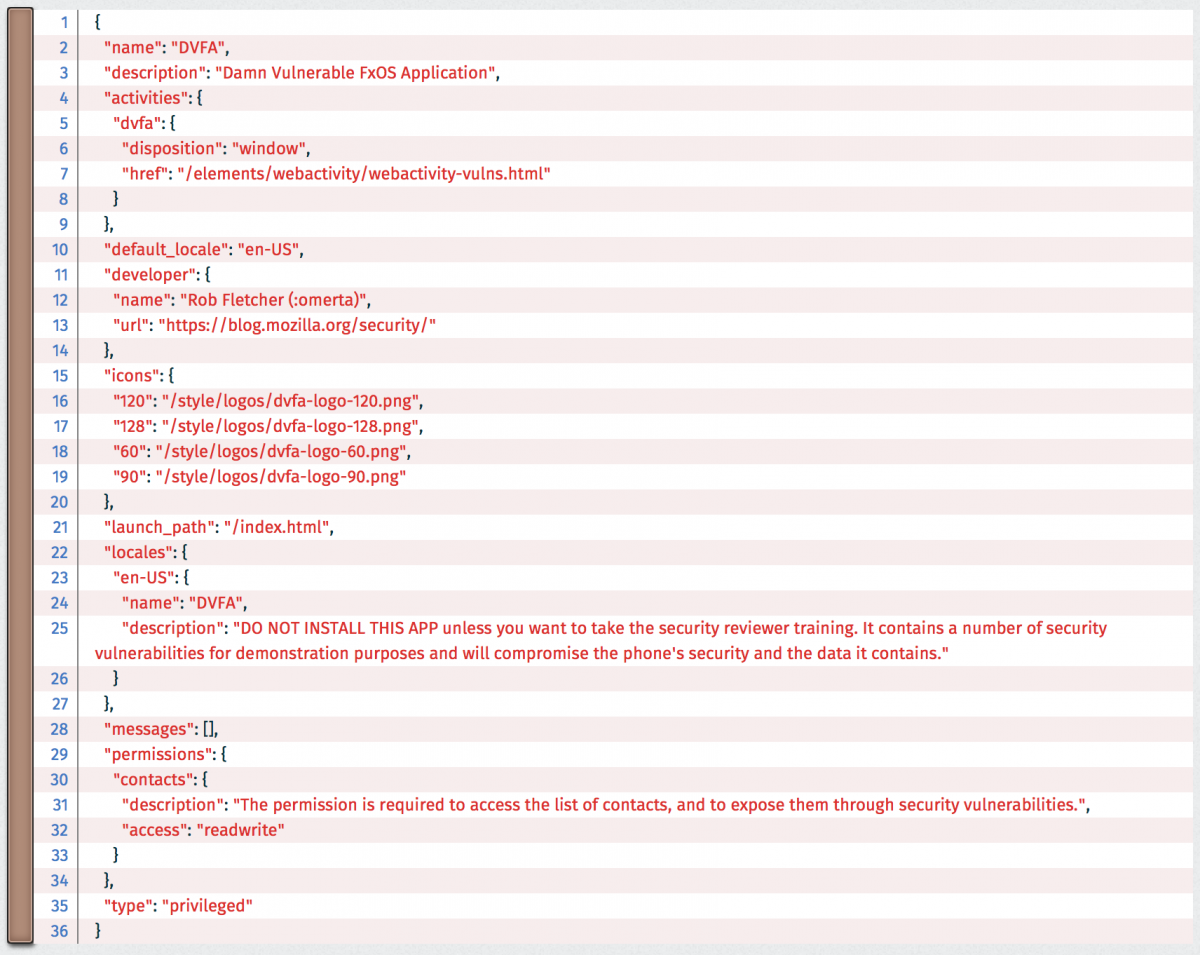

Planning the review process

Once you have dealt with the framework files and gotten an idea of which components the app consists of, it is time to look at the app code itself, which can be quite extensive. How do you know which parts of the code are security sensitive and thus worth your attention? Here, the app’s manifest.webapp gives you the most important hints. Two parts in particular require your attention: permissions and web activities.

It's all about permissions

Permissions define which APIs and functionality the app can access. Firefox OS apps follow the principle of least privilege, which means an app should only be granted the minimal set of privileges it requires to function. For a reviewer, this has two implications. First, you can check that each permission requested in the manifest is actually used. Second, where the permission supports it, you can make sure that the access level (for example readonly versus readwrite) is set to the minimum and that the permission is used in a safe way.
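Permissions are declared in the manifest as an object keyed by permission name, and some permissions, such as contacts, take an `access` value (`readonly`, `readwrite`, `readcreate` or `createonly`). The sketch below lists every requested permission with its access level and flags those that allow modifying or deleting existing data. The manifest excerpt is hypothetical, not DVFA’s actual file, and the table of operations per access level is our reading of the platform’s rules, so treat it as an assumption.

```javascript
// Hypothetical manifest.webapp excerpt (not DVFA's actual file).
const manifest = {
  name: 'Example App',
  type: 'privileged',
  permissions: {
    contacts: { description: 'Suggest recipients', access: 'readwrite' },
    systemXHR: { description: 'Fetch remote data' },
    alarms: { description: 'Schedule reminders' }
  }
};

// Operations each access level grants (assumption, see lead-in).
const ACCESS_OPERATIONS = {
  readonly: ['read'],
  readcreate: ['read', 'create'],
  createonly: ['create'],
  readwrite: ['read', 'write', 'create']
};

// List requested permissions; flag those whose access level includes
// "write" (modify or delete existing data) or is not recognized.
function summarizePermissions(webapp) {
  return Object.entries(webapp.permissions || {}).map(([name, spec]) => {
    const ops = spec.access ? (ACCESS_OPERATIONS[spec.access] || ['unknown']) : [];
    return {
      name,
      access: spec.access || '(none)',
      needsReview: ops.includes('write') || ops.includes('unknown')
    };
  });
}
```

With this excerpt only contacts is flagged, which leads directly to the question of whether the code really needs more than readonly access.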

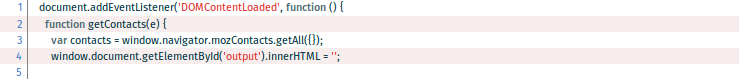

For instance, let’s have a look at the contacts permission. It is used in /js/extra-perm.js with the mozContacts API, for example in the function getContacts():

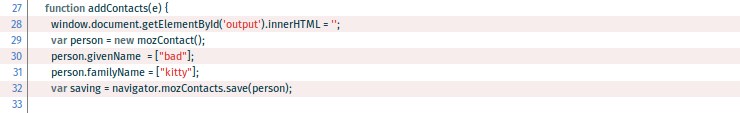

The access level is readwrite. Read access allows the app to get all contacts using the getAll() method of the Contacts API. Write access allows it to create a new contact with the save() method, as in the function addContacts():

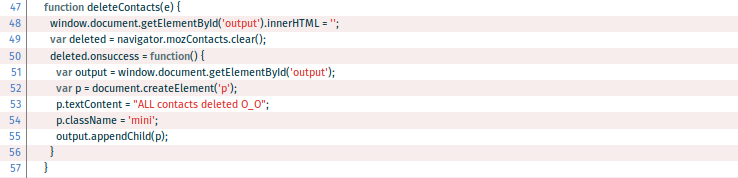

Keep in mind, however, that write access also means the app can modify existing contacts or even delete the entire Contacts database. Accordingly, the developer was able to implement a function deleteContacts() that calls the mozContacts.clear() method.

Given its description and purpose, should the app be able to manage the Contacts database in such a manner? Being able to delete the entire Contacts database seems dangerous, since the app isn't meant to be a contacts manager.
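To judge whether readwrite is really needed, it helps to list which mozContacts methods the code actually calls. The sketch below scans source text for the methods discussed above (getAll(), save(), clear()) plus find(), getCount() and remove() from the wider Contacts API. The grouping into read, create-or-update and destructive calls is our own simplification, and a regex will miss calls made through aliases such as `var c = navigator.mozContacts;`.

```javascript
// Contacts API methods grouped by the kind of access they imply (simplified).
const CONTACTS_METHODS = {
  read: ['getAll', 'find', 'getCount'],
  createOrUpdate: ['save'],
  destructive: ['remove', 'clear']
};

// Report which mozContacts methods appear in the given JavaScript source.
function contactsUsage(source) {
  const usage = {};
  for (const [kind, methods] of Object.entries(CONTACTS_METHODS)) {
    usage[kind] = methods.filter((method) =>
      new RegExp(`mozContacts\\s*\\.\\s*${method}\\s*\\(`).test(source)
    );
  }
  return usage;
}
```

A non-empty `destructive` list in an app that is not a contacts manager is exactly the kind of finding worth raising with the developer.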

Following the principle of least privilege, unused permissions should be removed. Verifying this requires extra care when the app uses many permissions or has a large codebase, since it is easy to miss where a permission is or isn't used.
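A rough first pass for unused permissions is to map each requested permission to an identifier that code using it would normally contain, then search the app’s combined JavaScript for it. The mapping below covers only a few permissions and is our own approximation (for instance, systemXHR is normally used through an XMLHttpRequest created with `{ mozSystem: true }`); the function name is hypothetical, and every permission it reports still needs a manual check.

```javascript
// Approximate mapping from permission name to identifiers its API usage
// normally involves. Our own heuristic; extend as needed.
const PERMISSION_TOKENS = {
  contacts: ['mozContacts'],
  geolocation: ['geolocation'],
  systemXHR: ['mozSystem'],
  'tcp-socket': ['mozTCPSocket'],
  alarms: ['mozAlarms'],
  'device-storage': ['getDeviceStorage'],
  'desktop-notification': ['Notification']
};

// Return permissions with no matching identifier in the JS source, plus
// permissions this heuristic does not know about.
function findUnusedPermissions(permissions, jsSource) {
  const unused = [];
  const unknown = [];
  for (const name of Object.keys(permissions)) {
    // "device-storage:pictures" and similar names share one entry.
    const tokens = PERMISSION_TOKENS[name.split(':')[0]];
    if (!tokens) {
      unknown.push(name);
    } else if (!tokens.some((token) => jsSource.includes(token))) {
      unused.push(name);
    }
  }
  return { unused, unknown };
}
```

A simple substring search errs on the side of reporting a permission as used (a comment mentioning `mozContacts` is enough), so an empty `unused` list is not proof that every permission is needed.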

Some permissions are more security sensitive than others. The following table summarizes the relevant section of our security guidelines:

| Threats | Associated permissions |
|---|---|
| Unwanted communications, user data silently sent to third parties, data interception if SSL/TLS is not used | systemXHR, tcp-socket, browser |
| Accessing and loading unsafe content | browser |
| Privacy issues if geolocation data are stored | geolocation, fmradio |
| Deleted or corrupted user data | contacts, device-storage |
| Battery drain | audio-channel-normal, audio-channel-content, audio-channel-notification, audio-channel-alarm, alarms |
| Unreasonable settings that can render the phone unusable | desktop-notification |
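The table can double as a triage aid while reading the manifest. The sketch below encodes its rows as a lookup and returns, for a list of requested permission names, the threats to keep in mind while reviewing the code. The encoding and the handling of `device-storage:*` names are ours; keep the data in sync with the guidelines rather than treating this as authoritative.

```javascript
// Rows of the threat table, as data.
const THREATS = [
  { threat: 'Unwanted communications, silent data transfer, interception without SSL/TLS',
    permissions: ['systemXHR', 'tcp-socket', 'browser'] },
  { threat: 'Accessing and loading unsafe content', permissions: ['browser'] },
  { threat: 'Privacy issues if geolocation data are stored', permissions: ['geolocation', 'fmradio'] },
  { threat: 'Deleted or corrupted user data', permissions: ['contacts', 'device-storage'] },
  { threat: 'Battery drain',
    permissions: ['audio-channel-normal', 'audio-channel-content',
      'audio-channel-notification', 'audio-channel-alarm', 'alarms'] },
  { threat: 'Unreasonable settings that can render the phone unusable',
    permissions: ['desktop-notification'] }
];

// Map each requested permission to the threats associated with it.
function threatsFor(permissionNames) {
  const result = {};
  for (const name of permissionNames) {
    const base = name.split(':')[0]; // device-storage:music -> device-storage
    const threats = THREATS
      .filter((row) => row.permissions.includes(base))
      .map((row) => row.threat);
    if (threats.length > 0) {
      result[name] = threats;
    }
  }
  return result;
}
```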

Web activities and handlers

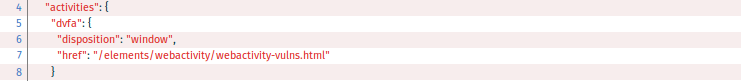

Web activities are the second major item defined in the manifest. They provide a mechanism for exchanging data among applications. Our DVFA app declares a single "dvfa" activity.

Depending on its implementation, an activity takes arguments, performs some action, and returns data to the calling app. Is this consistent with the description in the manifest? What is the handler doing with the data provided by the calling app? Could it send malicious data back to the caller? Keep in mind that any app can call the dvfa activity once it is registered. In the manifest, activities can optionally define filters, which restrict an activity to specific MIME types for its data. Filters can help you understand what data the activity handler is meant to process.
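Activities are declared in the manifest’s `activities` object, where each entry can name the page that handles it (`href`), a `disposition`, optional `filters`, and whether it returns data (`returnValue`). The sketch below lists the declared activities and flags those without filters, since nothing then narrows down which requests are routed to them. The manifest excerpt is hypothetical and only mimics the general shape of such a declaration; it is not DVFA’s actual file.

```javascript
// Hypothetical manifest excerpt in the shape Firefox OS uses for activities.
const manifest = {
  activities: {
    dvfa: { href: './index.html', disposition: 'inline', returnValue: true },
    share: {
      href: './share.html',
      disposition: 'window',
      filters: { type: ['image/*'] }
    }
  }
};

// Summarize declared activities and note the ones without filters.
function reviewActivities(webapp) {
  return Object.entries(webapp.activities || {}).map(([name, decl]) => ({
    name,
    handlerPage: decl.href || '(not set)',
    returnsData: Boolean(decl.returnValue),
    unfiltered: !decl.filters || Object.keys(decl.filters).length === 0
  }));
}
```

Filters are a routing hint, not input validation: even a filtered activity receives whatever data a caller supplies, so the handler still has to check it.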

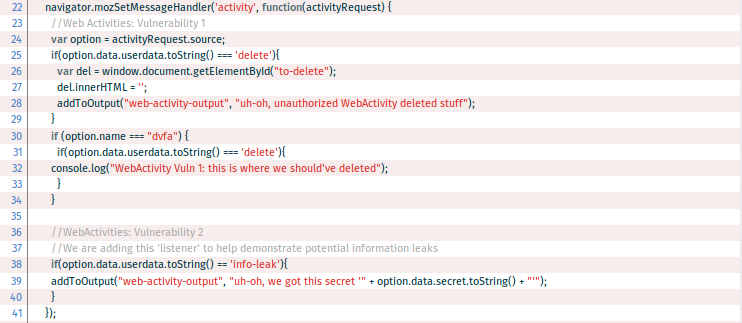

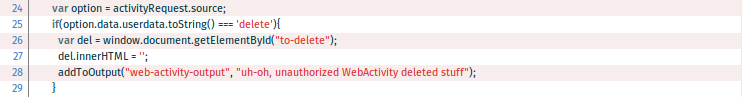

The code implementing the activity is in /js/tools.js, in the handler function(activityRequest) that is registered with navigator.mozSetMessageHandler('activity', …).

This is a very simplistic handler, so it’s immediately obvious that it lacks any sort of input filtering. This isn’t always a problem, for example when the handler just matches a few strings to trigger harmless commands, but in this case things break horribly.

First, the handler checks the value of the data provided by the activity caller. Information about the request, such as the name of the called activity and the data sent to the activity handler, is stored in activityRequest.source.

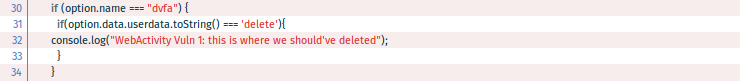

At first glance, it looks like all the important checks are there: line 25 checks the command string and line 30 seems to check the activity name. But the checks are in the wrong order! By sending the string “delete” in the userdata field, any app can trigger a destructive operation that no caller should be able to reach. The activity name is only checked after that, via the name attribute of the activityRequest.source object.

By then it is too late. Also consider that all activities declared by an app share the same handler, so when more than one activity is declared in the manifest, the handler must check the name of the activity being called before deciding which actions to execute.

The last part of the handler also lacks any kind of activity filtering and executes what would usually be a security-sensitive action, in this case leaking information. The underlying issue is that data sent to the activity must not be trusted, since any app installed on the phone can provide it and it may be tainted. The dvfa activity thus illustrates how any app can delete content and retrieve secret data simply by sending the string “delete” or “info-leak”. In DVFA the errors are rather obvious, but when the faulty logic is more convoluted (and it usually is), such errors become hard to spot and can turn into serious vulnerabilities.
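For contrast, here is a sketch of a handler that performs the checks in the order described: first the activity name, then the caller’s data against an allowlist of harmless commands, with nothing destructive or secret reachable from caller data. It uses the request’s `source.name`, `source.data`, `postResult()` and `postError()`; the command names are hypothetical, and the `userdata` field follows the description above. It is written as a plain function, plus a minimal stand-in request object, so it can be exercised off-device.

```javascript
// Harmless commands this activity is willing to run for any caller.
// Hypothetical: none of them touches user data or secrets.
const ALLOWED_COMMANDS = {
  ping: () => 'pong',
  version: () => '1.0'
};

// Activity handler; on the device it would be registered with
// navigator.mozSetMessageHandler('activity', handleActivity).
function handleActivity(activityRequest) {
  const { name, data } = activityRequest.source;

  // 1. Check the activity name first: all declared activities share this handler.
  if (name !== 'dvfa') {
    activityRequest.postError('unsupported activity');
    return;
  }

  // 2. Treat the caller's data as untrusted and accept only known commands.
  const command = data && typeof data.userdata === 'string' ? data.userdata : null;
  if (command === null || !Object.prototype.hasOwnProperty.call(ALLOWED_COMMANDS, command)) {
    activityRequest.postError('unknown command');
    return;
  }

  // 3. Only now run the (harmless) command and return its result.
  activityRequest.postResult({ result: ALLOWED_COMMANDS[command]() });
}

// Minimal stand-in for the request object, for trying the handler off-device.
function fakeRequest(name, data) {
  const request = { source: { name, data } };
  request.postResult = (value) => { request.result = value; };
  request.postError = (message) => { request.error = message; };
  return request;
}
```

The `hasOwnProperty` check matters: a plain `command in ALLOWED_COMMANDS` test would also accept inherited names such as "constructor".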

Through an XSS, darkly

Once you have gone through the manifest's permissions and web activities, probably the most important question becomes: which user data is the app handling? This can be resources on the phone, such as video or audio files and pictures; if the app has storage permissions, it very likely deals with such resources. Data can also be strings provided by the user (credentials, search keywords, form content). These are easy to spot visually: if there is an <input> HTML field, you might want to check what the app does with the data entered there.
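On a larger app, listing the form fields in each HTML file gives you a checklist of data entry points to follow through the code. The following is a regex-based sketch with a hypothetical function name; it is a heuristic that misses fields created from JavaScript at runtime and can be confused by attribute values containing ">".

```javascript
// Start tags of common form fields, capturing the tag name and its attributes.
const FIELD_TAG = /<(input|textarea|select)\b([^>]*)>/gi;
// First id or name attribute inside a tag's attribute string.
const ID_OR_NAME = /\b(?:id|name)\s*=\s*["']([^"']*)["']/i;

// List form fields declared in an HTML document.
function listInputFields(html) {
  const fields = [];
  for (const [, tag, attrs] of html.matchAll(FIELD_TAG)) {
    const ref = attrs.match(ID_OR_NAME);
    fields.push({ tag: tag.toLowerCase(), idOrName: ref ? ref[1] : null });
  }
  return fields;
}
```

Each reported id or name is then a search term for the JavaScript that reads the field’s value.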

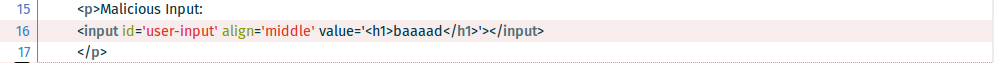

In the DVFA app, there are a few places where the user can provide input, for example in /html/html-injection-demo.html:

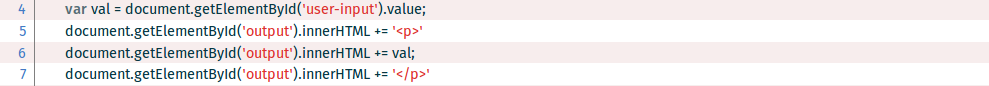

A typical mistake developers make is to embed user-supplied input from such sources into the DOM without sanitizing it. This can lead to Cross-Site Scripting (XSS). Such mistakes most often revolve around DOM write access in general and .innerHTML and .outerHTML in particular, so searching the code for those keywords is a good starting point for an investigation. In /js/html-injection.js, we find that the user-provided input value is written into the DOM with .innerHTML.
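The keyword search can be scripted so that every hit comes with a line number. The sketch below looks for writes to .innerHTML and .outerHTML and, going slightly beyond the two keywords named above, for calls to insertAdjacentHTML() and document.write(); treat this as a starting set of HTML sinks, not a complete one. The function name is hypothetical.

```javascript
// Writes to properties, and calls to methods, that parse strings as HTML.
// Reads such as `if (el.innerHTML === '')` are deliberately not matched.
const HTML_SINKS = /\.(?:innerHTML|outerHTML)\s*\+?=(?!=)|\.insertAdjacentHTML\s*\(|document\.write(?:ln)?\s*\(/;

// Return every line of a JavaScript file that contains an HTML sink.
function findHtmlSinks(fileName, source) {
  return source.split('\n').flatMap((line, index) =>
    HTML_SINKS.test(line) ? [{ file: fileName, line: index + 1, code: line.trim() }] : []
  );
}
```

Bracket notation (`el['innerHTML'] = …`) and sinks hidden behind helper functions will slip through, so a clean result only means the obvious cases are absent.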

The developer has used the .innerHTML property to put new content into a new paragraph node and into the DOM. What the developer hasn’t considered is that the user can enter HTML code in the input field, as the app does by default (<h1>baaaad</h1>). Assigning to .innerHTML does not treat the <h1> tag as text but parses it as HTML, so the string "baaaad" ends up rendered as an actual heading.

This is a simple case of code injection. The default Content Security Policy in Firefox OS protects against the execution of injected JavaScript code: try "<script>alert(1);</script>" and it will be displayed as text, and nothing will pop up. CSP does not, however, protect against HTML code injection. Thus apps that assign user-supplied content to .innerHTML or .outerHTML are likely to be vulnerable to DOM-based XSS unless the content is properly sanitized.
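If user-supplied text really has to pass through .innerHTML, the minimum is to escape it. The following minimal sketch escapes the five characters with special meaning in HTML text and quoted attribute values. It is not a full sanitizer: it does nothing for URL or script contexts, for instance. The function name is hypothetical.

```javascript
// Escape characters that HTML would otherwise interpret as markup.
function escapeHTML(text) {
  return String(text)
    .replace(/&/g, '&amp;') // first, so the entities added below are not escaped again
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// In the app this would be used as: paragraph.innerHTML = escapeHTML(userInput);
```

With this in place, the default input `<h1>baaaad</h1>` is shown literally instead of becoming a heading.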

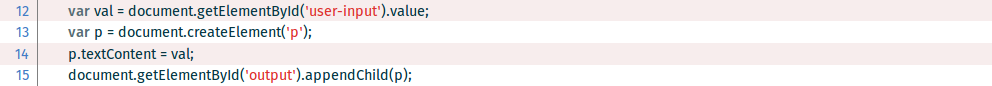

The best way to mitigate the risk (sanitization is not bulletproof, and some corner cases are easy to overlook) is to use .createElement instead of .innerHTML. This is demonstrated correctly in another function of /js/html-injection.js.
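The pattern looks roughly like this: build the element with createElement() and hand the user input over as text (via textContent, or a node from createTextNode()), so it is never parsed as HTML. This is our sketch, not DVFA’s code. The function name is hypothetical, and `doc` and `container` are parameters only so the function can be exercised outside a browser; in the app they would be `document` and the target element.

```javascript
// Append user input as a new paragraph without ever parsing it as HTML.
function appendUserParagraph(doc, container, userInput) {
  const paragraph = doc.createElement('p');
  // textContent stores the string verbatim; "<h1>" stays literal text.
  paragraph.textContent = userInput;
  container.appendChild(paragraph);
  return paragraph;
}
```

In the browser, a call would look like `appendUserParagraph(document, document.getElementById('output'), input.value)`, where the `output` id and `input` element are hypothetical.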

And next

Admittedly, real-world security reviews aren’t as straightforward as with the Damn Vulnerable FxOS App. But as a showcase app, it will be maintained to include the most common and serious security issues that we encounter in the Marketplace. This introduction could only dive into a few of them, so feel free to check out the GitHub repo from time to time to follow the latest developments in the area of bad code. If you want to dive deeper into the security of Firefox OS apps right away, we hope you find the concluding list of links to in-depth information helpful.