Web Speech API - Speech Recognition

Frequently Asked Questions

What is it?

The speech recognition part of the WebSpeech API allows websites to enable speech input within their experiences. Examples include Duolingo, Google Translate, and Google.com (for voice search).

What is it not?

- Speech recognition by the browser

- A translation service

- Text-to-speech/narration

- Always-on listening

- A voice assistant (see below)

- Voice search

Why are we doing it?

Chrome, Edge, Safari and Opera currently support a form of this API for speech-to-text, which means sites that rely on it work in those browsers, but not in Firefox. As speech input becomes more prevalent, it helps developers to have a consistent way to implement it on the web. It helps users because they will be able to take advantage of speech-enabled web experiences on any browser they choose. We can also offer a more private speech experience, as we do not keep identifiable information along with users’ audio recordings.

If nothing else, our lack of support for voice experiences is a web compatibility issue that will only become more of a handicap as voice becomes more prevalent on the web. We’ve therefore included the work needed to start closing this gap among our 2019 OKRs for Firefox, beginning with providing WebSpeech API support in Firefox Nightly.

What does it do?

When a user visits a speech-enabled website, they will use that site’s UI to start the process. It’s up to individual sites to determine how voice is integrated in their experience, how it is triggered and how to display recognition results.

As an example, a user might see a microphone button in a text field. When they click it, they will be prompted to grant temporary permission for the browser to access the microphone. Then they can input what they want to say (an utterance). Once they’ve finished their utterance, the browser passes the audio to a server, where it is run through a speech recognition engine. The speech recognizer decodes the audio and sends a transcript back down to the browser to display on a page as text.
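From the site's point of view, the flow described above might look roughly like the sketch below. It is illustrative only: `RecognizerLike` is a hand-written subset of the `SpeechRecognition` interface, declared locally so the sketch type-checks without DOM typings, and `startDictation` is a hypothetical helper name rather than part of the API.

```typescript
// Minimal subset of the SpeechRecognition interface used by this sketch.
// These *Like names are hypothetical and declared locally on purpose.
interface AlternativeLike { transcript: string; confidence: number; }
interface ResultEventLike { results: ArrayLike<ArrayLike<AlternativeLike>>; }
interface RecognizerLike {
  lang: string;
  onresult: ((event: ResultEventLike) => void) | null;
  onerror: ((event: { error: string }) => void) | null;
  start(): void;
}

// Hypothetical helper: wires a recognizer to callbacks and starts listening.
function startDictation(
  recognizer: RecognizerLike,
  lang: string,
  onText: (text: string, confidence: number) => void,
  onError: (reason: string) => void = () => {},
): void {
  recognizer.lang = lang;
  recognizer.onresult = (event) => {
    // Use the top alternative of the first result.
    const best = event.results[0]?.[0];
    if (best) onText(best.transcript, best.confidence);
  };
  recognizer.onerror = (event) => onError(event.error);
  // In a browser, starting recognition is what leads to the microphone prompt.
  recognizer.start();
}
```

In a page, the recognizer would be an instance of the browser's speech recognition constructor, and `onText` would typically write the transcript into the text field next to the microphone button. Depending on the typings available, a cast may be needed to pass the browser object to this helper.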

How can I use it?

First make sure you are running a Firefox Nightly newer than 72.0a1 (2019-10-22). Then type about:config in your address bar, search for the media.webspeech.recognition.enable and media.webspeech.recognition.force_enable preferences, and make sure both are set to true. Then navigate to a website that uses the API, such as Google Translate, select a language, click the microphone and say something. If your purpose is to develop using the API, you can find the documentation on MDN.
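If you are developing against the API, a page can check at runtime whether it is exposed before showing a microphone button. A minimal sketch, assuming the constructor appears under either the standard `SpeechRecognition` name or the `webkit`-prefixed name that Chrome uses; `findRecognitionCtor` is a hypothetical helper, and the scope is passed in as a parameter so the sketch can run outside a browser.

```typescript
// Returns the speech recognition constructor exposed on `scope`, or null.
// `scope` would normally be the page's global object (window).
function findRecognitionCtor(scope: Record<string, unknown>): unknown {
  return scope["SpeechRecognition"] ?? scope["webkitSpeechRecognition"] ?? null;
}
```

A page could call this with its global object and hide its voice UI when the result is `null`.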

Where does the audio go?

Firefox can specify which server receives the audio data that users input. Currently we are sending audio to Google’s Cloud Speech-to-Text. Google leads the industry in this space and has speech recognition in 120 languages.

Prior to sending the data to Google, however, Mozilla routes it through our own server's proxy first [1], in part to strip it of user identity information. This is intended to make it impractical for Google to associate such requests with a user account based just on the data Mozilla provides in transcription requests. (Google provides an FAQ for how they handle transcription data [2].) We opt out of allowing Google to store our voice requests. This means that, unlike when a user inputs speech using Chrome, their recordings are not saved and cannot be attached to their profile or kept indefinitely.

For Firefox, we can choose whether we hold on to users’ data to train our own speech services. Currently we have audio collection defaulted to off, but eventually would like to allow users to opt in if they choose.

Where are our servers and who manages them?

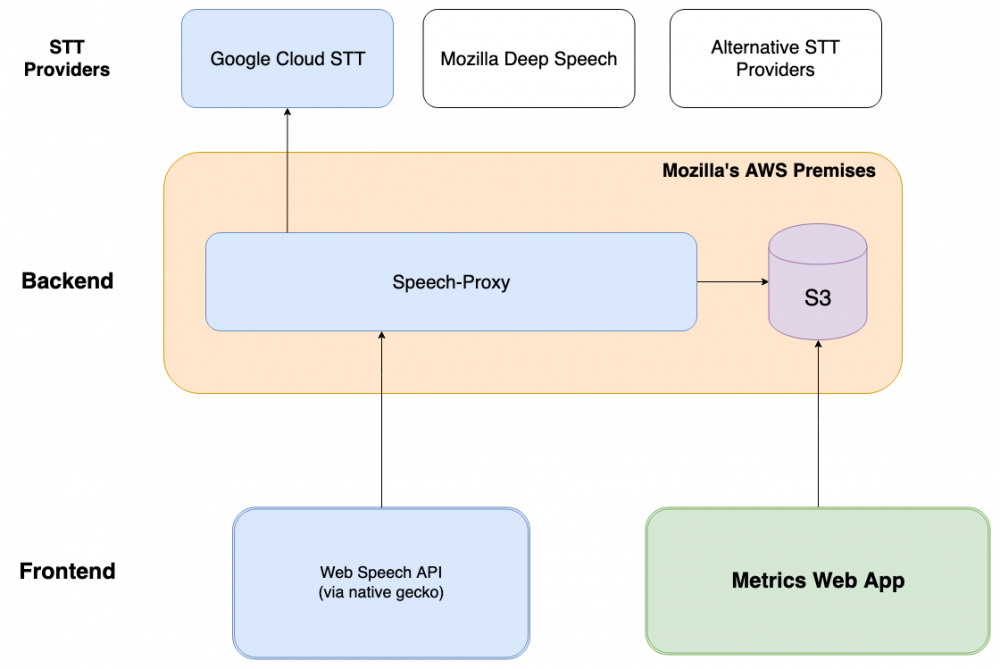

The entire backend is managed by Mozilla's cloudops and services team. Here is the current architecture:

A typical request follows the steps below:

- The Web Speech API code in the browser is responsible for prompting the user for permission to record from the microphone, determining when speaking has ended, and submitting the data to our speech proxy server. There are four headers that the client can use to alter the proxy's behavior [3]:

  * Accept-Language-STT: determines the language to be decoded by the STT service

  * Store-Sample: determines whether the user allows Mozilla to store the audio sample on our own servers for further use (training our own models, for example)

  * Store-Transcription: determines whether the user allows Mozilla to store the transcription on our own servers for further use (training our own models, for example)

  * Product-Tag: determines which product is making use of the API. It can be: vf for voicefill, fxr for Firefox Reality, wsa for Web Speech API, and so on.

- Once the proxy receives the request with the audio sample, it looks for the headers that were set. Nothing is saved other than what the user requested, plus a timestamp and the user agent. You can check it here: [4]

- The proxy then detects the format of the file and decodes it to raw PCM.

- A request is made to the STT provider set in the proxy's configuration file containing just the audio file.

- Once the STT provider returns a response containing a transcription and a confidence score, it is forwarded to the client, which is then responsible for taking action according to the user's request.
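The four request headers from the first step can be sketched as a small helper. The header names come from the list above; everything else is an assumption made for illustration, in particular the `ProxyRequestOptions` shape, the `buildProxyHeaders` name, and the encoding of the consent flags as "1" or "0", which the proxy's documentation [3] should confirm.

```typescript
// Options a client might expose; this shape is hypothetical.
interface ProxyRequestOptions {
  language: string;            // language to decode, e.g. "en-US"
  storeSample: boolean;        // user allows Mozilla to keep the audio
  storeTranscription: boolean; // user allows Mozilla to keep the transcript
  productTag: string;          // e.g. "wsa" for Web Speech API
}

// Builds the four headers described in the request steps.
// Assumption: boolean consent flags are sent as "1" / "0".
function buildProxyHeaders(opts: ProxyRequestOptions): Record<string, string> {
  return {
    "Accept-Language-STT": opts.language,
    "Store-Sample": opts.storeSample ? "1" : "0",
    "Store-Transcription": opts.storeTranscription ? "1" : "0",
    "Product-Tag": opts.productTag,
  };
}
```

A client would send these headers together with the recorded audio as the request body. With both store flags off, which matches the current default of no audio collection, the proxy has nothing to keep beyond the timestamp and user agent mentioned above.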

How does your proxy server work? Why do we have it?

There are both technical and practical reasons to have a proxy server. We wanted to have both the flexibility to abstract the redirection of the user's requests to different STT services without changing the client code, and also to have a single protocol to be used across all projects at Mozilla. But the most beneficial reason was to keep our users anonymous when we need to use a 3rd party provider. In this case, the requests to the provider are made from our own server and only the audio sample is submitted to get a transcription. Some benefits of routing the data through our speech proxy:

- Before sending the data to any 3rd party STT provider, we have the chance to strip the user's identifying information and make an anonymous request to the provider.

- When we need to use 3rd party and paid STT services, we don't need to ship the service's key along with the client's code.

- Centralizing and funneling the requests through our servers decreases the chance of abuse from clients and allows us to implement mechanisms like throttling, blacklists, etc.

- We can switch between the STT services in real time as we need/want and redirect the request to any service we choose, without changing any code in the client. For example: send English requests to provider A and pt-br to provider B without sending any update to the client.

- We can support STT services both on premises and off premises without having any extra logic in the client.

- We can centralize all requests coming from different products into a single speech endpoint, making it easier to measure the engines both quantitatively and qualitatively.

- We can support different audio formats without adding extra logic to the clients regardless of the format supported by the STT provider, like adding compression or streaming between the client and the proxy.

- If users wish to contribute to Mozilla's mission and let us save their audio samples, we can do so without sending them to 3rd party providers.
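The per-language switching mentioned in the list above (English to provider A, pt-br to provider B) could be expressed on the proxy side as a simple lookup. This is a sketch under stated assumptions, not the proxy's actual code: the provider names, the `providerByLanguage` table, and the `pickProvider` function are all hypothetical.

```typescript
// Hypothetical routing table: lowercase language tag -> STT provider name.
const providerByLanguage: Record<string, string> = {
  "en": "provider-a",
  "pt-br": "provider-b",
};
const defaultProvider = "provider-default";

// Picks a provider: exact tag match first, then the primary language
// subtag (the part before the first "-"), then the default.
function pickProvider(languageTag: string): string {
  const tag = languageTag.toLowerCase();
  const dash = tag.indexOf("-");
  const primary = dash === -1 ? tag : tag.slice(0, dash);
  return providerByLanguage[tag] ?? providerByLanguage[primary] ?? defaultProvider;
}
```

Because the table lives on the server, changing a route is a configuration change there and needs no client update, which is the point the list makes.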

There are three parts to this process - the website, the browser and the server. Which part does the current WebSpeech work cover?

The current work being added to Firefox Nightly is the browser portion of the process. It provides the path for the website to access the speech recognition engine on the server.

Can we not send audio to Google?

We can send the audio to any speech recognition service we choose. Mozilla is currently developing our own service called Deep Speech which we hope to validate in 2020 as a replacement for Google, at least in English. We may eventually use a variety of recognition engines for different languages.

Who pays for Google Cloud?

Currently the license for Google Cloud STT is being handled by our Cloud Ops team under our general contract with Google Cloud.

How can I test with Deep Speech?

Assuming you are using a Nightly version with the API enabled, you just need to point a preference at our Deep Speech-enabled endpoint (currently only English is available):

- Go to about:config

- Set the preference media.webspeech.service.endpoint to https://dev.speaktome.nonprod.cloudops.mozgcp.net/ (this endpoint is for testing purposes only)

- Navigate to Google Translate, click the microphone and say something.

Why not do it offline?

To do speech recognition offline, the speech recognition engine must be embedded within the browser. This is possible and we may do it eventually, but it is not currently planned for Firefox. We will, however, be testing offline speech recognition in Firefox Reality for Chinese users early in 2020. Depending on how those tests go, we may plan to extend the functionality elsewhere.

At one point back in 2015, there was an offline speech recognition engine (Pocketsphinx) embedded into Gecko for FirefoxOS. It was removed from the codebase in 2017 because it could not match the quality of recognition offered by server-based engines using deep neural nets.

But I still want to run offline

You can easily set up your own Deep Speech service locally on your computer using our service's Docker images. Just follow the steps below:

- First install and start the Deep Speech Docker image from here.

- Then install and start the speech-proxy Docker image from here with the environment variable ASR_URL set to the address of your Deep Speech instance.

- Set the preference media.webspeech.service.endpoint in your Nightly to the address of your speech-proxy instance.

- Navigate to Google Translate, click the microphone and say something.

- If it works, then you have the recognition happening 100% offline in your system.

Why are we holding WebSpeech support in Nightly?

We are in the process of working with Google and other partners to update the WebSpeech API spec. While that process is underway, we will need to make updates to our implementation to match. We will not ship WebSpeech support more broadly until there is consensus that the Standard is appropriate for Mozilla.

Are you adding voice commands to Firefox?

There are experiments testing the user value of voice operations within the browser itself, but that effort is separate from general WebSpeech API support. For more details on that work, dig in [[here]].

Have a question not addressed here?

Ask us on dev-platform@lists.mozilla.org