Labs/Ubiquity/Parser Documentation: Difference between revisions

No edit summary |

|||

| Line 3: | Line 3: | ||

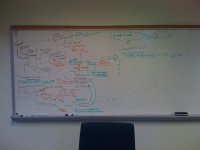

[[Image:Ubiquity_NLParser_Whiteboard_Diagram.jpg|200px|thumb|right|The parser architecture, as depicted by Jono DiCarlo.]] | [[Image:Ubiquity_NLParser_Whiteboard_Diagram.jpg|200px|thumb|right|The parser architecture, as depicted by Jono DiCarlo.]] | ||

= The Epic Journey = | |||

We will now follow the journey of a string through Ubiquity's parser. | We will now follow the journey of a string through Ubiquity's parser. | ||

| Line 10: | Line 10: | ||

trans hello world to ja | trans hello world to ja | ||

== Part 1: Parser plugin to PartiallyParsedSentences == | |||

=== Language-specific parser plugins=== | === Language-specific parser plugins=== | ||

| Line 37: | Line 39: | ||

The parser maintains a list of every command that's installed in Ubiquity, and the next step in the parsing process is to take the input verb and compare it to the name of each command to figure out which ones are potential matches, rank them by match quality, and eliminate the rest. | The parser maintains a list of every command that's installed in Ubiquity, and the next step in the parsing process is to take the input verb and compare it to the name of each command to figure out which ones are potential matches, rank them by match quality, and eliminate the rest. | ||

=== Meanwhile: Parsing the Arguments === | |||

(Also language-dependent) | |||

=== Assigning input substrings to command arguments === | |||

To create PartiallyParsedSentences | |||

=== If there is no verb match: Noun-First Suggestions === | === If there is no verb match: Noun-First Suggestions === | ||

| Line 68: | Line 72: | ||

In the unlikely event that the input and the selection are both empty, then the parser returns an empty suggestion list. | In the unlikely event that the input and the selection are both empty, then the parser returns an empty suggestion list. | ||

== Scoring and Sorting Suggestions == | |||

=== Scoring the Quality of the Verb Match === | === Scoring the Quality of the Verb Match === | ||

| Line 75: | Line 81: | ||

The current heuristic is extremely ad-hoc, but produces the ordering we want... so far. | The current heuristic is extremely ad-hoc, but produces the ordering we want... so far. | ||

When attempting to match an input verb to a command name, the parser tries the following types of matches: | |||

If the input word matches the beginning of the verb name, it gets a good score, between 0.75 and 1.0. The more percentage of the verb name that is matched, the higher the score is within this range. (All else being equal, this will rank short verb names before longer ones; I'm not sure this is really needed or correct.) | #A perfect match between the input word and the verb name earns the maximum score, 1.0. | ||

#If the input word matches the beginning of the verb name, e.g. input is "trans", command is "translate": it gets a good score, between 0.75 and 1.0. The more percentage of the verb name that is matched, the higher the score is within this range. (All else being equal, this will rank short verb names before longer ones; I'm not sure this is really needed or correct.) | |||

If the input word matches the verb name, but not at the beginning, (e.g.: input is "cal", verb is "add-to-calendar") then it gets a score between 0.5 and 0.75, again scaled by the percentage of the verb name that is matched. | #If the input word matches the verb name, but not at the beginning, (e.g.: input is "cal", verb is "add-to-calendar") then it gets a score between 0.5 and 0.75, again scaled by the percentage of the verb name that is matched. Match in the middle is not as good as match at the beginning, because it's assumed that if the user types "ans" they are more likely to want "answers" than "translate". | ||

#If the input word matches the beginning of a ''synonym'' of the verb name, it gets a score between 0.25 and 0.5. For example, "tweet" is a synonym for "twitter", so if the input is "twee" then "twitter" will go in this category. (Note this means that any match to a synonym is ranked below any match to a primary name. This is to prevent synonyms from colonizing the namespace.) | |||

If the input word matches the beginning of a ''synonym'' of the verb name, it gets a score between 0.25 and 0.5. (Note this means that any match to a synonym is ranked below any match to a primary name.) | #If the input word matches a synonym of the verb name, but not at the beginning, it gets a score between 0 and 0.5. For example, matching "eet" to "twitter" because it matches the end of "tweet". This type of match is considered a real long-shot, but better than nothing. | ||

If the input word matches a synonym of the verb name, but not at the beginning, it gets a score between 0 and 0.5. | |||

No match at all gets a 0. | No match at all gets a 0. | ||

| Line 93: | Line 97: | ||

( [https://ubiquity.mozilla.com/hg/ubiquity-firefox/file/71b710040206/ubiquity/modules/parser/parser.js#l127 NLParser.Parser._sortSuggestionList() in parser.js] ) | ( [https://ubiquity.mozilla.com/hg/ubiquity-firefox/file/71b710040206/ubiquity/modules/parser/parser.js#l127 NLParser.Parser._sortSuggestionList() in parser.js] ) | ||

=== Interpolating magic pronouns like "this" (or not) === | === Interpolating magic pronouns like "this" (or not) === | ||

Revision as of 01:15, 31 January 2009

Back to Labs/Ubiquity.

The Epic Journey

We will now follow the journey of a string through Ubiquity's parser.

Suppose that the user enters the following into the parser:

trans hello world to ja

Part 1: Parser plugin to PartiallyParsedSentences

Language-specific parser plugins

To make internationalization easier, all operations which are (human)-language-dependent are handled by language-specific plugins. For instance, in some languages the verb comes before the object, in other languages after the object, etc., so figuring out which part of the sentence is the verb is language-dependent.

See NLParser.makeParserForLanguage() in parser.js for how the parser chooses a particular language plugin to use; this is called on initialization. See NLParser.Parser.updateSuggestionList() in parser.js for how the parser dispatches the user input to the parser plugin.

So if Ubiquity is in English mode, then the sentence is dispatched to the English-specific parser plugin for the first stage of parsing. The entry point here is the function EnParser.parseSentence() in file locale_en.js.

For an example of how parsing works differently in other languages, see file locale_jp.js, the Japanese parser plugin, which would be called instead of the English one if the language had been set to "jp".

The job of the language-specific plugin is twofold:

- To find the verb in the input, so that it can be matched against command names

- To generate every valid assignment of input substrings to arguments of the command.

Where's the Verb?

Once inside the language-specific plugin, things might happen in a different order depending on the language, but we'll keep focusing on the English case for now.

The English parser starts by finding the verb. Currently it does this by splitting the input string on spaces, and taking the first word (i.e. everything up to the first space) as the verb. In our example, "trans" is our verb, and ["hello", "world", "to", "ja"] is the rest of our sentence.

(Note: this simplistic way of getting the verb currently limits us to having only single-word command names. It needs to be changed to support multi-word commands. We also need to consider whether we want to support the case of the user entering the object first and the verb afterward.)

Looking for a Verb Match

The parser maintains a list of every command that's installed in Ubiquity, and the next step in the parsing process is to take the input verb and compare it to the name of each command to figure out which ones are potential matches, rank them by match quality, and eliminate the rest.

Meanwhile: Parsing the Arguments

(Also language-dependent)

Assigning input substrings to command arguments

To create PartiallyParsedSentences

If there is no verb match: Noun-First Suggestions

NLParser.Parser.updateSuggestionList() in parser.js

NLParser.Parser.nounFirstSuggestions() in parser.js

If the input verb doesn't match *any* command names at all, the parser will try to look for a noun match. That is, the parser will assume that the user has skipped typing the verb and gone straight to typing an argument. It will treat the entire input string as a noun, and produce a list of verbs that could possibly take that noun as an argument.

In practice, many commands take arguments of type noun_arb_text, meaning that they can accept *any input whatsoever* as an argument. That means that these commands will always appear as part of valid verb list when doing noun-first suggestion. This isn't the most useful thing, so the parser draws a somewhat arbitrary distinction at this point between *generic* verbs (verbs that take any string whatsoever) and *specific* verbs (verbs that accept only some arguments and reject others) -- for instance the "tab" command, which accepts only names of open tabs as arguments. When doing noun-first suggestions, specific verbs that can accept the input are always ranked over generic verbs.

Generic verbs are ranked by the frequency with which they have been chosen as noun-first completions.

Verb quality matches play no part in ranking noun-first suggestions, because there is no verb match quality to compare. Only the frequency of past usage and the quality of the noun matches make any difference.

As an optimization, the parser doesn't actually try out every generic verb. Instead, it asks the Suggestion Memory module (see also) for the top N most often used generic verbs, and compares only to those. This doesn't make a difference in the final suggestions because less-frequently-used generic verbs wouldn't make it into the top of the list anyway; skipping them speeds things up.

If the input string is empty

NLParser.Parser.updateSuggestionList() in parser.js

Then the parser looks at the user selection. If it's non-empty, then we assume that the user is looking for suggestions of verbs that could operate on this selection. This case is then handled exactly like the case above, with the sole difference that the "argument" is coming from the selection and not from the typed input.

In the unlikely event that the input and the selection are both empty, then the parser returns an empty suggestion list.

Scoring and Sorting Suggestions

Scoring the Quality of the Verb Match

(NLParser.Verb.match() in parser.js)

The current heuristic is extremely ad-hoc, but produces the ordering we want... so far.

When attempting to match an input verb to a command name, the parser tries the following types of matches:

- A perfect match between the input word and the verb name earns the maximum score, 1.0.

- If the input word matches the beginning of the verb name, e.g. input is "trans", command is "translate": it gets a good score, between 0.75 and 1.0. The more percentage of the verb name that is matched, the higher the score is within this range. (All else being equal, this will rank short verb names before longer ones; I'm not sure this is really needed or correct.)

- If the input word matches the verb name, but not at the beginning, (e.g.: input is "cal", verb is "add-to-calendar") then it gets a score between 0.5 and 0.75, again scaled by the percentage of the verb name that is matched. Match in the middle is not as good as match at the beginning, because it's assumed that if the user types "ans" they are more likely to want "answers" than "translate".

- If the input word matches the beginning of a synonym of the verb name, it gets a score between 0.25 and 0.5. For example, "tweet" is a synonym for "twitter", so if the input is "twee" then "twitter" will go in this category. (Note this means that any match to a synonym is ranked below any match to a primary name. This is to prevent synonyms from colonizing the namespace.)

- If the input word matches a synonym of the verb name, but not at the beginning, it gets a score between 0 and 0.5. For example, matching "eet" to "twitter" because it matches the end of "tweet". This type of match is considered a real long-shot, but better than nothing.

No match at all gets a 0.

The algorithm doesn't currently recognize disjoint or out-of-order matches. E.g. if the user typed "add cal", they might mean "add-to-calendar", and we might detect that fact if we did disjoint matches, but we don't so this will get a score of 0. It might be worth experimenting with matches like this, but how to rank them?

Scoring the Frequency of the Verb Match

( NLParser.Parser._sortSuggestionList() in parser.js )

Interpolating magic pronouns like "this" (or not)

Getting suggestions from NounTypes

This can be asynchronous

Rejecting parsings with invalid argument assignments

If a NounType is given input, and it returns an empty suggestion list, then that noun assignment is considered invalid. This invalidates the PartiallyParsedSentence, and no fully ParsedSentences are created from it.

For example, if the input was

trans hello world to blarg

Then the PartiallyParsedSentences are:

- verb = TRANSLATE, directObject = "hello world to blarg", to = "", from = ""

- verb = TRANSLATE, directObject = "hello world", to = "blarg", from = ""

The "to" argument is noun_type_language. When it's given input "blarg", it produces no suggestions. Therefore the PartiallyParsedSentence that assigns the input string "blarg" to the "to" argument is considered invalid and dropped.

Note the difference between an invalid argument assignment, and leaving an argument unfilled. A PartiallyParsedSentence that leaves an argument unfilled is still valid. The above input would still produce a suggestion that treats "hello world to blarg" as the string to be translated, with the languages yet to be specified.

Turning PartiallyParsedSentences into FullyParsedSentences

Filling missing arguments with the selection

Filling missing arguments with the NounType's defaults

Scoring the Quality of the Noun Matches

Each ParsedSentence gets assigned a third score: noun match quality. This is distinct from its verb match quality and its frequency score.

Once again, we draw a distinction between noun_arb_text (the type of argument that can accept any input whatsoever) and more discriminating noun types. Currently a ParsedSentence gets +1 to its noun match quality for every specific noun type argument that is filled by the input.

So for example, in parsing "trans hello world to ja", we have these three fully parsed sentences:

- verb = TRANSLATE, direct object = "hello world to ja", to = unfilled, from = unfilled

- verb = TRANSLATE, direct object = "hello world", to = "japanese", from = unfilled

- verb = TRANSLATE, direct object = "hello world", to = "javanese", from = unfilled

The direct object is worth no points because it's arbitrary text. The "to" and "from" arguments are a specific noun type (language) and therefore worth one point if filled. So ParsedSentence number 1 gets 0 points for noun match quality, while ParsedSentences number 2 and number 3 get 1 point each.

This is an extremely simplistic noun match quality ranking. Replacing it with a real measurement, one that takes into account string distance or past choice frequency of noun suggestions, is a major opportunity for future evolution of the parser.