Media/WebRTC Audio Perf

Revision as of 01:55, 10 December 2013

Note: Much of what is described here is still a work in progress and is expected to change over time.

Introduction

This effort aims to build tools and frameworks for analyzing audio and video quality in the Firefox WebRTC implementation. WebRTC involves peer-to-peer rich multimedia communication across a variety of end-user devices and network conditions. The framework must provide the means to simulate or emulate these usage configurations in order to analyze the behavior of the WebRTC implementation in Firefox.

Background

Typical WebRTC Media Pipeline

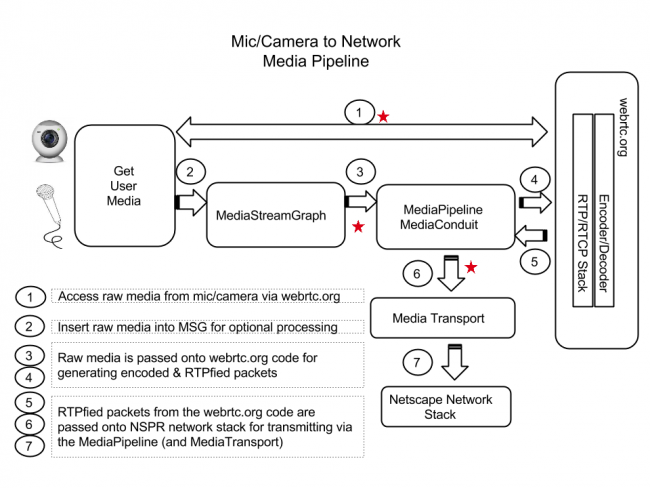

The picture above captures the various components involved in the flow of media, from capture at the mic/camera until it is transported. The reverse direction follows a similar path until the RTP packets are delivered as raw media for rendering.

With several moving components in the pipeline, it becomes necessary to analyze the impact they might have on the overall quality of the media being transmitted or rendered. For instance, the parts of the pipeline marked with a star can induce latency and affect the quality of the encoded media. Being able to measure, analyze and account for these impacts can help improve the performance of the Firefox WebRTC implementation.

Note that the pipeline does not capture the impact of network conditions such as limited bandwidth, latency and congestion.

Scope

The following is a wishlist of functionality that the framework must eventually support.

- Audio and Video Quality Analysis

- Quantitative measurements

- Qualitative measurements

- Latency Impact Analysis

- End to End Latency

- Latency impacts due to local processing

- Latency impacts under simulated constrained network conditions

- RTCP based analysis

- Codec Configuration Variability Analysis

- Sample Rate, Input and Output Channels, Reverse Channels, Echo Cancellation, Gain Control, Noise Suppression, Voice Activity Detection, Level Metrics, Delay, Drift compensation, Echo Metrics

- Video Frame-rate, bitrate, resolutions

- Hardware and Platform Variability Analysis

Ongoing Work

Audio Performance Framework

Bug 909524 is an ongoing effort to provide a minimal set of components that serve as a good starting point for carrying out Peer Connection audio quality analysis as part of Mozilla's Talos framework.

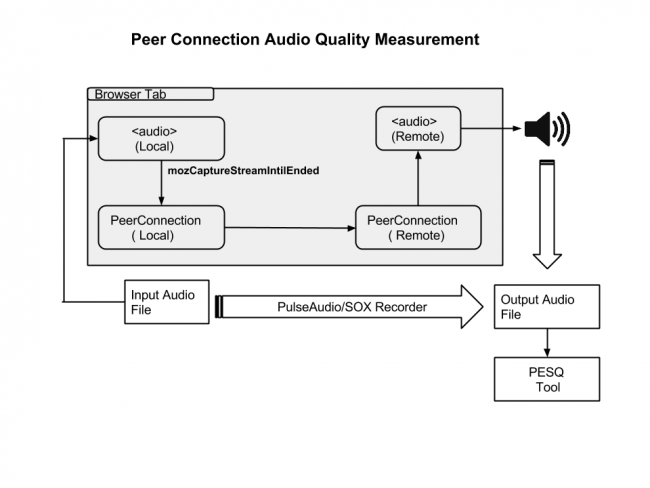

The picture above captures the test setup for reporting Perceptual Evaluation of Speech Quality (PESQ) scores for audio played through the Peer Connection.

The idea is to set up two Peer Connections within a single browser tab to establish a one-way audio call, and then to compute PESQ scores between the input audio file fed into the local Peer Connection and the output audio file recorded at the play-out of the remote Peer Connection, all in a fully automated fashion.

The following sub-sections explain in detail the steps involved.

Running Browser Based Media Test Automatically

Talos is Mozilla's Python performance testing framework, usable on Windows, Mac and Linux. Talos provides an automated way to start and stop Firefox, run tests, and capture results to be reported to Mozilla's graphing server across various Firefox builds.

Talos is used in our setup to run media tests along with other start-up and page-loader performance tests.

Feeding output of <audio> to the Peer Connection

Once we have a framework for running automated media tests, the next step is deciding how to insert the input audio file into the WebRTC Peer Connection.

For this purpose, mozCaptureStreamUntilEnded from Mozilla's MediaStreamProcessing API enables the <audio> element to produce a MediaStream consisting of whatever the <audio> element is playing. The stream produced this way takes the place of the media stream normally obtained via the WebRTC GetUserMedia API. Finally, the generated MediaStream is added to the local Peer Connection via the addStream() API as shown below.

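// localAudio is an <audio> element, localPC is a PeerConnection object

localAudio.src = "input.wav";

// Capture whatever the <audio> element plays as a MediaStream

localAudioStream = localAudio.mozCaptureStreamUntilEnded();

localAudio.play();

// Feed the captured stream into the local Peer Connection

localPC.addStream(localAudioStream);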

Record, Cleanup and Compute PESQ

From the previous two sub-sections we have the following:

- A way to run automated browser tests using Talos

- A way of feeding audio from an input audio file to the Peer Connection without having to use the GetUserMedia() API

This sub-section explains the tools used to choose the right audio sink, record the audio played out, and compute PESQ scores.

- PulseAudio's pactl tool is used to find the right sink to play-out the audio produced by the remote Peer Connection.

- PulseAudio's parec tool records the mono-channel audio played out at the sink in signed 16-bit little-endian format at 16000 samples/sec.

- The output from the parec tool is fed into the SOX tool to generate a .WAV version of the recorded audio and to trim silence at the beginning and end of the recorded audio file.

Command:

parec -r -d <recording device> --format=s16le -c 1 -r 16000 | sox -t raw -r 16000 -sLb 16 -c 1 - <output audio file> trim 0 <record-duration>
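The recording device passed to parec has to be derived from the sink that pactl reports. The following Python sketch shows one way this lookup could work. It is not the framework's actual code: it assumes the tab-separated layout printed by `pactl list short sinks` (index, name, module, sample spec, state) and the PulseAudio convention that a sink's monitor source is named `<sink name>.monitor`. The function names and the "prefer a RUNNING sink" policy are hypothetical.

```python
import subprocess


def parse_short_sinks(pactl_output):
    """Parse `pactl list short sinks` text into (index, name, state) tuples.

    Assumes tab-separated columns: index, name, module, sample spec, state.
    """
    sinks = []
    for line in pactl_output.splitlines():
        fields = line.split("\t")
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        state = fields[4] if len(fields) > 4 else ""
        sinks.append((int(fields[0]), fields[1], state))
    return sinks


def monitor_source_for(sinks, preferred_state="RUNNING"):
    """Pick a sink (hypothetical policy: prefer one that is currently
    playing) and return the name of its monitor source."""
    if not sinks:
        raise ValueError("no PulseAudio sinks found")
    candidates = [s for s in sinks if s[2] == preferred_state] or sinks
    _, name, _ = candidates[0]
    return name + ".monitor"


def find_recording_device():
    """Ask a live PulseAudio server for its sinks and choose a device."""
    output = subprocess.check_output(["pactl", "list", "short", "sinks"])
    return monitor_source_for(parse_short_sinks(output.decode("utf-8")))
```

Keeping the parsing separate from the `pactl` call means the selection logic can be checked against canned output on machines without PulseAudio.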

The recording is timed to match the length of the input audio file using SOX's trimming effects.
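As a rough illustration of how the record duration could be derived and the pipeline assembled from a Python harness, consider the sketch below. It is an assumption-laden example, not the framework's implementation: the function names are hypothetical, the input is assumed to be a PCM WAV file readable by Python's `wave` module, and the parec and sox flags are copied verbatim from the command above.

```python
import subprocess
import wave

SAMPLE_RATE = 16000  # samples/sec, matching the command above


def wav_duration_seconds(path):
    """Return the play time of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / float(wav.getframerate())


def build_record_pipeline(device, output_path, duration):
    """Return (parec_argv, sox_argv) mirroring the documented command."""
    parec_argv = ["parec", "-r", "-d", device, "--format=s16le",
                  "-c", "1", "-r", str(SAMPLE_RATE)]
    sox_argv = ["sox", "-t", "raw", "-r", str(SAMPLE_RATE), "-sLb", "16",
                "-c", "1", "-", output_path, "trim", "0", str(duration)]
    return parec_argv, sox_argv


def record(device, input_wav, output_wav):
    """Record from `device` for as long as `input_wav` lasts."""
    duration = wav_duration_seconds(input_wav)
    parec_argv, sox_argv = build_record_pipeline(device, output_wav, duration)
    parec = subprocess.Popen(parec_argv, stdout=subprocess.PIPE)
    try:
        # sox is expected to exit once `trim` has taken `duration` seconds.
        subprocess.check_call(sox_argv, stdin=parec.stdout)
    finally:
        # parec records indefinitely, so stop it explicitly.
        parec.stdout.close()
        parec.terminate()
        parec.wait()
```

For example, `record("<recording device>", "input.wav", "output.wav")` would produce a recording of the same length as `input.wav`, which is what the PESQ comparison expects.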

- Finally, PESQ is used to compute the quality scores between the input audio file (original) and the output audio file (recorded).

Command:

PESQ +16000 <input-audio-file> <output-audio-file>
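To report the scores automatically, the harness needs to extract them from the tool's text output. The sketch below shows one possible approach. It assumes the PESQ binary prints a line containing the word "Prediction" followed by "=" and one or two numeric scores. That format is an assumption about the tool's output and should be checked against the build in use. The function names are hypothetical.

```python
import re
import subprocess

# Captures one or two numbers following "=" on a "Prediction" line.
_PREDICTION = re.compile(r"Prediction.*=\s*([0-9.]+)(?:\s+([0-9.]+))?")


def parse_pesq_scores(pesq_output):
    """Return the scores found on the last 'Prediction' line as floats."""
    scores = None
    for line in pesq_output.splitlines():
        match = _PREDICTION.search(line)
        if match:
            scores = [float(g) for g in match.groups() if g is not None]
    if scores is None:
        raise ValueError("no PESQ prediction line found")
    return scores


def run_pesq(input_wav, output_wav, sample_rate=16000):
    """Run the PESQ command shown above and return the parsed scores."""
    argv = ["PESQ", "+%d" % sample_rate, input_wav, output_wav]
    return parse_pesq_scores(subprocess.check_output(argv).decode("utf-8"))
```

Raising an error when no prediction line is found lets a failed or silent recording show up as a test failure rather than as a missing data point.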

TODO Feature List

These are near-term items that, if implemented, would improve the framework:

- Support different audio formats and lengths

- Provide tool support across platforms. Currently only Linux is supported.

- Discuss the generated results and their integration with DataZilla and GraphServer

- Allow configuration options to specify sample rates, number of channels and encoding.