User talk:Dead project

Abstract

The goal of this project is to bring the power of computer vision and image processing to the Web. By extending the Media Capture and Streams specification, we let web developers write video processing applications more easily. The primary idea is to connect Worker-based JavaScript video processing with MediaStreamTrack. The user's video processing script then does the actual image processing and analysis, frame by frame.

Introduction

For a quick overview of Project FoxEye, please see the files below:

Presentation at the Portland Work Week: File:Project FoxEye Portland Work Week.pdf

Presentation at the P2PWeb Workshop: File:Project FoxEye 2015-Feb.pdf

YouTube: https://www.youtube.com/watch?v=TgQWEWiGaO8

The need for image processing and computer vision has grown in recent years. The introduction of the video element and media streams in HTML5 was very important, enabling basic video playback and webcam access. But these are not powerful enough for complex video processing and camera applications. On Android in particular, a huge number of camera, photo, and video editor applications show their creativity by using OpenCV and similar libraries. A goal of this project is to bring the capabilities found in modern video production and camera applications to the Web, along with some processing, recognition, and stitching tasks.

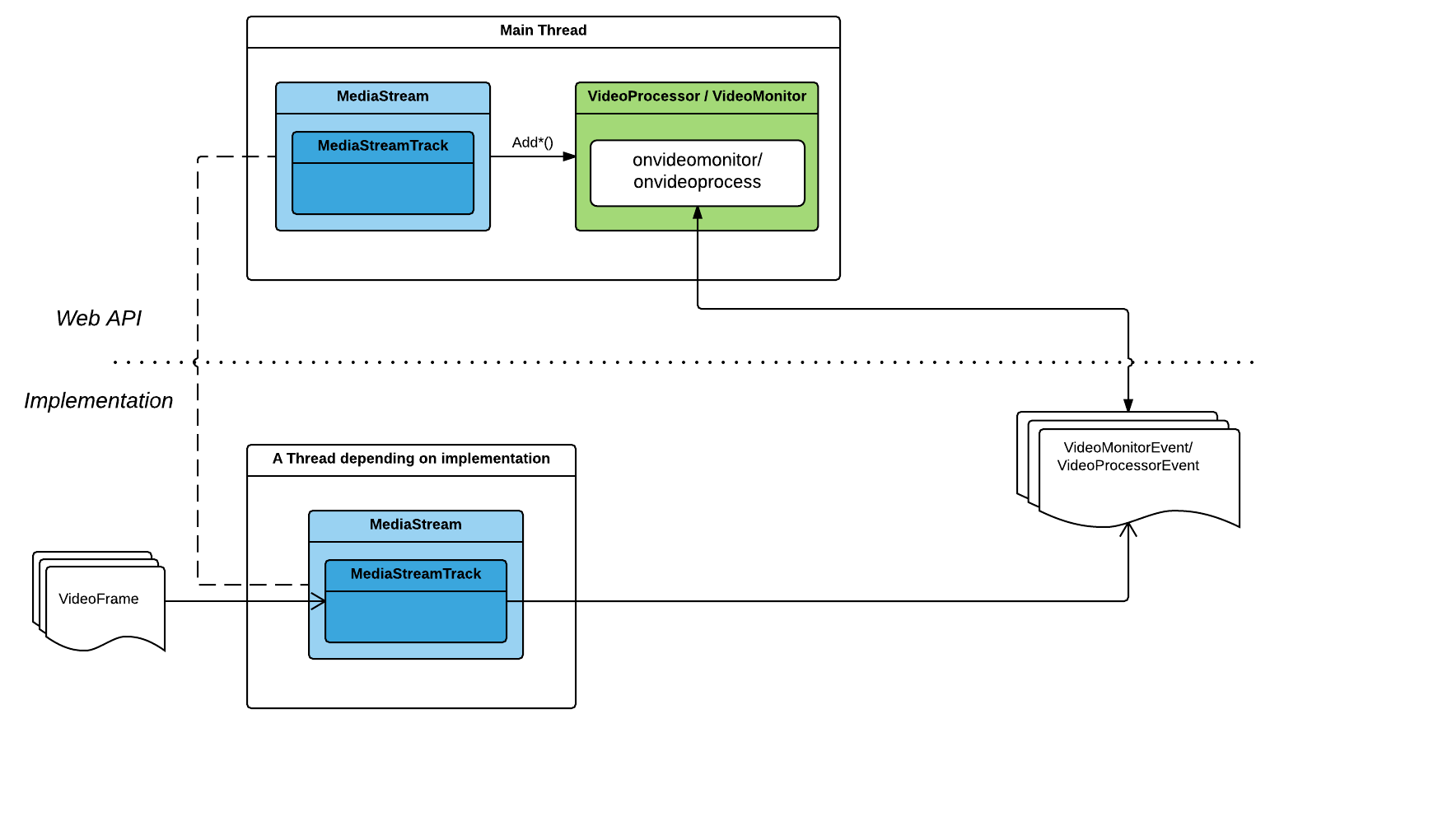

This API is inspired by the WebAudio API [1]. Unlike the WebAudio API, we try to reach the goal by modifying the existing Media Capture and Streams API. The idea is to add some functions that associate a Worker-based script with a MediaStreamTrack. The Worker's script then runs image processing and/or analysis frame by frame. Since most of the processing work moves to the Worker, the high-level image processing API can easily be implemented in either JavaScript or native code.

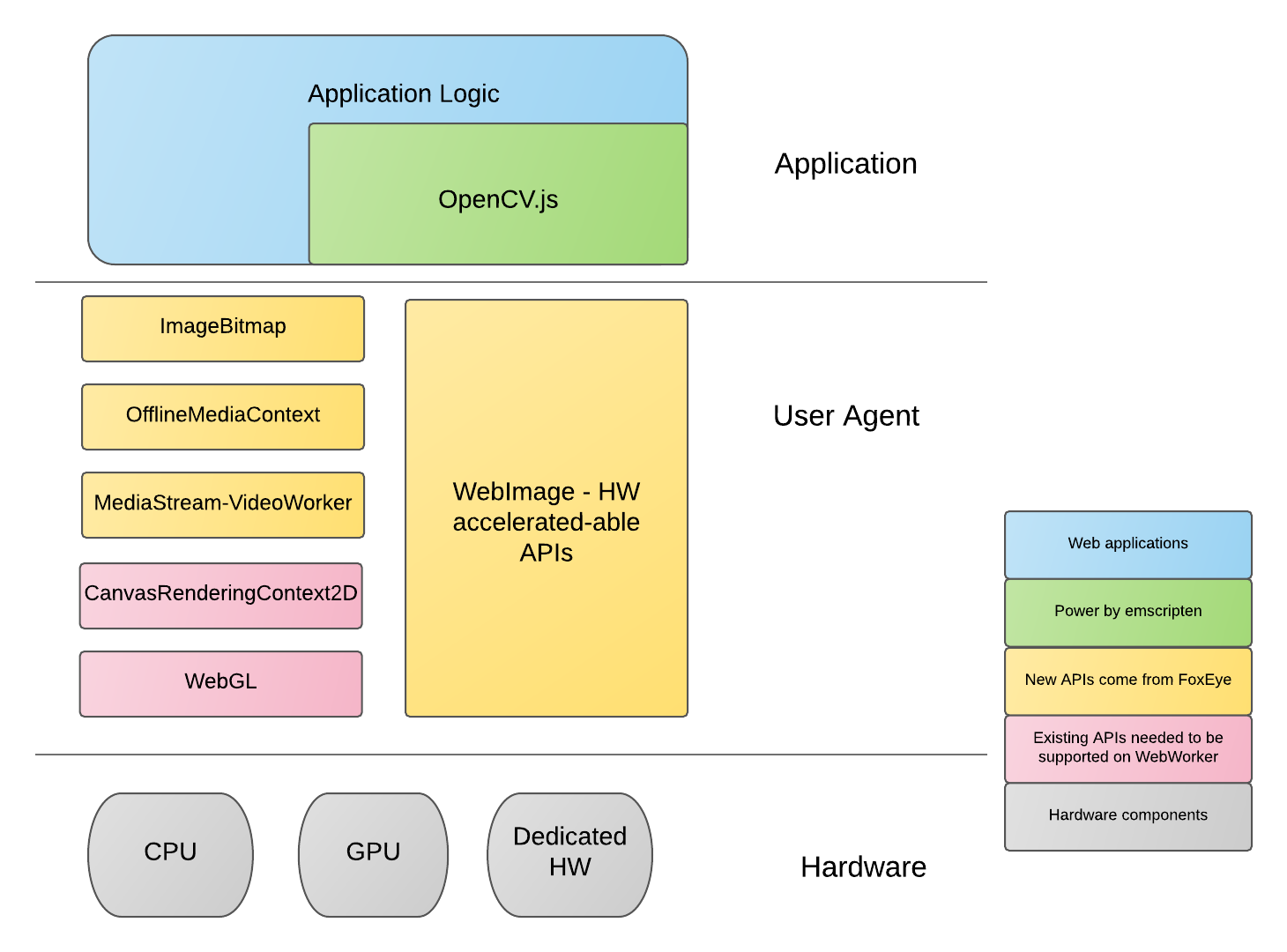

Basically, this project has two parts. The first part extends MediaStreamTrack so that it can be associated with a VideoWorker; this provides a way to do image processing frame by frame. The second part is WebImage, an image processing/computer vision library for JavaScript developers. App developers can use it if they don't want to build such a library on their own. Once the project is finished, JavaScript developers will be able to write applications like Amazon Firefly and Word Lens more easily.

Concept

Real Time Processing:

The new design is a simple, minimal change to the current API. By extending MediaStreamTrack and adding VideoWorker-related APIs, we let a MediaStream support video processing through script code running in a Worker. Below is the draft WebIDL. Please see [] for the draft specification.

    [Constructor(DOMString scriptURL)]
    interface VideoWorker : Worker {
      void terminate ();
      [Throws]
      void postMessage (any message, optional sequence<any> transfer);
      attribute EventHandler onmessage;
    };

    partial interface MediaStreamTrack {
      void addWorkerMonitor (VideoWorker worker);
      void removeWorkerMonitor (VideoWorker worker);
      MediaStreamTrack addWorkerProcessor (VideoWorker worker);
      void removeWorkerProcessor ();
    };

    interface VideoWorkerGlobalScope : WorkerGlobalScope {
      [Throws]
      void postMessage (any message, optional sequence<any> transfer);
      attribute EventHandler onmessage;
      attribute EventHandler onvideoprocess;
    };

    interface VideoProcessEvent : Event {
      readonly attribute DOMString trackId;
      readonly attribute double playbackTime;
      readonly attribute ImageBitmap inputImageBitmap;
      readonly attribute ImageBitmap? outputImageBitmap;
    };

The example below runs text recognition in a VideoWorker. Inside the VideoWorker, the developer can directly use the asm.js build of OpenCV.

But that means the developer or library provider must supply a way to convert an ImageBitmap to OpenCV's cv::Mat type. The alternative is to provide a new API (WebImage) that deals with these kinds of interface problems. We can start this kind of implementation with B2G first.
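To illustrate the kind of glue code this conversion implies, here is a minimal sketch that turns RGBA pixel data into a single-channel, row-major 8-bit grayscale matrix, similar in layout to a CV_8UC1 cv::Mat. It assumes the pixels are already available in the layout `getImageData()` returns (an ImageBitmap does not expose its pixels directly; one way to read them is to draw it onto a canvas first). The `rgbaToGrayMat` name and the `{ rows, cols, channels, data }` object are hypothetical, not part of WebImage or OpenCV; the weights are the ITU-R BT.601 luma coefficients (0.299, 0.587, 0.114) that OpenCV documents for its RGB-to-gray conversion.

```javascript
// Hypothetical helper: convert RGBA pixel data (ImageData layout: 4 bytes per
// pixel, row-major, in R G B A order) into a single-channel 8-bit grayscale
// matrix, similar in layout to an OpenCV CV_8UC1 cv::Mat.
function rgbaToGrayMat(rgba, width, height) {
  if (rgba.length !== width * height * 4) {
    throw new RangeError("expected width * height * 4 RGBA bytes");
  }
  var gray = new Uint8Array(width * height);
  for (var i = 0, p = 0; i < gray.length; i++, p += 4) {
    // ITU-R BT.601 luma weights; the alpha channel is ignored.
    gray[i] = Math.round(0.299 * rgba[p] + 0.587 * rgba[p + 1] + 0.114 * rgba[p + 2]);
  }
  return { rows: height, cols: width, channels: 1, data: gray };
}

// Usage: a 2x1 image with one pure red pixel and one pure white pixel.
var mat = rgbaToGrayMat(new Uint8ClampedArray([255, 0, 0, 255, 255, 255, 255, 255]), 2, 1);
console.log(mat.rows, mat.cols, Array.from(mat.data)); // gray values: 76 (red), 255 (white)
```

A real binding would hand the resulting buffer to OpenCV (or to WebImage) without further copying; this sketch only shows the pixel-layout translation that someone has to own.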

Example Code 1

Main JavaScript file:

    var myMediaStream;
    navigator.getUserMedia({video: true, audio: false}, function (localMediaStream) {
      myMediaStream = localMediaStream;
      var videoTracks = myMediaStream.getVideoTracks();
      var track = videoTracks[0];
      // addWorkerMonitor() takes a VideoWorker (see the WebIDL above).
      var myWorker = new VideoWorker("textRecognition.js");
      track.addWorkerMonitor(myWorker);
      myWorker.onmessage = function (oEvent) {
        console.log("Worker recognized: " + oEvent.data);
      };
      // A monitor does not modify the track, so play the camera stream itself.
      var elem = document.getElementById("videoelem");
      elem.mozSrcObject = myMediaStream;
      elem.play();
    }, function (err) {
      console.error("getUserMedia failed: " + err);
    });

textRecognition.js:

    // Runs in the VideoWorkerGlobalScope.
    var textDetector = null;
    onvideoprocess = function (event) {
      var img = event.inputImageBitmap;
      // Create the detector lazily, once the frame size is known.
      if (!textDetector) {
        textDetector = WebImage.createTextDetector(img.width, img.height);
      }
      // Do text recognition.
      // We might use a built-in detection function or OpenCV in asm.js.
      var words = textDetector.findText(img);
      var recognizedText = words.join(" ");
      postMessage(recognizedText);
    };

WebImage:

WebImage is a high-level image processing and analysis library. I won't cover it in detail on this page yet; at this stage I am focusing on the worker part.

The underlying implementation of this library can be asm.js or native code, depending on performance needs. Some features might run poorly as asm.js on B2G. We will run some experiments, such as comparing the performance of OpenCV compiled to asm.js against native OpenCV on B2G.

We might also need WebImage for hardware-accelerated cases. For example, we can provide built-in detection functions such as face detection.

In summary, if performance is not a concern, we will use the asm.js version as much as we can; the deciding factor is performance. For example, if we need text recognition on B2G, performance is critical, and the asm.js version of Tesseract can't run smoothly, then we switch to a native built-in implementation.
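The decision rule above can be written down as a small function. This is only a sketch under assumptions: the per-frame timings would come from experiments such as the asm.js vs. native OpenCV comparison on B2G, and the names `chooseBackend`, `asmjsMsPerFrame`, `targetFps`, and `nativeAvailable` are hypothetical. The page does not say what happens when asm.js is too slow and no native version exists; the sketch assumes asm.js is still used in that case.

```javascript
// Hypothetical sketch of the WebImage backend rule: prefer the portable
// asm.js build, and switch to a native built-in implementation only when
// asm.js cannot meet the per-frame time budget.
function chooseBackend(opts) {
  var budgetMs = 1000 / opts.targetFps; // time available to process one frame
  if (opts.asmjsMsPerFrame <= budgetMs) {
    return "asmjs";                     // fast enough: stay cross-platform
  }
  if (opts.nativeAvailable) {
    return "native";                    // performance-critical case
  }
  return "asmjs";                       // assumption: no native build, run slower
}

// Usage: text recognition at 30 fps on two hypothetical devices.
console.log(chooseBackend({ targetFps: 30, asmjsMsPerFrame: 20, nativeAvailable: true }));
console.log(chooseBackend({ targetFps: 30, asmjsMsPerFrame: 120, nativeAvailable: true }));
```

Keeping the rule in one place means an app written against WebImage does not change when a platform gains a faster native implementation.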

Why do we need WebImage:

- For performance-critical cases.

- No app changes needed for platform-specific optimization: for example, we can use the vendor's face detector in the camera on B2G, or a native-code version on B2G if there is any memory/performance concern.

- Cross-platform: the asm.js version can run on all platforms and browsers.

OfflineMediaContext:

OfflineMediaContext is for rendering/processing faster than real time. One of the most common use cases is saving processed video to a local file in video editor applications.
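The difference from real-time processing is mainly in scheduling: an offline context does not wait for the playback clock, so it can hand frames to the processing callback back to back and derive each frame's timestamp from its index. The sketch below simulates that idea in plain JavaScript. `processOffline` is a hypothetical helper, not the proposed OfflineMediaContext API (which this page does not define); its event fields only mirror `VideoProcessEvent` from the draft WebIDL above.

```javascript
// Hypothetical simulation of offline (faster than real-time) processing:
// every frame is processed immediately, with no timer pacing the loop, and
// playbackTime is computed from the frame index instead of a wall clock.
function processOffline(frames, fps, trackId, onvideoprocess) {
  var outputs = [];
  for (var i = 0; i < frames.length; i++) {
    var event = {
      trackId: trackId,
      playbackTime: i / fps,      // seconds, derived from the frame index
      inputImageBitmap: frames[i]
    };
    outputs.push(onvideoprocess(event));
  }
  return outputs;                 // e.g. processed frames to encode into a file
}

// Usage: "process" three dummy frames at 25 fps and record their timestamps.
var times = processOffline(["f0", "f1", "f2"], 25, "video-0", function (e) {
  return e.playbackTime;
});
console.log(times); // [ 0, 0.04, 0.08 ]
```

In real time the same three frames would take 120 ms of playback to arrive; offline, the loop finishes as soon as the callback work is done.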

Demo Snapshots

Unlimited Potentials

According to "Firefox OS User Research Northern India Findings" [3], camera-related features are one of the key table stakes. "Ways to provide photo & video editing tools" is exactly what this WebAPI is for. So if we can deliver compelling photo and video editing features, we can fulfill one of the needs of our target market.

[3] also mentions that one of the purchase motivators is "educate my kids". Features like PhotoMath can address this education need.

In the long term, if we can integrate text recognition with TTS (text to speech), we can help illiterate people read words or phrases. That would be a very useful feature.

Offline text translation in the camera might also be a killer application. Waygo and Word Lens are two such applications on Android and iOS.

Text selection in images is also an interesting feature for the browser. Project Naptha demos some potential functionality based on text selection in images.

Use Cases

- Digital Image Processing (DIP) for camera:
  - Face In, see Sony Face In
  - Augmented Reality, see IKEA AR
  - Camera Panorama
  - Fisheye camera
  - Comic Effect
  - In the long term, might need Android Camera HAL 3 to control the camera
  - Smile Snapshot
  - Gesture Snapshot
  - HDR
  - Video Stabilization
  - Bar code scanner
- Photo and video editing:
  - Video Editor, see WeVideo on Android
  - A faster way to build video editing tools
  - Lots of existing image effects can be used for photo and video editing
    - https://www.facebook.com/thanks
- Object Recognition in Image (not only on Firefox OS, but also in the browser):
  - Shopping Assistant, see Amazon Firefly
  - Face Detection/Tracking
  - Face Recognition
  - Text Recognition
  - Text Selection in Image
  - Text Inpainting
  - Image Segmentation
  - Text translation on image, see Waygo
- Duo Camera:
  - Natural Interaction (Gesture, Body Motion Tracking)
  - Interactive Foreground Extraction

and so on.

Some real-world applications we can refer to:

- Word Lens
- Waygo
- PhotoMath
- Cartoon Camera
- Photo Studio
- Magisto
- Adobe Photoshop Express
- Amazon (Firefly app)

Comparison

Canvas2DContext

Currently, you can apply video effects with Canvas2DContext [2]. See the demo in [4]; its source code looks like this:

    function frameConverter(video, canvas) {
      // Set up our frame converter
      this.video = video;
      this.viewport = canvas.getContext("2d");
      this.width = canvas.width;
      this.height = canvas.height;
      // Create the frame-buffer canvas
      this.framebuffer = document.createElement("canvas");
      this.framebuffer.width = this.width;
      this.framebuffer.height = this.height;
      this.ctx = this.framebuffer.getContext("2d");
      // Default video effect is blur
      this.effect = JSManipulate.blur;
      // This variable is used to pass ourselves to event callbacks
      var self = this;
      // Start rendering when the video is playing
      this.video.addEventListener("play", function () {
        self.render();
      }, false);
      // Change the image effect to be applied
      this.setEffect = function (effect) {
        if (effect in JSManipulate) {
          this.effect = JSManipulate[effect];
        }
      };
      // Rendering callback
      this.render = function () {
        if (this.video.paused || this.video.ended) {
          return;
        }
        this.renderFrame();
        var self = this;
        // Render every 10 ms
        setTimeout(function () {
          self.render();
        }, 10);
      };
      // Compute and display the next frame
      this.renderFrame = function () {
        // Acquire a video frame from the video element
        this.ctx.drawImage(this.video, 0, 0, this.video.videoWidth,
                           this.video.videoHeight, 0, 0, this.width, this.height);
        var data = this.ctx.getImageData(0, 0, this.width, this.height);
        // Apply image effect
        this.effect.filter(data, this.effect.defaultValues);
        // Render to viewport
        this.viewport.putImageData(data, 0, 0);
      };
    }

    // Initialization code
    var video = document.getElementById("video");
    var canvas = document.getElementById("canvas");
    var fc = new frameConverter(video, canvas);
    ...
    // Change the image effect applied to the video
    fc.setEffect('edge detection');

Basically, the idea is to use |drawImage| to acquire a frame from the video and draw it onto a canvas, then call |getImageData| to get the pixel data and process the image. After that, the computed data is put back onto the canvas with |putImageData| and displayed.

Compared to this approach, the proposed WebAPI has the following advantages:

- No polling mechanism (the canvas demo polls with setTimeout every 10 ms).

- A callback function processes every frame.
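For reference, the `filter` step in the canvas demo is ordinary per-pixel work on an ImageData object, the same kind of work a VideoWorker script would do on each frame. The sketch below is a simple Sobel edge detector written against the ImageData layout (`width`, `height`, RGBA `data`) and, like the demo's filter call, it modifies the data in place. It is not JSManipulate's implementation, whose edge filter may differ; `sobelFilter` is a hypothetical name.

```javascript
// Sobel edge detection applied in place to an ImageData-like object
// { width, height, data }, where data is a Uint8ClampedArray of RGBA bytes
// (the layout getImageData() returns). The gradient magnitude is written to
// R, G and B and alpha is set to 255. Border pixels, which lack a full 3x3
// neighbourhood, are set to black.
function sobelFilter(image) {
  var w = image.width, h = image.height, px = image.data;
  // Convert to luma first, so the pixels can be overwritten safely afterwards.
  var gray = new Float32Array(w * h);
  for (var i = 0; i < w * h; i++) {
    gray[i] = 0.299 * px[4 * i] + 0.587 * px[4 * i + 1] + 0.114 * px[4 * i + 2];
  }
  for (var y = 0; y < h; y++) {
    for (var x = 0; x < w; x++) {
      var c = y * w + x;
      var mag = 0;
      if (x > 0 && y > 0 && x < w - 1 && y < h - 1) {
        var tl = gray[c - w - 1], t = gray[c - w], tr = gray[c - w + 1];
        var l = gray[c - 1], r = gray[c + 1];
        var bl = gray[c + w - 1], b = gray[c + w], br = gray[c + w + 1];
        var gx = (tr + 2 * r + br) - (tl + 2 * l + bl); // horizontal gradient
        var gy = (bl + 2 * b + br) - (tl + 2 * t + tr); // vertical gradient
        mag = Math.sqrt(gx * gx + gy * gy);
      }
      // Uint8ClampedArray rounds and clamps the magnitude to 0..255.
      px[4 * c] = px[4 * c + 1] = px[4 * c + 2] = mag;
      px[4 * c + 3] = 255;
    }
  }
  return image;
}

// Usage: a 3x3 image whose right column is white and the rest black.
var img = { width: 3, height: 3, data: new Uint8ClampedArray(36) };
for (var row = 0; row < 3; row++) {
  var p = 4 * (row * 3 + 2);
  img.data[p] = img.data[p + 1] = img.data[p + 2] = img.data[p + 3] = 255;
}
sobelFilter(img);
console.log(img.data[4 * 4]); // centre pixel sits on a vertical edge: prints 255
```

In the demo, calling `sobelFilter(data)` where `this.effect.filter(data, ...)` is called would have the same effect, since `putImageData` then displays the modified pixels.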

node-opencv

https://github.com/peterbraden/node-opencv "OpenCV bindings for Node.js. OpenCV is the defacto computer vision library - by interfacing with it natively in node, we get powerful real time vision in js." Its sample code looks like this:

- You can use OpenCV to read in image files. Supported formats are listed in the OpenCV docs; JPEGs etc. are supported.

    cv.readImage(filename, function(err, mat){
      mat.convertGrayscale()
      mat.canny(5, 300)
      mat.houghLinesP()
    })

- If, however, you have a series of images and you wish to stream them into a stream of matrices, you can use an ImageStream:

    var s = new cv.ImageStream()
    s.on('data', function(matrix){
      matrix.detectObject(haar_cascade_xml, opts, function(err, matches){})
    })
    ardrone.createPngStream().pipe(s);

opencvjs

https://github.com/blittle/opencvjs

It is a project to compile OpenCV to asm.js. It might be a dead project now.

Project Naptha

"Project Naptha automatically applies state-of-the-art computer vision algorithms on every image you see while browsing the web. The result is a seamless and intuitive experience, where you can highlight as well as copy and paste and even edit and translate the text formerly trapped within an image." Quoted from http://projectnaptha.com/ .

How does it work?

Excerpt from Project Naptha: The primary feature of Project Naptha is actually text detection rather than optical character recognition. The author implemented a text detection algorithm called the Stroke Width Transform, invented by Microsoft Research in 2008, which identifies regions of text in a language-agnostic manner and runs in a Web Worker. Once a user begins to select some text, it scrambles to run character recognition algorithms to determine what exactly is being selected. The default OCR engine is a built-in pure-JavaScript port of the open-source Ocrad OCR engine. There is also the option of sending the selected region to a cloud-based text recognition service powered by Tesseract, Google's (formerly HP's) award-winning open-source OCR engine, which supports dozens of languages and uses an advanced language model.

Fixme List(Known Issues)

- OpenCV can't be built with STLport; it only supports GNU STL.
- B2G can't be built with GNU STL.
- Text detection and recognition can't run on B2G.
- Some OpenCV APIs take STL types as arguments. The mismatch between STL implementations causes runtime errors.
- Tesseract-OCR build
  - We use a pre-installed Tesseract-OCR now. Maybe we should support building Tesseract-OCR from source.
- Improve the precision of text recognition.
  - The achievable precision should be higher than in my rough prototype; it needs improvement.
- Separate OCR initialization.
  - Prevent redundant initialization.
- OpenCL integration in Gecko is not done yet.
  - OpenCV has a lot of OpenCL integration. We should take advantage of it.
- Canvas2DContext and WebGL can't run in workers.
  - Need bug 801176 and bug 709490 landed.
- Need ImageBitmap for VideoProcessEvent.
  - Need bug 1044102 landed.

https://bugzilla.mozilla.org/show_bug.cgi?id=801176

https://bugzilla.mozilla.org/show_bug.cgi?id=709490

https://bugzilla.mozilla.org/show_bug.cgi?id=1044102

Questions

- As an OS/browser provider, should we focus on the image processing/computer vision area?

- Should we propose a library/API for video processing?

- Is there a better idea than this one?

- Prioritize sub-projects.

- Does anyone want to join this project?

Conclusion

This project is not a JavaScript API version of OpenCV. It is a way to let web developers do image processing and computer vision work more easily. It can become a huge project if we want. At least five sub-projects can be based on this work:

- Photo/Video editing tools

- Camera Effect

- Face recognition

- Text recognition(copy/paste/search/translate text in image)

- Shopping applications like the Amazon app.

This project might be a chance to build some unique features on Firefox OS.

References

- [1]: WebAudio Spec, http://www.w3.org/TR/webaudio/

- [2]: Canvas 2D Context, http://www.w3.org/TR/2dcontext

- [3]: "Firefox OS User Research Northern India Findings", https://docs.google.com/a/mozilla.com/file/d/0B9VT90hlMtdSLWhKNTV1b3pHTnM

- [4]: "Frame by frame video effects using HTML5 canvas and video", http://www.kaizou.org/2012/09/frame-by-frame-video-effects-using-html5-and/

Acknowledgements

The whole idea of adopting WebAudio as the reference design for this project came from a conversation with John Lin. Thanks to Robert O'Callahan for his great feedback and comments. Thanks to John Lin and Chia-jung Hung for their useful suggestions and ideas. Also, big thanks to my team members who helped me debug the code. Finally, I want to thank my managers (Steven Lee, Ken Chang, and James Ho) for giving me the space to do this work.

About Author

My name is Chia-hung Tai. I am a senior software engineer in the Mozilla Taipei office, working on Firefox OS multimedia. Before this job, I had some experience in OpenCL, NLP (Natural Language Processing), data mining, and machine learning. My IRC nickname is ctai. You can find me in the #media, #mozilla-taiwan, and #b2g channels, or reach me by email (ctai at mozilla dot com).