BugzillaAutoLanding

Project Description

This project will create and deploy a set of tools that will:

- grab patches from a bug in Bugzilla

- land patches on try (or other specified branch(es))

- poll for the results of automated pushes and, depending on the branch specified:

  - report the results back to the bug

  - on success, continue to push to another branch (e.g. try, then mozilla-inbound)

  - be able to automatically back out a push if a failure is detected

Goals

- A set of smaller tools, each of which can be part of a larger script that runs "autolanding" to try and other hg.m.o repos that build on every checkin

- Tools that can be used from the command line and via API calls

- Tools to control the process - lots of toggles to go back to manual sheriffing (Global KillSwitch)

- Using our build/test resources wisely and not increasing the load so much that try is unusable for developers to work on their patches prior to automated/assisted landings

- Streamlining as much as possible the try server and trunk landing process without removing the human interaction with the build/test/perf results.

Non-Goals

- Replacing humans in the landing process

- Handling performance regressions

- Providing the best possible UI

- Going to extreme lengths to support auto-landing patches that do not follow the rules the tool requires

People

- Lukas Blakk

- Marc Jessome

Designing the System

A simple survey on try usage (survey, results) was created and advertised to find out how developers currently use try and what they think of the autolanding workflow. We received 52 responses. The survey caught some things we had missed, such as checking LDAP authentication before pushing anything from Bugzilla, and it also provided new observations on the try workflow. Many developers said they would find landing to try via Bugzilla to be 'more work' than the current push-to-try (using try syntax). We are taking that into account in our design and are shifting the primary goal to landing on trunk, rather than just posting try results to the bug. Posting try results will instead be handled through try syntax: if a bug is specified, the results can be posted to that bug, and email notification can be turned off if desired.

API

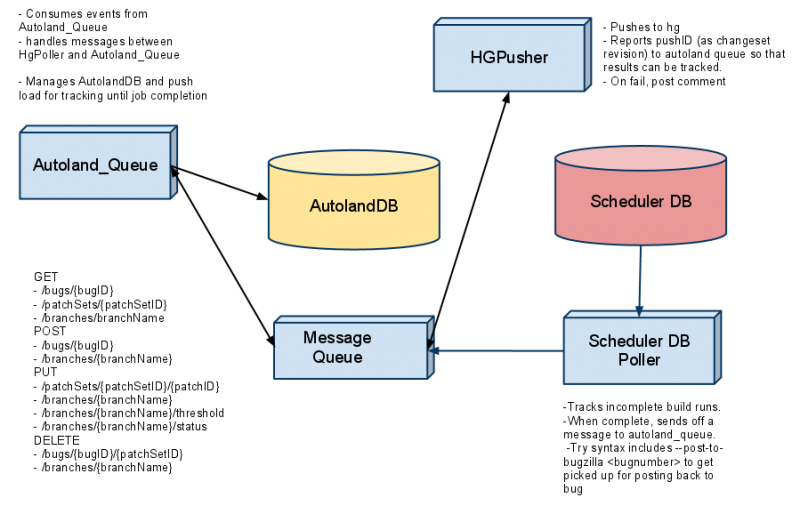

Architecture

REST Interface

List of request methods, urls, and parameters:

GET /bugs/{bugID} -> get bug patchsets

GET /patchSets/{patchSetID} -> get patchset information

GET /branches/{branchName} -> get branch information

POST /bugs/{bugID} -> create empty patchset

POST /branches/{branchName} -> create a new branch

PUT /patchSets/{patchSetID}/{patchID} -> add patch to patchset

PUT /branches/{branchName} -> update branch

PUT /branches/{branchName}/threshold -> set branch threshold

PUT /branches/{branchName}/status -> set status enabled/disabled

DELETE /patchSets/{patchSetID} -> delete a patchset (if not processing)

DELETE /branches/{branchName} -> delete branch from db
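To make the URL scheme above concrete, here is a minimal Python sketch of how a server might dispatch these requests. The route table, the action names and the `resolve` helper are hypothetical; only the methods and paths come from the list above. Each pattern must match the whole path, so `/branches/try/threshold` cannot be mistaken for `/branches/{branchName}`.

```python
import re

# Hypothetical route table mirroring the REST interface listed above.
# Each entry maps (HTTP method, full-path regex) to an illustrative action name.
ROUTES = [
    ("GET", r"/bugs/(?P<bug_id>\d+)", "get_bug_patchsets"),
    ("GET", r"/patchSets/(?P<patchset_id>\d+)", "get_patchset"),
    ("GET", r"/branches/(?P<branch>[^/]+)", "get_branch"),
    ("POST", r"/bugs/(?P<bug_id>\d+)", "create_patchset"),
    ("POST", r"/branches/(?P<branch>[^/]+)", "create_branch"),
    ("PUT", r"/patchSets/(?P<patchset_id>\d+)/(?P<patch_id>\d+)", "add_patch"),
    ("PUT", r"/branches/(?P<branch>[^/]+)", "update_branch"),
    ("PUT", r"/branches/(?P<branch>[^/]+)/threshold", "set_threshold"),
    ("PUT", r"/branches/(?P<branch>[^/]+)/status", "set_status"),
    ("DELETE", r"/patchSets/(?P<patchset_id>\d+)", "delete_patchset"),
    ("DELETE", r"/branches/(?P<branch>[^/]+)", "delete_branch"),
]


def resolve(method, path):
    """Return (action, url_params) for a request, or None if no route matches."""
    for route_method, pattern, action in ROUTES:
        if route_method != method:
            continue
        # fullmatch: "[^/]+" cannot swallow "/threshold" or "/status".
        match = re.fullmatch(pattern, path)
        if match:
            return action, match.groupdict()
    return None
```

URL parameters come back as strings; a handler would convert `bug_id` and the other IDs to integers before querying the database.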

Object Definitions

PatchSets:

- `id` int(11) NOT NULL AUTO_INCREMENT

- `bug_id` int(11) DEFAULT NULL

- `patches` text

- `revision` text

- `branch` text

- `try_run` int(11) DEFAULT NULL

- `creation_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP

- `push_time` timestamp NULL DEFAULT NULL

- `completion_time` timestamp NULL DEFAULT NULL

Branches:

- `id` int(11) NOT NULL AUTO_INCREMENT

- `name` text

- `repo_url` text

- `threshold` int(11) DEFAULT NULL

- `status` text
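For local testing, the two tables can be approximated in SQLite. This is a sketch under assumptions: the table names `patch_sets` and `branches`, the `create_db` and `pending_patchsets` helpers, and the comma-separated format for `patches` are hypothetical. MySQL's `int(11) NOT NULL AUTO_INCREMENT` is mapped to SQLite's `INTEGER PRIMARY KEY AUTOINCREMENT`.

```python
import sqlite3

# SQLite stand-in for the MySQL schema above; table names are hypothetical.
SCHEMA = """
CREATE TABLE patch_sets (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    bug_id INTEGER DEFAULT NULL,
    patches TEXT,               -- assumed: comma-separated attachment IDs
    revision TEXT,
    branch TEXT,
    try_run INTEGER DEFAULT NULL,
    creation_time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
    push_time TIMESTAMP DEFAULT NULL,
    completion_time TIMESTAMP DEFAULT NULL
);
CREATE TABLE branches (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    name TEXT,
    repo_url TEXT,
    threshold INTEGER DEFAULT NULL,
    status TEXT
);
"""


def create_db(path=":memory:"):
    """Open a database and create both tables."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def pending_patchsets(conn, branch):
    """Patchsets for a branch that have not been pushed yet, oldest first."""
    rows = conn.execute(
        "SELECT id, bug_id, patches FROM patch_sets "
        "WHERE branch = ? AND push_time IS NULL ORDER BY id",
        (branch,),
    )
    return rows.fetchall()
```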

Project Timeline

- Tracking bug: bug 657828

| Component Name | Bug(s) | Assigned To | Description | Start Date (est.) | Completion Date (est.) | On Track | Updates |
|---|---|---|---|---|---|---|---|
| HgPusher | bug 657832 | Marc | Details | Monday May 23rd | Friday June 10th | No | Integrating LDAP usage |
| SchedulerDBPoller | bug 430942 | Lukas | Details | Monday May 23rd | Friday June 24th | No | Needs better testing on bug commenting as currently it posts twice (or more times) to bugs |
| BugCommenter | bug 659167 | Marc | Details | Monday June 20th | Friday July 8th | Yes | Complete |
| AutolandDB | bug 659166 | Marc | Details | Monday June 20th | Friday July 22nd | Yes | |
| MessageQueue | bug 659166 | Marc | Details | Monday June 13th | Friday July 22nd | Yes | Module complete, needs to be used by all of project. Update Sept 20, 2011: We lose every second message, need to debug that. |
| LDAP Tool | bug 666860 | Marc | Details | Monday July 11th | Friday July 29th | Yes | Complete to fill needs of this project |

| LDAP Tool | bug 666860 | Marc | Details | Monday July 11th | Friday July 29th | Yes | Complete to fill needs of this project |

Testing

June 20th - July 22nd

- Set up on staging masters as per bug 661634, running the automation against the sandbox Bugzilla with autolanding and logging pointed at staging repos, to test the individual components and watch for issues in the message queue and the overall system.

Testing Round 2

Week of Dec 5 - 9

- Another round of testing the life cycle of a patch attached to a sandbox bug, using a real push to try

What we need to ensure:

- Not losing every second message to mq

- No extraneous or unexpected postings to the bug from any modules

- Push to try should not require full headers on patches

- Whiteboard syntax with or without an attachment ID, i.e. [autoland-try] or [autoland-try,1234]

- Retrying oranges

- (feature I would like to have) Report back to bug if builds are canceled through self-serve

Week of Dec 12 - 16

We didn't get a full run going on autoland-staging last week; still working out kinks in schedulerdbpoller.

TODO:

- bug post attempts to non-existent or blocked bugs need to be handled so that the revision is kicked out of the queue and doesn't get re-posted

- working on 400 Bad Request errors in test_bz_utils right now

Week of Dec 19 - 23

- working on thresholds, queue messages, setting up autoland-staging01 to run autoland_queue.py and hgpusher.py

- filed bug 712360 to address LDAP connection errors

- have both modules working and speaking to each other, but hit new bugs:

  - hgpusher.py has to be started before autoland_queue.py so that the patchset message succeeds the first time we find an autoland tag

  - need to not put in a time when a 'bad msg' is returned on an attempt to hgpusher

  - the message from hgpusher to autoland is broken right now

  - thresholds and the use of 'mozilla-central' as the branch for try are complicated and require review and updating

  - an hg clone error doesn't return a message to autoland_queue and doesn't retry

remote: Permission denied (publickey,gssapi-with-mic).

abort: no suitable response from remote hg!

[Clone] error cloning 'ssh://hg.mozilla.org/try' into clean repository: Command '['hg', 'clone', u'ssh://hg.mozilla.org/try', u'/root/autoland-env/tools/scripts/autoland/build/clean/try']' returned non-zero exit status 255

[HgPusher] Clone error...

- Why is hgpusher picking up stray autoland.db messages after a blowout error?

(autoland-env)[root@autoland-staging01 autoland]# python hgpusher.py

[RabbitMQ] Established connection to localhost.

[HgPusher] Erroneous message: {u'_meta': {u'queue': u'autoland', 'received_time': '2011-12-21 02:51:24.446963', u'sent_time': u'2011-12-21 02:46:26.146165', u'routing_key': [u'autoland.db'], u'exchange': u'autoland'}, u'payload': {u'action': u'patchset.apply', u'patchsetid': 66, u'type': u'error'}}
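The hg clone failure above is neither retried nor reported back to autoland_queue. A minimal sketch of a retry wrapper using only the standard library follows; `run_with_retries` and its defaults are hypothetical, not existing HgPusher code. Retrying only helps with transient failures: a `Permission denied (publickey...)` error like the one logged will fail on every attempt, which is why the function returns `False` so the caller can send a failure message instead of stopping silently.

```python
import subprocess
import time


def run_with_retries(cmd, retries=3, delay=5.0):
    """Run cmd (a list of arguments), retrying on a non-zero exit status.

    Hypothetical helper. Returns True as soon as one attempt succeeds and
    False once every attempt has failed, so the caller can report the
    failure (e.g. back to autoland_queue) rather than dying silently.
    """
    for attempt in range(1, retries + 1):
        try:
            subprocess.check_call(cmd)
            return True
        except subprocess.CalledProcessError as err:
            print("[HgPusher] attempt %d/%d failed with exit status %d"
                  % (attempt, retries, err.returncode))
            if attempt < retries:
                time.sleep(delay)  # back off before the next attempt
    return False
```

A call would look like `run_with_retries(["hg", "clone", "ssh://hg.mozilla.org/try", "build/clean/try"])`.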

Week of Dec 26 - 30

- bug fixes

- reworking queues to simplify, use direct topic

- completed autoland_queue <-> hgpusher communication

- refactored to be able to do try as its own branch

- added in a couple more variables to the patchsets (author, retries) and removed to_branch

- thresholds fixed so that try and mozilla-central aren't conflicting with each other

- schedulerdbpoller and autoland_queue both push things out if timed out or can't post to bug
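schedulerdbpoller and autoland_queue both drop work that times out. One way to structure that is sketched below under assumptions: `PendingRevisions` is a hypothetical in-memory class (the design notes describe a local cache file for incomplete runs, and persisting to disk is left out), and timestamps are plain seconds so the behaviour is easy to test.

```python
import time


class PendingRevisions:
    """Track revisions still waiting for results; expire ones that time out.

    Hypothetical helper: maps revision -> time it was first seen (seconds).
    """

    def __init__(self, timeout_seconds):
        self.timeout = timeout_seconds
        self._seen = {}

    def add(self, revision, now=None):
        # Keep the first-seen time if the revision is already tracked.
        self._seen.setdefault(revision, time.time() if now is None else now)

    def complete(self, revision):
        """Forget a revision whose results have arrived."""
        self._seen.pop(revision, None)

    def expire(self, now=None):
        """Remove and return (sorted) revisions older than the timeout."""
        now = time.time() if now is None else now
        expired = [rev for rev, seen in self._seen.items()
                   if now - seen > self.timeout]
        for rev in expired:
            del self._seen[rev]
        return sorted(expired)

    def __contains__(self, revision):
        return revision in self._seen
```

Whatever `expire` returns is what would be "pushed out" and, where possible, reported to the bug as timed out.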

Still to do

- complete end-to-end test running schedulerdbpoller on autoland-staging01

- first attempt: message of completion from schedulerdbpoller got nabbed by hgpusher

- hgpusher still has to be started before autoland_queue (why???)

- more code clean up and review

- test on an actual Bugzilla bug (check the attachment name as custom syntax)

Deployment

Go live to a small subset of developers (ehsan plus one or two others) and watch for issues using live data from actual pushes and the DB. Then go live to everyone, with docs and usage info broadcast widely.

TO DO

- Monitor load & machine resources/wait times

- Confirm bugs hit from previous attempts are no longer reproducible

- Write up developer documentation on how to use the current system

- Write blog posts and promote the new functionality as well as explaining where this is heading (automated landing across release branches)

Bugs hit

- Double bug posting

- Queue messages getting lost

API

Write and enable an API that gives sheriffs access to this system's functionality, including a Kill Switch and the ability to override.

Post-Deployment

Outreach

In order to get a lot of eyes (and users) we have a multi-step outreach process to inform developers of this system.

- Blog posts

- Tweeting

- Yammer posts

- Monday meeting lightning talk

- Brown Bag

- File a tracking bug for all bugs filed about any module issues

- Documentation in wiki.m.o (and possibly in MDN)

Component Descriptions and Implementation Notes

BugzillaEventTrigger

A polling script that will pull all bugs from Bugzilla with whiteboard tags matching [autoland-$branch] or [autoland-$branch:$patchID:$patchID].
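A sketch of how the poller might extract the branch and attachment IDs from a whiteboard string. The `parse_whiteboard` helper and the regular expression are assumptions; the expression also accepts the comma form ([autoland-try,1234]) that appears in the testing notes, since the separator does not look settled.

```python
import re

# Matches [autoland-$branch] and [autoland-$branch:$patchID:$patchID...].
# Assumption: the comma separator from the testing notes is accepted too.
TAG_RE = re.compile(r"\[autoland-(?P<branch>[A-Za-z0-9._-]+)(?P<ids>(?:[:,]\d+)*)\]")


def parse_whiteboard(whiteboard):
    """Return a list of (branch, [patch_ids]) for every autoland tag found."""
    results = []
    for match in TAG_RE.finditer(whiteboard):
        # ":1234:2345" -> ["", "1234", "2345"]; empty pieces are dropped.
        ids = [int(i) for i in re.split(r"[:,]", match.group("ids")) if i]
        results.append((match.group("branch"), ids))
    return results
```

An empty ID list means "use the default patch selection"; a non-empty list is the explicit override.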

The default behaviour is to take [autoland-$branch] and gather all non-obsolete, non-r- patches into a patchset that is then pushed to $branch; the results are returned and analyzed for further actions (such as try -> mozilla-{central,inbound}). To override this, or to search for regressions, explicit patch IDs can be added to create a custom patchset for an autoland run.
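The default selection rule can be sketched as a filter over the bug's attachments. The attachment dictionary shape (`is_patch`, `is_obsolete`, `flags`) is an assumption loosely modelled on Bugzilla attachment data, and `select_patches` is hypothetical. Ordering by description follows the design note about landing "part 1", "part 2" in alphabetical order.

```python
def select_patches(attachments, patch_ids=None):
    """Pick the attachments that make up an autoland patchset.

    attachments: list of dicts, hypothetically shaped like
        {"id": 1234, "description": "part 1", "is_patch": True,
         "is_obsolete": False, "flags": [{"name": "review", "status": "+"}]}
    patch_ids: explicit IDs from [autoland-$branch:$id:...]; when given,
        exactly those attachments are used, in the order listed.
    """
    by_id = {att["id"]: att for att in attachments}
    if patch_ids:
        missing = [pid for pid in patch_ids if pid not in by_id]
        if missing:
            raise ValueError("attachment(s) not on bug: %s" % missing)
        return [by_id[pid] for pid in patch_ids]

    def review_denied(att):
        return any(flag.get("name") == "review" and flag.get("status") == "-"
                   for flag in att.get("flags", []))

    chosen = [att for att in attachments
              if att.get("is_patch") and not att.get("is_obsolete")
              and not review_denied(att)]
    # Alphabetical by description so "part 1", "part 2", ... apply in order.
    return sorted(chosen, key=lambda att: att.get("description", ""))
```

Plain alphabetical order puts "part 10" before "part 2", so bugs with ten or more parts would need a natural-sort key.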

In a discussion with Mconnor, the suggestion for user interaction with autoland was to have radio buttons next to each attachment (with a bit of select all/none JS) so that you could pick your patches for autoland, plus an input field and submit button to send something like:

[autoland-try:1234:2345:3456] (greasemonkey script for picking patches) [autoland-mozilla-inbound:1234]

to the queue, signifying the final push destination and the attachment IDs to use for the patchset. Whether this can be done with Bugzilla needs investigating, so for now we are implementing it with whiteboard tags and polling the Bugzilla API for those tags.

HgPusher

Accepts a branch and patch ID(s), clones the branch, applies the patches, and reports back with the result of the push (the revision on success, or FAIL). It can also handle the special case of doing a backout on a branch.

- Input(s): Bugzilla Messages, command line

- Output(s): BugCommenter, hg.mozilla.org, AutolandDB, stdout
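HgPusher's clone, apply and push sequence can be expressed as a list of hg command lines, which is handy for dry runs and tests. This is a sketch: `build_hg_commands` is hypothetical, and although `hg clone`, `hg -R ... import` and `hg push -f` are standard Mercurial commands and options, the real tool may apply patches differently (for example, for patches without full headers). Backouts are not covered.

```python
def build_hg_commands(repo_url, workdir, patch_files, push_url=None):
    """Return (but do not run) the hg commands for one autoland push.

    Hypothetical helper. Clones repo_url into workdir, imports each patch
    file as a commit, then pushes to push_url (defaults to repo_url).
    """
    push_url = push_url or repo_url
    commands = [["hg", "clone", repo_url, workdir]]
    for patch in patch_files:
        # hg import applies the patch and commits it in one step.
        commands.append(["hg", "-R", workdir, "import", patch])
    # -f allows pushing a new head, which pushes to try usually create.
    commands.append(["hg", "-R", workdir, "push", "-f", push_url])
    return commands
```

Each entry can be passed to `subprocess.check_call`, stopping at the first failure so the push is reported as FAIL.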

SchedulerDBPoller

Regularly polls the scheduler DB (on a timer) and checks for completed buildruns for any actively monitored branches. There should be a list to check against for what branches need to be watched. The incomplete runs from the last N units of time are kept by revision in a local cache file to check for completion. When an observed branch has a completed buildrun the SchedulerDBPoller can check two things:

- if try syntax is present (and branch is try) check for a --post-to-bug flag and trigger BugCommenter if flag and bug number(s) are present

- run that revision against the AutolandDB to see whether it was triggered by landing automation. If so, PUT the results and trigger the BugCommenter; otherwise discard the completed revision

- Input(s): command line, AutolandDB

- Output(s): BugCommenter, stdout
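Checking try syntax for `--post-to-bug` can reuse argparse, since try syntax is itself a command-line-style string. The exact flag format is an assumption here (a comma-separated list of bug numbers after `--post-to-bug`), as is the helper name `parse_post_to_bug`. Other try options such as `-b` and `-p` are ignored via `parse_known_args`.

```python
import argparse
import shlex


def parse_post_to_bug(commit_message):
    """Return the bug numbers given via --post-to-bug in try syntax, or []."""
    if "try:" not in commit_message:
        return []
    syntax = commit_message.split("try:", 1)[1]
    parser = argparse.ArgumentParser(add_help=False)
    # Hypothetical flag format: --post-to-bug 1234,5678
    parser.add_argument("--post-to-bug", dest="bugs", default="")
    try:
        # parse_known_args leaves -b/-p/-u/-t and their values in the extras.
        args, _extras = parser.parse_known_args(shlex.split(syntax))
    except (SystemExit, ValueError):
        # SystemExit: flag given without a value; ValueError: bad quoting.
        return []
    return [int(bug) for bug in args.bugs.split(",") if bug.strip().isdigit()]
```

An empty result means SchedulerDBPoller should not trigger BugCommenter for that revision.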

BugCommenter

When called with a bug number and a comment, posts to the bug and returns the comment ID along with a result (SUCCESS/FAIL). It should retry a few times in case of network issues. Open question: should it write to a log file that is watched by Nagios?

- Input(s): HgPusher, SchedulerDBPoller

- Output(s): Bugzilla, stdout

Note: Let's have a couple of template options here for what is posted to the bug, depending on whether it's a branch or try
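A sketch of BugCommenter's retry loop together with the two comment templates suggested in the note. The `post` callable stands in for whatever Bugzilla client is used; it is assumed to return a comment ID or raise `IOError` on network trouble. The template wording and the helper names are hypothetical.

```python
import time

# Hypothetical templates; the wording is illustrative only.
TEMPLATES = {
    "try": "Try run for %(revision)s is complete.\nResults: %(summary)s",
    "branch": "Autoland pushed %(revision)s to %(branch)s.\nResults: %(summary)s",
}


def render_comment(branch, revision, summary):
    """Pick the try or branch template and fill it in."""
    key = "try" if branch == "try" else "branch"
    return TEMPLATES[key] % {"branch": branch, "revision": revision,
                             "summary": summary}


def post_with_retries(post, bug_id, comment, retries=3, delay=2.0):
    """Call post(bug_id, comment) until it succeeds or retries run out.

    Returns ("SUCCESS", comment_id) or ("FAIL", None).
    """
    for attempt in range(retries):
        try:
            return "SUCCESS", post(bug_id, comment)
        except IOError:
            # Treated as a transient network problem: wait and try again.
            if attempt < retries - 1:
                time.sleep(delay)
    return "FAIL", None
```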

MessageQueue

To-Do: read up on RabbitMQ and message queues. The MessageQueue listens to messages from BugzillaScraper (or Pulse events?) and broadcasts information to HgPusher, BugCommenter, and AutolandDB.

- Goal here would be to integrate with existing RMQ in build infrastructure, be able to deploy the components on any masters in the build network to share load and for them to be able to send/receive messages via RMQ to see an autoland cycle through to completion
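The stray `autoland.db` message that hgpusher picked up (see the testing log) suggests consumers should check who a message is addressed to before acting on it. A minimal sketch, assuming the message layout seen in that log (a `_meta` dict with a `routing_key` list and a `payload` dict with an `action`); `handle_message` and the `hgpusher` routing key are hypothetical. The primary fix is still binding each consumer's queue only to its own routing key on the exchange; this check only guards against misrouted messages.

```python
import json


def handle_message(raw, expected_key, handlers):
    """Dispatch one queue message, ignoring ones meant for another consumer.

    raw: JSON text with "_meta" and "payload" keys, as in the logged messages.
    expected_key: the routing key this consumer owns (hypothetical name).
    handlers: maps a payload "action" to a callable taking the payload.
    Returns the handler's result, or None if the message was ignored.
    """
    msg = json.loads(raw)
    keys = msg.get("_meta", {}).get("routing_key", [])
    if isinstance(keys, str):
        keys = [keys]
    if expected_key not in keys:
        return None  # not addressed to this consumer: do not act on it
    payload = msg.get("payload", {})
    handler = handlers.get(payload.get("action"))
    if handler is None:
        return None  # unknown action: ignore rather than crash
    return handler(payload)
```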

AutolandDB

Keep track of the state of an autoland-triggered push from start to finish.
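One way to derive a patchset's state from the PatchSets timestamps defined earlier: with no `push_time` it is queued, with a `push_time` but no `completion_time` it is waiting for results, and with a `completion_time` it is done. The state names and helper functions below are hypothetical, and rows are plain dicts rather than database records.

```python
from datetime import datetime, timezone


def patchset_state(row):
    """Map a PatchSets row (as a dict) to a hypothetical lifecycle state."""
    if row.get("completion_time") is not None:
        return "complete"
    if row.get("push_time") is not None:
        return "awaiting_results"  # pushed; SchedulerDBPoller not done yet
    return "queued"


def _now():
    return datetime.now(timezone.utc)


def mark_pushed(row, revision, when=None):
    """Record a successful push by HgPusher."""
    row["revision"] = revision
    row["push_time"] = when or _now()


def mark_complete(row, when=None):
    """Record that all build/test results are in."""
    if row.get("push_time") is None:
        raise ValueError("patchset %s was never pushed" % row.get("id"))
    row["completion_time"] = when or _now()
```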

LDAPTool

Checks the hg permission level of the submitter and compares the Bugzilla email to the LDAP email.

Notes

- A set of tools that automate each step of this work, any of which can be turned off on demand

- Sheriff needs to be able to turn off the auto-landing altogether

- No limit in workflow -- sheriff should be able to override the queue to auto-land a priority patch

- can have oranges, but sheriff could 'force' the landing anyway

- We need to have stages:

  - (TBD): how to deal with bugzilla

  - HgPusher: deals with the hg stuff (merging patches, grabbing patches from bugs, what happens if any steps fail)

  - SchedulerDB Poller: deals with a way of getting results from try/m-c to make sure we have the data on whether to proceed and/or leave comment in the bug regarding outcomes

  - (fourth, optional/desired/future) how to monitor perf results and get an idea of when it's ok to push or flag on those results

- Be strict in accepting things from humans

- Rules for automated landings

- If they forget a rule, they get a comment - "Step X failed (reason)"

- For bugs with multiple patches to be landed together, take the reviewed, non-obsolete patches in alphabetical order

- Common for devs to name things "part 1", "part 2"

- Don't need to be lenient toward human mistakes

- Need correct descriptions, author information, header of patch -- if it doesn't include a header, try syntax

- Look at the "checkin-needed" box, grab all non-obsolete patches, and if they have the right message, push all of them. If all those steps succeed you get the try push; otherwise fail with a comment. Clear "checkin-needed" from the bug

- Is the bug the right place? It's the public record for the issue, so yes - emailing the assignee takes the information away from the record

- Specialized tool for patch queues would be nice (not in the scope of this project!)

- Try syntax presence == try on try_repo but NEVER auto-land on trunk

- Developers will be encouraged to watch perf results on trunk, since try perf is not really useful information

- Bot has to watch m-c after it lands something there, results come back to the bug

- Merge tracking for commits (what was auto-landed and what was not)

- Merging between two auto-landed pushes

- We have to watch these to know when all the results are in, and what was successful/not (scheduler db has this information for us to get)

- Autoland Message Queue - consumes information from BugzillaScraper (pulse?) as event triggerer for HgPusher, BugCommenter
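The kill switch and sheriff override described in these notes could live in the queue itself. In this sketch, `AutolandQueue` is hypothetical: a global `enabled` flag acts as the Global KillSwitch, `disabled_branches` mirrors the per-branch enabled/disabled status in the REST interface, and sheriff-prioritised patchsets sort ahead of everything else while ordinary ones stay first-in, first-out.

```python
import heapq
import itertools


class AutolandQueue:
    """Hypothetical queue with a global kill switch and sheriff priority."""

    def __init__(self):
        self.enabled = True              # Global KillSwitch
        self.disabled_branches = set()   # per-branch switch
        self._heap = []
        self._counter = itertools.count()  # FIFO tie-breaker

    def add(self, patchset_id, branch, priority=False):
        # Rank 0 (sheriff override) sorts before rank 1 (normal).
        rank = 0 if priority else 1
        heapq.heappush(self._heap,
                       (rank, next(self._counter), patchset_id, branch))

    def next_ready(self):
        """Pop the next patchset whose branch is enabled, or None."""
        if not self.enabled:
            return None
        skipped, result = [], None
        while self._heap:
            item = heapq.heappop(self._heap)
            if item[3] in self.disabled_branches:
                skipped.append(item)  # keep it for when the branch reopens
                continue
            result = (item[2], item[3])
            break
        for item in skipped:
            heapq.heappush(self._heap, item)
        return result
```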

Security

- Must ensure that the patch is attached by someone with L1 hg access, so that we are auto-landing patches from authors with the same level of security as current push-to-try

- Why L1? This is about pushing to mozilla-central, so I think we should check for L3 access.

- L1 to push to try, then reviewer should have L3 for autolanding to trunk
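The access rule above (L1 to push to try, an L3 reviewer for autolanding to trunk), combined with LDAPTool's email comparison, can be sketched as a single check. `may_autoland` is hypothetical and takes the commit levels as plain integers; looking them up in LDAP (for example with python-ldap) is not shown.

```python
def may_autoland(branch, bugzilla_email, ldap_email, submitter_level,
                 reviewer_level=0):
    """Hypothetical permission check for one autoland request."""
    # The Bugzilla account must correspond to the LDAP account we checked.
    if bugzilla_email.strip().lower() != ldap_email.strip().lower():
        return False
    # L1 is the same bar as the current push-to-try.
    if submitter_level < 1:
        return False
    if branch == "try":
        return True
    # Landing anywhere else (trunk) requires a reviewer with L3 access.
    return reviewer_level >= 3
```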

Deployment Coordination with IT

Talk with Amy and Zandr about how to deploy this without a single point of failure and with consideration for load and resources. We want a production-level system here, so what will that require? Can this live on cruncher for production?

Setting up the staging masters

First run, August 2011

On autoland-staging01 I have done the following:

- sudo yum install hg

- checked out my tools repo

- Installed Python 2.6.7 from source

- ./configure, make (yum install zlib-devel, readline-devel, bzip2-devel), make install

- added /usr/local/bin to the PATH in .bashrc to use 2.6 as default Python

- Set up a virtualenv using these notes

- in the virtualenv installed:

  - argparse

  - simplejson

  - sqlalchemy

  - mysql-python

- Also required (but not currently installed):

  - python-ldap for ldap utils

  - pika for rabbitmq communication with python

  - mock for tests

Second run Dec 2011

- Cloned a read-only copy of git://github.com/lsblakk/tools.git to /root/autoland-env on autoland-staging01

- copied in config.ini.prod values from /root/lsblakk-tools/scripts/autoland and made a backup of the config.ini, then did a symlink from config.ini.prod to config.ini

- pip install urllib3 (to get urllib2) - also had to add import urllib2 to autoland_queue.py

- rebooted autoland-staging01 to kill any stray processes of mq/rabbit

- start rabbit server:

su rabbitmq -s /bin/sh -c /usr/lib/rabbitmq/bin/rabbitmq-server

- JSON errors on request call for bz poller

- fixed calls to bz_utils __init__ in both autoland_queue and hg_pusher

- now I can start both and have their queues listening but autoland_queue is reading my bug as not having patches, trying to get it to work with :attachment_id in the tag and there's some patch list/splitting errors

- yum package of rabbitmq-server was 2.2 and to get rabbitmq-plugins I needed a newer version so did:

yum remove rabbitmq-server

rpm -ivh http://www.rabbitmq.com/releases/rabbitmq-server/v2.7.1/rabbitmq-server-2.7.1-1.noarch.rpm