Abstract Interpretation

This is a work in progress to explain the static program analysis technique of Abstract Interpretation, which is basic background for using the Treehydra abstract interpretation libraries.

Background: Program Analysis

This is just some boring background material. Feel free to skip to Introduction on first reading.

Program analysis refers to algorithms for discovering facts about the behavior of programs. Examples include finding which variables are constant, whether null pointer errors can occur, or whether every "lock" operation is followed by exactly one "unlock". Program analysis is a basic technology with many applications, especially compiler optimizations, bug finding, and security auditing.

Program analysis has two major divisions: static analysis and dynamic analysis. Dynamic analysis basically means testing: you run the program on one or more test cases and observe its behavior. For example, you can run the program and see if you get any null pointer errors. The primary advantage of dynamic analysis is that you can get exact information about the program behavior, because you are actually running it. The disadvantage is that you get information only for the test inputs you provide, and only for the code paths exercised by those test inputs. Thus, dynamic analysis cannot prove that an optimization is safe or that a program is free of some class of security flaws.

The goal of static analysis is to solve this problem by attaining complete coverage of the program: using special algorithms to analyze the behavior over all possible inputs. The price is that the results are only approximate. (Discovering exact behavior for all inputs would solve the halting problem.) But the results only have to be approximate in one direction. That is, you can create a bug-finding analysis that has false positives (sometimes reports a bug where there is none) but does discover all bugs. And you can also create a version that has no false positives but might miss some real bugs. There is a lot of design freedom in how to approximate, and designing good approximations is a key part of the art of static analysis. The goal is to be precise where it counts for your results, but approximate elsewhere, so the analysis runs fast.

Introduction

Abstract interpretation is a basic static analysis technique. We will describe it informally, focusing on the ideas and practical applications, and leaving the mathematical underpinnings aside. We will focus on intraprocedural abstract interpretation, i.e., analyzing one function at a time, rather than analyzing the whole program together, because whole-program analysis is difficult to apply to a large codebase like Mozilla.

We're going to explain abstract interpretation using an example analysis problem called constant propagation. The goal is to discover at each point in the function whether any of the variables used there have a constant value, i.e., the variable has the same value no matter what inputs are provided and no matter what code path was followed. This analysis is used by compilers for the constant propagation optimization, which means replacing constant variables with a literal value. (This may save a load of the variable, and it also removes a use of the variable, which can enable other optimizations.)

Here is an example C++ function we want to analyze, with some comments showing constant variables.

int foo(int a, int b) {

int k = 1; // k is constant: 1

int m, n;

if (a == 0) {

++k; // k is constant: 2

m = a;

n = b;

} else {

k = 2; // k is constant: 2

m = 0;

n = a + b;

}

return k + m + n; // k is constant: 2

}

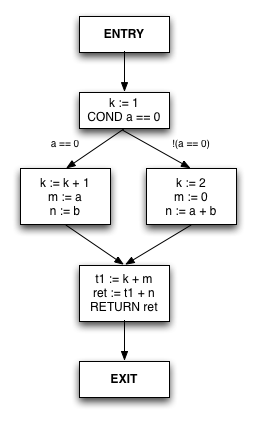

Control Flow Graphs

Abstract interpretation operates on a flowchart representation of the function called a control flow graph or CFG. Below is the CFG for the example function. This CFG is similar in flavor to the real GCC CFG, just with a few unimportant details removed.

It looks like a pretty normal flowchart. Some features to note:

- All possible control transitions are shown as explicit edges (that is, links between the nodes containing code - see http://en.wikipedia.org/wiki/Graph_(mathematics) for more information).

- Statements always have exactly one operation and at most one assignment. Temporary variables are added if necessary. This will really simplify our job: instead of handling every kind of C++ statement, we have only have to analyze these simple statements.

A little terminology:

- Each node is called a basic block, often abbreviated BB. The defining feature of a basic block is that there is a single entry point and a single exit, so basic blocks are straight-line code.

- In different sources, statements may be called statements, instructions, triples (of a destination and up to 2 operands), quadruples or quads (counting the operator), or even tuples. We'll call them instructions, as that's the name used in Treehydra code.

- A program point is the (notional) point just before or after each instruction. The function has a well-defined state at program points, so our analysis facts will always refer to program points. E.g., at the program point just before 't1 := k + m', k is constant with value 2.

Concrete Interpretation

To explain abstract interpretation, we'll start with concrete interpretation, which is just the normal kind you are already familiar with. We'll use it to build up to abstract interpretation.

We could imagine trying to guess which variables are constant just by calling the function with different inputs, and finding which variables are constant over all those runs. Let's start by running with "a = 0, b = 7" and trace the run:

(instruction) (interpreter state after instruction) ENTRY a = 0, b = 7 k := 1 a = 0, b = 7, k = 1 COND a == 0 (TRUE) k := k + 1 a = 0, b = 7, k = 2 m := a a = 0, b = 7, k = 2, m = 0 n := b a = 0, b = 7, k = 2, m = 0, n = 7 t1 := k + m a = 0, b = 7, k = 2, m = 0, n = 7, t1 = 2 ret := t1 + n a = 0, b = 7, k = 2, m = 0, n = 7, t1 = 2, ret = 9 RETURN ret EXIT

So k = 2 just before its use in 't1 := k + m'. We can run with many other inputs and get the same result. But testing can never tell us that k = 2 for all inputs. (Well, actually it can, but only if you can run 264 test cases.)

Approaching Abstract Interpretation

But if you look at the function, you can see that for k, there are really only two important cases: a == 0, and a != 0, and b doesn't matter. So let's (on paper) run two tests: one with input "a = 0, b = ?" and the other with "a = NZ, b = ?", where NZ means "any nonzero value" and ? means "any value". First, a = 0:

(instruction) (interpreter state after instruction) ENTRY a = 0, b = ? k := 1 a = 0, b = ?, k = 1 COND a == 0 (TRUE) k := k + 1 a = 0, b = ?, k = 2 m := a a = 0, b = ?, k = 2, m = 0 n := b a = 0, b = ?, k = 2, m = 0, n = ? t1 := k + m a = 0, b = ?, k = 2, m = 0, n = ?, t1 = 2 ret := t1 + n a = 0, b = ?, k = 2, m = 0, n = ?, t1 = 2, ret = ? RETURN ret EXIT

It looks pretty much like the concrete case, except that we now have some abstract values, NZ and '?', that represent sets of concrete values.

We also have to know what operators do with abstract values. For example, the last step, "ret := t1 + n", evaluates to "ret := 2 + ?". To figure out what this means, we reason about sets of values: if '?' can be any value, then 2 + '?' can also be any value, so 2 + '?' -> '?'.

The other test is "a = NZ, b = ?":

(instruction) (interpreter state after instruction) ENTRY a = NZ, b = ? k := 1 a = NZ, b = ?, k = 1 COND a == 0 (FALSE) k := 2 a = NZ, b = ?, k = 2 m := 0 a = NZ, b = ?, k = 2, m = 0 n := a + b a = NZ, b = ?, k = 2, m = 0, n = ? t1 := k + m a = NZ, b = ?, k = 2, m = 0, n = ?, t1 = 2 ret := t1 + n a = NZ, b = ?, k = 2, m = 0, n = ?, t1 = 2, ret = ? RETURN ret EXIT

At this point, we've tested with every possible input, and we've tested every path, so our results are complete. This proves that k = 2 is in fact always true just before "t1 := k + m".

We're definitely getting somewhere, but the procedure we just followed doesn't generalize. We knew exactly what abstract values to use for the test cases, but that was only because this was a simple function and we could see that by hand. This won't work for complicated functions and it's not automatic. We need to make things simpler.

Another problem is that we are analyzing each path through the function individually. But a function with <math>k</math> if statements can have up to <math>2^k</math> paths, and a function with loops has an infinite number of paths. So on real programs, we'll experience path explosion and we won't get complete coverage.

Abstract Interpretation by Example

One problem with our first approach to abstract interpretation was that we don't know how to pick what sets abstract values to use for input test cases. So let's not. Instead, let's just do one test with any input at all: "a = ?, b = ?":

(instruction) (interpreter state after instruction) ENTRY a = ?, b = ? k := 1 a = ?, b = ?, k = 1 COND a == 0

Now what? We have no information about a, so we don't know which branch to take. So let's do both. First, the true case:

(instruction) (interpreter state after instruction)

a = ?, b = ?, k = 1

k := k + 1 a = ?, b = ?, k = 2

m := a a = ?, b = ?, k = 2, m = ?

n := b a = ?, b = ?, k = 2, m = ?, n = ?

Then, the false case:

(instruction) (interpreter state after instruction)

a = ?, b = ?, k = 1

k := 2 a = ?, b = ?, k = 2

m := 0 a = ?, b = ?, k = 2, m = 0

n := a + b a = ?, b = ?, k = 2, m = 0, n = ?

At this point, the two execution streams rejoin. We could just keep running the streams separately, but we know that will lead to path explosion in general. So instead, let's merge the streams by merging the states. We need a single interpreter state that covers both of these states:

a = ?, b = ?, k = 2, m = ?, n = ?

a = ?, b = ?, k = 2, m = 0, n = ?

We can get it by merging the variables individually. As before, we use set reasoning. For example, with k, we say, k is 2 on the left and on the right, so k = 2 in the merged state. With m, m could be anything if coming from the left, so even though it must be 0 if coming from the right, m could be anything in the merged state. The result:

a = ?, b = ?, k = 2, m = ?, n = ?

We can continue executing in one stream:

t1 := k + m a = ?, b = ?, k = 2, m = ?, n = ?, t1 = ? ret := t1 + n a = ?, b = ?, k = 2, m = ?, n = ?, t1 = ?, ret = ? RETURN ret EXIT

And we get the answer we wanted, k = 2. The key ideas were:

- "Run" the function using abstract values as inputs.

- An abstract value represents a set of (concrete) values.

- At a control-flow branch, go both ways.

- At a control-flow merge, merge the state.

Notice that this time, we didn't have to divide up states at the start, we just started with no knowledge, which is perfectly general. Also, because we rejoin execution streams, in a loop-free method, we only have to interpret each instruction once, so we have completely solved the path explosion problem. (We haven't tried loops yet, and they are a bit harder--they require us to analyze statements more than once, but only finitely many times.)

Algorithms

Above, we showed how to do abstract interpretation informally, by hand. In order to code this up, we need a more technical description.

Specifying an Abstract Interpretation

In order to create an abstract interpretation, we have to specify three things: the abstract values, the flow function, and the initial state. We can perform an unlimited number of different analyses using the exact same framework just by varying those things.

In the following, I'm going to simplify things so I don't have to explain everything at once. So if you're an expert, you'll notice that I lie a bit in places, but I'm only ignoring certain design options that aren't important yet.

Abstract Values and Lattices

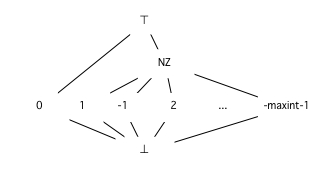

Every abstract interpretation requires a defined collection of abstract values. An abstract value is just a set of concrete values. In the standard integer constant propagation analysis, the abstract values are:

- ⊤, called "top" (remember the T for top), the set of all integer values

- 1, 2, ..., the singleton sets of integer values

- ⊥, called "bottom", the empty set

For our Mozilla analyses, we use these abstract values, and we add a "nonzero" value NZ, which is useful for analyzing tests for zero that appear in if statements. Notice that NZ contains abstract values like 1 and 2. We can depict the set containment relationship graphically.

The mathematical structure corresponding to the picture is called a lattice.

Notice how the lattice controls the level of approximation of the abstract interpretation. For example, if a value can be 1 or -1, the closest covering approximation in our lattice is NZ. A different lattice could provide an abstract value that represents this set more precisely.

Merging. The lattice is key: among other things, it tells us how to merge two abstract values. To merge two abstract values v1 and v2, find the lowest value in the lattice that covers both v1 and v2. The technical term is least upper bound or lub. The funny symbol way to write "the lub of v1 and v2" is "v1 ⊔ v2".

The funny symbol is like a square set union symbol, and in fact, the lub operation can be described as "the approximate set union". Consider merging states where "x = 1" and "x = 2". Looking at the lattice, we see that 1 ⊔ 2 = NZ. But abstract values are just sets of values, so merging them should be like taking a union. In fact, {1} union {2} = {1,2}, but we can't represent {1,2} exactly in our lattice. The closest cover is NZ, which is 1 ⊔ 2.

Flow Functions

Abstract interpretation requires us to maintain an abstract interpreter state and then interpret each instruction in the current state (technically, the state at the program point before the instruction).

The flow function is what does that interpretation. The input is an abstract state and an instruction. The output is the abstract state after executing the instruction. Thus, the flow function describes the abstract semantics of every instruction type.

An implementation of a flow function looks much like an interpreter's main switch statement, just operating on abstract values.

Deriving flow functions. Usually you can figure out what the flow function should do just by thinking about it. For example, for a statement "x := K" where K is any integer literal, it's pretty obvious that the result is that x gets abstract value K.

There is also a mechanical procedure for deriving the flow function for a statement "x = a op b" for any binary operator. (This also applies to unary and nullary operators, making the needed changes.) For each pair of possible input abstract values va and vb:

- Evaluate "ca op cb" for every concrete value ca covered by va and every concrete value cb covered by vb. (Formally: let C = { ca op cb | ca in va, cb in vb }.)

- Collect the set of results and translate to an abstract value vr. (Find the lowest node in the lattice that covers C.)

- Then, the flow function should have "va op vb -> vr".

For example, consider our lattice with NZ and the operation "x = a + 1". For "a = NZ", we evaluate "ca + 1" for ca in "everything but 0". The result is "everything but 1". The lowest covering abstract value is "top".

Initial State

Finally, we need to specify the initial abstract state, the one that holds when the function is entered. For constant propagation, we used the state of no information, namely, everything set to "top" (any value). This is often a good choice, but some analyses need other choices. For example, the outparams analysis is all about whether an outparam has been written to inside the function, so the initial state of the outparams is NOT_WRITTEN.

Example

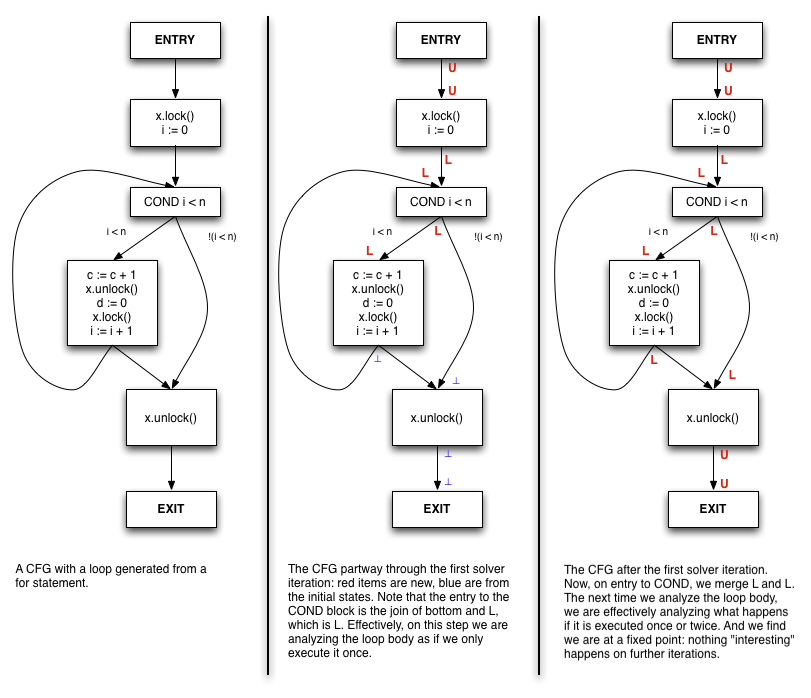

Let's use this framework to define another abstract interpretation. This time, we'll try a bug checker: we'll assume there is an API with lock() and unlock() functions, and check that every unlock() is preceded by a lock().

In a practical problem, the first step of all is to figure out the core analysis that needs to be defined, separately from the checkers, code transformers, and other tools. In this case, the property we need abstract interpretation to discover is, at every program point whether lock() was the last locking API call. Given that information, we can finish the checker by looking at the program point before each call to unlock() and verifying that lock() was called. Now we just need to define the abstract interpretation to gather that information.

Lattice. Usually, the hard part is designing the lattice. In this kind of problem, it is standard to model the lock (or other object whose protocol is being checked) as a state machine. We'll say that locks can be in either the state L (locked) or U (unlocked). We'll have top and bottom as always, finishing our lattice.

Flow function. With the lattice in place, the flow function is easy. We can write down a chart:

(statement) (result) x.lock() x = L x.unlock() x = U anything else no effect

Initial state. Our spec said that lock() had to be called before unlock(). This suggests that on entry, locks are not locked, so we'll use a starting state of U for all locks.

Solving

In our examples above, to run the abstract interpretations, we just sort of went down paths by hand. That's not much of an algorithm: it doesn't explain what order to go in or how to sync up at join points. (And in fact, it's not necessary to explicitly sync up at join points at all.) Also, we didn't do loops.

Fortunately, the Treehydra library code implements all of this 'solving' process: all you have to do is specify the lattice, a .flowState() function, and an initialState() function. But it might be helpful to understand what the solver does.

Fixed Point Solving

The basic solving algorithm works on basic blocks. In each step, we get the entry ("in") state of a basic block from its predecessors, then abstractly interpret the basic block, and finally set the new exit ("out") state for that block. If the out state changed from the last time we interpreted that block, then we need to make sure the successor blocks run again (because their in state depends on the current block's out state). In this way, we keep running blocks until no state changes from last time. The resulting state (at every program point) is called a fixed point, because further abstract interpretation doesn't change it.

Here is the basic algorithm as pseudocode:

// Set initial states

for each basic block bb:

set bb.inState = bottom for every variable

set bb.outState = bottom for every variable

let ebb = the entry basic block

set ebb.inState = (user-defined initial state)

// Iterate until fixed point

do:

let changed = false

// Process all basic blocks, looking for changes

for each basic block bb:

// Set up in state

if (bb != ebb)

set bb.inState = merge out states of predecessors

let cur_state = bb.inState

// Interpret block

for each instruction of bb, isn:

cur_state = (user-defined flow function)(isn, cur_state)

// Set out state and check for changes

if (cur_state != bb.outState) changed = true

bb.outState = cur_state

until not changed

Exactly why this works depends on a bunch of math that we don't need to get into, but we can give some intuitions.

First off, when there are no loops, basically we're just doing straight abstract interpretation in arbitrary order. If we do a later block, then an earlier block, we just redo the later block and throw out the old results, but the end result is the same as if we went in the "perfect" order we'd do by hand.

With loops, we actually need to analyze the loop body more than once, even if we pick the best possible order. Because of the way states are merged, the first time, we analyze the body as if it is executed only once. The next time, we end up analyzing the body as if it is executed either once or twice. A fixed point is reached once further iterations produce no new behaviors.

Here is an example of solving with a loop:

Remarks

The solver interprets the basic blocks in arbitrary order, and more than once. So if you watch the solver (using its tracing mode), you won't see code paths being executed or collected. This is a necessary consequence of avoiding path explosion.

The states in the solver can be "wrong" on the intermediate steps, before the solver is finished. This means you shouldn't try to check properties or use the results inside the flow function, while the solver is running. Instead, you should check after the solver is all done.

The Treehydra solver tries to process basic blocks in a good order to reduce the number of times they have to be processed to get the solution. Basically, when there are no loops it goes in topological order (much like 'make'). When there are loops it uses something like topological order (reverse postorder).

Some abstract interpretations can go into infinite loops. (Note that the examples we gave always terminate.) Usually, this can only happen if there is a bug in the flow function or if the lattice has a path with infinitely many steps from bottom to top (e.g., the lattice of all integer ranges). The analysis can also run a really long time if the lattice just has a long path from bottom to top. Diagnosing and repairing termination issues is an advanced topic I'm not prepared to go into here, but feel free to ask for help with specific issues.

Note that sometimes solving just takes a long time: functions can have 1000 or more basic blocks, and analyzing such a function in detail in JS take take up to a minute or so.

Advanced Topics

Interpreting Branch Conditions

Way back in our original example, you may have noticed a fact that our abstract interpretation failed to discover: that m is constant with value zero at the end of the function. The reason we don't discover this is that on the "a == 0" branch of the conditional, we do "m := a", and our state has "a = ?", so we set "m = ?". But if we're on the "a == 0" branch, then a has to be zero, right?

Of course. But basic abstract interpretation doesn't try to interpret branch conditions. One reason is that the branch has no side effects, so interpreting it abstractly doesn't assign anything.

But we certainly can try to discover and apply information from conditionals, and the Treehydra libraries include this feature. It's a little harder than a flow function, because in this case we're not executing an instruction and approximating, but rather reasoning backward from the truth or falsity of a condition. This also means we need a separate function from the flow function, a "conditional flow" or filtering function. Treehydra calls it "flowStateCond". The input is a state, a conditional expression, and whether the expression is true or false. The output is a state representing the values from the original state that pass the expression.

In the example above, we are in state "a = ?" and learn that the condition "a == 0" is true. So we filter '?' down to just the values equal to 0, namely 0 itself. So the result is "a = 0".

If the input state had instead been "a = NZ', then we filter NZ (nonzero) down to just the values equal to 0, which is the empty set. So the result is "a = bottom", effectively proving that this branch is not taken.

Treehydra contains the basic machinery to detect conditionals and exclude branches that cannot be executed. The user only has to supply the flowStateCond function.

Some analyses, such as outparams, require tracking pairs or tuples of correlated variables, not just single variables. This requires a modification to the notion of abstract state we used in the basic explanation.

Even constant propagation can theoretically benefit from tracking pairs. Consider this example:

if (foo) {

a = 1;

b = 2;

} else {

b = 1;

a = 2;

}

c = a + b;

It's clear that c is a constant, 3. But our constant propagation won't detect it: after the if, we have state "a = ?, b = ?".