Bugzilla:CMU HCI Research 2008

In the fall of 2008, a group of students pursuing master's degrees in Human-Computer Interaction (HCI) at Carnegie Mellon University in Pittsburgh volunteered to conduct user research on Bugzilla for credit in a Usability Methods class. The group consisted of 5 students:

- Tom Bolster

- Fred Pfisterer

- Emily Vincent

- Vedant Mehta

- Josh Zúñiga

These students applied the following HCI usability research methods:

- Contextual Inquiry

- Keystroke Level Modeling (KLM)

- Cognitive Walkthrough

- Heuristic Evaluation

- Think Alouds

All of this research was done on the 3.0 release of Bugzilla.

Contextual Inquiry

Contextual Inquiry (CI) is an observational method that focuses on users performing work within their own environment. The observer asks users questions while they perform the task of interest in order to collect rich qualitative data. The clear benefit is seeing the minutiae and understanding why those details matter in the context of the user’s work domain.

The student group conducted five CIs during their research. The first CI was with a resident assistant whose responsibility was to support dorm residents during their stay for the school year. As part of these responsibilities, this resident assistant reported maintenance problems using a web-based system. Our group wanted to explore what happens when an individual tries to get help with something they cannot fix themselves. This CI aligned with our focus: enabling sustained involvement of new users in defect submission.

The second CI was conducted with a documentation specialist/tester who reported problems that end users experienced with the product their company develops. This user was the connection between the development group and the end users of the product. The user worked with a defect tracking tool that competes with Bugzilla.

The student group conducted the final three CIs with different individuals in the same company, but with widely varied roles. This company uses Bugzilla as its primary defect tracking tool to aid in its software development. The first user we observed was a developer who had not used Bugzilla before. This user would be considered a skilled developer. The second user was a development lead for the group and would be considered an expert user of Bugzilla. This user was responsible for planning and guiding the development efforts of the product. The final user observed was an application tester who was responsible for application-level testing and QA of the product. This user would be considered a novice user of Bugzilla.

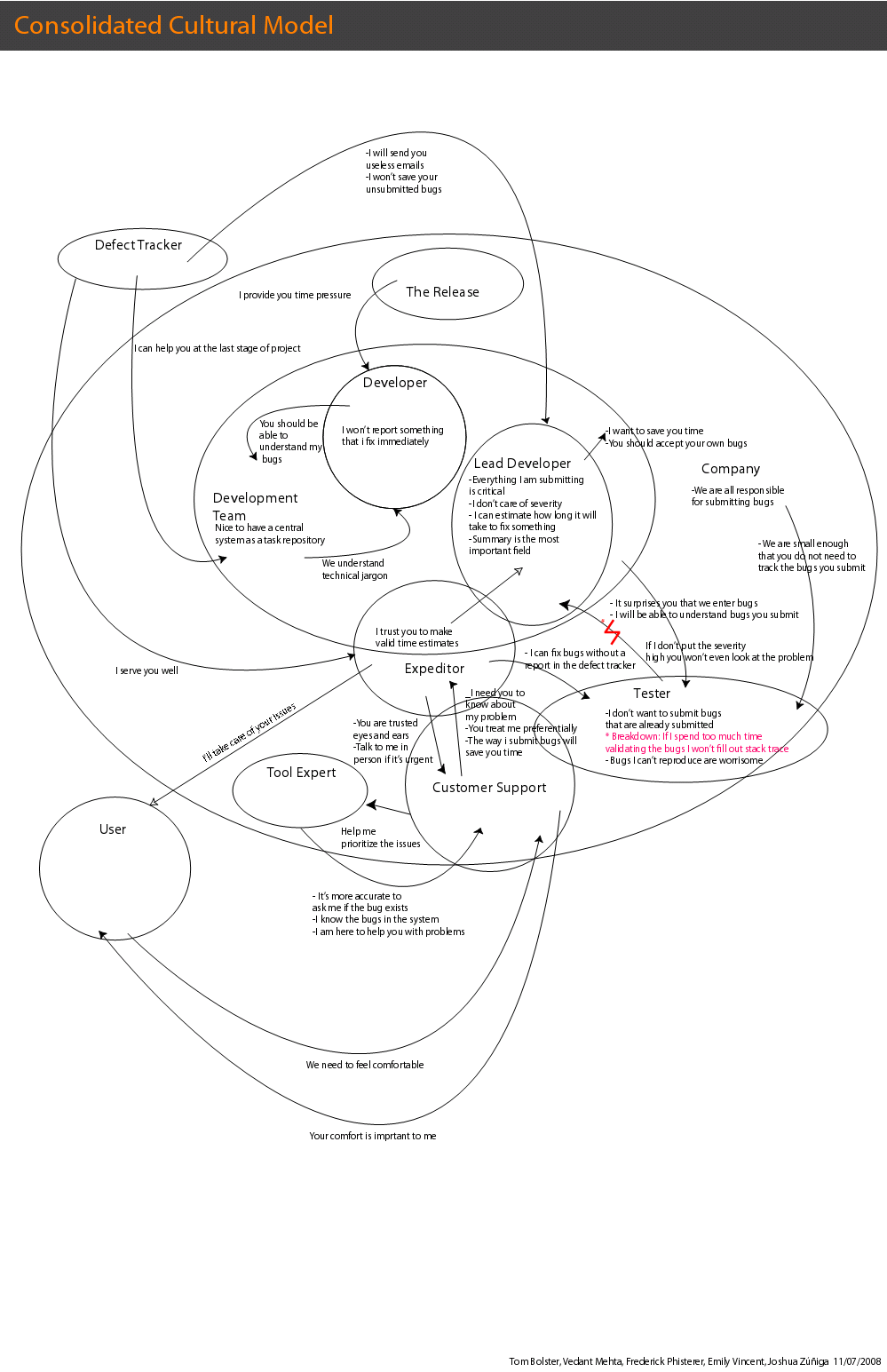

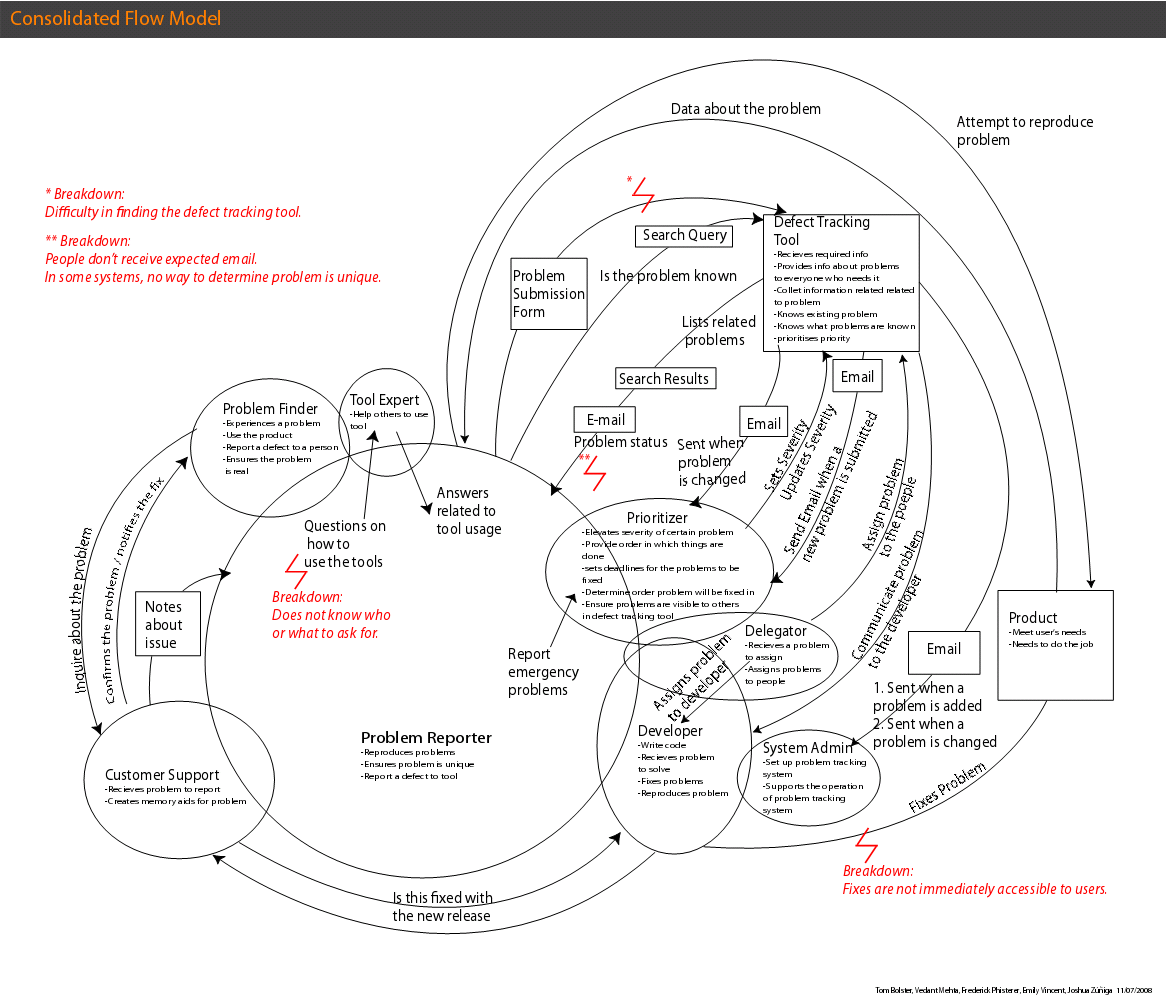

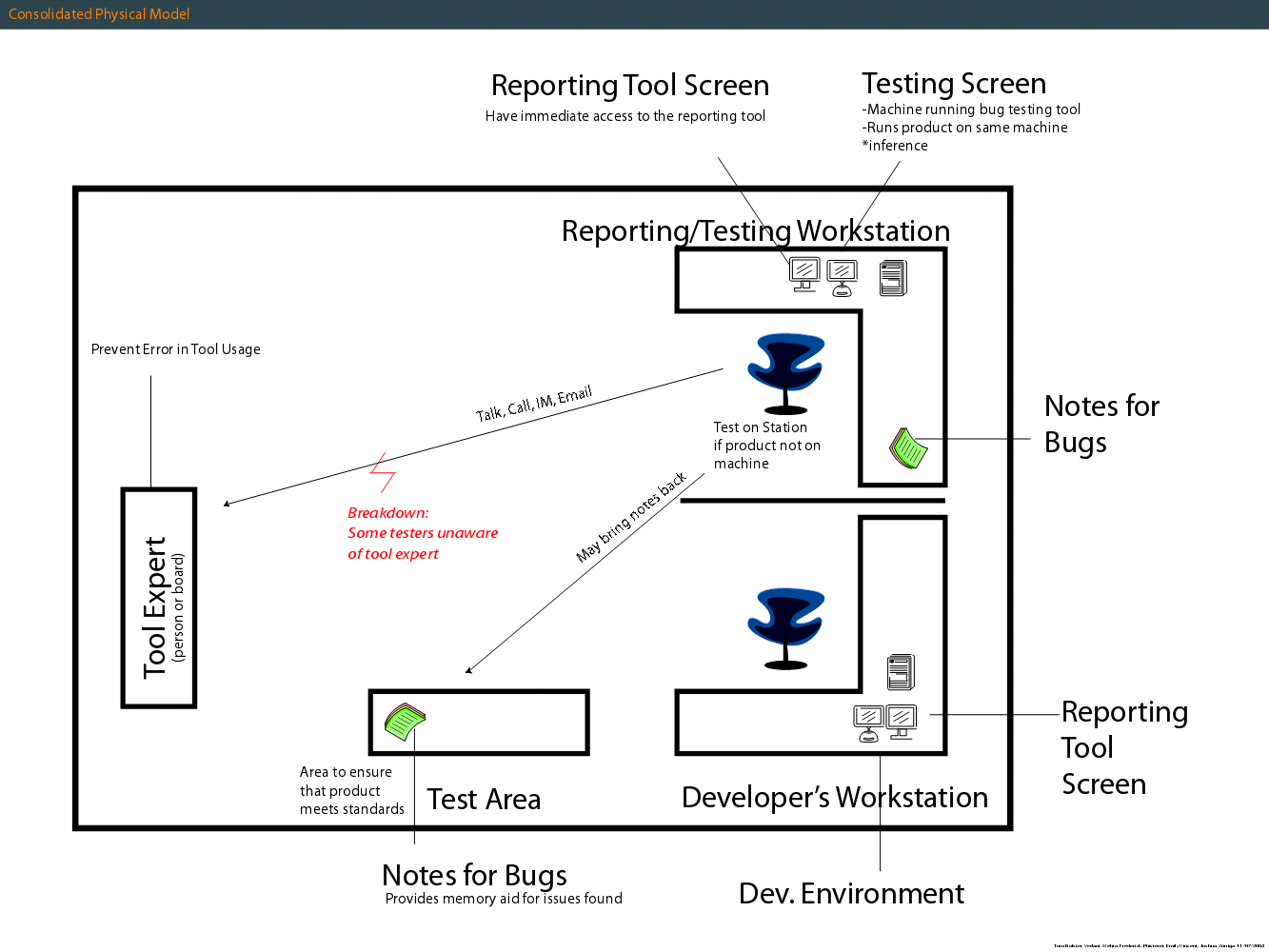

During the modeling stage, the group developed models that illustrated how users went about their work in context. Through the models we showed (1) how communication flows to and from users, (2) the sequence of actions to complete a task and the underlying intent, (3) the cultural influences on the user from people, groups, or objects, (4) the artifacts and how those were used, and (5) the physical space where the user performs their work. Each of the models revealed important findings about the user’s work. Contextual Inquiry was by and large the compass the team used for guidance, as it allowed them to abandon their assumptions about the user and see how people actually behave in context.

By nature, CI has high preparation costs, as it is highly qualitative and subjective. In this instance five users were observed, but ideally a group would conduct upwards of 15-20 CIs covering many of the users involved in the work being observed. Forming a focus to guide questions, pre-interview practice, conducting the interview, modeling, post-interview follow-up, and finally consolidation and interpretation sum to a cost far greater than that of any of the other methods we used.

Unlike the other methods, CI serves as an input to them. Inherently, CI is used to understand the users and observe what they do in context. CI is a guide for design and does not even require that a system exist in order to be performed. In contrast, the other methods are used to evaluate a system as it exists in low or high fidelity.

Cultural Model

Workflow Model

Physical Model

Keystroke Level Modeling (KLM)

Working on this section

Cognitive Walkthrough

Cognitive Walkthrough is a method that evaluates how easily a new user can learn to use an interface for the first time. Assumptions about the user are listed up front, and four questions are asked of each step in the correct task sequence:

- Will users be trying to produce whatever effect the action has?

- Will users see the control (button, menu, switch, etc.) for the action?

- Once users find the control, will they recognize that it produces the effect they want?

- After the action is taken, will users understand the feedback they get, so they can go on to the next action with confidence?

Each question is answered yes or no, and an explanation as to why is included. Any “No” answers represent potential problems with the interface for new users learning the system. The task studied is that of searching the Bugzilla database to make sure that the bug being submitted has not already been reported, and then to report the bug.
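The bookkeeping described above (four yes/no answers with explanations per step, with every “No” flagged as a potential problem) can be sketched in a few lines of Python. This is only an illustrative sketch, not the group's actual tooling: the names `Answer`, `Step`, and `potential_problems` are hypothetical, the question texts are shortened paraphrases, and the sample step and explanations are placeholders rather than findings from the study.

```python
from dataclasses import dataclass

# Shortened paraphrases of the four walkthrough questions listed above.
QUESTIONS = (
    "Will users be trying to produce the effect the action has?",
    "Will users see the control for the action?",
    "Will users recognize that the control produces the effect they want?",
    "Will users understand the feedback after the action?",
)


@dataclass
class Answer:
    yes: bool          # True for "Yes", False for "No"
    explanation: str   # why the evaluators answered this way


@dataclass
class Step:
    description: str
    answers: list      # one Answer per question, in QUESTIONS order


def potential_problems(steps):
    """Collect (step, question, explanation) for every 'No' answer."""
    problems = []
    for step in steps:
        if len(step.answers) != len(QUESTIONS):
            raise ValueError(f"step {step.description!r} needs four answers")
        for question, answer in zip(QUESTIONS, step.answers):
            if not answer.yes:
                problems.append((step.description, question, answer.explanation))
    return problems


# Placeholder data for illustration only (not results from the walkthrough).
example_steps = [
    Step(
        "Example step",
        [
            Answer(True, "placeholder explanation"),
            Answer(False, "placeholder: control is hard to spot"),
            Answer(True, "placeholder explanation"),
            Answer(True, "placeholder explanation"),
        ],
    ),
]

for step_name, question, why in potential_problems(example_steps):
    print(f"{step_name}: NO to '{question}' ({why})")
```

Keeping the explanation alongside each answer preserves the reasoning behind every flagged step, which is what the later write-up of findings depends on.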

Task description

The group selected two tasks for this cognitive walkthrough. They are commonly performed one after the other, and both are critical to the process of tracking a software defect.

Task 1: Use the advanced search form of the system to find out whether a similar problem concerning the Java exception “ArithmeticException: / by zero” already exists in the system.

Task 2: Submit a new bug report using the Bugzilla bug submission screen. The bug report should contain the Java stack trace, which is already on your clipboard, and describe the problem in a concise manner.

A Priori Description of Users and Background Knowledge

The primary goal of this Cognitive Walkthrough is to provide data for a straightforward refinement of the current interface. Therefore we chose a class of users whose processes Bugzilla is currently trying to facilitate. The user is affiliated in some way with the development of a software product. The user might be employed in customer service or on a development team, or work as a tester whose primary job is to discover and report defects in the product that their organization is marketing.

Assumption 1: The user is a skilled computer user who knows how to use the keyboard and mouse.

Assumption 2: The user is familiar with the web and browsers. This means they know how standard browser & web features work, like the scroll bar, the back button, forms and links.

Assumption 3: The user has a Bugzilla account already and knows how to sign in. Due to this assumption, we will start the task after the user has already signed in.

Assumption 4: The user is new to the Bugzilla system and has not submitted a bug with this system before.

Assumption 5: The user does not want to submit a bug that already exists.

Assumption 6: The user is familiar with the software package for which they are submitting a bug.

Significant Findings

First of all, it is difficult to understand how to search for existing bugs, as the main page offers many different ways to search.

Second, the “find a specific bug” function does not match the basic search functionality a novice user expects, and thus novice users are unlikely to realize that they should be using advanced search.

Finally, there is no indication of how severity should be set on the bug submission page.

Usability Aspect Reports

Heuristic Evaluation

Heuristic Evaluation (HE) is a method where inspectors examine an interface by checking it against a list of accepted design principles (heuristics). Heuristic Evaluation is particularly well known for being inexpensive and fast: it requires a small investment of time, but the return can be considerable. The team of inspectors consisted of 5 current Master's in Human-Computer Interaction graduate students with background and experience in diverse fields such as Computer Science, Information Technology, Psychology and Design. Compared to the other usability evaluation methods, HE took our group only a short time, and many of the violations we uncovered influenced our design decisions. In particular, performing an HE of submitting a new defect report and searching for an existing defect drove home the importance of providing users with good feedback.

Findings

- The new bug report submission page has many labels which are unclear to a novice user. ECV‐HE‐02, ECV‐HE‐05, VM-HE-07, TIB-BZ-09

- Throughout the website there are multiple fields with unclear usage. FP-HE-03, ECV‐HE‐04, ECV‐HE‐08, TIB-BZ-12, TIB-BZ-18

- There is little in-form/in-page help and documentation. JZ‐HE‐02, ECV‐HE‐01, ECV‐HE‐12, VM-HE-02, VM-HE-05, VM-HE-10, TIB-BZ-09

- Search feature violates multiple heuristics. JZ‐HE‐08, FP-HE-02, VM-HE-07, TIB-BZ-01, TIB-BZ-21, TIB-BZ-22, TIB-BZ-28

- Problems in understanding widgets and hyperlinks. VM-HE-01, TIB-BZ-05, FP-HE-01a, ECV-HE-11

List of Heuristics

http://www.useit.com/papers/heuristic/heuristic_list.html

Understanding severity ratings

http://www.useit.com/papers/heuristic/severityrating.html

Usability Aspect Reports

Usability Aspect Report for Heuristic Evaluation

Think Alouds

Think Aloud Usability Testing is considered to be the “gold standard” of usability testing. This is because the data comes directly from users, showing the experimenter exactly which parts of the interface users have trouble with. In Think Alouds (TAs), the user is asked to perform a task while “thinking aloud.” This means asking the user to verbalize their internal process, parts of which are non-linguistic such as perceptual processes. By understanding what the user is thinking and how the user perceives the system, the tester gains a fresh perspective on the interface.

The student group performed two Think Alouds on the Bugzilla system. Each user was given a bug that they had supposedly encountered. Their task was first to search the repository to see whether that issue had already been submitted and then (if they did not find it) to submit the bug. The two users had different levels of experience. The first user had a technical background (experience in IT) and had browsed bugs in Bugzilla before but had never submitted one. The second user was non-technical and had never seen Bugzilla before the TA.

Think Aloud data is often surprising, and this data was no exception. The most significant finding from the first TA was the user’s confusion over the Assign To and Alias fields. The user initially attempted to indicate who submitted the bug by putting their own email address in the Assign To field. The user later decided that the Alias field was the correct place to do this instead of Assign To. In the second TA, the user ended up getting very lost in the system because she did not understand that the search had returned no results. Both users had difficulty on the Advanced Search page, and experienced confusion with the term “component” and the Deadline field. The final finding concerned feed-forward and feedback about which fields are required. The users expressed confusion over which fields were actually required, and they submitted forms with multiple errors on the page.

The group reviewed the videos of each Think Aloud and logged items which fulfilled the criteria below, either negative or positive. Those items were then consolidated into overarching problems, each of which was written up as a usability aspect report (UAR). Each UAR received a group consensus severity rating, and those rated 3 or higher were written as full UARs. Those below the threshold were written as rough UARs including: name, number, evidence, severity rating, relationships (if any), and the criteria met.

Negative Criteria

- The user articulates a goal and does not succeed in attaining that goal within 3 minutes (then the experimenter steps in and shows him/her what to do--the next step).

- The user articulates a goal, tries several things or the same thing over again (and then explicitly gives up).

- The user articulates a goal and has to try three or more things to find the solution.

- The user accomplishes the task, but in a suboptimal way.

- The user does not succeed in a task. That is, when there is a difference between the task the user was given and the solution the user produced.

- The user expresses confusion, hesitation, or surprise.

- The user expresses some negative affect or says something is a problem.

- The user makes a design suggestion (don’t ask them to do this, but sometimes they do this spontaneously as they think-aloud).

Positive Criteria

- The user expresses some positive affect or says something is really easy.

- The user expresses happy surprise.

- Some previous analysis has predicted a usability problem, but this user has no difficulty with that aspect of the system.
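The triage rule described earlier in this section (a group consensus severity rating of 3 or higher yields a full UAR, anything lower a rough UAR) can be sketched in Python as follows. This is a minimal sketch under stated assumptions: `LoggedItem`, `uar_format`, and the sample items are hypothetical names and placeholder data, and severity is assumed to be an integer rating, since the scale itself is not spelled out here.

```python
from dataclasses import dataclass

# From the text: items rated 3 or higher are written as full UARs.
FULL_UAR_THRESHOLD = 3

# Fields the text lists for a rough UAR.
ROUGH_UAR_FIELDS = (
    "name",
    "number",
    "evidence",
    "severity rating",
    "relationships",
    "criteria met",
)


@dataclass
class LoggedItem:
    name: str
    severity: int  # group consensus rating (assumed to be an integer)


def uar_format(item):
    """Return 'full' or 'rough' depending on the consensus severity."""
    return "full" if item.severity >= FULL_UAR_THRESHOLD else "rough"


# Placeholder items for illustration only (not actual ratings from the study).
items = [
    LoggedItem("Example problem A", 4),
    LoggedItem("Example problem B", 3),
    LoggedItem("Example problem C", 2),
]

for item in items:
    print(f"{item.name}: severity {item.severity} -> {uar_format(item)} UAR")
```

Keeping the threshold in a single named constant makes it easy to see, and to change, where the line between full and rough reports is drawn.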

Usability Aspect Report

Bugs Filed To Respond to Research

HCI 2008 Research Tracking Bug (look at the Dependency Tree to see all bugs filed in response to the research)