Electrolysis/Performance Optimization

This document is still a work in progress - stay tuned!

We are using a number of data sources to make sure multi-process Firefox (e10s) is as snappy and responsive as possible. Our two biggest sources are Telemetry data that we get from Firefox instances running out in the wild, and Talos performance data. Both are useful, but Talos tests have the benefit of being run on most pushes to our integration branches. They are also run on machines that we control, which means we can do experiments to see if our work is making performance better or worse. Telemetry data is good for seeing what users are likely experiencing in the real world, and for verifying that fixes and optimizations that you’ve landed are actually making a difference.

This document is meant to be a reference on how to investigate Talos regressions. We will not be exploring regressions reported from Telemetry.

The steps and tips below are not hard and fast rules, but are rather a distillation of lessons and techniques learned while dealing with Talos regressions over a number of different projects.

Broadly speaking, Talos regressions for e10s fall into two general buckets:

- A change has recently landed that has caused e10s Talos numbers to change for the worse

- A Talos test has just been activated, and e10s performs worse than non-e10s

We’ll deal with the first case first, since that’s the easier one to reason about.

E10s Talos regression from a recent push

Congratulations! You either caused an e10s Talos regression with one of your patches, OR you’re responsible for investigating a Talos regression from somebody else’s patch.

Confirm that the regression exists

The first step is to confirm that the regression exists. This is where retriggers really come in handy. Talos, despite much effort, is still quite noisy. The best way we’ve found to eliminate the noise is to trigger a Talos test a number of times in order to get as concentrated a set of samples as possible.

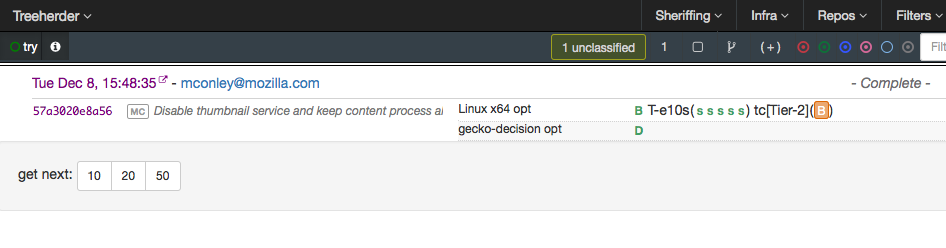

Start by doing retriggers for the test for the push just before the regressing patch, and again for the push with the patch. About 4 or 5 retriggers is usually sufficient.

Note that if you’re running the tests on try, you can use “mozharness: --rebuild-talos” to re-run the test automatically some number of times without manually retriggering.

For example, this try syntax will cause the tsvgr test to be built and run for optimized Linux 64, with 4 retriggers (resulting in 5 runs in total):

try: -b o -p linux64 -u none -t svgr-e10s mozharness: --rebuild-talos 4
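If you push to try often, it can help to generate this line rather than retype it. The following is a minimal sketch in Python: the function name `talos_try_syntax` and its parameters are hypothetical, and it only reproduces the flags shown in the example above.

```python
def talos_try_syntax(suite, platform="linux64", rebuild=4):
    """Build a try commit message for one Talos suite.

    Hypothetical helper: it only fills in the template from the example
    above (optimized build, no unit tests, one Talos suite).
    """
    if rebuild < 0:
        raise ValueError("rebuild count must not be negative")
    return (
        f"try: -b o -p {platform} -u none -t {suite} "
        f"mozharness: --rebuild-talos {rebuild}"
    )


if __name__ == "__main__":
    # Prints the same try syntax as the svgr-e10s example above.
    print(talos_try_syntax("svgr-e10s"))
```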

Once you have your retriggers done, you’ll want to compare the two sets against one another to ensure that you can detect the regression.

Trying to detect a significant difference between two sets of numbers can be tricky, especially if the difference is very small, so a tool has been developed to help you do this. This is the Perfherder analysis tool.

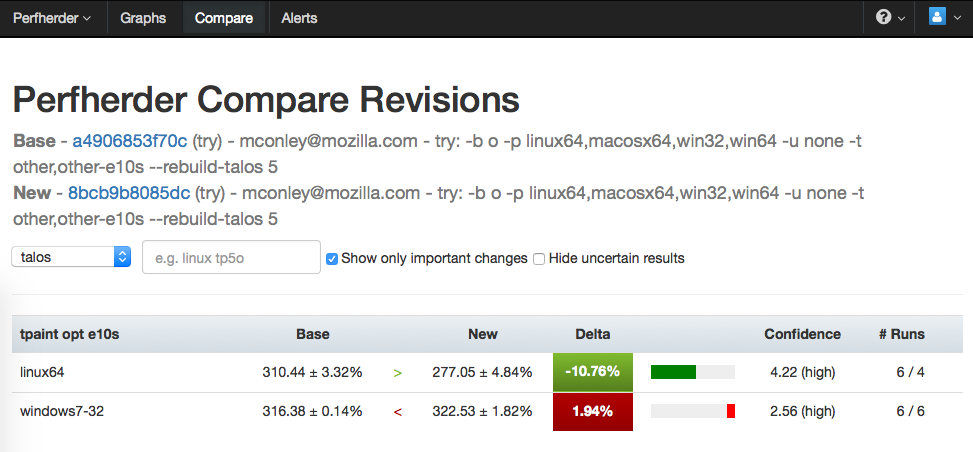

Visit the comparison tab of Perfherder, and supply the repository name and hash for both of your builds. I tend to put the one I think is likely slower as the “Base”, and the one I suspect will be faster as “New”, but it really doesn’t matter so long as you remember which one you put where.

Perfherder will tell you whether or not there is a significant difference between the two sets of Talos results, as well as the magnitude of the difference, and how confident it is about its analysis.
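To get a feel for what such a comparison involves, here is a rough sketch in Python that compares two sets of retrigger results by hand. It is not Perfherder's implementation: the function name `compare_runs` and the sample numbers are made up for illustration, and the statistic used (Welch's t-statistic) is just one common way to judge whether two noisy sets of samples differ.

```python
import math
import statistics


def compare_runs(base, new):
    """Return (percent change, Welch's t-statistic) for two sample sets.

    Illustrative only; this is an assumption about how one might compare
    retriggers by hand, not a description of what Perfherder does.
    """
    base_mean = statistics.mean(base)
    new_mean = statistics.mean(new)
    # Magnitude of the difference, relative to the base mean.
    pct_change = (new_mean - base_mean) / base_mean * 100.0
    # Standard error of the difference between the two means.
    std_err = math.sqrt(
        statistics.variance(base) / len(base)
        + statistics.variance(new) / len(new)
    )
    if std_err == 0:
        # No noise at all: any difference in means is unambiguous.
        diff = new_mean - base_mean
        t_stat = 0.0 if diff == 0 else math.copysign(math.inf, diff)
    else:
        t_stat = (new_mean - base_mean) / std_err
    return pct_change, t_stat


if __name__ == "__main__":
    # Made-up results from 5 runs each (original run plus 4 retriggers).
    base_runs = [300, 305, 298, 302, 301]
    new_runs = [270, 268, 272, 269, 271]
    pct, t = compare_runs(base_runs, new_runs)
    print(f"change: {pct:.1f}%, t-statistic: {t:.1f}")
```

The larger the absolute value of the t-statistic, the less likely it is that the difference is just noise. With only a handful of retriggers per push, small differences will usually not stand out, which is why more retriggers help. Whether a negative change is an improvement or a regression depends on whether lower numbers are better for the test in question.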

Here’s an example comparison:

Notice that the whole “other” Talos test suite was run, but we’re only listing tpaint e10s here. That’s because “Show only important changes” is checked, which means that the rest of the tests didn’t see any significant change.

Here, we can see that the “New” patch seems to have caused a ~10.7% improvement for tpaint on Linux 64, but a ~2% regression on Windows 7.

E10s Talos regression from an activated test

TBD