Gecko:MediaRecorder

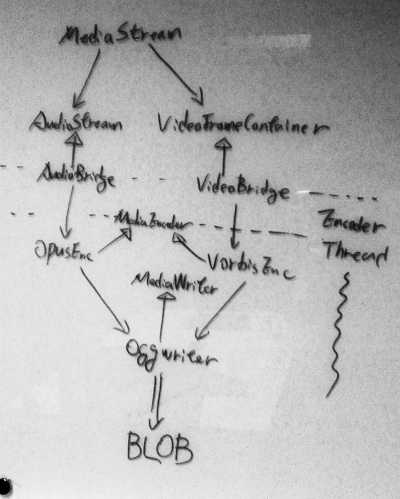

Media recording architecture

Errata

- Vorbis => Theora

- We cannot use VideoFrameContainer/AudioSystem directly; use an adapter instead

MediaRecorder

Reference spec

https://dvcs.w3.org/hg/dap/raw-file/default/media-stream-capture/MediaRecorder.html

Overview

This API lets an application record a media stream. The application instantiates a MediaRecorder object with a MediaStream, calls start(), and then either calls stop() or waits for the MediaStream to end. The recorded data is encoded in the MIME type format selected by the application and delivered as Blob data via the dataavailable event. The application can also choose to receive smaller buffers of data at regular intervals.

Media Recorder API

WebIDL:

[Constructor (MediaStream stream)]

interface MediaRecorder : EventTarget {

readonly attribute MediaStream stream;

readonly attribute RecordingStateEnum state;

attribute EventHandler ondataavailable;

attribute EventHandler onerror;

attribute EventHandler onwarning;

void start (optional long timeslice);

void stop ();

void pause ();

void resume ();

void requestData ();

};

JavaScript Sample Code

var mStream;

var mr;

function dataavailable(e) {

//combine the blob data and write to disk

var encodeData = new Blob([e.data], {type: 'audio/ogg'});

}

function start()

{

mr = new MediaRecorder(mStream);

mr.ondataavailable = dataavailable;

try {

mr.start(1000); //every 1000ms, trigger dataavailable event

} catch (e) {

//handle start fail exception

}

}

function ex(e)

{

//handle webRTC exception

}

function streamcb(astream)

{

mStream = astream;

start();

}

//Use WebRTC to get mediaStream

navigator.mozGetUserMedia({audio:true}, streamcb, ex);

Related bugs

bug 803414 Audio Recording - Web API & Implementation

bug 834165 Implement BlobEvent

bug 825110 [meta] Porting WebRTC video_module for B2G

bug 825112 [meta] Porting WebRTC audio_module for B2G

media encoder

audio encoder

video encoder

media writer

ogg writer

MediaEncoder Draft

API draft

/**

* Implement a queue-like interface between MediaStream and the encoder,

* since the encoder may take a long time to process one segment while new

* segments arrive.

*/

class MediaSegmentAdapter {

public:

enum BufferType {

AUDIO,

VIDEO

}

/* AppendSegment may run on the MediaStreamGraph thread to avoid race conditions */

virtual void AppendSegment(MediaSegment) = 0;

void SetBufferLength(size_t);

size_t GetBufferLength();

MediaSegment DequeueSegment();

protected:

MediaSegmentAdapter();

Queue<MediaSegment> mBuffer;

friend class Encoder;

}

/**

* A base class for video adapters, which provides a basic implementation,

* e.g. copying the frame

*/

class VideoAdapter : public MediaSegmentAdapter {

public:

/* This version deep-copies/color-converts the input buffer into a local buffer */

void AppendSegment(MediaSegment);

}

/**

* On Firefox OS we have hardware encoders, and the camera outputs

* platform-specific buffers, which may give better performance

*/

class GrallocAdapter : public MediaSegmentAdapter {

public:

/* This version does |not| copy frame data; it queues the GraphicBuffer directly,

which can be used with SurfaceMediaSource or another SurfaceFlinger-compatible

mechanism for hardware-supported video encoding */

void AppendSegment(MediaSegment);

}

/**

* Similar to VideoAdapter. Since audio codecs may need to |collect| enough data

* before actually encoding it, we may implement a raw buffer of a specific length

* and collect data into that buffer

*/

class AudioAdapter : public MediaSegmentAdapter {

public:

/* Copy/resample the data into a local buffer */

void AppendSegment(MediaSegment);

}

/**

* Add a dequeue-like interface to MediaSegment and make it thread-safe

* to replace these adapters

*/

/**

* MediaRecorder keeps a state machine and makes sure MediaEncoderListener is in

* a valid state for data manipulation.

*

* This class is responsible for initializing all the components (e.g. container writers, codecs)

* and linking them together.

*/

class MediaEncoderListener : public MediaStreamListener {

public:

/* Callback functions for MediaStream */

void NotifyConsumption(MediaStreamGraph, Consumption);

void NotifyPull(MediaStreamGraph, StreamTime);

void NotifyBlockingChanged(MediaStreamGraph, Blocking);

void NotifyHasCurrentData(MediaStreamGraph, bool);

void NotifyOutput(MediaStreamGraph);

void NotifyFinished(MediaStreamGraph);

/* Queue the MediaSegment into the corresponding adapters */

/* XXX: Is it possible to determine audio-related parameters from this callback?

Or do we have to query them from MediaStream directly? */

/* Appending a segment to an adapter needs to block the MediaStreamGraph thread to avoid race conditions,

and we should schedule one encoding loop if any track is updated */

void NotifyQueuedTrackChanges(MediaStreamGraph, TrackID, TrackRate, TrackTicks, uint32_t, MediaSegment);

/* Callback functions to JS */

void SetDataAvailableCallback();

void SetErrorCallback();

void SetMuteTrackCallback(); /*NOT IMPL*/

void SetPauseCallback();

void SetPhotoCallback(); /*NOT IMPL*/

void SetRecordingCallback();

void SetResumeCallback();

void SetStopCallback();

void SetUnmuteTrackCallback(); /*NOT IMPL*/

void SetWarningCallback();

enum EncoderState {

NOT_STARTED, // Encoder initialized, no data inside

ENCODING, // Encoder is working on the current data

DATA_AVAILABLE, // Some encoded data is available

ENCODED // All input tracks stopped (EOS reached)

};

/* Status/Data polling function */

void GetEncodedData(unsigned char* buffer, int length);

EncoderState GetEncoderState();

/* Option query functions */

nsArray<String> GetSupportMIMEType();

Pair<int, int> GetSupportWidth();

Pair<int, int> GetSupportHeight();

/* Set requested encoder */

void SetMIMEType();

void SetVideoWidth();

void SetVideoHeight();

/* JS control functions */

void MuteTrack(TrackID); /*NOT IMPL*/

void Pause();

void Record();

void RequestData(); /*NOT IMPL*/

void Resume();

void SetOptions();

void Stop();

void TakePhoto(); /*NOT IMPL*/

void UnmuteTrack(); /*NOT IMPL*/

/* Initialize internal state and codecs */

void Init();

/* Create a MediaEncoder for the given MediaStream */

MediaEncoder(MediaStream);

private:

void QueueVideoSegments();

void QueueAudioSegments();

void SelectCodec();

void ConfigCodec();

void SetVideoQueueSize();

void SetAudioQueueSize();

/* data member */

MediaSegment mVideoSegments; // Used as a glue between MediaStreamGraph and MediaEncoder

MediaSegment mAudioSegments;

Encoder mVideoEncoder;

Encoder mAudioEncoder;

MediaEncoder mMediaEncoder;

Thread mEncoderThread;

}

/**

* Different codecs usually support some codec-specific parameters which

* we may take advantage of.

*

* Let each implementation provide its own parameter set, and use common

* params if no special params are requested.

*/

union CodecParams {

OpusParams opusParams;

TheoraParams theoraParams;

MPEG4Params mpeg4Params;

// etc.

}

/**

* Base class for general codecs:

*

* We generally do not implement codecs ourselves, but we need a generic interface

* to encapsulate them.

*

* For example, if we want to support Opus, we should create an OpusCodec, let

* it inherit this base class (by inheriting AudioCodec), and implement OpusCodec by

* using the libopus API.

*/

class Encoder {

public:

enum EncodingState {

COLLECTING, /* the encoder is still waiting for enough data to encode */

ENCODING, /* there is enough data to encode, but encoding is incomplete */

ENCODED /* some output can be read from this codec */

};

Encoder();

virtual nsresult Init() = 0;

/* Let the Encoder set up its buffer length based on codec characteristics,

e.g. Stagefright video codecs may use only 1 buffer since the buffer may be shared between hardware components */

/* Mimic android::CameraParameter to collect backend codec-related params in a general class */

virtual CodecParams GetParams() = 0;

virtual nsresult SetParams(CodecParams) = 0;

/* Start the encoder; if the encoder has its own thread, create the thread here */

virtual nsresult Encode() = 0;

/* Read the encoded data from the encoder; check the state before attempting to read, otherwise an error is returned */

EncodingState GetCurrentState();

virtual nsresult GetEncodedData(MediaSegment& encData) = 0;

/* Codec-specific header describing its own type/version/etc. */

Metadata GetCodecHeader();

/* Force the encoder to output the currently available data */

/* XXX: this may be required to support MediaEncoder::Request, but may not be supported by all encoder backends */

virtual void Flush() = 0;

private:

MediaSegmentAdapter mQueue;

}

class AudioTrackEncoder : public Encoder {

public:

/* AudioCodec may need to collect enough buffers before encoding; return COLLECTING as needed */

virtual EncodingState Encode(MediaBuffer in, void* out, size_t length) = 0;

private:

bool IsDataEnough();

void* mLocalBuffer[MIN_FRAMES];

}

class VideoTrackEncoder : public Encoder {

public:

virtual EncodingState Encode(MediaBuffer in, void* out, size_t length) = 0;

}

class OpusEncoder : public AudioTrackEncoder {

// Use libopus to encode audio

private:

// libopus ctx, etc...

}

class TheoraEncoder : public VideoTrackEncoder {

// Use libtheora to encode video

private:

// The libtheora encoder is non-blocking, so we have to loop until the frame is complete

}

/**

* Generic base class for container writers

*

* Similar to MediaCodec; we separate container and codec for future extension.

*/

class MediaWriter {

public:

void AddTrack(Encoder);

/* Block until the container packet has been written */

nsTArray<char> GetPacket();

}

class OggWriter : public MediaWriter {

// Use libogg to write container

protected:

// libogg context and others

VideoTrackEncoder mVideoEncoder; // e.g. TheoraEncoder

AudioTrackEncoder mAudioEncoder; // e.g. OpusEncoder/VorbisEncoder

}
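The queue role that MediaSegmentAdapter plays between the MediaStreamGraph thread and the encoder thread could look roughly like the sketch below. This is only an illustration under stated assumptions: `Segment` and `SegmentQueue` are hypothetical stand-ins (not Gecko types), and dropping a segment when the queue is full is an assumed policy chosen so the producer never waits on a slow encoder; the draft above does not specify what happens on overflow.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Hypothetical stand-in for MediaSegment; the real type lives in Gecko.
struct Segment {
  int trackId;
  long startTicks;
};

// Sketch of a bounded, thread-safe queue: the producer (e.g. the
// MediaStreamGraph thread) appends, the consumer (encoder thread) dequeues.
class SegmentQueue {
public:
  explicit SegmentQueue(std::size_t maxLength) : mMaxLength(maxLength) {}

  // Assumed policy: return false and drop the segment when the queue is full.
  bool Append(Segment segment) {
    std::lock_guard<std::mutex> lock(mMutex);
    if (mBuffer.size() >= mMaxLength) {
      return false;
    }
    mBuffer.push_back(std::move(segment));
    return true;
  }

  // Copies the oldest segment into `out` and removes it from the queue.
  // Returns false, leaving `out` untouched, when the queue is empty.
  bool Dequeue(Segment& out) {
    std::lock_guard<std::mutex> lock(mMutex);
    if (mBuffer.empty()) {
      return false;
    }
    out = mBuffer.front();
    mBuffer.pop_front();
    return true;
  }

  std::size_t Length() const {
    std::lock_guard<std::mutex> lock(mMutex);
    return mBuffer.size();
  }

private:
  mutable std::mutex mMutex;  // guards mBuffer
  std::size_t mMaxLength;
  std::deque<Segment> mBuffer;
};
```

A single mutex keeps the sketch short; a real adapter would also have to decide whether to copy, convert, or share the underlying buffers, as the adapter classes above describe.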

Working flow

1. MediaEncoder creates MediaCodecs and a MediaWriter based on the request

2. MediaEncoder creates MediaSegmentAdapters from the MediaCodecs

3. MediaEncoder adds the MediaCodecs to the MediaWriter as tracks

4. MediaEncoder registers a callback with the MediaStream

5. The MediaStreamGraph thread calls back to MediaEncoder when the stream updates

6. MediaEncoder queues new MediaSegments into each MediaSegmentAdapter based on its type

7. MediaSegmentAdapter copies/enqueues/color-converts/etc. the data and queues it up

8. MediaEncoder posts a task to the encoder thread

9. The encoder thread asks MediaWriter for a packet

   - If MediaWriter::GetPacket is called for the first time

     - Get the codec-specific headers first, and write the header/metadata packet

   - Otherwise

     - MediaWriter asks the codecs for encoded data

     - MediaWriter writes packets

10. MediaEncoder calls onRecordingCallback with raw data or an nsArray

11. The MediaRecorder API posts the encoded data blob to the JavaScript layer

NOTE: Step 8 is not specified in this API
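The "header first, then data" behaviour of MediaWriter::GetPacket in the flow above can be sketched as follows. Everything here is a hypothetical simplification: `FakeEncoder` and `SketchWriter` are illustration-only names, packets are plain strings with made-up "HDR:"/"PKT:" prefixes rather than real Ogg pages, and the sketch returns an empty packet when nothing is ready instead of blocking as the draft's GetPacket does.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical encoder reduced to what the writer needs from it.
struct FakeEncoder {
  std::string header;                // codec-specific header
  std::vector<std::string> encoded;  // pending encoded chunks, oldest first

  bool HasData() const { return !encoded.empty(); }

  // Removes and returns the oldest encoded chunk; call only if HasData().
  std::string TakeData() {
    std::string out = encoded.front();
    encoded.erase(encoded.begin());
    return out;
  }
};

class SketchWriter {
public:
  void AddTrack(FakeEncoder* encoder) { mTracks.push_back(encoder); }

  // First call: emit one header packet built from every track's header.
  // Later calls: emit one packet from the first track that has data.
  // Returns an empty string when no track has data.
  std::string GetPacket() {
    if (!mHeaderWritten) {
      mHeaderWritten = true;
      std::string packet = "HDR:";
      for (const FakeEncoder* track : mTracks) {
        packet += track->header;
      }
      return packet;
    }
    for (FakeEncoder* track : mTracks) {
      if (track->HasData()) {
        return "PKT:" + track->TakeData();
      }
    }
    return std::string();
  }

private:
  std::vector<FakeEncoder*> mTracks;  // not owned
  bool mHeaderWritten = false;
};
```

Keeping the "header already written" flag inside the writer means the encoder thread can call GetPacket in a loop without tracking whether it is the first call.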

Test cases

- Record audio data from a media stream; the result can be played by an audio tag

- MediaRecorder state machine check
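For the state machine check, the transitions under test might be modelled as in the sketch below. The three state names follow the RecordingStateEnum values in the referenced spec (inactive, recording, paused); the transition rules are a simplified assumption, and `RecorderStateMachine` is a hypothetical name. Each method returns false where the real API would be expected to raise an exception.

```cpp
#include <cassert>

// State names mirror the spec's RecordingStateEnum values.
enum class RecordingState { Inactive, Recording, Paused };

// Simplified model: a method returns false when called in a state where
// this sketch treats the call as invalid, and leaves the state unchanged.
class RecorderStateMachine {
public:
  RecordingState State() const { return mState; }

  bool Start() {
    if (mState != RecordingState::Inactive) return false;
    mState = RecordingState::Recording;
    return true;
  }

  bool Stop() {
    if (mState == RecordingState::Inactive) return false;
    mState = RecordingState::Inactive;
    return true;
  }

  bool Pause() {
    if (mState != RecordingState::Recording) return false;
    mState = RecordingState::Paused;
    return true;
  }

  bool Resume() {
    if (mState != RecordingState::Paused) return false;
    mState = RecordingState::Recording;
    return true;
  }

private:
  RecordingState mState = RecordingState::Inactive;
};
```

A test for the real MediaRecorder would drive start(), pause(), resume() and stop() in the same orders and compare the state attribute after each call.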

Problems

- General codecs do not describe metadata

  - Codec type information has to be written by some other mechanism

- Some codecs need to collect enough data before producing output, so MediaCodec::GetEncodedData alone is not adequate

  - The writer should query the MediaCodec state before attempting to read data

- Some containers force stream interleaving, so we may need some sync mechanism

- Since the encoder may be pending, the EOS event may need some extra handling

=> Messaging-related details will be determined during the real implementation
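The "query the state before reading" point can be illustrated with a small sketch. The names `CollectingEncoder` and `kFrameSize` are hypothetical, the frame size of 4 samples is an arbitrary illustration value, and the "encoding" is just a pass-through of samples; the only thing the sketch tries to show is that a read attempted while the encoder is still collecting must fail cleanly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class EncodingState { Collecting, Encoded };

// Illustrative value only: samples needed before one frame can be output.
constexpr std::size_t kFrameSize = 4;

// Hypothetical audio encoder that buffers samples until a full frame exists.
class CollectingEncoder {
public:
  void Append(const std::vector<short>& samples) {
    mPending.insert(mPending.end(), samples.begin(), samples.end());
  }

  // The writer is expected to check this before calling GetEncodedData.
  EncodingState State() const {
    return mPending.size() >= kFrameSize ? EncodingState::Encoded
                                         : EncodingState::Collecting;
  }

  // Moves one frame's worth of samples into `out`. Returns false, leaving
  // `out` untouched, while the encoder is still collecting.
  bool GetEncodedData(std::vector<short>& out) {
    if (State() != EncodingState::Encoded) {
      return false;
    }
    out.assign(mPending.begin(), mPending.begin() + kFrameSize);
    mPending.erase(mPending.begin(), mPending.begin() + kFrameSize);
    return true;
  }

private:
  std::vector<short> mPending;  // samples not yet emitted
};
```

With this shape, the writer can skip a track that is still collecting and try again on the next encoding loop instead of treating the empty read as an error.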

Notes

- We will only implement the audio-related part at the current stage

- Some interactions between MediaEncoder and MediaRecorder are undetermined; the affected functions will not be implemented at this stage (marked with /*NOT IMPL*/)

References

libogg/libopus/libtheora: http://www.xiph.org/ogg/