Gecko:Overview

This document attempts to give an overview of the different parts of Gecko: what they do and why, where their code lives within the repository, and where to find more specific documentation (when available) covering the details of that code. It is not yet complete. Maintainers of these areas of code should correct errors, add information, and add links to more detailed documentation (since this document is intended to remain an overview, not complete documentation).

Docshell

The user of a Web browser can change the page shown in that browser in many ways: by clicking a link, loading a new URL, using the forward and back buttons, or other ways. This can happen inside a space we'll call a browsing context; this space can be a browser window, a tab, or a frame or iframe within a document. The toplevel data structures within Gecko represent this browsing context; they contain other data structures representing the individual pages displayed inside of it (most importantly, the current one). In terms of implementation, these two types of navigation, the top level of a browser and the frames within it, largely use the same data structures.

In Gecko, the docshell is the toplevel object responsible for managing a single browsing context. It, and the associated session history code, manage the navigation between pages inside of a docshell. (Note the difference between session history, which is a sequence of pages in a single browser session, used for recording information for back and forward navigation, and global history, which is the history of pages visited and associated times, regardless of browser session, used for things like link coloring and address autocompletion.)

There are relatively few objects in Gecko that are associated with a docshell rather than being associated with a particular one of the pages inside of it. Most such objects are attached to the docshell. An important object associated with the docshell is the nsGlobalWindowOuter which is what the HTML5 spec refers to as a WindowProxy (into which Window objects, as implemented by nsGlobalWindowInner, are loaded). See the DOM section below for more information on this.

The most toplevel object for managing the contents of a particular page being displayed within a docshell is a document viewer (see layout). Other important objects associated with this presentation are the document (see DOM) and the pres(entation) shell and pres(entation) context (see layout).

Docshells are organized into a tree. If a docshell has a non-null parent, then it corresponds to a subframe in whatever page is currently loaded in the parent docshell, and the corresponding subframe element (for example, an iframe) is called a browsing context container. In Gecko, a browsing context container would implement nsIFrameLoaderOwner to hold a frameloader, which holds and manages the docshell.

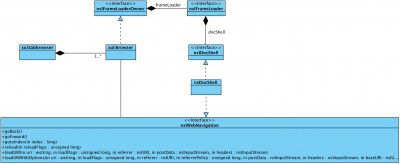

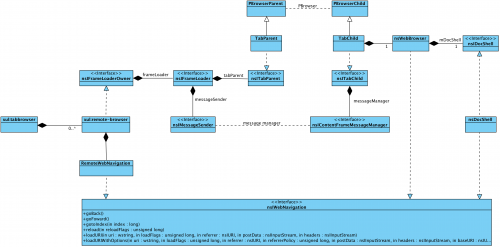

One of the most important interfaces that docshell implements is nsIWebNavigation. It defines the major functions of a browsing context, such as loadURI, goBack, goForward and reload. In the single-process configuration of desktop Firefox, <xul:browser> (which represents a tab) operates on the docshell through nsIWebNavigation, as shown in the right figure.

- code: mozilla/docshell/

- bugzilla: Core::Document Navigation

- documentation: DocShell:Home Page

Session History

In order to keep the session history of subframes after the root document is unloaded and docshells of subframes are destroyed, only the root docshell of a docshell tree manages the session history (this does not match the conceptual model in the HTML5 spec and may be subject to change).

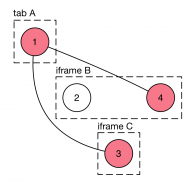

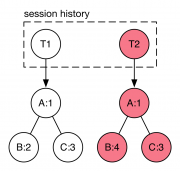

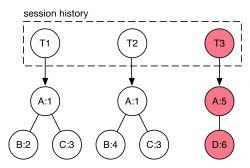

As an example to explain the structure of the session history implementation in Gecko, consider a tab A that loads document 1; document 1 contains iframes B and C, which load documents 2 and 3, respectively. Later, a user navigates iframe B to document 4. The following figures show the conceptual model of the example, and the corresponding session history structure.

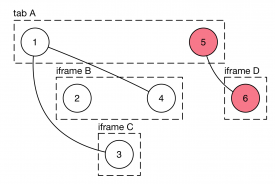

In Gecko, a session history object holds a list of transactions (denoted as T1 & T2 in the figure); each transaction points to a tree of entries; each entry records the docshell it is associated with, and a URL. Now, if we navigate the tab to a different root document 5, which includes an iframe D with document 6, then it becomes:
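As a rough illustration of the structure described in this section, the following Python sketch models transactions that each point to a tree of entries. It is a toy model, not Gecko code: the names `Entry`, `SessionHistory`, `add_transaction` and `flatten` are invented for this example, and details such as whether unchanged entries are shared between trees are not modelled.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Entry:
    """One session history entry: the docshell it belongs to, and a URL."""
    docshell: str
    url: str
    children: List["Entry"] = field(default_factory=list)


@dataclass
class SessionHistory:
    """Held by the root docshell only; each transaction points to an entry tree."""
    transactions: List[Entry] = field(default_factory=list)

    def add_transaction(self, root: Entry) -> None:
        self.transactions.append(root)


def flatten(entry: Entry) -> List[Tuple[str, str]]:
    """Return the (docshell, url) pairs of an entry tree in depth-first order."""
    pairs = [(entry.docshell, entry.url)]
    for child in entry.children:
        pairs.extend(flatten(child))
    return pairs


history = SessionHistory()
# T1: tab A shows document 1, with iframes B and C showing documents 2 and 3.
history.add_transaction(Entry("A", "doc1", [Entry("B", "doc2"), Entry("C", "doc3")]))
# T2: the user navigates iframe B to document 4; A and C are unchanged.
history.add_transaction(Entry("A", "doc1", [Entry("B", "doc4"), Entry("C", "doc3")]))
# Next: the tab navigates to root document 5, which contains iframe D with document 6.
history.add_transaction(Entry("A", "doc5", [Entry("D", "doc6")]))
```

Keeping the whole tree in each transaction is what lets the root docshell restore subframe state even after the subframe docshells have been destroyed.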

Embedding

To be written (and maybe rewritten if we get an IPC embedding API).

Multi-process and IPC

In a multi-process desktop Firefox, a tab is managed by <xul:remote-browser>. It still operates on nsIWebNavigation, but in this case the docshell is in a remote process and cannot be accessed directly. The encapsulation of the remote nsIWebNavigation is done by the JavaScript-implemented RemoteWebNavigation.

In this configuration, the frameloader of a root docshell lives in the parent process, so it cannot access the docshell directly either. Instead, it holds a TabParent instance to interact with TabChild in the child process. At the C++ level, the communication across processes for a single tab goes through the PBrowser IPC protocol implemented by TabParent and TabChild, while at the JavaScript level it is done by the message manager (which is built on top of PBrowser). RemoteWebNavigation, for example, sends messages to browser-child.js in the content process through the message manager.

Networking

The network library Gecko uses is called Necko. Necko APIs are largely organized around three concepts: URI objects, protocol handlers, and channels.

Protocol handlers

A protocol handler is an XPCOM service associated with a particular URI scheme or network protocol. Necko includes protocol handlers for HTTP, FTP, the data: URI scheme, and various others. Extensions can implement protocol handlers of their own.

A protocol handler implements the nsIProtocolHandler API, which serves three primary purposes:

- Providing metadata about the protocol (its security characteristics, whether it requires actual network access, what the corresponding URI scheme is, what TCP port the protocol uses by default).

- Creating URI objects for the protocol's scheme.

- Creating channel objects for the protocol's URI objects

Typically, the built-in I/O service (nsIIOService) is responsible for finding the right protocol handler for URI object creation and channel creation, while a variety of consumers query protocol metadata. Querying protocol metadata is the recommended way to handle any sort of code that needs to have different behavior for different URI schemes. In particular, unlike whitelists or blacklists, it correctly handles the addition of new protocols.

A service can register itself as a protocol handler by registering for the contract ID "@mozilla.org/network/protocol;1?name=SSSS" where SSSS is the URI scheme for the protocol (e.g. "http", "ftp", and so forth).
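To make the contract ID convention concrete, here is a small Python sketch that builds the ID for a scheme and uses it as a lookup key. Only the contract ID format comes from the text above; `ToyHandlerRegistry` and its methods are hypothetical stand-ins for the XPCOM component registry and the I/O service, which work differently in practice.

```python
CONTRACT_ID_PREFIX = "@mozilla.org/network/protocol;1?name="


def protocol_contract_id(scheme: str) -> str:
    """Build the contract ID under which a handler for `scheme` is registered."""
    return CONTRACT_ID_PREFIX + scheme


class ToyHandlerRegistry:
    """Hypothetical stand-in for the component registry (not a Gecko API)."""

    def __init__(self):
        self._handlers = {}

    def register(self, scheme: str, handler: object) -> None:
        self._handlers[protocol_contract_id(scheme)] = handler

    def handler_for(self, spec: str):
        # Pick the handler from the URI scheme, as the I/O service is described doing.
        scheme = spec.split(":", 1)[0].lower()
        return self._handlers.get(protocol_contract_id(scheme))


registry = ToyHandlerRegistry()
registry.register("http", "http-handler")
registry.register("data", "data-handler")
```

Because lookup is keyed on the scheme alone, adding a handler for a new scheme needs no changes to the code that dispatches to handlers.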

URI objects

URI objects, which implement the nsIURI API, are a way of representing URIs and IRIs. Their main advantage over strings is that they do basic syntax checking and canonicalization on the URI string that they're provided with. They also provide various accessors to extract particular parts of the URI and provide URI equality comparisons. URIs that correspond to hierarchical schemes implement the additional nsIURL interface, which exposes even more accessors for breaking out parts of the URI.

URI objects are typically created by calling the newURI method on the I/O service, or in C++ by calling the NS_NewURI utility function. This makes sure to create the URI object using the right protocol handler, which ensures that the right kind of object is created. Direct creation of URIs via createInstance is reserved for protocol handler implementations.
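The advantage of URI objects over strings can be illustrated by analogy with Python's standard `urllib.parse` module. This is not nsIURI and its normalization rules differ from Necko's; the sketch only shows the general idea of canonicalization, accessors, and equality on parsed parts. The helper `same_uri` and the example URLs are made up for this illustration.

```python
from urllib.parse import urlsplit

parts = urlsplit("HTTP://Example.COM:8080/docs/index.html?lang=en#intro")

# Accessors break the string into components; scheme and host are lowercased.
scheme = parts.scheme      # "http"
host = parts.hostname      # "example.com"
port = parts.port          # 8080
path = parts.path          # "/docs/index.html"
query = parts.query        # "lang=en"
fragment = parts.fragment  # "intro"


def same_uri(a: str, b: str) -> bool:
    """Compare two URI strings by their parsed components, not their spelling."""
    def key(spec: str):
        p = urlsplit(spec)
        return (p.scheme, p.hostname, p.port, p.path, p.query, p.fragment)
    return key(a) == key(b)
```

Two strings that differ only in the case of the scheme or host compare equal once parsed, which a plain string comparison would miss.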

Channels

Channels are the Necko representation of a single request/response interaction with a server. A channel is created by calling the newChannel method on the I/O service, or in C++ by calling the NS_NewChannel utility function. The channel can then be configured as needed, and finally its asyncOpen method can be called. This method takes an nsIStreamListener as an argument.

If asyncOpen has returned successfully, the channel guarantees that it will asynchronously call the onStartRequest and onStopRequest methods on its stream listener. This will happen even if there are network errors that prevent Necko from actually performing the requests. Such errors will be reported in the channel's status and in the status argument to onStopRequest.

If the channel ends up being able to provide data, it will make one or more onDataAvailable calls on its listener after calling onStartRequest and before calling onStopRequest. For each call, the listener is responsible for either returning an error or reading the entire data stream passed in to the call.

If an error is returned from either onStartRequest or onDataAvailable, the channel must act as if it has been canceled with the corresponding error code.
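The callback contract described above can be sketched as a toy model. The classes `ToyChannel` and `RecordingListener` are invented for this example, callbacks are delivered synchronously for brevity (a real channel delivers them asynchronously), and statuses are plain strings rather than real nsresult values.

```python
NS_OK = "NS_OK"  # statuses are plain strings here, not real nsresult values


class RecordingListener:
    """Records which callbacks it receives; optionally fails in onDataAvailable."""

    def __init__(self, fail_on_data=False):
        self.calls = []
        self.fail_on_data = fail_on_data

    def on_start_request(self):
        self.calls.append("onStartRequest")
        return NS_OK

    def on_data_available(self, chunk):
        self.calls.append("onDataAvailable")
        return "NS_ERROR_FAILURE" if self.fail_on_data else NS_OK

    def on_stop_request(self, status):
        self.calls.append(("onStopRequest", status))


class ToyChannel:
    def __init__(self, chunks, network_error=None):
        self.chunks = list(chunks)
        self.status = network_error or NS_OK

    def async_open(self, listener):
        # onStartRequest and onStopRequest are always called, even on network errors.
        rv = listener.on_start_request()
        if self.status == NS_OK and rv != NS_OK:
            self.status = rv
        for chunk in self.chunks:
            if self.status != NS_OK:
                break  # failed or canceled: deliver no (more) data
            rv = listener.on_data_available(chunk)
            if rv != NS_OK:
                self.status = rv  # listener error acts like a cancel with that code
        listener.on_stop_request(self.status)
```

A listener can therefore do its cleanup in onStopRequest unconditionally and inspect the status argument to learn whether the load succeeded.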

A channel has two URI objects associated with it. The originalURI of the channel is the URI that was originally passed to newChannel to create the channel that then had asyncOpen called on it. The URI is the URI from which the channel is reading data. These can be different in various cases involving protocol handlers that forward network access to other protocol handlers, as well as in situations in which a redirect occurs (e.g. following an HTTP 3xx response). In redirect situations, a new channel object will be created, but the originalURI will be propagated from the old channel to the new channel.

Note that the nsIRequest that's passed to onStartRequest must match the one passed to onDataAvailable and onStopRequest, but need not be the original channel that asyncOpen was called on. In particular, when an HTTP redirect happens the request argument to the callbacks will be the post-redirect channel.
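The relationship between the two URIs across a redirect can be sketched as follows. `RedirectableChannel` and `redirect` are hypothetical names used only for this illustration, and the example URLs are made up.

```python
class RedirectableChannel:
    """Toy channel carrying the two URIs described above (not a Necko class)."""

    def __init__(self, uri, original_uri=None):
        self.uri = uri  # where data is actually read from
        # For a freshly created channel, both URIs start out the same.
        self.original_uri = uri if original_uri is None else original_uri


def redirect(old_channel, new_uri):
    """A redirect creates a new channel but propagates the originalURI."""
    return RedirectableChannel(new_uri, original_uri=old_channel.original_uri)


first = RedirectableChannel("http://example.com/old")
second = redirect(first, "http://example.com/new")
third = redirect(second, "http://example.com/newest")
```

However many redirects occur, the originalURI of the final channel still names what the caller asked for, while its URI names where the data came from.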

TODO: crypto?

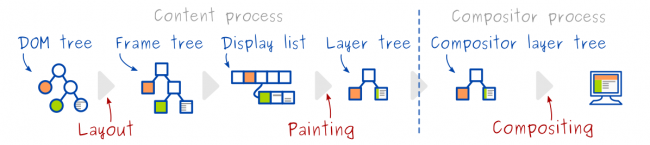

Document rendering pipeline

Some of the major components of Gecko can be described as steps on the path from an HTML document coming in from the network to the graphics commands needed to render that document. An HTML document is a serialization of a tree structure. (FIXME: add diagram) The HTML parser and content sink create an in-memory representation of this tree, which we call the DOM tree or content tree. Many JavaScript APIs operate on the content tree. Then, in layout, we create a second tree, the frame tree (or rendering tree) that is a similar shape to the content tree, but where each node in the tree represents a rectangle (except in SVG where they represent other shapes). We then compute the positions of the nodes in the frame tree (called frames) and paint them using our cross-platform graphics APIs (which, underneath, map to platform-specific graphics APIs).

Further documentation:

- Talk: Fast CSS: How Browsers Lay Out Web Pages (David Baron, 2012-03-11): slideshow, all slides, audio (MP3), session page

- Talk: Efficient CSS Animations (an updated version of the previous talk with a slightly different focus) (David Baron, 2014-06-04): slideshow, all slides, video

- Talk: Overview of the Gecko Rendering Pipeline (Benoit Girard, 2014-10-14)

Parser

The parser's job is to transform a character stream into a tree structure, with the help of the content sink classes.
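The division of labour between a parser and a content sink can be sketched with Python's standard `html.parser` module: the tokenizer reports events, and a separate sink object builds the tree. This is only an analogy. It does not implement the HTML specification's tree construction algorithm (for example, it has no special handling for void elements or misnested tags), and the class names `Node`, `TreeSink` and `Tokenizer` are invented for this example.

```python
from html.parser import HTMLParser


class Node:
    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []
        if parent is not None:
            parent.children.append(self)


class TreeSink:
    """Receives parser events and builds the tree (the 'content sink' role)."""

    def __init__(self):
        self.root = Node("#document")
        self.current = self.root

    def open_element(self, tag):
        self.current = Node(tag, self.current)

    def close_element(self, tag):
        # Naive: assumes well-nested markup and just pops one level.
        if self.current.parent is not None:
            self.current = self.current.parent

    def text(self, data):
        if data.strip():
            Node("#text:" + data.strip(), self.current)


class Tokenizer(HTMLParser):
    """Turns the character stream into events and forwards them to the sink."""

    def __init__(self, sink):
        super().__init__()
        self.sink = sink

    def handle_starttag(self, tag, attrs):
        self.sink.open_element(tag)

    def handle_endtag(self, tag):
        self.sink.close_element(tag)

    def handle_data(self, data):
        self.sink.text(data)


def parse(markup):
    sink = TreeSink()
    tokenizer = Tokenizer(sink)
    tokenizer.feed(markup)
    tokenizer.close()
    return sink.root


def outline(node, depth=0):
    """Return the tree as a list of indented lines, one per node."""
    lines = ["  " * depth + node.name]
    for child in node.children:
        lines.extend(outline(child, depth + 1))
    return lines
```

For example, `outline(parse("<p>Hello <b>world</b></p>"))` yields one line per node, with the `b` element and its text nested under `p`.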

HTML is parsed using a parser implementing the parsing algorithm in the HTML specification (starting with HTML5). Much of this parser is translated from Java, and changes are made to the Java version. This parser is in parser/html/. The parser is driven by the output of the networking layer (see nsHtml5StreamParser::OnDataAvailable). The HTML5 parser is capable of parsing off the main thread, which is the normal case. It can also parse on the main thread in order to synchronously handle things such as innerHTML modifications.

The codebase still has the previous generation HTML parser, which is still used for a small number of things, though we hope to be able to remove it entirely soon. This parser is in parser/htmlparser/.

XML is parsed using the expat library (parser/expat/) and code that wraps it (parser/xml/). This is a non-validating parser; however, it loads certain DTDs to support XUL localization.

DOM / Content

The content tree or DOM tree is the central data structure for Web pages. It is a tree structure, initially created from the tree structure expressed in the HTML or XML markup. The nodes in the tree implement major parts of the DOM (Document Object Model) specifications. The nodes themselves are part of a class hierarchy rooted at nsINode; different derived classes are used for things such as text nodes, the document itself, HTML elements, SVG elements, etc., with further subclasses of many of these types (e.g., for specific HTML elements). Many of the APIs available to script running in Web pages are associated with these nodes. The tree structure persists while the Web page is displayed, since it stores much of the state associated with the Web page. The code for these nodes lives in the content/ directory.

The DOM APIs are not threadsafe. DOM nodes can be accessed only from the main thread (also known as the UI thread (user interface thread)) of the application.

There are also many other APIs available to Web pages that are not APIs on the nodes in the DOM tree. Many of these other APIs also live in the same directories, though some live in content/ and some in dom/. These include APIs such as the DOM event model.

The dom/ directory also includes some of the code needed to expose Web APIs to JavaScript (in other words, the glue code between JavaScript and these APIs). For an overview, see DOM API Implementation. See Scripting below for details of the JS engine.

TODO: Internal APIs vs. DOM APIs.

TODO: Mutation observers / document observers.

TODO: Reference counting and cycle collection.

TODO: specification links

Style System

The style system section has been moved to https://firefox-source-docs.mozilla.org/layout/StyleSystemOverview.html

Layout

The layout section has been moved to https://firefox-source-docs.mozilla.org/layout/LayoutOverview.html

Dynamic change handling along the rendering pipeline

The dynamic change handling section has been moved to https://firefox-source-docs.mozilla.org/layout/DynamicChangeHandling.html

Refresh driver

Graphics

Further documentation:

- Jargon: Helpful list of terms used in Graphics

- Talk: 2018 SF All-Hands Graphics Overview (nical/mattwoodrow/gw)

- Talk: Introduction to graphics/layout architecture (Robert O'Callahan, 2014-04-18)

- Talk: An overview of Gecko's graphics stack (Benoit Jacob, 2014-08-12)

- Talk: 2D Graphics (Jeff Muizelaar, 2014-10-14)

- Blog: Mozilla gfx blog's "technical" tag.

- Blog: WebRender/gfx newsletter.

WebRender vs Layers

Until recently, there were two different rendering paths in Gecko. The new one is WebRender. "Layers" is the name of the previous / non-WebRender architecture, and was removed in bug 1541472.

Here's a very high level summary of how it works: [1]

From a high level architectural point of view, the main differences are:

- Layers separate rendering into a painting phase and a compositing phase which usually happen in separate processes. For WebRender, on the other hand, all of the rendering happens in a single operation.

- Layers internally expresses rendering commands through a traditional canvas-like immediate mode abstraction (Moz2D) which has several backends (some of which use the GPU). WebRender works directly at a lower and GPU-centric level, dealing in terms of batches, draw calls and shaders.

- Layers code is entirely C++, while WebRender is mostly Rust with some integration glue in C++.

WebRender

- WebRender's main entry point is a display list. It is a description of a visible subset of the page produced by the layout module.

- WebRender and Gecko's display list representations are currently different so there is a translation between the two.

- The WebRender display list is serialized on the content process and sent to the process that will do the rendering (either the main process or the GPU process).

- The rendering process deserializes the display list and builds a "Scene".

- From this scene, frames can be built. Frames represent the actual drawing operations that need to be performed for content to appear on screen. A single scene can produce several frames, for example if an element of the scene is scrolled.

- The Renderer consumes the frame and produces actual OpenGL drawing commands. Currently WebRender is based on OpenGL, and on Windows the OpenGL commands are transparently turned into D3D ones using ANGLE. In the long run we plan to work with a Vulkan-like abstraction called gfx-rs.

The main phases in WebRender are therefore:

- Display list building

- Scene building

- Frame building

- GPU commands execution

All of these phases are performed on different threads. The first two happen whenever the layout of the page changes or the user scrolls past the part of the page covered by the current scene. They don't necessarily happen at a high frequency. Frame building and GPU command execution, on the other hand, happen at a high frequency (the monitor's refresh rate during scrolling and animations), which means that they must fit in the frame budget (typically 16ms). In order to avoid going over the frame budget, frame building and GPU command execution can overlap. Scene building and frame building can also overlap, and it is possible to continue generating frames while a new scene is being built asynchronously.
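The frame budget and the benefit of overlapping phases come down to simple arithmetic, sketched below. The model is idealized (perfect pipelining with no synchronization cost), the function names are invented for this example, and the stage timings of 9 ms and 10 ms are made-up numbers, not measurements.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given monitor refresh rate."""
    return 1000.0 / refresh_hz


def sequential_interval_ms(stage_times_ms) -> float:
    """Time between frames if each stage waits for the previous one to finish."""
    return sum(stage_times_ms)


def pipelined_interval_ms(stage_times_ms) -> float:
    """Time between frames if stages overlap: the slowest stage sets the pace."""
    return max(stage_times_ms)


budget = frame_budget_ms(60)  # about 16.67 ms at 60 Hz
stages = [9.0, 10.0]          # hypothetical frame building and GPU execution times

fits_sequentially = sequential_interval_ms(stages) <= budget
fits_when_overlapped = pipelined_interval_ms(stages) <= budget
```

With these numbers, running the two stages back to back takes 19 ms and misses the 60 Hz budget, while overlapping them produces a frame every 10 ms, at the cost of each frame taking longer from start to finish.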

WebRender has a fallback mechanism called "Blob images" for content that it does not handle (for example some SVG drawing primitives). It consists of recording and serializing a list of drawing commands (the "blob") on the content process and sending it to WebRender along with the regular display list. Blobs are then turned into images on the CPU during the scene building phase. Most of WebRender treats blob images as regular images.

Some important internal operations and data structures:

- The clip-scroll tree (TODO)

- The render task graph (TODO)

- Culling: https://mozillagfx.wordpress.com/2018/11/08/webrender-culling/

- Batching: https://mozillagfx.wordpress.com/2018/11/21/webrender-batching/

- Picture-caching: https://mozillagfx.wordpress.com/2018/11/02/webrender-picture-caching/

The WebRender code path reuses the layers IPC infrastructure for sharing textures between the content process and the renderer. For example, the ImageBridge protocol described in the Compositing section is also used to transfer video frames when WebRender is enabled. Asynchronous Panning and Zooming (APZ), described in a later section, is also relevant to WebRender.

Painting/Rasterizing (Layers aka Non-WebRender)

Painting/rendering/rasterizing is the step where graphical primitives (such as a command to fill an SVG circle, or the internally produced command to draw a rounded rectangle) are used to color in the pixels of a surface so that the surface "displays" those rasterized primitives.

The platform-independent library that Gecko uses to render is Moz2D. Gecko code paints into Moz2D DrawTarget objects (the DrawTarget base class having multiple platform-dependent subclasses).

Compositing

The compositing stage of the rendering pipeline is operated by the gfx/layers module.

Different parts of a web page can sometimes be painted into intermediate surfaces retained by layers. It can be convenient to think of layers as the layers in image manipulation programs like The Gimp or Photoshop. Layers are organized as a tree. Layers are primarily used to minimize invalidating and repainting when elements are being animated independently of one another in a way that can be optimized using layers (e.g. opacity or transform animations), and to enable some effects (such as transparency and 3D transforms).

Compositing is the action of flattening the layers into the final image that is shown on the screen.
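As an illustration of what "flattening" means for a single pixel, the sketch below applies the standard Porter-Duff "source over" operator to a stack of layers from bottom to top. It is a CPU-side toy: the real compositor works on whole textures, on a layer tree rather than a flat list, and typically on the GPU. The function names and the colour values are chosen for this example only.

```python
def over(src, dst):
    """Porter-Duff 'source over' for premultiplied (r, g, b, a) values in 0..1."""
    inverse_alpha = 1.0 - src[3]
    return tuple(s + d * inverse_alpha for s, d in zip(src, dst))


def composite(layers_bottom_to_top, background=(0.0, 0.0, 0.0, 0.0)):
    """Flatten a stack of (pixel, opacity) layers into one final pixel."""
    result = background
    for pixel, opacity in layers_bottom_to_top:
        # Layer opacity scales all premultiplied channels, including alpha.
        faded = tuple(channel * opacity for channel in pixel)
        result = over(faded, result)
    return result


white = (1.0, 1.0, 1.0, 1.0)
red = (1.0, 0.0, 0.0, 1.0)
# An opaque white layer with a half-transparent red layer on top of it.
final_pixel = composite([(white, 1.0), (red, 0.5)])
```

Because opacity is applied at composite time, animating it only requires recompositing with a new value; the layer's retained surface does not need to be repainted.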

We paint and composite on separate threads (it is called Off-main-thread compositing, or OMTC, and a long time ago Firefox did not have this separation).

The Layers architecture is built on the following notions:

- Compositor: an object that can draw quads on the screen (or on an off-screen render target).

- Texture: an object that contains image data.

- Compositable: an object that can manipulate one or several textures, and knows how to present them to the compositor

- Layer: an element of the layer tree. A layer usually doesn't know much about compositing: it uses a compositable to do the work. Layers are mostly nodes of the layer tree.

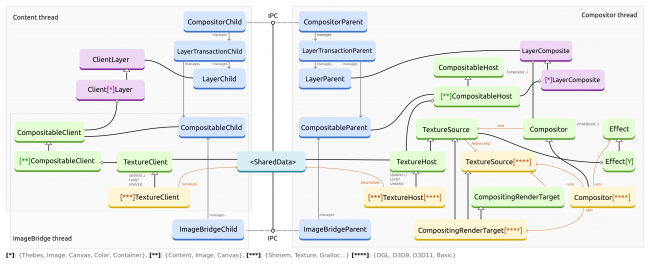

Since painting and compositing are performed on different threads/processes, we need a mechanism to synchronize a client layer tree that is constructed on the content thread, and a host layer tree that is used for compositing on the compositor thread. This synchronization process must ensure that the host layer tree reflects the state of the current layer tree while remaining in a consistent state, and must transfer texture data from a thread to the other. Moreover, on some platforms the content and compositor threads may live in separate processes.

To perform this synchronization, we use the IPDL IPC framework. IPDL lets us describe communication protocols and actors in a domain-specific language. For more information about IPDL, read https://wiki.mozilla.org/IPDL

When the client layer tree is modified, we record modifications into messages that are sent together to the host side within a transaction. A transaction is basically a list of modifications to the layer tree that have to be applied all at once to preserve consistency in the state of the host layer tree.
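The all-at-once property of a transaction can be sketched as follows. This toy host applies a list of edits to a staged copy and only swaps it in once every edit has succeeded. The class `HostLayerTree` and the edit tuples ("create", "set", "remove") are hypothetical; real layer transactions are IPDL messages with a different shape.

```python
import copy


class HostLayerTree:
    """Toy host-side state: layer id -> dict of properties (not a Gecko class)."""

    def __init__(self):
        self.layers = {}

    def apply_transaction(self, edits):
        # Work on a copy so a half-applied transaction is never observable.
        staged = copy.deepcopy(self.layers)
        for edit in edits:
            op = edit[0]
            if op == "create":
                staged[edit[1]] = {}
            elif op == "set":
                _, layer_id, key, value = edit
                staged[layer_id][key] = value  # KeyError if the layer is unknown
            elif op == "remove":
                del staged[edit[1]]
            else:
                raise ValueError("unknown edit: %r" % (op,))
        # Swap in the new state only after every edit has been applied.
        self.layers = staged


host = HostLayerTree()
host.apply_transaction([("create", 1), ("set", 1, "opacity", 0.5)])
```

If any edit in a transaction fails, the host tree keeps its previous consistent state instead of reflecting only part of the client's changes.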

Texture transfer is done by synchronizing texture objects across processes/threads using a TextureClient/TextureHost pair that wraps shared data and provides access to it on both sides. TextureHosts provide access to one or several TextureSources, which have the necessary API to actually composite the texture (TextureClient/Host being more about IPC synchronization than actual compositing). The logic behind texture transfer (as in single/double/triple buffering, texture tiling, etc) is operated by the CompositableClient and CompositableHost.

It is important to understand the separation between layers and compositables. Compositables handle all the logic around texture transfer, while layers define the shape of the layer tree. A compositable is created independently from a layer and attached to it on both sides. While layer transactions can only originate from the content thread, this separation makes it possible for us to have separate compositable transactions between any thread and the compositor thread. We use the ImageBridge IPDL protocol to that end. The idea of ImageBridge is to create a compositable that is manipulated on the ImageBridgeThread, and that can transfer/synchronize textures without using the content thread at all. This is very useful for smooth video compositing: video frames are decoded and passed into the ImageBridge without ever touching the content thread, which could be busy processing reflows or heavy JavaScript workloads.

It is worth reading the inline code documentation in the following files:

- gfx/layers/Compositor.h

- gfx/layers/ShadowLayers.h

- gfx/layers/Layers.h

- gfx/layers/host/TextureHost.h

- gfx/layers/client/CompositableClient.h

To visualize how a web page is layered, turn on the pref "layers.draw-borders" in about:config. When this pref is on, the borders of layers and tiles are displayed on top of the content. This only works when using the Compositor API, that is when WebRender is disabled, since WebRender does not have the same concept of layers.

Blog posts with information on Layers that should be integrated here:

- Layers: Cross-Platform Acceleration

- Layers

- Retained Layers

- Shadow Layers, and learning by failing

- Accelerated layer-rendering, and learning by (some) success

- Mask Layers

- Building a mask layer

- Mask Layers on the Direct3D backends

- Graphics API Design

Async Panning and Zooming

On some of our mobile platforms we have some code that allows the user to do asynchronous (i.e. off-main-thread) panning and zooming of content. This code is the Async Pan/Zoom module (APZ) code and is further documented at Platform/GFX/APZ.

Scripting

JavaScript Engine

Gecko embeds the SpiderMonkey JavaScript engine (located in js/src). The JS engine includes a number of Just-In-Time compilers (or JITs). SpiderMonkey provides a powerful and extensive embedding API (called the JSAPI). Because of its complexity, using the JSAPI directly is frowned upon, and a number of abstractions have been built in Gecko to allow interacting with the JS engine without using JSAPI directly. Some JSAPI concepts are important for Gecko developers to understand, and they are listed below:

- Global object: The global object is the object on which all global variables/properties/methods live. In a web page this is the 'window' object. In other things such as XPCOM components or sandboxes XPConnect creates a global object with a small number of builtin APIs.

- Compartments: A compartment is a subdivision of the JS heap. The mapping from global objects to compartments is one-to-one; that is, every global object has a compartment associated with it, and no compartment is associated with more than one global object. (NB: There are compartments associated with no global object, but they aren't very interesting). When JS code is running SpiderMonkey has a concept of a "current" compartment. New objects that are created will be created in the "current" compartment and associated with the global object of that compartment.

- When objects in one compartment can see objects in another compartment (via e.g. iframe.contentWindow.foo) cross-compartment wrappers or CCWs mediate the interaction between the two compartments. By default CCWs ensure that the current compartment is kept up-to-date when execution crosses a compartment boundary. SpiderMonkey also allows embeddings to extend wrappers with custom behavior to implement security policies, which Gecko uses extensively (see the Security section for more information).

Further documentation:

- Talk: Baseline JIT (Kannan Vijayan, 2014-10-14)

XPConnect

XPConnect is a bridge between C++ and JS used by XPCOM components exposed to or implemented in JavaScript. XPConnect allows two XPCOM components to talk to each other without knowing or caring which language they are implemented in. XPConnect allows JS code to call XPCOM components by exposing XPCOM interfaces as JS objects, and mapping function calls and attributes from JS into virtual method calls on the underlying C++ object. It also allows JS code to implement an XPCOM component that can be called from C++ by faking the vtable of an interface and translating calls on the interface into JS calls. XPConnect accomplishes this using the vtable data stored in XPCOM TypeLibs (or XPT files) by the XPIDL compiler and a small layer of platform specific code called xptcall that knows how to interact with and impersonate vtables of XPCOM interfaces.

XPConnect is also used for a small (and decreasing) number of legacy DOM objects. At one time all of the DOM used XPConnect to talk to JS, but we have been replacing XPConnect with the WebIDL bindings (see the next section for more information). Arbitrary XPCOM components are not exposed to web content. XPCOM components that want to be accessible to web content must provide class info (that is, they must QI to nsIClassInfo) and must be marked with the nsIClassInfo::DOM_OBJECT flag. Most of these objects also are listed in dom/base/nsDOMClassInfo.cpp, where the interfaces that should be visible to the web are enumerated. This file also houses some "scriptable helpers" (classes ending in "SH" and implementing nsIXPCScriptable), which are a set of optional hooks that can be used by XPConnect objects to implement more exotic behaviors (such as indexed getters or setters, lazily resolved properties, etc). This is all legacy code, and any new DOM code should use the WebIDL bindings.

WebIDL Bindings

The WebIDL bindings are a separate bridge between C++ and JS that is specifically designed for use by DOM code. We intend to replace all uses of XPConnect to interface with content JavaScript with the WebIDL bindings. Extensive documentation on the WebIDL bindings is available, but the basic idea is that a binding generator takes WebIDL files from web standards and some configuration data provided by us and generates at build time C++ glue code that sits between the JS engine and the DOM object. This setup allows us to produce faster, more specialized code for better performance at the cost of increased codesize.

The WebIDL bindings also implement all of the behavior specified in WebIDL, making it possible for new additions to the DOM to behave correctly "out of the box". The binding layer also provides C++ abstractions for types that would otherwise require direct JSAPI calls to handle, such as typed arrays.

Reflectors

Whether using XPConnect or Web IDL, the basic idea is that there is a JSObject called a reflector that represents some C++ object. The JSObject ensures that the C++ object remains alive while the JSObject is alive. The C++ object does not necessarily keep the JSObject alive; this allows the JSObject to be garbage-collected as needed. If this happens, the next time JS needs access to that C++ object a new reflector is created. If something happens that requires that the actual object identity of the JSObject be preserved even though it's not explicitly reachable, the C++ object will start keeping the JSObject alive as well. After this point, the cycle collector would be the only way for the pair of objects to be deallocated. Examples of operations that require identity-preservation are adding a property to the object, giving the object a non-default prototype, using the object as a weakmap key or weakset member.
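The ownership pattern described above (strong reference from reflector to native object, weak reference back until identity must be preserved) can be modelled with Python's `weakref` module. This is an analogy only: `Native`, `Reflector`, `preserve_reflector` and `set_expando` are names invented for this sketch, and Python's garbage collector stands in for both the JS GC and the cycle collector.

```python
import gc
import weakref


class Reflector:
    """Stands in for the JSObject that represents a native object."""

    def __init__(self, native):
        self.native = native  # strong: the reflector keeps the native object alive
        self.expandos = {}


class Native:
    """Stands in for the C++ object."""

    def __init__(self):
        self._weak_reflector = None  # weak by default: reflector may be collected
        self._preserved = None       # strong reference, set only when needed

    def get_reflector(self):
        reflector = None
        if self._weak_reflector is not None:
            reflector = self._weak_reflector()
        if reflector is None:
            # No live reflector: create a fresh one on demand.
            reflector = Reflector(self)
            self._weak_reflector = weakref.ref(reflector)
        return reflector

    def preserve_reflector(self):
        # From now on the pair forms a cycle that only a cycle collector can free.
        self._preserved = self.get_reflector()


def set_expando(native, name, value):
    """Adding a property requires the reflector's identity to be preserved."""
    native.preserve_reflector()
    native.get_reflector().expandos[name] = value
```

Before preservation, dropping the last outside reference lets the reflector go away and a later `get_reflector()` call returns a new one; after `set_expando`, the same reflector, with its added property, is returned every time.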

In the XPConnect case, reflectors are created by XPCWrappedNative::GetNewOrUsed. This finds the right JSClass to use for the object, depending on information provided by the C++ object via the nsIClassInfo interface about special class hooks it might need. If the C++ object has no classinfo, XPC_WN_NoHelper_JSClass is used. GetNewOrUsed also determines the right global to use for the reflector, again based on nsIClassInfo information, with a fallback to the JSContext's current global.

In the Web IDL case, reflectors are created by the Wrap method that the binding code generator spits out for the relevant Web IDL interface. So for the Document interface, the relevant method is mozilla::dom::Document_Binding::Wrap. The JSClass that is used is also created by the code generator, as part of the DOMJSClass struct it outputs. Web IDL objects provide a GetParentObject() method that is used to determine the right global to use for the reflector.

Web IDL objects typically inherit from nsWrapperCache, and use that class to store a pointer to their reflector and to keep it alive as needed. The only exception to that is if the Web IDL object only needs to find a reflector once during its lifetime, typically as a result of a Web IDL constructor being called from JS (see TextEncoder for an example). In that case, there is no need to get the JS object from the C++ one, and no way to end up in any of the situations that require preserving the JS object from the C++ side, so the Web IDL object is allowed to not inherit from nsWrapperCache.

Security

Gecko's JS security model is based on the concept of compartments. Every compartment has a principal associated with it that contains security information such as the origin of the compartment, whether or not it has system privileges, etc. For the following discussion, wrappee refers to the underlying object being wrapped, scope refers to the compartment the object is being wrapped for use in, and wrapper refers to the object created in scope that allows it to interact with wrappee. "Chrome" refers to JS that is built in to the browser or part of an extension, which runs with full privileges, and is contrasted with "content", which is web provided script that is subject to security restrictions and the same origin policy.

- Xray wrappers: Xray wrappers are used when the scope has chrome privileges and the wrappee is the JS reflection of an underlying DOM object. Beyond the usual CCW duties of making sure that content code does not run with chrome privileges, Xray wrappers also ensure that calling methods or accessing attributes on the wrappee has the "expected" effect. For example, a web page could replace the close method on its window with a JS function that does something completely different (e.g. window.close = function() { alert("This isn't what you wanted!") };). Properties that overwrite methods in this way (or that are entirely new) are referred to as expando properties. Xrays see through expando properties, invoking the original method/getter/setter if the expando overwrites a builtin, or seeing undefined if the expando creates a new property. This allows chrome code to call methods on content DOM objects without worrying about how the page has changed the object.

- Waived xray wrappers: The xray behavior is not always desirable. It is possible for chrome to "waive" the xray behavior and see the actual JS object. The wrapper still guarantees that code runs with the correct privileges, but methods/getters/setters may not behave as expected. This is equivalent to the behavior chrome sees when it looks at non-DOM content JS objects.

- For more information, see the MDN page

- bholley's Enter the Compartment talk also provides an overview of our compartment architecture.
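The difference between Xray and waived Xray lookups described above can be modelled with a toy sketch. Everything here is hypothetical and greatly simplified: ToyDomObject, XrayLookup, and WaivedLookup are illustrative names, and each "method" is reduced to a string describing what calling it would do. It shows only the lookup rule, not the privilege handling.

```cpp
#include <cassert>
#include <map>
#include <string>

// Toy model of a content DOM object: built-in properties plus expandos that
// the page has added or used to overwrite built-ins.
struct ToyDomObject {
  std::map<std::string, std::string> builtins;
  std::map<std::string, std::string> expandos;
};

// Helper: pointer to the mapped value, or nullptr for "undefined".
inline const std::string* Find(const std::map<std::string, std::string>& aMap,
                               const std::string& aName) {
  auto it = aMap.find(aName);
  return it == aMap.end() ? nullptr : &it->second;
}

// Xray view: expandos are invisible, so only built-ins can be found.
inline const std::string* XrayLookup(const ToyDomObject& aObj,
                                     const std::string& aName) {
  return Find(aObj.builtins, aName);
}

// Waived view: the object as the page sees it, with expandos shadowing
// built-ins of the same name.
inline const std::string* WaivedLookup(const ToyDomObject& aObj,
                                       const std::string& aName) {
  if (const std::string* expando = Find(aObj.expandos, aName)) {
    return expando;
  }
  return Find(aObj.builtins, aName);
}
```

With a page that overwrites close and adds a new property, XrayLookup still finds the built-in close and reports the new property as undefined (nullptr here), while WaivedLookup returns the page's versions of both.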

Images

Plugins

Platform-specific layers

- widget

- native theme

- files, networking, other low-level things

- Accessibility APIs

- Input. Touch-input stuff is somewhat described at Platform/Input/Touch.

Editor

Base layers

NSPR

NSPR is a library that provides cross-platform APIs for various platform-specific functions. We try to use it as little as possible, although there are a number of areas (particularly some network-related APIs and threading/locking primitives) where we use it quite a bit.

XPCOM

XPCOM is a cross-platform modularity library, modeled on Microsoft COM. It is an object system in which all objects inherit from the nsISupports interface.
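As a rough illustration of what a common root interface provides, here is a self-contained toy in the COM style. It is a sketch under stated assumptions, not the real nsISupports: ToySupports, ToyGreeter, and GreeterImpl are hypothetical, interfaces are identified by a plain string rather than an IID, and the reference count is not thread-safe.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical, simplified root interface: reference counting plus a way to
// ask an object for another interface it implements.
class ToySupports {
 public:
  virtual unsigned AddRef() = 0;
  virtual unsigned Release() = 0;
  virtual bool QueryInterface(const char* aName, void** aResult) = 0;

 protected:
  virtual ~ToySupports() = default;
};

// A hypothetical interface derived from the root.
class ToyGreeter : public ToySupports {
 public:
  virtual const char* Greeting() = 0;
};

class GreeterImpl final : public ToyGreeter {
 public:
  explicit GreeterImpl(bool* aDestroyed) : mDestroyed(aDestroyed) {}

  unsigned AddRef() override { return ++mRefCnt; }

  unsigned Release() override {
    assert(mRefCnt > 0);
    const unsigned count = --mRefCnt;
    if (count == 0) {
      delete this;  // the object destroys itself when the last owner lets go
    }
    return count;
  }

  bool QueryInterface(const char* aName, void** aResult) override {
    if (std::strcmp(aName, "ToyGreeter") == 0) {
      *aResult = static_cast<ToyGreeter*>(this);
    } else if (std::strcmp(aName, "ToySupports") == 0) {
      *aResult = static_cast<ToySupports*>(this);
    } else {
      *aResult = nullptr;
      return false;
    }
    AddRef();  // the caller receives an owning reference
    return true;
  }

  const char* Greeting() override { return "hello"; }

 private:
  ~GreeterImpl() { *mDestroyed = true; }  // private: destroyed via Release()

  unsigned mRefCnt = 0;
  bool* mDestroyed;  // lets a caller observe destruction in this toy
};
```

A caller holding only a ToySupports pointer can ask for "ToyGreeter", use the result, and call Release on each reference it owns. The object is destroyed when the count reaches zero.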

components and services, contract IDs and CIDs

prior overuse of XPCOM; littering with XPCOM does not produce modularity

Base headers (part of xpcom/base/) and data structures. See also mfbt.

Threading

xptcall, proxies

reference counting, cycle collection

Further documentation:

- XPCOM and Internal Strings in Gecko (talk by David Baron, 2014-03-20): video, speaking notes

String

XPCOM has string classes for representing sequences of characters. Typical C++ string classes have the goals of encapsulating the memory management of buffers and of avoiding the buffer overruns common in traditional C string handling. Mozilla's string classes have these goals, and also the goal of reducing copying of strings.

(There is a second set of string classes in xpcom/glue for callers outside of libxul; these classes are partially source-compatible but have different (worse) performance characteristics. This discussion does not cover those classes.)

We have two parallel sets of classes, one for strings with 1-byte units (char, which may be signed or unsigned), and one for strings with 2-byte units (char16_t, always unsigned). The classes are named such that the class for 2-byte characters ends with String and the corresponding class for 1-byte characters ends with CString. 2-byte strings are almost always used to encode UTF-16. 1-byte strings are usually used to encode either ASCII or UTF-8, but are sometimes also used to hold data in some other encoding or just byte sequences.
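To make the 1-byte versus 2-byte unit distinction concrete, the following sketch counts code units for the same text in UTF-8 and UTF-16. It uses the standard library's std::string and std::u16string as stand-ins rather than the Mozilla classes, and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// The sample text is U+00E9 (e with acute accent) followed by U+1D11E
// (musical symbol G clef), written with explicit escapes so that the source
// file's own encoding does not matter.

// UTF-8 in 1-byte units: 2 bytes for U+00E9, 4 bytes for U+1D11E.
inline std::string SampleUtf8() { return "\xC3\xA9\xF0\x9D\x84\x9E"; }

// UTF-16 in 2-byte units: 1 unit for U+00E9, a surrogate pair for U+1D11E.
inline std::u16string SampleUtf16() { return u"\u00E9\U0001D11E"; }

// Length in code units, which is what a string class's length reports;
// it is not a count of characters.
inline std::size_t Utf8Units() { return SampleUtf8().size(); }
inline std::size_t Utf16Units() { return SampleUtf16().size(); }
```

The same two characters take 6 units in the 1-byte form and 3 units in the 2-byte form, so lengths are not comparable across the two families of classes.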

The string classes distinguish, as part of the type hierarchy, between strings that must have a null-terminator at the end of their buffer (ns[C]String) and strings that are not required to have a null-terminator (ns[C]Substring). ns[C]Substring is the base of the string classes (since it imposes fewer requirements) and ns[C]String is a class derived from it. Functions taking strings as parameters should generally take one of these four types.

In order to avoid unnecessary copying of string data (which can have significant performance cost), the string classes support different ownership models. All string classes support the following three ownership models dynamically:

- reference counted, copy-on-write, buffers (the default)

- adopted buffers (a buffer that the string class owns, but is not reference counted, because it came from somewhere else)

- dependent buffers, that is, an underlying buffer that the string class does not own, but that the caller that constructed the string guarantees will outlive the string instance

In addition, there is a special string class, nsAuto[C]String, that additionally contains an internal 64-unit buffer (intended primarily for use on the stack), leading to a fourth ownership model:

- storage within an auto string's stack buffer

Auto strings will prefer reference counting an existing reference-counted buffer over their stack buffer, but will otherwise use their stack buffer for anything that will fit in it.
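The inline-buffer idea can be sketched with a toy class. This is not the real nsAutoCString: ToyAutoCString and its methods are hypothetical, the sketch assumes one of the 64 units is reserved for a null terminator, and it leaves out the reference-counted, adopted, and dependent modes entirely.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>
#include <utility>

// Hypothetical toy: short contents live in a buffer inside the object itself
// (typically on the stack); longer contents fall back to a heap allocation.
class ToyAutoCString {
 public:
  static constexpr std::size_t kInlineUnits = 64;

  ToyAutoCString() = default;
  // mData may point into this object, so copying is disabled in this sketch.
  ToyAutoCString(const ToyAutoCString&) = delete;
  ToyAutoCString& operator=(const ToyAutoCString&) = delete;

  void Assign(const char* aData) {
    const std::size_t len = std::strlen(aData);
    if (len + 1 <= kInlineUnits) {
      // Fits, including the terminator: copy first, then drop any heap
      // buffer, so that aData may safely alias our own storage.
      std::memmove(mInline, aData, len + 1);
      mHeap.reset();
      mData = mInline;
    } else {
      std::unique_ptr<char[]> fresh(new char[len + 1]);
      std::memcpy(fresh.get(), aData, len + 1);
      mHeap = std::move(fresh);
      mData = mHeap.get();
    }
    mLength = len;
  }

  bool UsesInlineBuffer() const { return mData == mInline; }
  std::size_t Length() const { return mLength; }
  const char* get() const { return mData; }

 private:
  char mInline[kInlineUnits] = {0};
  std::unique_ptr<char[]> mHeap;
  char* mData = mInline;
  std::size_t mLength = 0;
};
```

Assigning a short string keeps the data inside the object and allocates nothing. Assigning something longer than the inline capacity moves it to the heap, and a later short assignment returns to the inline buffer.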

There are a number of additional string classes, particularly nsDependent[C]String, nsDependent[C]Substring, and the NS_LITERAL_[C]STRING macros (which construct an nsLiteral[C]String), which exist primarily as constructors for the other types. These types are really just convenient notation for constructing an ns[C]S[ubs]tring with a non-default ownership mode; they should not be thought of as different types. (The Substring, StringHead, and StringTail functions are also constructors for dependent [sub]strings.) Non-default ownership modes can also be set up using the Rebind and Adopt methods. The Rebind methods actually live on the derived types, which is probably a mistake, although moving them up would require some care to avoid making an API that easily allows assigning a non-null-terminated buffer to a string whose static type indicates that it is null-terminated.

Note that the presence of all of these classes imposes some awkwardness in terms of distinctions being available as both static type distinctions and dynamic type distinctions. In general, the only distinctions that should be made statically are 1-byte vs. 2-byte sequences (CString vs String) and whether the buffer is null-terminated or not (Substring vs String). (Does the code actually do a good job of dynamically enforcing the Substring vs. String restriction?)

TODO: buffer growth, concatenation optimizations

TODO: encoding conversion, what's validated and what isn't

TODO: "string API", nsAString (historical)

- Code: xpcom/string/

- Bugzilla: Core::String

Further documentation:

- XPCOM and Internal Strings in Gecko (talk by David Baron, 2014-03-20): video, speaking notes