Identity/CryptoIdeas/06-Queue-Sync-CouchDB

Queue-Sync with CouchDB-ish Backend

- Chris Karlof, Brian Warner, July 2013

Overview

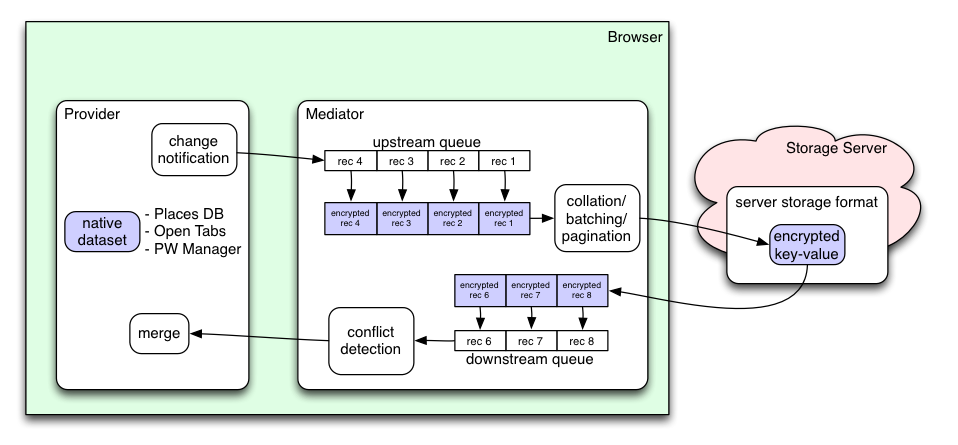

Each datatype (bookmarks, saved-passwords, open-tabs, etc) is managed by an instance of a Provider. This component is responsible for handling the native datastore (places.db, etc), preparing and parsing the published representation (a JSON object, with key and revision id), and managing/merging conflicts.

Each datatype also has an associated Mediator instance. This component behaves the same way for all datatypes, the main exception being whether it must handle inter-row dependencies or not (the algorithm is simpler if not). The Mediator is responsible for calculating revision ids, encryption/decryption, batching records for transmission to the server, deciding when upstream records have been successfully delivered or rejected because of a lost race, and delivering conflict/merge information to the Provider. The Mediator manages the network connection to the Storage Server.

The Mediator manages several queues. The "unsent queue" contains changes that have landed in the native dataset, but have not yet been sent to the storage server. Changes may linger here to improve network efficiency (the Nagle algorithm), to avoid splitting up atomic sets (multiple changes that must be delivered in a group), or because the storage server is not currently available.

The "sent queue" contains changes that have been sent to the storage server, but which have not yet been acknowledged. The "downstream queue" accumulates records that must be applied in an atomic batch. Downstream records are applied as soon as possible: they are not delayed for efficiency purposes like upstream records.

Records

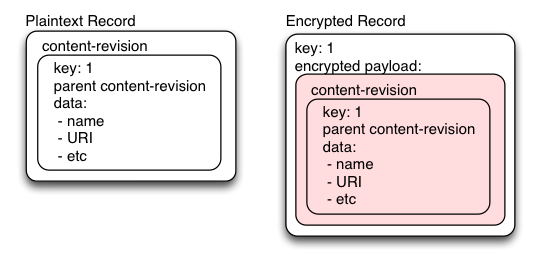

The Provider can store its data in whatever format it likes. When these records are delivered to (or accepted from) the Mediator, they should be in a particular format. This format includes a key, a content-revision, a parent content-revision, and the data itself.

The "content-revision" is a unique description of the content of the record, created by the Provider. It can either be a sequence number combined with a randomly-generated string, or a hash of the record contents. The content-revision should not repeat if the record is returned to an earlier state: including the parent content-revision in the hash is a simple way to obtain this property. Two different records with the same data, for different keys, should have different content-revisions: including the key in the hash provides this.

The "parent content-revision" is used to keep track of record parentage. The first version for any given key uses an empty parent-content-revision. Subsequent versions are derived from, or at least with knowledge of, an earlier version: the earlier version's content-revision is used as the parent-content-revision of the new record. This is used by the Mediator to detect conflicts when the record is sent to the server.

The Mediator is responsible for encrypting the record before sending it to the server. The encrypted payload includes the content-revision, the key (to prevent records from being misapplied to the wrong key), and the parent-content-revision. Neither content-revision is visible outside the encrypted envelope: the server does not see them.

Other fields may be added outside the envelope if they should be visible to the server and do not need to be validated by the client: likely fields include priority (to facilitate partial downloads on small devices) and expire-at timestamps.

Server Revisions

Each record sent to (or received from) the server is associated with a "server revision". Like the content-revision, this is a non-repeating description of the record's (encrypted) contents. As the name implies, it is created by the storage server, not the client. The storage server is assumed to provide a compare-and-swap operation that tests the old server-revision before overwriting the record. CouchDB offers compare-and-swap through either the single-record "PUT /db/doc" API, or the multi-record "POST _bulk_docs" API, by including a _rev field in the document being written.

The Revision Table

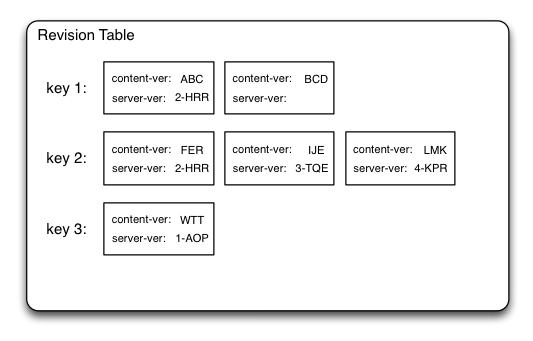

The Provider does not see server revisions. The server does not see content-revisions. The Mediator is the only component which sees both, and maintains a Revision Table to track the mapping between the two.

The revision table keeps a small number of (content-revision, server-revision) pairs for each key. A new pair is added (and a new key, if necessary) when the Provider delivers a new upstream record, or when the server delivers a new downstream record.

The table entry for upstream records is initially lacking a server-revision. This remains blank until the server publishes the record in the downstream _changes feed. The Mediator decrypts the downstream records to obtain the content-revision, looks up the corresponding revision-table entry, then fills in the server-revision if necessary.

For each record, its parent-content-revision is looked up in the revision table to find the corresponding previous-server-revision. This is submitted to the server for the compare-and-swap operation. This ensures that the server can correctly reject client changes that do not incorporate the latest server state.

The revision table must be persisted, but can be regenerated by performing a re-sync. Therefore it does not strictly need to be flushed to disk after each operation: instead, we can set a dirty-bit upon the first write, and clear the bit at shutdown after the Provider has confirmed that it has committed all the downstream records. If the browser crashes rather than shutting down cleanly, it can perform a re-sync upon next boot.

The table should also store the highest server-side sequence number that has been processed (changes delivered to the Provider). This will allow the next reboot to pick up from the right place.

One potential future optimization: get the server-revision for accepted records from the _bulk_docs return value, rather than using _include_docs=True for the _changes feed. The _changes handler will need to turn around and fetch the full document for all new downstream records (those which come from other clients, instead of being reflections of our own upstream changes). This would reduce the downstream bandwidth needs.

Lifetime of a Change

Upstream Change

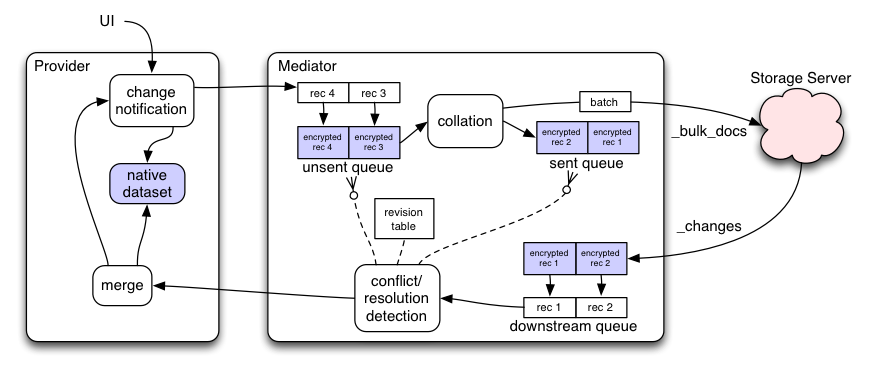

When the UI instructs the Provider to change its stored data (e.g. a bookmark is added, modified, or deleted), the Provider creates a record and delivers it to the Mediator before commiting the data to the native dataset. The Mediator encrypts the record and adds the encrypted form to the unsent queue, along with the key being changed. It also updates the revision table by adding a new record (in which the server-revision is blank).

The unsent queue is an ordered list of encrypted records. For each one, the queue also remembers its key, content-revision, and parent-content-revision.

If the datatype has inter-row constraints, some records in the unsent queue may be grouped together into atomic sets. The rest of the Mediator code will keep these records together, delivering and applying them in all-or-nothing transactions.

As an optimization, the Mediator may be allowed to coalesce records in the unsent queue together, if they modify the same key, and if the merge is not prohibited by other transactionality requirements (e.g. if it creates an intermediate state that violates inter-row constraints, like an orphaned bookmark entry).

Eventually, the collation module decides to deliver some portion of the unsent changes to the server. This portion must be a contiguous span of records including the head of the queue. All records must have a previous-server-revision (i.e. the record must have a parent-content-revision which exists in the revision table, and that table entry must have a corresponding server-revision). Note that this requirement necessitates multiple roundtrips when the same key is modified multiple times (no pipelining), unless they could be coalesced into a single change.

The selected records are moved from the unsent queue to the sent queue, and a CouchDB POST _bulk_docs API message is sent to the server. Each record is given a _revid property with the previous-server-revision value. We use _bulk_docs in the per-record compare-and-swap mode (no all_or_nothing:true, no new_edits:false). In this mode, the response reports the new revision id for successful records, and an error for the failed changes. However, we ignore the record-by-record response, only paying attention to a general failure like server-is-unreachable. (a future performance improvement is to use this response, but since we also need data from _changes, and the two messages are unpredictably interleaved, the algorithm is simpler when we only pay attention to _changes).

Downstream Changes

The Mediator subscribes to the server's _changes feed to hear about both new downstream changes (created by other clients) and its own submitted upstream changes (which are either accepted, or rejected because another client submitted a different change first).

It can either poll (remembering the CouchDB sequence number as a starting point), or use one of the more pubsub-like modes (long-polling or continuous) for efficiency at the expense of keeping an SSL connection up for long periods of time (one per collection). It uses include_docs:true to retrieve the full document for each new record. (a future optimization could use False instead, and only fetch full records for changes coming from other clients. it would need to queue the partial records until these additional queries resolved)

The Mediator decrypts each record and extracts the key (which must match the outer copy of the key) and content-revision. It updates the revision table with the server-revision/content-revision mapping.

Accepted Changes

If the record is recognized as matching an entry in the sent queue (same key, same content-revision), the record is removed from the sent queue and processing terminates. This represents a successful uncontested upstream change.

Rejected Changes

If the record matches a key in the sent queue, but has a different content-revision, this is a conflict: some other client delivered a change to the same record to the server before our own change landed. The Mediator scans and removes any records from the unsent queue with the same key (since these will surely fail), as well as from the sent queue (since this will fail, if it hasn't already).

It then delivers the downstream record to the Provider in a merge() call. The downstream record, combined with the current dataset value for the same key, provide the "theirs" and "ours" sides of a 3-way merge function (although we cannot provide the common ancestor record without some other form of storage). The Provider compares these records and decides what to do:

- accept the server's version: the Provider writes this version into the native dataset, does *not* produce a new revision, and does not notify the Mediator about a new version

- merge the two records: the Provider updates the native dataset with a merged copy (setting parent-content-revision equal to the downstream record's content-revision), and notifies the Mediator about the new revision, prompting a new record to be sent upstream

- stick with the local version: this behaves just like a merge, except the merged copy is the same as the original local version, but with an updated parent-content-revision. We still send a "new" record up to the server.

New Changes

If the downstream record's key does not exist in the sent queue, this is a new change (created by some other client). This can be delivered to the Provider just like a merge(), but perhaps with a flag that indicates we do not expect it to require merging (maybe indicating a preference for accept-server-version).

If the Provider and the Mediator data structures are managed by separate threads, a race could still require a merge even if the upstream queue did not reference the key being changed. This should behave like the rejected change described above.

TODO: downstream records which match keys in the unsent queue (but not the sent queue) also indicate conflicts. They should probably be handled similarly (remove the entries from the unsent queue, notify Provider.merge).

TODO

Things to figure out:

- how to represent deletion properly

- initial synchronization

- basic idea is to fetch all records, do one-key-at-a-time merges on each. Merges should be more heroic than during normal in-sync operations (e.g. search for bookmarks with same-title same-URL and combine them, try to identify duplicate folders, etc) and look at records in combination, not just separately.

- persistence and transactionality of the unsent queue

- how previous-server-version and previous-content-version are obtained for new downstream records

- how to detect and handle server-side rollback to a previous backup