User:GuanWen/SpeechSynthesis

SpeechSynthesis

Introduction

Speech synthesis is a feature of the Web Speech API. It aims to provide web developers with a text-to-speech service.

You can find more details at https://dvcs.w3.org/hg/speech-api/raw-file/tip/speechapi.html

WebAPI

The following sample code uses speech synthesis on a web page.

var txt = new SpeechSynthesisUtterance('Hello Mozilla');

speechSynthesis.speak(txt);

To make the speech synthesis API speak sentences or words, wrap them in a SpeechSynthesisUtterance object and pass it to speechSynthesis.speak() as a parameter. The following sections give more details.

SpeechSynthesisUtterance

SpeechSynthesisUtterance is an interface that holds the content the system should speak and the information about how to speak it.

attribute DOMString text; // The content the system should speak

attribute DOMString lang; // The language the system should choose

attribute SpeechSynthesisVoice? voice; // The voice the system should use

attribute float volume; // The speech volume

attribute float rate; // The speech rate

attribute float pitch; // The speech pitch
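As a minimal sketch of how these attributes might be set from a page, the helper below copies optional settings onto a new utterance. The name `buildUtterance` and its `options` parameter are illustrative assumptions, not part of the API. The constructor is passed in as a parameter so the snippet can also run outside a browser.

```javascript
// Hypothetical helper: builds an utterance and copies over optional attributes.
// UtteranceCtor defaults to the browser's SpeechSynthesisUtterance when present.
function buildUtterance(text, options = {}, UtteranceCtor = globalThis.SpeechSynthesisUtterance) {
  if (typeof UtteranceCtor !== 'function') {
    throw new Error('SpeechSynthesisUtterance is not available in this environment');
  }
  const utterance = new UtteranceCtor(text);
  // Only the attributes listed above are copied; anything else is ignored.
  for (const name of ['lang', 'voice', 'volume', 'rate', 'pitch']) {
    if (options[name] !== undefined) {
      utterance[name] = options[name];
    }
  }
  return utterance;
}
```

In a browser that supports the API, this could be used as `speechSynthesis.speak(buildUtterance('Hello Mozilla', { lang: 'en-US', rate: 1.2 }))`.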

SpeechSynthesis

SpeechSynthesis is the interface to the speech system. Developers can control it through the following methods.

void speak(SpeechSynthesisUtterance utterance); // Make the system speak the SpeechSynthesisUtterance you pass.

void cancel(); // Cancel the current speak request and all requests in the speak queue.

void pause(); // Pause the current speak task.

void resume(); // Resume the task from the paused state.

sequence<SpeechSynthesisVoice> getVoices(); // Return the voices available on the system.

With speak(), cancel(), pause() and resume(), SpeechSynthesis controls when an utterance plays and stops. getVoices() reports which languages and voices are available on the system.
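The following sketch shows one way these methods might be combined. `pickVoice` is a hypothetical helper, not part of the API; it relies only on the `lang` and `default` attributes of SpeechSynthesisVoice. The browser-only part is guarded so the snippet does nothing where `speechSynthesis` is not defined.

```javascript
// Hypothetical helper: picks the first voice whose lang matches the requested
// language tag, then a primary-language match, then a voice flagged as default.
function pickVoice(voices, lang) {
  const wanted = lang.toLowerCase();
  const exact = voices.find((v) => v.lang.toLowerCase() === wanted);
  if (exact) return exact;
  // Fall back to a primary-language match, e.g. "en" for "en-US".
  const primary = wanted.split('-')[0];
  const partial = voices.find((v) => v.lang.toLowerCase().split('-')[0] === primary);
  if (partial) return partial;
  return voices.find((v) => v.default) || null;
}

// Browser-only usage; skipped where speechSynthesis is not defined.
if (typeof speechSynthesis !== 'undefined') {
  const utterance = new SpeechSynthesisUtterance('Hello Mozilla');
  const voice = pickVoice(speechSynthesis.getVoices(), 'en-US');
  if (voice) utterance.voice = voice;
  // The calls are made back to back here only to show their shape.
  speechSynthesis.speak(utterance); // queue the utterance
  speechSynthesis.pause();          // pause the current speak task
  speechSynthesis.resume();         // continue from the paused state
  speechSynthesis.cancel();         // drop the current and all queued requests
}
```

Some browsers may return an empty list from getVoices() until their voices have loaded, so a real page would likely need to retry once voices become available.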

Components

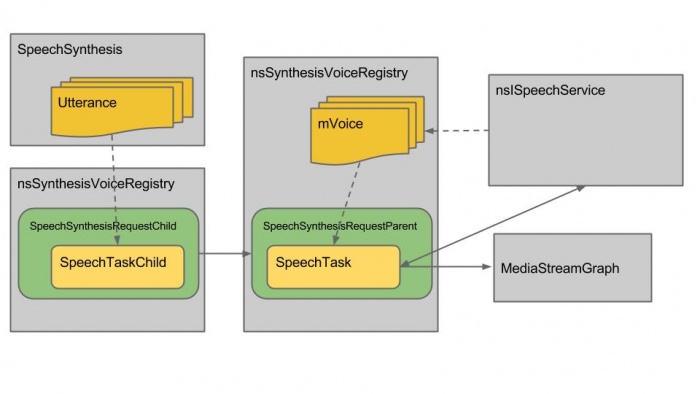

There are four main components of speech synthesis in Gecko: SpeechSynthesis, nsSynthVoiceRegistry, nsSpeechTask and nsISpeechService.

SpeechSynthesis has two sides: a parent side and a child side. The child side receives requests from web pages or apps, wraps each one in an nsSpeechTask, and passes it to the parent side.

On the parent side, the system finds a suitable voice for each received nsSpeechTask and passes the task to an nsISpeechService, which produces the voice data. There are several ways to implement nsISpeechService; they are described in the following sections.

SpeechSynthesis

SpeechSynthesis manages all speech requests and keeps them in a queue. When it receives a request, it appends the nsSpeechSynthesisUtterance to the queue. It controls and manages the queue in response to calls such as pause(), resume(), cancel() and speak(). When processing the queue, it passes the nsSpeechSynthesisUtterance to nsSynthVoiceRegistry.
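The queue behaviour described above can be modeled roughly as follows. This is an illustrative JavaScript model only: the class, its fields and the `startTask` callback are hypothetical and do not mirror Gecko's actual implementation. As a simplification, pause() here only stops the queue from advancing; pausing audio in the middle of an utterance is not modeled.

```javascript
// Illustrative model only; names are hypothetical.
class SpeechQueueModel {
  constructor(startTask) {
    this.queue = [];            // pending utterances, oldest first
    this.current = null;        // utterance currently being spoken
    this.paused = false;
    this.startTask = startTask; // stands in for the hand-off to the voice registry
  }

  speak(utterance) {
    this.queue.push(utterance);
    this.advance();
  }

  // Called when the current utterance finishes.
  onEnd() {
    this.current = null;
    this.advance();
  }

  pause() {
    this.paused = true;
  }

  resume() {
    this.paused = false;
    this.advance();
  }

  // Drops the current utterance and everything still queued.
  cancel() {
    this.queue.length = 0;
    this.current = null;
  }

  // Starts the next utterance if nothing is playing and the queue is not paused.
  advance() {
    if (this.paused || this.current !== null || this.queue.length === 0) return;
    this.current = this.queue.shift();
    this.startTask(this.current);
  }
}
```

Keeping a single `advance()` step means speak(), resume() and the end-of-utterance notification all share the same rule for deciding when the next utterance starts.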

nsSynthVoiceRegistry

nsSynthVoiceRegistry is in charge of routing each incoming nsSpeechTask to the proper nsISpeechService. It keeps an array of available voices. When a TTS service is enabled, it registers its supported voices with nsSynthVoiceRegistry.

On the child side, when nsSynthVoiceRegistry receives an nsSpeechSynthesisUtterance from SpeechSynthesis, it wraps it in an nsSpeechTaskChild and passes it to the parent side.

On the parent side, for each nsSpeechTask it receives, nsSynthVoiceRegistry finds a suitable voice in its voice array and passes the task to the corresponding nsISpeechService.
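The register-then-route idea can be sketched as a small JavaScript model. Everything here is hypothetical (the class, the `addVoice`, `findVoice` and `speak` names, and the shape of the task object), and the matching rule is an assumption chosen for illustration, not the one Gecko uses.

```javascript
// Illustrative model only; names and matching rules are hypothetical.
class VoiceRegistryModel {
  constructor() {
    this.voices = []; // entries: { service, uri, lang }
  }

  // Called by a TTS service for each voice it supports.
  addVoice(service, uri, lang) {
    this.voices.push({ service, uri, lang });
  }

  // Prefer an explicitly requested voice URI, then a language match.
  findVoice(task) {
    return (
      this.voices.find((v) => v.uri === task.voiceUri) ||
      this.voices.find((v) => v.lang === task.lang) ||
      null
    );
  }

  // Hands the task to the service that owns the chosen voice.
  speak(task) {
    const voice = this.findVoice(task);
    if (!voice) return false;
    voice.service.speak(task.text, voice.uri, task);
    return true;
  }
}
```

Because each voice entry remembers the service that registered it, the registry can route a task without knowing anything about how that service produces audio.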

nsISpeechService

nsISpeechService is the component that actually performs text-to-speech. It takes the initiative to register its voices with nsSynthVoiceRegistry. There are two kinds of speech service:

1. Indirect audio - the service is responsible for outputting audio. The service calls the nsISpeechTask.dispatch methods directly, starting with dispatchStart() and ending with dispatchEnd() or dispatchError().

2. Direct audio - the service provides us with PCM-16 data, and we output it. The service does not call the dispatch task methods directly. Instead, audio information is provided at setup(), and audio data is sent with sendAudio(). The utterance is terminated with an empty sendAudio().
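The two call sequences above can be compared side by side with a mock task that records what is called on it. The method names come from the text; the argument shapes (the object passed to setup(), the Int16Array chunks, the `playAudio` callback) are simplified assumptions and not the real XPCOM signatures.

```javascript
// Mock task that records calls; argument shapes are simplified assumptions.
function makeRecordingTask() {
  const calls = [];
  return {
    calls,
    dispatchStart() { calls.push('dispatchStart'); },
    dispatchEnd() { calls.push('dispatchEnd'); },
    dispatchError() { calls.push('dispatchError'); },
    setup(info) { calls.push('setup'); },
    sendAudio(samples) { calls.push('sendAudio(' + samples.length + ')'); },
  };
}

// Indirect audio: the service plays the sound itself and reports progress.
function speakIndirect(task, playAudio) {
  task.dispatchStart();
  try {
    playAudio(); // hypothetical callback standing in for the service's own output
    task.dispatchEnd();
  } catch (e) {
    task.dispatchError();
  }
}

// Direct audio: the service only hands over PCM-16 chunks.
function speakDirect(task, chunks) {
  task.setup({ channels: 1, rate: 16000 }); // hypothetical audio format description
  for (const chunk of chunks) {
    task.sendAudio(chunk);
  }
  task.sendAudio(new Int16Array(0)); // an empty chunk terminates the utterance
}
```

In the direct case no dispatch method appears in the recorded calls, which matches the description that the service leaves event dispatching to the caller.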

nsISpeechService implementation

In Firefox OS, we use Pico as our TTS system. We are working on enabling SpeechSynthesis on other platforms. You can follow the progress in [Bug 1003439](https://bugzilla.mozilla.org/show_bug.cgi?id=1003439)