User:Rkentjames:Bug228675

I have proposed a simple algorithm in bug 228675 to limit the size of the spam token store. To help reviewers of the patch, as well as possible users, I want to present some data tests.

I have created an extension that I can use to run the TREC 2005 and TREC 2006 spam corpuses through Thunderbird for testing purposes.

The proposed patch uses a very simple algorithm to limit the size of the token store. When the number of tokens (good+bad) reach a certain limit, then the counts for all tokens are divided by two. Any tokens that previously had a count of 1 are thus eliminated. In addition, old training will have half of the effect of new training.

So, does this work or not? It depends on your definition of "work". First, as a general rule spam detection accuracy increases when more tokens are available. So eliminating tokens will almost always reduce your accuracy. You eliminate tokens for performance reasons, not for accuracy reasons. Also, you always have the choice of simply not training any more, as that will also stop your token count from growing. However, the nature of your spam and ham changes with time, so if you don't keep training, then your accuracy will also go down since your newer messages will be different than the older ones.

So the point of limiting the size of the token data store is to allow you to continually train the spam token data, without causing the token data store to get so large that performance problems result. In the past, the only choice that you had was to eliminate all of the data, and start over again. With the proposed patch, you should be able to set the desired size of the token data store, continually train, and let the program keep the token data store at your desired size.

Although there are many possible algorithms that might be used for deciding how to prune the token data, I wanted to start with a method that did not require any changes to the existing training.dat file. Hence the proposed simple algorithm.

But, does it actually work? Let me show you some results to give you an idea. To fairly compare this algorithm, we need to compare it to the alternative of using a static data store that does not increase in size. I divide the SPAM corpus into 100 random groups. I train on 5 of these groups, and see how many tokens result. The proposed algorithm sets a maximum size for the number of tokens, but typically varies between half the maximum and the maximum. I want to set a maximum token limit that gives the same average number of tokens as the static data. Let N be the desired maximum token count, and M be the desired average token count. Then M = (N + N/2) /2, or N = (4/3) M So in my tests, after I determine the number of tokens M that were obtained by training the first five groups, I set the maximum tokens to (4/3)M for the comparison test.

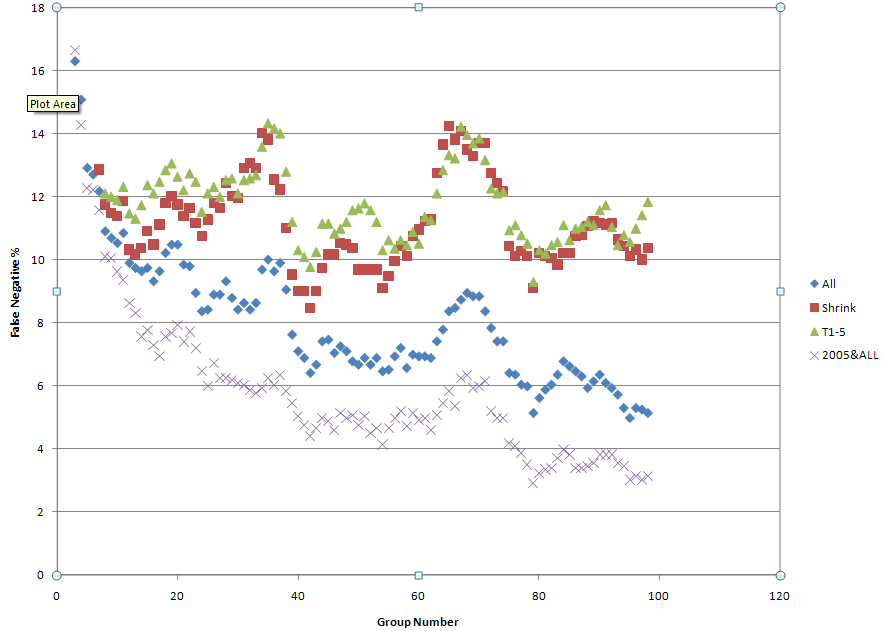

Here is a typical result. In this case, I am using the TREC 2006 corpus. The vertical axis is the false negative rate for spam detection (that is, the percentage of messages that were spam but marked as ham). The horizontal axis is the group number, with group 1 being the first group trained or scored. All data is presented as moving averages of five groups.

Data set 1 "All" starts with an empty token store, scores each messages, then trains according to the known status. There are no token limits.

Data set 2 "Shrink" applies the new algorithm, limiting the token count to 4/3 of the token count from training on the first 5 groups.

Data set 3 "T1-5" is like 1, except training stops after 5 groups.

Data set 4 "2005&ALL" is the only set that starts with a non-empty training file. It uses a training file obtained by training on all of the data from the TREC 2005 corpus. Note this corpus is roughly 92,000 messages (compared to 37821 for the 2006 corpus) so that is a lot of previous training!

For the combined TREC 2005+2006 corpus, there were 52309 good messages, 77568 junk messages, 593,186 good tokens, and 484,172 junk tokens. training.dat size is 43 megabytes. That's what gives the best results!

Training using 5/100 of the TREC 2006 corpus gave 27108 good tokens, 31002 junk tokens, combined is 58110 tokens. For the test using the patch, I limited tokens to 4/3 of that, or 77480.

Discussion

The main point is to compare the graphs for the "Shrink" to the "T1-5" data. They use the same number of average tokens, but "Shrink" is being continually trained. The point is that there is little difference between them, so that the proposed 228675 patch gives the same result as static training, for a given number of average tokens.

The other two graphs show the value of more tokens. "All" shows the effect of training on all of the data. Clearly performance is getting better as more tokens are added.

"2005&ALL" is interesting. When the large number of tokens from TREC 2005 training are applied to the TREC 2006 data, initially the performance is lousy, that is virtually identical to the results from starting with no data. But after just a little bit of training with the new data, this graph pulls away from the other data, and shows the best performance in the end. This shows that the best performance comes when you use both recent data, as well as lots of data.