Buildbot/Talos/Sheriffing/Alert FAQ

m (→Why does Alert Manager print -xx%: - minor cleanup) |

(Redirected page to TestEngineering/Performance/Sheriffing/Alert FAQ) |

||

| (4 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

#REDIRECT [[TestEngineering/Performance/Sheriffing/Alert_FAQ]] | |||

= FAQ = | = FAQ = | ||

= What alerts are displayed in Alert Manager = | = What alerts are displayed in Alert Manager = | ||

[[ | [[https://treeherder.mozilla.org/perf.html#/alerts Perfherder Alerts]] defaults to talos alerts that are untriaged. It is a goal to keep this list empty! You can view alerts that are improvements or in any other state (i.e. investigating, fixed, etc.) by using the drop down at the top of the page. | ||

= Do we care about all alerts/tests = | = Do we care about all alerts/tests = | ||

Yes we do. Some tests are more | Yes we do. Some tests are more commonly invalid, mostly due to the noise in the tests. We also adjust the threshold per test, the default is 2%, but for dromaeo it is 5% | ||

Here are some platforms/tests which are exceptions | Here are some platforms/tests which are exceptions about what we run: | ||

* Windows XP - we don't run dromaeo*, kraken, v8 | * Windows XP - we don't run dromaeo*, kraken, v8 | ||

* Linux64 - the only platform which | * Linux64 - the only platform which we run dromaeo_dom | ||

* Windows 7 - the only platform that supports xperf (toolchain is only installed there) | * Windows 7 - the only platform that supports xperf (toolchain is only installed there) | ||

| Line 27: | Line 19: | ||

On almost all of our tests, we are measuring based on time. This means that the lower the score the better. Whenever the graph increases in value that is a regression. | On almost all of our tests, we are measuring based on time. This means that the lower the score the better. Whenever the graph increases in value that is a regression. | ||

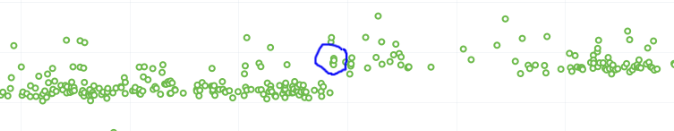

Here is a view of a regression: | |||

dromaeo_css | |||

dromaeo_dom | [[File:Regression.png]] | ||

v8 version 7 | |||

canvasmark | We have some tests which measure internal metrics. A few of those are actually reported where a higher score is better. This is confusing, but we refer to these as reverse tests. The list of tests which are reverse are: | ||

* dromaeo_css | |||

* dromaeo_dom | |||

* v8 version 7 | |||

* canvasmark | |||

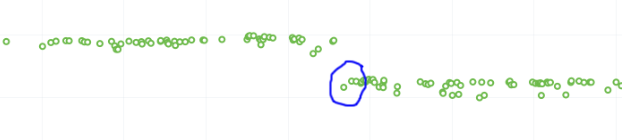

Here is a view of a reverse regression: | |||

[[File:Reverse_regression.png]] | |||

= Why does Alert Manager print -xx% = | = Why does Alert Manager print -xx% = | ||

The alert will either be a regression or an improvement. For the alerts we show by default, it is regressions only. It is important to know the severity of an alert. For example a 3% regression is important to understand, but a 30% regression probably needs to be fixed ASAP. This is annotated as a XX% in the UI. | The alert will either be a regression or an improvement. For the alerts we show by default, it is regressions only. It is important to know the severity of an alert. For example a 3% regression is important to understand, but a 30% regression probably needs to be fixed ASAP. This is annotated as a XX% in the UI. there are no + or - to indicate improvement or regression, this is an absolute number. Use the bar graph to the side to determine which type of alert this is. | ||

NOTE: for the reverse tests we take that into account, so | NOTE: for the reverse tests we take that into account, so the bar graph will know to look in the correct direction. | ||

* [https://wiki.mozilla.org/Performance_sheriffing/Alert_FAQ go to new page] | |||

Latest revision as of 13:14, 6 August 2019

Redirect to: TestEngineering/Performance/Sheriffing/Alert_FAQ

FAQ

What alerts are displayed in Alert Manager

Perfherder Alerts (https://treeherder.mozilla.org/perf.html#/alerts) shows untriaged Talos alerts by default. The goal is to keep this list empty! To view improvements, or alerts in any other state (e.g. investigating, fixed), use the drop-down at the top of the page.

Do we care about all alerts/tests

Yes, we do. Some tests produce invalid alerts more often than others, mostly because those tests are noisy. We also adjust the alert threshold per test: the default is 2%, but for dromaeo it is 5%.
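As a rough illustration of per-test thresholds, the sketch below flags a change only when its size meets the test's threshold: 2% by default, 5% for dromaeo tests. It assumes the threshold is compared against the absolute percent change between an old and a new value, and that any test whose name starts with "dromaeo" gets the 5% threshold. The names `threshold_for`, `percent_change`, `exceeds_threshold` and `example_test` are hypothetical; this is not Perfherder's actual detection code.

```python
# Hypothetical per-test alert thresholds, in percent.
DEFAULT_THRESHOLD_PCT = 2.0
# Assumption: overrides are matched by test-name prefix.
THRESHOLD_OVERRIDES_PCT = {"dromaeo": 5.0}


def threshold_for(test: str) -> float:
    """Return the alert threshold (in percent) for a test name."""
    for prefix, pct in THRESHOLD_OVERRIDES_PCT.items():
        if test.startswith(prefix):
            return pct
    return DEFAULT_THRESHOLD_PCT


def percent_change(old: float, new: float) -> float:
    """Signed percent change from old to new (old must be non-zero)."""
    return (new - old) / old * 100.0


def exceeds_threshold(test: str, old: float, new: float) -> bool:
    """True if the size of the change meets the test's threshold."""
    return abs(percent_change(old, new)) >= threshold_for(test)


print(exceeds_threshold("example_test", 100.0, 103.0))  # 3% change vs 2% threshold
print(exceeds_threshold("dromaeo_css", 100.0, 103.0))   # 3% change vs 5% threshold
```

The same 3% change alerts on a test using the default threshold but not on a dromaeo test, which is the point of a higher threshold for noisier tests.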

Some platforms and tests are exceptions to what we run:

- Windows XP - we don't run dromaeo*, kraken, or v8

- Linux64 - the only platform on which we run dromaeo_dom

- Windows 7 - the only platform that supports xperf (the toolchain is only installed there)
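The exceptions above can be encoded as data. The sketch below is a hypothetical encoding, assuming test and platform names match the labels used in the list; `EXCLUDED`, `ONLY_ON` and `runs_on` are illustrative names, not part of any real Talos configuration. `fnmatchcase` handles the `dromaeo*` wildcard.

```python
from fnmatch import fnmatchcase

# Tests never run on a given platform (glob patterns allowed).
EXCLUDED = {
    "Windows XP": ["dromaeo*", "kraken", "v8"],
}

# Tests that run on exactly one platform.
ONLY_ON = {
    "dromaeo_dom": "Linux64",
    "xperf": "Windows 7",  # the xperf toolchain is only installed there
}


def runs_on(platform: str, test: str) -> bool:
    """Return False if the exceptions above rule out `test` on `platform`."""
    if any(fnmatchcase(test, pattern) for pattern in EXCLUDED.get(platform, [])):
        return False
    required = ONLY_ON.get(test)
    return required is None or required == platform


print(runs_on("Windows XP", "dromaeo_css"))  # excluded by "dromaeo*"
print(runs_on("Linux64", "dromaeo_dom"))     # the only platform for dromaeo_dom
```

Keeping exclusions and single-platform tests in separate tables mirrors the two kinds of exceptions in the list.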

Lastly, we should prioritize alerts on the Mozilla-Beta and Mozilla-Aurora branches, since those affect more users.

What does a regression look like on the graph

Almost all of our tests measure time, so a lower score is better. Whenever the graph increases in value, that is a regression.

Here is a view of a regression:

[Figure: Regression.png]

Some tests measure internal metrics, and a few of those report scores where higher is better. This is confusing, but we refer to these as reverse tests. The reverse tests are:

- dromaeo_css

- dromaeo_dom

- v8 version 7

- canvasmark
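To make the direction rule concrete, here is a minimal sketch of deciding whether a change is a regression. It assumes the reverse tests are identified by exactly the names listed above; `REVERSE_TESTS`, `is_regression` and `example_test` are hypothetical names, not Perfherder's API.

```python
# Reverse tests: higher scores are better (names as listed above).
REVERSE_TESTS = {"dromaeo_css", "dromaeo_dom", "v8 version 7", "canvasmark"}


def is_regression(test: str, old: float, new: float) -> bool:
    """Return True if moving from old to new is a regression for `test`.

    Most tests measure time, so an increase is a regression; for reverse
    tests a decrease is a regression. No change is never a regression.
    """
    if test in REVERSE_TESTS:
        return new < old
    return new > old


print(is_regression("example_test", 100.0, 110.0))  # time went up
print(is_regression("canvasmark", 100.0, 110.0))    # score went up
```

The first call reports a regression (a time-based test got slower); the second does not, because a higher canvasmark score is an improvement.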

Here is a view of a reverse regression (the graph decreases in value):

[Figure: Reverse_regression.png]

Why does Alert Manager print -xx%

An alert is either a regression or an improvement; by default only regressions are shown. It is important to know the severity of an alert: a 3% regression is important to understand, but a 30% regression probably needs to be fixed ASAP. The severity is shown as XX% in the UI. There is no + or - sign to indicate improvement or regression; it is an absolute number. Use the bar graph to the side to determine which type of alert it is.

NOTE: the bar graph takes reverse tests into account, so it points in the correct direction for them.
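The sketch below shows how an unsigned XX% value and the alert type can be derived separately, which is why the percentage alone does not say whether an alert is good or bad. It assumes the percentage is the absolute change relative to the old value, formatted to one decimal place; `describe_alert` and `example_test` are hypothetical, and this is not the Alert Manager's own code.

```python
# Reverse tests: higher scores are better (names as listed above).
REVERSE_TESTS = {"dromaeo_css", "dromaeo_dom", "v8 version 7", "canvasmark"}


def describe_alert(test: str, old: float, new: float) -> tuple[str, str]:
    """Return (unsigned magnitude label, alert type) for a change old -> new.

    The magnitude carries no sign, like the XX% in the UI; the type is
    derived from the direction of change, taking reverse tests into account.
    """
    pct = (new - old) / old * 100.0  # signed percent change
    magnitude = f"{abs(pct):.1f}%"
    if new == old:
        return magnitude, "no change"
    got_worse = new < old if test in REVERSE_TESTS else new > old
    return magnitude, "regression" if got_worse else "improvement"


print(describe_alert("example_test", 100.0, 130.0))
print(describe_alert("canvasmark", 100.0, 97.0))
```

Both calls report a regression even though one value went up and the other went down; the magnitude label alone is the same kind of unsigned number the UI shows.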