Powering-up Consent and Identity

Changing the consent conversation from “control over your privacy” to “power over your identity.”

The Problem to Solve

None of us are the same person all day long. Our minds and behaviors jump from context to context, sometimes by the minute.

“Home” you likes to hang out with the kids, watching Netflix’s “Kids” profile, all safe under the eye of your home security system. “Work” you deals with passwords, HR websites, training materials, and your inbox. “Monday Night Football” you Ubers to the bar, shares Fantasy Drafts, and uses Venmo to pay for your half of the bill. In some moments you want to be “fully you” online. In others, you want to be anonymous or pseudonymous. And every side of you expects your technology to understand that. Different sharing preferences, different security levels, different amounts and types of data you want accessed.

Yet, we have no choice but to present our “full” selves to every online service.

Since there is little risk in collecting as much personal data as possible, companies extract everything they can about us: our “full selves.” Right now, all of this data is available to the highest bidder. Without our knowing, our surplus data is moved, traded, “enriched,” and exchanged among brokers every day, to the point where truly knowing what’s out there about us and how it shapes our lives is next to impossible.

We want a world in which data is shared—under strong protections—on a need-to-know basis.

Overall Approach

By giving people power over their faceted identities, and the information that is exchanged in every context, we will prevent service providers from being able to harvest as much data as possible. This would shift the conversation from control over privacy to power over identity.

Users are people

Change the consent conversation from “control over your privacy” to “power over your identity.”

Restore collective bargaining power

By transferring the responsibility of consent from every single user in the moment to a larger, trusted collective, we hope to restore people’s bargaining power.

Need-to-know data permission

Allow people to present what they want about themselves, when they want. Data is shared on a need-to-know basis.

Tactics to Explore

Creating platforms and services that allow people to 'own' and manage variable identities, collectively align on consent models, and eventually create smart identity agents.

Assumptions to Test

That there is enough risk and benefit for companies to acquiesce to collecting less data.

If we can control the volume and relevance of the data that services collect, we will…

Reduce Exposure…

By balancing the power between users and providers.

Reduce Exclusion…

By allowing users to truly represent themselves, and by reducing presumptions, assumptions, and marginalization.

Reduce Exploitation…

By increasing transparency of what data is stored where, and allowing users to see and understand that.

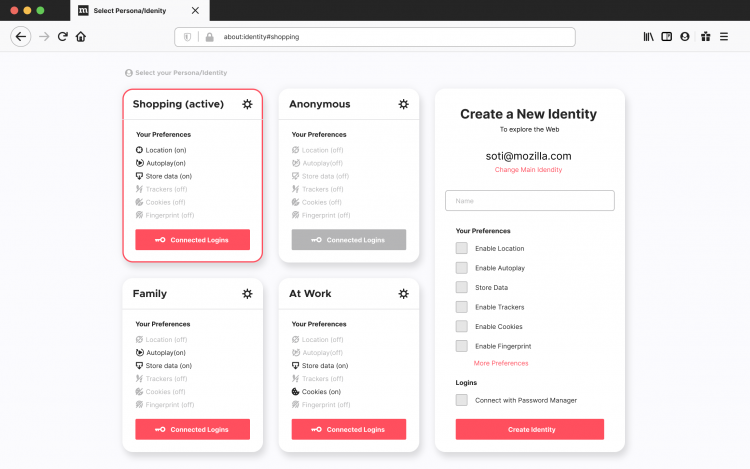

Phase One: Diving In: Variable Dynamic Personas and Identities

Imagine a future where variable, dynamic identities create a barrier between you and the services that track, isolate, target and manipulate you.

Instead of having all your data fully exposed across digital and physical environments, this system would offer online self-sovereignty: you would control your various online identities (including anonymity and pseudonymity), and the system would limit access requests based on sharing controls you’ve set. As your context shifts, so do your data preferences.

What problem does it solve?

Users of all ages increasingly want more contextual, privacy-protected lives. This is particularly true for many Gen Z'ers, who prefer platforms that leave temporary digital footprints, like Instagram Stories or Snapchat (favored by 46% of American teenagers), over the town hall of Facebook.

How does it solve it?

A B2C product where users switch from one persona/identity to another, similar to Netflix profiles. Requests for data access are blocked based on the selected identity. Ultimately, a ‘data agent’ would understand how and in what context to do this for you, seamlessly.
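
As a rough illustration of persona-based blocking, the sketch below filters a service's data request against whichever persona is currently selected. The `Persona` class, the `filter_request` function, and the field names are all hypothetical and chosen only for this example; the source does not specify a data model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Persona:
    """A named identity plus the data fields it is willing to share (assumed model)."""
    name: str
    shareable: frozenset = field(default_factory=frozenset)


def filter_request(persona: Persona, requested: set) -> dict:
    """Split a service's data request into granted and blocked fields."""
    granted = requested & persona.shareable
    return {
        "granted": sorted(granted),
        "blocked": sorted(requested - granted),
    }


# Hypothetical personas, loosely following the "Home" and "Work" examples.
home = Persona("home", frozenset({"viewing_history", "display_name"}))
work = Persona("work", frozenset({"email", "display_name"}))

# The same request gets a different answer depending on the active persona.
request = {"email", "location", "display_name"}
print(filter_request(home, request))
print(filter_request(work, request))
```

Switching personas changes only the allow-list that is consulted, so the service never sees fields belonging to another context. A real product would also need to decide how the active persona is chosen, which is the role the 'data agent' would eventually take over.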

With whom might we work?

- New startups working in the digital identity and tracking space. For example, InCountry ($15m Series A), OneTrust ($200m at a $1.3bn valuation), TrustArc ($70m), Privitar ($40m), BigID ($80m+ Series B).

- Progressive advocates in government and civil society, such as Mozilla Fellows like Karolina Iwańska (of the Panoptykon Foundation), Fieke Jansen (of the Data Justice Lab), Anouk Ruhaak (data governance and consent) and Richard Whitt (GliaNet), among others

How could we start?

These ideas incorporate feedback and builds from employees and community members at Mozilla's January 2020 All Hands.

- Build on our simple take on consent through the browser (drawing on the Global Consent Manager Add-on, the Identity Access Management project, other password manager work, and Fx Accounts) to understand more complex user needs and behaviors around identity, authentication, and consent, including ephemeral or pseudonymous IDs and the differences between 'trust me' and verifiable. What might we learn from the Emerging Markets team's work on phone call and SMS blocking?

- How might we build “zero knowledge proof” algorithms to support user control of these identities and the data flow? Check out the academic-led standards collaboration, ZKProof, as well as Mozilla's 'one per person' proposal.

- What might we build on from Mozilla’s Persona project?

- Map identities to patterns of technology touch points to recognize segmentation.

- How could we help people generate anonymous identifiers to use online, such as email addresses, phone numbers, and perhaps even credit cards? If done at scale, would this promote even worse behavior from companies and people?

- Collaborate with digital media companies and progressive brands to understand how we might foster an advertising system that's not based on invasive profiling, ad fraud, and brand degradation. How might we work with publishers on better consent management platforms/CMPs? And perhaps also with opt-in ad providers, like Good Loop?

- Check out Global Public Inclusive Infrastructure’s work in the area of dynamic identities, which are very difficult in practice.

- How might Mozilla's project around improving private browsing relate?

- Understand and build on the online behaviour of teenagers. They often live and breathe multiple identities online and offline.

- Review the years of discussion around 'intention casting' from Project VRM

- How can we move personally identifiable information away from server farms and back to local control?
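
One of the questions above asks how people might generate anonymous identifiers such as email addresses. A minimal sketch of one possible approach follows: derive a stable alias per site from a secret that never leaves the user's device, so two sites cannot link their aliases to each other. The `make_alias` function and the `relay.example` domain are assumptions for illustration, and the sketch leaves out mail forwarding entirely.

```python
import hashlib
import hmac
import secrets


def make_alias(user_secret: bytes, site: str, domain: str = "relay.example") -> str:
    """Derive a stable, per-site email alias from a locally held secret."""
    # HMAC keeps the alias unpredictable to anyone who lacks the secret.
    digest = hmac.new(user_secret, site.lower().encode("utf-8"), hashlib.sha256)
    return f"{digest.hexdigest()[:16]}@{domain}"


# The secret is generated once and kept under local control (assumption).
user_secret = secrets.token_bytes(32)

# Same site gives the same alias; different sites give unrelated aliases.
print(make_alias(user_secret, "shop.example"))
print(make_alias(user_secret, "news.example"))
```

Because the alias is derived rather than stored, the user's device does not need a lookup table of identifiers, which fits the goal of keeping personally identifiable information under local control.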

Other observations from All Hands include:

- “Mix up the steps. Create data storage and ownership. Provide a way to consent to transaction via a commons. Don't rely too heavily on the algorithms to start, make that and opt-in an add on later as a premium service?”

- “I think this is valuable at disrupting the cost of the data and making it available to startups. This disruption can kill the market of tracking across website as we can have quality and quantity.”

- “Identity is a word which those with privilege identify with. How do you diversify and stretch framing so it appeals to low agency groups too?”

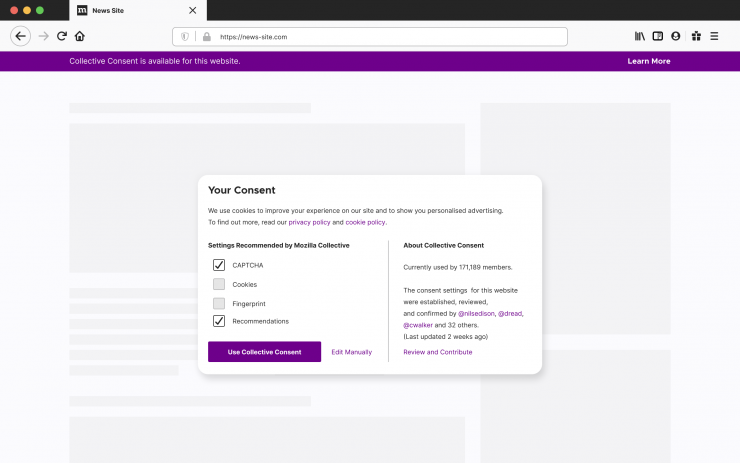

Phase Two: Building Momentum: Collective Consent Manager

Imagine a future where collectives negotiate things like consent, terms of service, and EULAs. For example, citizens of Des Moines could collectively redline and curate how the city can track them and use their data. Or employees of corporations could restrict tracking of their focus and attention. Online gamer groups could take collective action against third-party conversation collection and storage.

What problem does it solve?

Users have no power, as atomized individuals, to negotiate the terms and conditions of the way surveillance technologies track and manipulate them across digital and physical environments.

How does it solve it?

A B2C product that associates your identities with various collectives (e.g., your city, your school, your credit union, your peers), creating a path to collective action around consent to restore power balance.

With whom might we work?

- Collective interest groups and privacy advocates

- Cities (e.g. Barcelona)

- Creative Commons and EFF

How could we start?

- What might a community hub for consent look like?

- How could we create open source terms and conditions certificates?

- How could we create “playlists” of consent to which people could subscribe, and what could we learn from this?
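
To make the “playlists” idea more concrete, here is a minimal sketch of how a subscription might resolve a consent decision. It assumes that a playlist is a named mapping from data-use purpose to allow or deny, that personal overrides win over playlists, and that anything uncovered is denied. None of these rules come from the source; the class names and purposes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentPlaylist:
    """A shared set of consent decisions curated by a collective (assumed model)."""
    name: str
    rules: dict  # purpose -> True (allow) or False (deny)


@dataclass
class Subscriber:
    playlists: list = field(default_factory=list)  # ordered by priority
    overrides: dict = field(default_factory=dict)  # personal choices win

    def decide(self, purpose: str) -> bool:
        """Resolve one purpose: override first, then playlists, then deny."""
        if purpose in self.overrides:
            return self.overrides[purpose]
        for playlist in self.playlists:
            if purpose in playlist.rules:
                return playlist.rules[purpose]
        return False  # deny by default when nothing covers the purpose


# Hypothetical collective playlist and one subscriber with a personal override.
city = ConsentPlaylist("city-residents", {"service_delivery": True, "ad_profiling": False})
me = Subscriber(playlists=[city], overrides={"service_delivery": False})

for purpose in ("service_delivery", "ad_profiling", "attention_tracking"):
    print(purpose, me.decide(purpose))
```

Keeping the playlist as plain data means a collective can publish and revise it independently of any one member's overrides.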

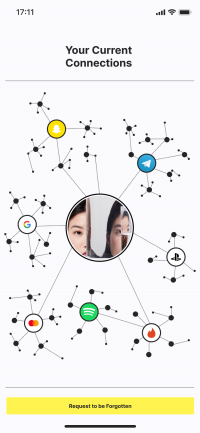

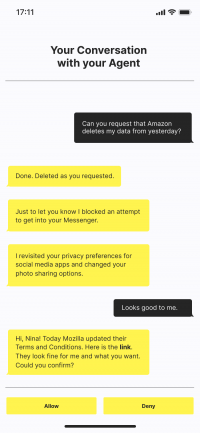

Phase Three: Reaching Our Destination: Differential Data Agents

Imagine a future of trusted digital intermediaries, enabled by intelligent gathering, collation, and presentation of users’ identities. This differential data agent would negotiate value exchanges with service providers (be that a server, an IoT device, or smart city elements) on behalf of the user—and even their collectives—based on set priorities, preferences, and contexts.

What problem does it solve?

For users, computational agents that support individuals’ needs through collective action could inject both convenience and trust.

For companies, it allows the use of data from a large group of people without the compliance risk of being responsible for that data.

How does it solve it?

A B2B product in which the collective consent service maps where the exposures lie on both sides of the marketplace and becomes the collective mediator. With visibility into a critical mass of company exposures, Mozilla could quantify how a data breach would affect a single business, and charge a service or consultancy fee for advice on how to rectify the situation.

With whom might we work?

- Legal firms or insurance providers.

- Startups in the digital consent space.

- Mozilla Fellow Richard Whitt's work with Glia.net's decentralized ecosystem of digital trust

How could we start?

Research agent-agent protocols, and explore possibilities for negotiation.
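
As a starting point for thinking about negotiation, the sketch below shows a single round between a service and a user's agent. It assumes the service labels each requested field as required or optional, and the agent answers with accept, counter, or reject. The `Offer` type, the `respond` function, and the three statuses are hypothetical; the source does not define a protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """What a service asks for; required fields cannot be dropped (assumed model)."""
    required: frozenset
    optional: frozenset


def respond(offer: Offer, permitted: frozenset) -> dict:
    """One negotiation round from the point of view of the user's agent."""
    asked = offer.required | offer.optional
    refused = asked - permitted
    if offer.required & refused:
        # A required field is off limits, so no exchange can happen.
        return {"status": "reject", "share": [], "refused": sorted(refused)}
    # Counter when only optional fields had to be dropped.
    status = "counter" if refused else "accept"
    return {"status": status, "share": sorted(asked & permitted), "refused": sorted(refused)}


# Hypothetical preferences set by the user or their collective.
permitted = frozenset({"email", "display_name"})

print(respond(Offer(frozenset({"email"}), frozenset({"location"})), permitted))
print(respond(Offer(frozenset({"location"}), frozenset()), permitted))
```

A fuller protocol would need multiple rounds, some notion of the value offered in exchange, and a way to fold in a collective's priorities, all of which are left open here.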