Necko/Cache

Overview

The disk cache stores resources fetched from the web so that they can be accessed quickly at a later time if needed. The main characteristics of the Necko disk cache are:

- The cache should not grow unbounded, so there must be an algorithm for deciding when to remove old entries. Currently, we use a very simple LRU (least recently used) policy.

- Access to previously stored data should be reasonably efficient, and it should be possible to use synchronous or asynchronous operations. HOWEVER...

- All I/O should be off the main thread.

External Interface

The majority of cache access happens through nsICacheSession and nsICacheEntryDescriptor.

An entry is identified by its key, which is just the name of the resource (for example http://www.mozilla.org/favicon.ico), plus some possible additional flags (protocol type, post ID, etc.). Once an entry is created, the data for that particular resource is stored in separate virtual files: one for the metadata (key, response headers, security info, etc.) and another one for the actual data.

Disk Structure

All the files that store the disk cache live in a single folder (you guessed it, it is called cache), and every file inside that folder is part of the cache.

There are, at a minimum, 4 files and 16 directories. The files are the cache map and the block files. If any of these files is missing, we trash the whole thing and start from scratch. The 16 directories are the top-level directories for separate-file data and metadata entries (0-F). Any data or metadata that can't fit in one of the block files is stored in a separate file named for its hash number (hashed version of its key). See Block File Structure below for the sizes that fit into block files.
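Here is a sketch of the startup check. The function reports whether every required file is present. It assumes the three block files are named _CACHE_001_ to _CACHE_003_, following the _CACHE_00x_ scheme described under Block File Structure. HasRequiredCacheFiles is a hypothetical helper. The 16 directories are deliberately not checked, because the text only ties a rebuild to missing files.

```cpp
#include <cassert>
#include <set>
#include <string>

// Hypothetical helper: true only if every file the cache needs is present.
// Names follow the _CACHE_MAP_ / _CACHE_00x_ scheme described in the text.
bool HasRequiredCacheFiles(const std::set<std::string>& present) {
    static const char* const kRequired[] = {
        "_CACHE_MAP_",  // cache map: header + records
        "_CACHE_001_",  // 256-byte block file
        "_CACHE_002_",  // 1 KB block file
        "_CACHE_003_",  // 4 KB block file
    };
    for (const char* name : kRequired) {
        if (present.count(name) == 0) {
            return false;  // any missing file: trash the cache and start over
        }
    }
    return true;
}
```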

Cache Address

Every piece of data or metadata stored by the disk cache has a given "cache address". The cache address is simply a 32-bit number that describes exactly where the data is actually located.

The structure of a cache address is defined in netwerk/cache/nsDiskCacheMap.h, and basically tells if the required data is stored inside a block file or as a separate file and the number of the file (block or otherwise). If the data is part of a block file, the cache address also has the number of the first block with the data, the number of blocks used and the type of block file.

These are a few examples of valid addresses:

| Address | Meaning |
|---|---|
| 0x00000000 | not initialized |
| 0x80xxxxyy | separate file of size xxxx bytes and generation yy |
| 0x91010003 | block file 1, 2 blocks, starting at block 3 |

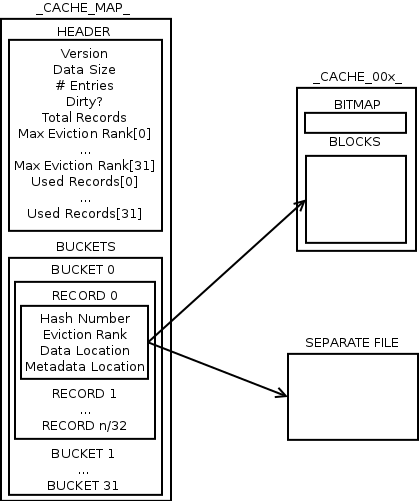
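The sketch below decodes a 32-bit cache address to make the layout concrete. The bit positions are an assumption reconstructed from nsDiskCacheMap.h:

- bit 31 marks the address as initialized.
- bits 28-29 are the file selector, where 0 means a separate file.
- bits 24-25 hold the number of extra contiguous blocks.
- bits 0-23 hold the starting block.
- for separate files only, bits 8-23 are a size field and bits 0-7 are the generation.

Check these positions against that header before relying on specific values. CacheAddress and its methods are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Assumed masks (verify against netwerk/cache/nsDiskCacheMap.h).
namespace cacheaddr {
constexpr uint32_t kInitializedMask  = 0x80000000u;
constexpr uint32_t kSelectorMask     = 0x30000000u;  // 0 = separate file, 1-3 = block file
constexpr int      kSelectorShift    = 28;
constexpr uint32_t kExtraBlocksMask  = 0x03000000u;  // block count - 1
constexpr int      kExtraBlocksShift = 24;
constexpr uint32_t kBlockNumberMask  = 0x00FFFFFFu;  // block files only
constexpr uint32_t kFileSizeMask     = 0x00FFFF00u;  // separate files only
constexpr int      kFileSizeShift    = 8;
constexpr uint32_t kGenerationMask   = 0x000000FFu;  // separate files only
}  // namespace cacheaddr

struct CacheAddress {  // hypothetical wrapper around the raw 32-bit value
    uint32_t value;

    bool IsInitialized() const { return (value & cacheaddr::kInitializedMask) != 0; }
    uint32_t FileSelector() const {
        return (value & cacheaddr::kSelectorMask) >> cacheaddr::kSelectorShift;
    }
    bool IsSeparateFile() const { return FileSelector() == 0; }

    // Block-file fields.
    uint32_t BlockCount() const {
        return ((value & cacheaddr::kExtraBlocksMask) >> cacheaddr::kExtraBlocksShift) + 1;
    }
    uint32_t StartBlock() const { return value & cacheaddr::kBlockNumberMask; }

    // Separate-file fields.
    uint32_t SizeField() const {
        return (value & cacheaddr::kFileSizeMask) >> cacheaddr::kFileSizeShift;
    }
    uint32_t Generation() const { return value & cacheaddr::kGenerationMask; }

    // Builds a block-file address: file 1-3, 1-4 contiguous blocks.
    static CacheAddress ForBlocks(uint32_t file, uint32_t start, uint32_t count) {
        assert(file >= 1 && file <= 3 && count >= 1 && count <= 4);
        assert((start & ~cacheaddr::kBlockNumberMask) == 0);
        return CacheAddress{cacheaddr::kInitializedMask |
                            (file << cacheaddr::kSelectorShift) |
                            ((count - 1) << cacheaddr::kExtraBlocksShift) | start};
    }
};
```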

Cache Map File Structure

The cache map (index) file is named _CACHE_MAP_, and its structure is specified in netwerk/cache/nsDiskCacheMap.h. It is an nsDiskCacheHeader structure followed by a large block of nsDiskCacheRecords. There are at least kMinRecordCount records, divided evenly into kBuckets buckets and grouped by the least significant bits of the hash number (kBuckets is always a power of 2; currently it is 32). Each bucket may contain unused records. nsDiskCacheHeader::mEntryCount gives the total number of used entries, and the nsDiskCacheHeader::mBucketUsage array gives the number of used records in each bucket.

This entire file is read into memory when the cache is opened.
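Below is a simplified in-memory model of this layout. It assumes each bucket occupies the same number of consecutive record slots, and that the bucket index is taken from the low bits of the hash number. Only the hash number is kept per record. CacheMapSketch and AddRecordNoGrow are hypothetical names. kBuckets = 32 and the bucket-scoped lookup follow the text.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr uint32_t kBuckets = 32;  // power of two, per the text

struct Record {  // stand-in for nsDiskCacheRecord (other fields omitted)
    uint32_t hashNumber;
};

struct CacheMapSketch {
    uint32_t recordsPerBucket;
    std::vector<Record> records;        // kBuckets * recordsPerBucket slots, bucket by bucket
    std::vector<uint32_t> bucketUsage;  // used slots per bucket (like mBucketUsage)

    explicit CacheMapSketch(uint32_t perBucket)
        : recordsPerBucket(perBucket),
          records(kBuckets * perBucket, Record{0}),
          bucketUsage(kBuckets, 0) {}

    // Low bits of the hash pick the bucket.
    static uint32_t BucketIndex(uint32_t hash) { return hash & (kBuckets - 1); }

    Record* FirstRecordInBucket(uint32_t bucket) {
        return &records[bucket * recordsPerBucket];
    }

    // Scans only the used slots of one bucket.
    Record* FindRecord(uint32_t hash) {
        const uint32_t bucket = BucketIndex(hash);
        Record* first = FirstRecordInBucket(bucket);
        for (uint32_t i = 0; i < bucketUsage[bucket]; ++i) {
            if (first[i].hashNumber == hash) return &first[i];
        }
        return nullptr;
    }

    // Appends to the bucket; returns false instead of growing when it is full.
    bool AddRecordNoGrow(uint32_t hash) {
        const uint32_t bucket = BucketIndex(hash);
        if (bucketUsage[bucket] == recordsPerBucket) return false;
        FirstRecordInBucket(bucket)[bucketUsage[bucket]++] = Record{hash};
        return true;
    }
};
```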

IMPORTANT NOTE

The array of records is searched in each of the following cases:

- Cache Miss (not in cache or doomed)

- Any cache hit where we don't have an existing binding to the record

- Any cache hit where all existing bindings to the record are in use

This search is O(n) on the total number of records in the map, both used and unused.

Block File Structure

The block-file structure is specified in netwerk/cache/nsDiskCacheBlockFile.h. It is a bitmap followed by a variable number of fixed-size data blocks. Block files are named _CACHE_00x_, where x is the file number.

The bitmap is read into memory to make it easy to find/free blocks in the file.

The three block files contain blocks of size 256B (file 1), 1kB (file 2), and 4kB (file 3). Up to 4 contiguous blocks in the file may be used by a single entry.
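Because the bitmap lives in memory, allocating blocks becomes a search for a run of free bits. This is a minimal first-fit sketch. It assumes one bit per block (set means in use) and runs of 1 to 4 blocks. BlockBitmap is a hypothetical class, and the real allocator in nsDiskCacheBlockFile may search differently.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical in-memory bitmap for one block file: bit i set = block i in use.
class BlockBitmap {
public:
    explicit BlockBitmap(uint32_t numBlocks) : used_(numBlocks, false) {}

    // First-fit search for `count` contiguous free blocks (1..4).
    // Returns the starting block index, or -1 if no such run is free.
    int32_t Allocate(uint32_t count) {
        assert(count >= 1 && count <= 4);
        uint32_t runStart = 0, runLength = 0;
        for (uint32_t i = 0; i < used_.size(); ++i) {
            if (used_[i]) { runLength = 0; continue; }
            if (runLength == 0) runStart = i;
            if (++runLength == count) {
                for (uint32_t j = runStart; j < runStart + count; ++j) used_[j] = true;
                return static_cast<int32_t>(runStart);
            }
        }
        return -1;
    }

    // Marks a previously allocated run as free again.
    void Free(uint32_t start, uint32_t count) {
        for (uint32_t j = start; j < start + count; ++j) {
            assert(used_[j]);  // freeing a free block would indicate corruption
            used_[j] = false;
        }
    }

private:
    std::vector<bool> used_;
};
```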

It is important to realize that once an entry has been written, its size cannot be increased beyond the end of its existing last block. The only way to grow an entry is to read it, delete it from the file, and write it with its new size. If an entry needs to be grown beyond the maximum size available in a particular block file, it can either be moved to the next sized block file, or (in the case it is already in the 4kB block file) moved to a separate file.
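With at most 4 blocks per entry, the numbers above give these limits:

- block file 1 holds entries up to 1 KB (4 × 256 B).
- block file 2 holds entries up to 4 KB (4 × 1 KB).
- block file 3 holds entries up to 16 KB (4 × 4 KB).
- anything larger goes to a separate file.

The sketch picks a location for a given size and checks whether an entry can grow in place. PickLocation and CanGrowInPlace are hypothetical helpers. Choosing the smallest block file that fits is an assumed policy.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kBlockSizes[] = {256, 1024, 4096};  // block files 1, 2, 3
constexpr uint32_t kMaxBlocksPerEntry = 4;

struct Location {
    uint32_t blockFile;   // 1-3, or 0 for a separate file
    uint32_t blockCount;  // blocks used (0 for a separate file)
};

// Assumed policy: smallest block file that fits in <= 4 blocks, else a separate file.
Location PickLocation(uint32_t size) {
    assert(size > 0);
    for (uint32_t i = 0; i < 3; ++i) {
        const uint32_t blocks = (size + kBlockSizes[i] - 1) / kBlockSizes[i];  // round up
        if (blocks <= kMaxBlocksPerEntry) return Location{i + 1, blocks};
    }
    return Location{0, 0};
}

// A block-file entry can only grow up to the end of its last allocated block.
bool CanGrowInPlace(const Location& loc, uint32_t newSize) {
    if (loc.blockFile == 0) return true;  // assumption: separate files have no block boundary
    return newSize <= loc.blockCount * kBlockSizes[loc.blockFile - 1];
}
```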

Separate File Structure

Each separate file may hold either a data entry or a metadata entry. The naming scheme for separate files is based on the hash number of the entry (the hash number is just an entry's ASCII key run through a hash function to turn it into a 32-bit integer). There are 2 directory levels for separate files, broken down by hash number. If you were to have an entry whose hash number is 0x1234ABCD, its metadata would be stored in 1/23/4ABCDm01 and its data would be stored in 1/23/4ABCDd01 (assuming both metadata and data were stored outside the block files and were both on their first generation).
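This sketch of the naming scheme is written to reproduce the example above (hash 0x1234ABCD, generation 1). It assumes the name is built from these parts, in order:

- the first directory is the top hex digit of the hash.
- the second directory is the next two hex digits.
- the file name starts with the remaining five hex digits.
- then comes 'm' (metadata) or 'd' (data).
- then the generation as two hex digits.

SeparateFilePath is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical helper: path of a separate file relative to the cache folder.
std::string SeparateFilePath(uint32_t hash, bool isMetadata, uint32_t generation) {
    char buf[32];
    std::snprintf(buf, sizeof(buf), "%X/%02X/%05X%c%02X",
                  static_cast<unsigned>(hash >> 28),           // level-1 directory: 0-F
                  static_cast<unsigned>((hash >> 20) & 0xFF),  // level-2 directory: 00-FF
                  static_cast<unsigned>(hash & 0xFFFFF),       // remaining 20 bits
                  isMetadata ? 'm' : 'd',
                  static_cast<unsigned>(generation & 0xFF));
    return std::string(buf);
}
```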

Data Entries

For a virtual file that holds a data entry, it is quite simply the data exactly as it was received off the network. It may therefore be gzip encoded, base64 encoded, plain text, image, etc. Note that it is only the response body that is considered data, so response headers and other information are kept in metadata entries.

Metadata Entries

The entry metadata format is defined in netwerk/cache/nsDiskCacheEntry.h. The key is stored on disk as a series of bytes terminated by a nul byte. The free-form metadata at the end is stored on disk in the form <key0>\0<value0>\0<key1>\0<value1>\0....
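Finding a value in this free-form area means walking the <key>\0<value>\0 pairs from the front. The sketch assumes the metadata is available as a byte buffer of known length. GetMetaDataElement is a hypothetical name, not necessarily the function Necko uses.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>

// Hypothetical lookup over a buffer laid out as key0\0value0\0key1\0value1\0...
// Returns a pointer to the value, or nullptr if the key is absent.
// Cost grows with the number of bytes walked before the match.
const char* GetMetaDataElement(const char* buf, std::size_t len, const char* key) {
    const char* p = buf;
    const char* const end = buf + len;
    while (p < end) {
        const char* keyEnd = static_cast<const char*>(
            std::memchr(p, '\0', static_cast<std::size_t>(end - p)));
        if (keyEnd == nullptr || keyEnd + 1 >= end) break;  // malformed tail
        const char* value = keyEnd + 1;
        const char* valueEnd = static_cast<const char*>(
            std::memchr(value, '\0', static_cast<std::size_t>(end - value)));
        if (valueEnd == nullptr) break;                     // unterminated value
        if (std::strcmp(p, key) == 0) return value;
        p = valueEnd + 1;                                   // advance to the next pair
    }
    return nullptr;
}
```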

A Diagram

Memory Structure

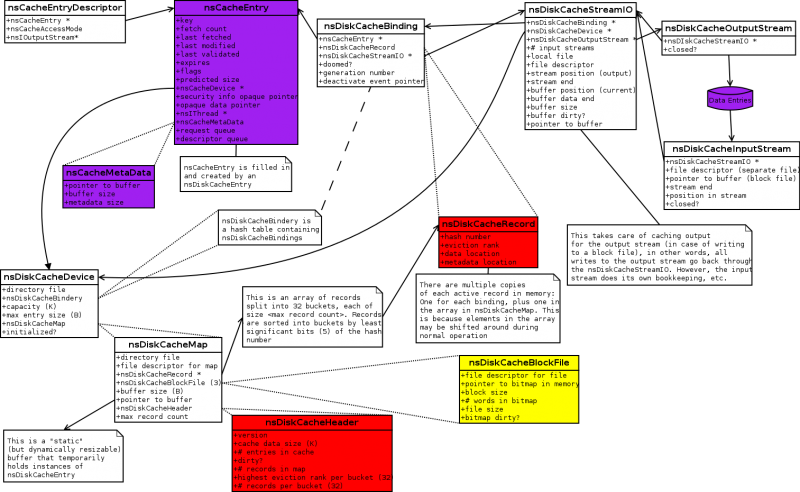

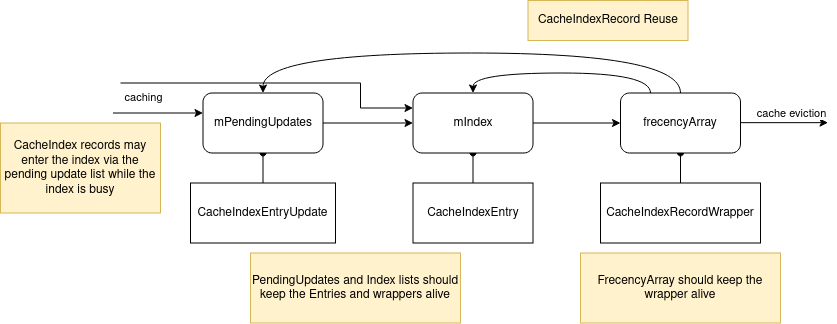

NOTE: This refers to the in-memory structures used for the disk cache, not for the memory cache, which is a separate entity entirely.

In this diagram, classes colored red come (in whole or in part) from _CACHE_MAP_, classes colored yellow come (in whole or in part) from _CACHE_00x_, classes colored purple come (in whole or in part) from block files or separate files, and all other classes are purely transitory and are never persisted to disk.

Some Walkthroughs

So, how does all this translate into getting something out of the cache? Let's walk through a few simple operations to see how it's done. Note that a LOT of details are skipped in these walkthroughs; they are only meant as overviews. If you want full details, jump into the code (links are provided at what are hopefully key points in each process).

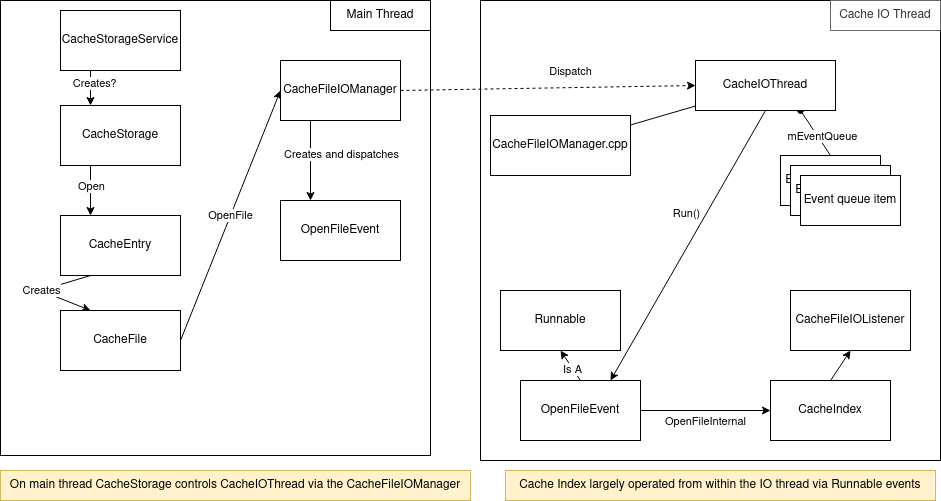

Cache Read/Write

Let's start in nsHttpChannel. When its Connect function is called (for the first time), it calls nsHttpChannel::OpenCacheEntry, which calls through to nsHttpChannel::OpenNormalCacheEntry. This function does four things:

- creates the cache key from the URI (see nsHttpChannel::GenerateCacheKey).
- determines where to store/look for the cache entry (nsHttpChannel::DetermineStoragePolicy).
- determines what access is needed for the cache entry (nsHttpChannel::DetermineCacheAccess).
- asks the singleton nsHttpHandler to give it an nsICacheSession.

We then move on to an asynchronous call to nsCacheSession::AsyncOpenCacheEntry.

When the nsCacheSession is asked to open a cache entry (either synchronously or asynchronously), it calls the class static function nsCacheService::OpenCacheEntry. nsCacheService is a collection of class static functions as well as a singleton instance. nsCacheService::OpenCacheEntry uses the singleton to create a request which is then dispatched to the cache I/O thread. The nsCacheRequest is used as an argument to nsCacheService::ProcessRequest.
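This hand-off follows a familiar pattern. The caller packages the work as a request, a single background thread processes requests in order, and the caller never blocks on disk I/O. The sketch shows that pattern with standard C++ threads. IoThread and Dispatch are hypothetical names, and this is not how nsCacheService is implemented; Necko uses Gecko's own threading primitives.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical single-threaded work queue standing in for the cache I/O thread.
class IoThread {
public:
    IoThread() : worker_([this] { Run(); }) {}
    ~IoThread() {
        {
            std::lock_guard<std::mutex> lock(mu_);
            stopping_ = true;
        }
        cv_.notify_one();
        worker_.join();  // the worker drains queued requests before exiting
    }

    // Called from the requesting thread: enqueue and return immediately.
    void Dispatch(std::function<void()> request) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            queue_.push_back(std::move(request));
        }
        cv_.notify_one();
    }

private:
    void Run() {
        for (;;) {
            std::function<void()> request;
            {
                std::unique_lock<std::mutex> lock(mu_);
                cv_.wait(lock, [this] { return stopping_ || !queue_.empty(); });
                if (queue_.empty()) return;  // stopping and nothing left to do
                request = std::move(queue_.front());
                queue_.pop_front();
            }
            request();  // disk I/O would happen here, off the caller's thread
        }
    }

    std::mutex mu_;
    std::condition_variable cv_;
    std::deque<std::function<void()>> queue_;
    bool stopping_ = false;
    std::thread worker_;  // declared last so everything above exists before it starts
};
```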

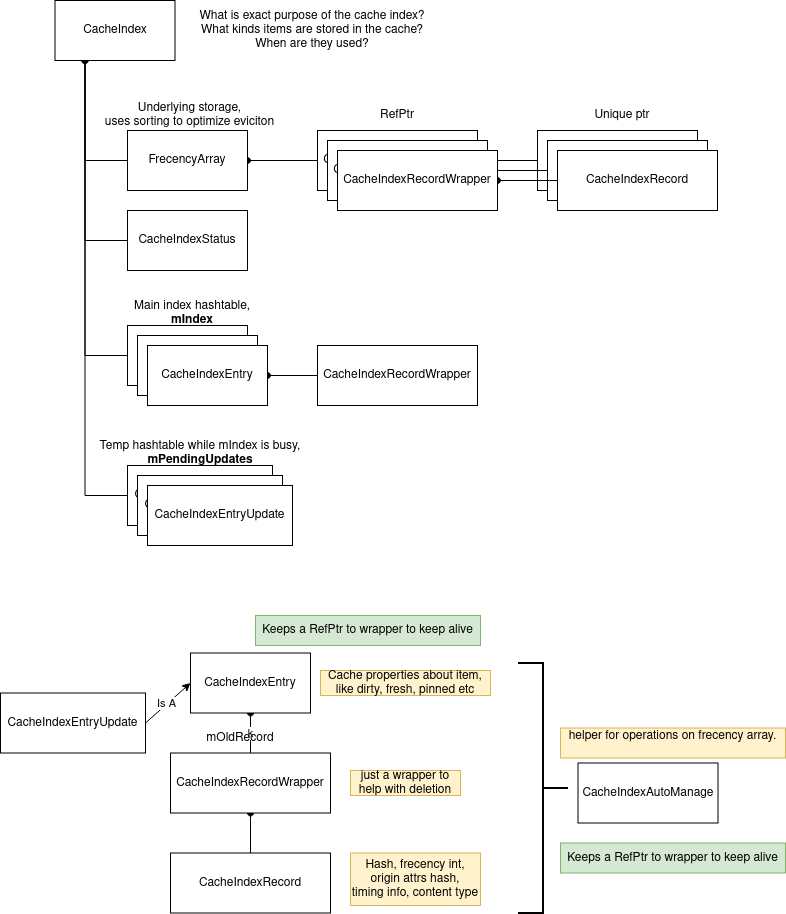

The first step in processing a cache request is to find a cache entry appropriate for the request. This is the job of nsCacheService::ActivateEntry, which first searches the hash of active entries. If there is no active entry for the request, nsCacheService::SearchCacheDevices is called. If there is no such entry on the disk device (or any other possible devices), we will create a new entry (if the client has requested to be able to write to the cache). Once we have an entry, we mark it as fetched and activate it (by placing it in the active entries hash).
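The lookup order in this paragraph can be sketched as below: active entries first, then the devices, then creation only if the caller may write. The types, the std::function device search, and the name ActivateEntrySketch are hypothetical simplifications of nsCacheService::ActivateEntry.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <unordered_map>

struct CacheEntry {  // hypothetical, heavily simplified
    std::string key;
    bool fetched = false;
};

using EntryPtr = std::shared_ptr<CacheEntry>;
using DeviceSearch = std::function<EntryPtr(const std::string&)>;  // e.g. the disk device

EntryPtr ActivateEntrySketch(std::unordered_map<std::string, EntryPtr>& activeEntries,
                             const DeviceSearch& searchDevices,
                             const std::string& key, bool clientCanWrite) {
    // 1. Already active? Reuse it.
    auto it = activeEntries.find(key);
    if (it != activeEntries.end()) return it->second;

    // 2. Ask the cache devices.
    EntryPtr entry = searchDevices(key);

    // 3. Nothing stored: create a new entry only if the client may write.
    if (!entry) {
        if (!clientCanWrite) return nullptr;
        entry = std::make_shared<CacheEntry>();
        entry->key = key;
    }

    // 4. Mark it as fetched and place it in the active-entries hash.
    entry->fetched = true;
    activeEntries[key] = entry;
    return entry;
}
```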

If we have to search the cache devices, the nsCacheService singleton will first open the disk device (if necessary, see Opening The Cache below), and then call nsDiskCacheDevice::FindEntry. This first looks in the nsDiskCacheBindery hash for an nsDiskCacheBinding with these properties:

- it has the same key (i.e., it is not a hash collision).
- it is no longer active but has not yet been marked as such.

If such a binding exists, we've found an appropriate entry. If not, we first search for the nsDiskCacheRecord for this key. nsDiskCacheMap::FindRecord is O(nvalid_records_in_bucket). Once we have the record, we read the entry metadata from disk (nsDiskCacheMap::ReadDiskCacheEntry) and create an nsCacheEntry out of it. We then create an nsDiskCacheBinding to tie the nsCacheEntry and nsDiskCacheRecord together.

Once we have our cache entry (which may or may not be bound to a device at this point), we make a callback to nsHttpChannel::OnNormalCacheEntryAvailable, which then calls nsHttpChannel::Connect for the second time. Assuming we actually had a cache entry to read from, we validate that it isn't expired or otherwise unusable (a process that is O(nmetadata_bytes)). We then call nsHttpChannel::ReadFromCache. This calls nsCacheEntryDescriptor::OpenInputStream and reads the cache data from the returned input stream asynchronously. nsDiskCacheStreamIO implements the input stream along with nsDiskCacheInputStream. It either reads the cache entry directly from disk (if it's stored in a separate file), or slurps the blocks from a block file into memory and presents that as an input stream to the consumer.

If there was no cache entry, nsHttpChannel::InstallCacheListener is called while the response is being processed. It sets up an I/O tee that sends the data both to the main listener and to the nsDiskCacheOutputStream. The cache side of the tee could conceivably target the memory cache instead.
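The I/O tee used when writing a new entry forwards each chunk of the response body to two consumers. Here is a minimal sketch. The Sink interface is hypothetical; it stands in for both the channel's main listener and nsDiskCacheOutputStream.

```cpp
#include <cassert>
#include <string>

// Hypothetical consumer interface: receives successive chunks of the body.
struct Sink {
    virtual ~Sink() = default;
    virtual void Write(const std::string& chunk) = 0;
};

// Forwards every chunk to both the main listener and the cache.
class Tee : public Sink {
public:
    Tee(Sink& listener, Sink& cache) : listener_(listener), cache_(cache) {}
    void Write(const std::string& chunk) override {
        listener_.Write(chunk);  // the page still gets its data
        cache_.Write(chunk);     // and a copy lands in the cache entry
    }

private:
    Sink& listener_;
    Sink& cache_;
};

// Simple sink that accumulates everything it receives.
struct StringSink : Sink {
    std::string data;
    void Write(const std::string& chunk) override { data += chunk; }
};
```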

During the setup for reading or writing a disk cache entry (as well as other events, such as validation, changing the size of an entry, and entry deactivation), nsCacheService::EnsureEntryHasDevice will ensure that a binding exists by calling nsDiskCacheDevice::BindEntry. This may call nsDiskCacheMap::AddRecord, which will add a record to the appropriate bucket. This operation could involve growing the size of every record bucket and shifting all the records around, an O(nrecords) operation, where nrecords is at least 512 (each record takes 16 bytes). A similar operation happens when shrinking the cache during eviction proceedings.
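The sketch below shows why growing the map costs O(nrecords). Buckets sit back to back in one array, so giving every bucket more slots moves almost every used record. It assumes growth doubles the per-bucket capacity, which is an assumed policy, and it stores only hash numbers. GrowRecords is a hypothetical helper. Buckets are moved from last to first so that no record is overwritten before it has been moved.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr uint32_t kBuckets = 32;

// `records` holds kBuckets buckets of `perBucket` slots each, back to back;
// usage[b] is how many slots of bucket b are in use.
// Doubles the per-bucket capacity in place and returns the new capacity.
uint32_t GrowRecords(std::vector<uint32_t>& records, const std::vector<uint32_t>& usage,
                     uint32_t perBucket) {
    const uint32_t newPerBucket = perBucket * 2;
    records.resize(static_cast<std::size_t>(kBuckets) * newPerBucket, 0);
    // Every bucket b >= 1 moves to a higher offset, so moving later buckets first
    // never clobbers a record that has not moved yet. Bucket 0 stays put.
    for (uint32_t b = kBuckets - 1; b > 0; --b) {
        auto src = records.begin() + static_cast<std::ptrdiff_t>(b) * perBucket;
        auto dst = records.begin() + static_cast<std::ptrdiff_t>(b) * newPerBucket;
        std::move_backward(src, src + usage[b], dst + usage[b]);
    }
    // Slots past each bucket's usage may hold stale values; usage[] says what is live.
    return newPerBucket;
}
```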

Opening The Cache

As mentioned above, the nsCacheService singleton is what takes care of opening the disk cache, using nsCacheService::CreateDiskDevice. The main work is driven by nsDiskCacheDevice::Init. It first sets up the hash table that is the nsDiskCacheBindery, and then reads information from the disk (nsDiskCacheDevice::OpenDiskCache). This makes sure the cache directory exists, possibly deletes an old, corrupt cache directory (asynchronously), and then calls nsDiskCacheMap::Open.

When the cache map is opened, it first performs some sanity checks (making sure all the right files exist). It then stores an open file descriptor for _CACHE_MAP_ and slurps the contents of the header into memory. Next it allocates an array for the nsDiskCacheRecords and slurps those into memory as well. Each of these structures is converted from network byte order (NBO) to host byte order (HBO). If this is a new cache, it creates both of these structures from scratch with a minimum of 512 nsDiskCacheRecords.

After that, nsDiskCacheMap::OpenBlockFiles iterates through each of the block files and calls nsDiskCacheBlockFile::Open on each of them. This rather simply stores a file descriptor for the block file, reads the bitmap into memory (converting from NBO to HBO), and makes sure the size of the file is approximately what's expected. Finally, the dirty bit in the nsDiskCacheHeader is set, and the header is flushed to disk (using fsync(2) or similar).
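The NBO-to-HBO step can be done portably by assembling each 32-bit field from its big-endian bytes. The sketch decodes one 16-byte record. It assumes the record is four 32-bit fields: hash number, eviction rank, data location and metadata location. These field names are assumptions modeled on nsDiskCacheRecord, and DecodeRecord is a hypothetical helper.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct DiskRecord {  // assumed 16-byte layout, see the lead-in
    uint32_t hashNumber;
    uint32_t evictionRank;
    uint32_t dataLocation;
    uint32_t metaLocation;
};
static_assert(sizeof(DiskRecord) == 16, "records are expected to be 16 bytes");

// Reads a big-endian (network byte order) 32-bit value, whatever the host order.
uint32_t ReadBigEndian32(const uint8_t* p) {
    return (static_cast<uint32_t>(p[0]) << 24) | (static_cast<uint32_t>(p[1]) << 16) |
           (static_cast<uint32_t>(p[2]) << 8) | static_cast<uint32_t>(p[3]);
}

// Converts one record as stored in _CACHE_MAP_ into host byte order.
DiskRecord DecodeRecord(const std::array<uint8_t, 16>& bytes) {
    return DiskRecord{ReadBigEndian32(&bytes[0]), ReadBigEndian32(&bytes[4]),
                      ReadBigEndian32(&bytes[8]), ReadBigEndian32(&bytes[12])};
}
```

Closing the cache performs the reverse conversion before the records are written back.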

Closing The Cache

The driver for this is nsDiskCacheDevice::Shutdown. First, this evicts cache entries if necessary (see Cache Eviction below). It then waits until the cache I/O thread is done with any work it needs to do, and then closes the nsDiskCacheMap. nsDiskCacheMap::Close does the following, in order:

- force-flushes each block file's bitmap to disk and closes the block file.
- converts each of the nsDiskCacheRecords back to network byte order.
- writes the full array of records to disk.
- clears the dirty bit in the nsDiskCacheHeader.
- force-flushes the header to disk.
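The dirty bit works as a crash marker. It is set and flushed when the cache opens, and cleared only at the end of a clean close. Finding it already set at startup therefore suggests the previous session did not shut down cleanly. The sketch assumes such a cache is then treated as corrupt and rebuilt from scratch. That trigger is an assumption, though it is consistent with the "start from scratch" behaviour described earlier. MapHeaderSketch, OpenMap and CloseMap are hypothetical names.

```cpp
#include <cassert>

struct MapHeaderSketch {  // hypothetical stand-in for nsDiskCacheHeader
    bool isDirty = false;
};

enum class OpenResult { kOpened, kRebuilt };

// On open: a header that is still dirty means the last session never reached a
// clean close, so (by assumption) the old cache is discarded and rebuilt.
OpenResult OpenMap(MapHeaderSketch& onDisk) {
    OpenResult result = OpenResult::kOpened;
    if (onDisk.isDirty) {
        onDisk = MapHeaderSketch{};  // start from scratch
        result = OpenResult::kRebuilt;
    }
    onDisk.isDirty = true;  // real code would flush this to disk immediately
    return result;
}

// On clean close: everything else is written first, the dirty bit is cleared last.
void CloseMap(MapHeaderSketch& onDisk) {
    onDisk.isDirty = false;  // real code would force-flush the header afterwards
}
```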

Cache Eviction

Eviction is used to describe the process of clearing out extra space in the cache (not to be confused with Doom, which is what happens when we get rid of a cache entry that is no longer valid). There are two ways eviction can occur: (1) by requesting the eviction through the UI or for a particular cache client (we'll call this "Client Eviction"), or (2) by noticing that we have filled up our cache and need to clear some things out (we'll call this "Automatic Eviction").

Client eviction is a relatively straightforward process; it is driven by nsDiskCacheMap::VisitRecords. This goes through each entry in the cache. If an entry matches the client ID for which we are evicting records, it is removed from its bucket. An already-checked (and therefore not evicted) record is moved into the place that was held by the now evicted record. At the end, if any records were evicted from any buckets, the eviction ranks are updated (which requires walking through all non-evicted entries one more time). This type of eviction is an O(nentries) process.

One thing to note: if the user has requested that we clear the entire cache, we treat it as if we were starting up with a corrupted cache. We move the old cache out of the way, delete it in a background thread, and create a new cache from scratch.
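The record-removal trick can be sketched for a single bucket. Scan from the end of the used records. When a record must go, copy the bucket's last used record into its slot and shrink the bucket by one; that record has already been checked and kept. Records are reduced to a hash number and a client ID, and EvictClientRecords is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

struct ClientRecord {  // hypothetical: just enough fields for the example
    uint32_t hashNumber;
    std::string clientID;
};

// Removes every record in the bucket's used prefix that belongs to `clientID`.
// `used` is the bucket's usage count; the new count is returned.
uint32_t EvictClientRecords(std::vector<ClientRecord>& bucket, uint32_t used,
                            const std::string& clientID) {
    for (uint32_t i = used; i-- > 0;) {
        if (bucket[i].clientID == clientID) {
            // Slot used-1 was visited before slot i, so it holds a kept record
            // (or is slot i itself); move it into the hole.
            bucket[i] = bucket[used - 1];
            --used;
        }
    }
    return used;
}
```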

Automatic eviction is slightly more complicated. It is driven by nsDiskCacheMap::EvictRecords, and its main process runs in a loop until we have freed up enough space. Each iteration does the following:

- finds the bucket in the cache that has the most evictable entry.
- checks each entry in that bucket for eligibility (i.e., its eviction rank is >= the rank used to choose the bucket).
- moves a non-evicted record into the place of each evicted one.
- after the whole bucket, updates that bucket's eviction rank and continues with the next iteration of the outermost loop.

In the pathological case this type of eviction is O(nentries^2). In practice it is more like O(nevict^2), where nevict is the number of entries in some hypothetical bucket that contains all the entries we will evict during the process, and ONLY those entries.
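Here is a simplified version of this loop. It assumes the following:

- a higher eviction rank means "evict sooner".
- each bucket's cached rank is the highest rank it contains, with 0 meaning nothing is left.
- every eligible record can simply be removed. The real code has more cases to handle.

RankedRecord and EvictUntilFreed are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RankedRecord {
    uint32_t evictionRank;  // assumption: higher = better eviction candidate
    uint32_t size;          // bytes freed if this record is evicted
};

using Bucket = std::vector<RankedRecord>;

uint32_t MaxRank(const Bucket& b) {
    uint32_t r = 0;
    for (const auto& rec : b) r = std::max(r, rec.evictionRank);
    return r;
}

// Evicts records until at least `target` bytes are freed or nothing evictable is left.
// Returns the number of bytes actually freed.
uint64_t EvictUntilFreed(std::vector<Bucket>& buckets, uint64_t target) {
    std::vector<uint32_t> bucketRank(buckets.size());
    for (std::size_t i = 0; i < buckets.size(); ++i) bucketRank[i] = MaxRank(buckets[i]);

    uint64_t freed = 0;
    while (freed < target) {
        // Pick the bucket holding the most evictable record.
        auto top = std::max_element(bucketRank.begin(), bucketRank.end());
        if (top == bucketRank.end() || *top == 0) break;  // rank 0: nothing left here
        const std::size_t bi = static_cast<std::size_t>(top - bucketRank.begin());
        const uint32_t rank = *top;
        Bucket& b = buckets[bi];

        // Evict every record at or above that rank, backfilling from the end.
        for (std::size_t i = b.size(); i-- > 0 && freed < target;) {
            if (b[i].evictionRank >= rank) {
                freed += b[i].size;
                b[i] = b.back();
                b.pop_back();
            }
        }
        bucketRank[bi] = MaxRank(b);  // refresh this bucket's eviction rank
    }
    return freed;
}
```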

Doom

Dooming an entry is what happens when we notice an entry is no longer valid (it is also part of the eviction process, in the case that a record to be evicted is currently in use). Initially, this is a very simple procedure. When we notice a record is due to be doomed, its record is removed from the nsDiskCacheMap. When all references to a doomed cache entry are closed (deactivated), that entry's data is removed from disk. When the cache is closed, all entries in the nsDiskCacheBindery that are marked as doomed (and have not yet been deactivated) are cleaned from storage.
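The deferred clean-up can be pictured as a reference count plus a doomed flag. Dooming removes the record from the map right away, but the entry's storage is deleted only when the last user deactivates it. DoomableEntry and CacheState are hypothetical. They model the behaviour described here, not the actual nsCacheEntry code. Deleting immediately when nobody is using the entry is an assumption.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

// Hypothetical model: `map` holds hash numbers that still have a map record,
// `storage` holds hash numbers whose data/metadata still exist on disk.
struct CacheState {
    std::set<uint32_t> map;
    std::set<uint32_t> storage;
};

class DoomableEntry {
public:
    DoomableEntry(CacheState& state, uint32_t hash) : state_(state), hash_(hash) {}

    void Open() { ++users_; }

    void Doom() {
        doomed_ = true;
        state_.map.erase(hash_);           // no new lookups can find it
        if (users_ == 0) DeleteStorage();  // assumption: nobody is using it, clean up now
    }

    void Close() {  // one user deactivates the entry
        assert(users_ > 0);
        if (--users_ == 0 && doomed_) DeleteStorage();
    }

private:
    void DeleteStorage() { state_.storage.erase(hash_); }

    CacheState& state_;
    uint32_t hash_;
    int users_ = 0;
    bool doomed_ = false;
};
```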

Implementation & Performance Notes

nsCacheService, nsDiskCacheDevice, and nsDiskCacheMap are all either explicitly or effectively singletons while a profile is open.

Resizing the nsDiskCacheMap can be a somewhat expensive operation: at a minimum, 512 16-byte records will be moved, or 8k of data. For reference, the newest processors Intel has released (i7) have 32k of L1 d-cache per core, 256k of L2 cache per core, and 8M of shared L3 cache. We may not lose our place in cache (especially the outer caches) at a smaller size. However, the maximum size disk cache has 16k entries, which is 256k of data. Of course, I imagine we don't resize this very often, so it's probably not a big deal (we should maybe get telemetry on this). The things that can change the size of the nsDiskCacheMap are:

- New cache entry

- Eviction

- Deactivate doomed entry

- Change in size of existing entry

We duplicate in-memory data by copying nsDiskCacheRecords from the nsDiskCacheMap every time we activate a record. We need to get telemetry to see how many active records we have at once, but if there is a significant number of active cache entries at any given time, duplicating those 16 bytes per entry could add up.

Eviction could be very slow (though again, we don't have any hard data on this). If we could somehow keep entries sorted by eviction rank we could avoid this possible cost (which is a task that would probably also go hand-in-hand with de-duplicating nsDiskCacheRecords).
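One way to keep entries ordered by eviction rank is a max-heap keyed on rank. Each further victim then costs O(log n) to pop instead of a scan over buckets. This only illustrates the idea with std::priority_queue. It ignores rank changes for entries already in the heap, which a real design would need to handle, for example with lazy deletion or an indexed heap. NextVictims is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// (evictionRank, hashNumber). std::priority_queue is a max-heap by default and
// pairs compare by rank first, so top() is the most evictable entry.
using RankHeap = std::priority_queue<std::pair<uint32_t, uint32_t>>;

// Pops up to `count` victims, highest eviction rank first.
std::vector<uint32_t> NextVictims(RankHeap& heap, std::size_t count) {
    std::vector<uint32_t> victims;
    while (victims.size() < count && !heap.empty()) {
        victims.push_back(heap.top().second);  // O(1) peek
        heap.pop();                            // O(log n) removal
    }
    return victims;
}
```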

We do keep data read from block files in memory for all entries that have not been deactivated, which can speed up later cache hits on these entries.

Getting values from metadata is an O(n) operation, where n is the number of bytes in the <key>\0<value>\0... string that represents the metadata of an entry. We do a lot of these lookups during cache validation in nsHttpChannel, so it seems as if we may be better served sticking this information in a hash? We already have the code infrastructure in place to do this. However, again, we have no hard data on this, so we don't know if the speedup is worth the memory trade-off.
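The hash-based alternative would parse the <key>\0<value>\0... buffer once into a table. After that, each lookup is O(1) on average, at the cost of a second in-memory copy of the keys and values. Here is a minimal sketch with std::unordered_map. BuildMetaDataIndex is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_map>

// Hypothetical: parse key0\0value0\0key1\0value1\0... once into a hash table.
// Stops at the first unterminated key or value.
std::unordered_map<std::string, std::string> BuildMetaDataIndex(const char* buf,
                                                                 std::size_t len) {
    std::unordered_map<std::string, std::string> index;
    const std::string data(buf, len);
    std::size_t pos = 0;
    while (pos < data.size()) {
        const std::size_t keyEnd = data.find('\0', pos);
        if (keyEnd == std::string::npos) break;
        const std::size_t valueEnd = data.find('\0', keyEnd + 1);
        if (valueEnd == std::string::npos) break;
        // emplace keeps the first occurrence, matching a front-to-back scan.
        index.emplace(data.substr(pos, keyEnd - pos),
                      data.substr(keyEnd + 1, valueEnd - keyEnd - 1));
        pos = valueEnd + 1;
    }
    return index;
}
```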