QA/Execution/Meetings/2009-11-18/MeasuringQuality

Measuring Quality Notes and Thoughts

At the next QA meeting, let's continue our discussion of concrete thoughts and ideas on quality measurement going into 2010. Please jot them down below on the wiki or in a notepad and come prepared to discuss on Wednesday. The goal of this discussion is to come up with a collaborative approach to defining quality and release criteria, and to put it into practice across our projects.

Some questions as a baseline:

- How would you measure quality in the project that you own?

- How would you define release criteria to ensure you are shipping a quality release?

- What are some ways you can demonstrate more leadership on your project?

Tony

- spending 30mins every 2 weeks with a feature developer, going over a list of fixed bugs and talking about test strategy

- Look for more regression trends across bugs after every 3.6 release. Need to get a feel for how many checkins is a "good number". For example, is 150 bugfixes landing within a week of beta a good thing? How many regressions would that cause? Or maybe it is a good number, but we need some data points to compare with.

- Figure out a better way to track qawanted. If we're not keeping up with it, developers will not use it. Currently, I see damons using it the most, but then he has to send a separate ping to get our attention. We need to be more proactive on qawanted bugs.

- I'd like developers, when giving their component area updates, to also mention any areas that could use some extra testing that week. For example, if Layout is making big changes to CSS, then it'd be good for them to mention that we should check more sites with fullscreen flash or div heavy tables. We can get in the practice of asking people, but the goal is to get them to volunteer information.

- Shifting our mindset from just testing the fix a developer asks us to test, to really asking the questions: "What other areas of exposure are there? What is the overall impact?" Scan related bugs.

Tracy

- Less manual pre-formatted regression testing (trust automation to do that)

- More proactive hammering on currently unstable areas.

- A stronger QA presence in Bugzilla

- work mostly in the highest priority areas

- get Shaver to keep to his promise of returning QA ownership to us

Aakash

- We need to advertise a lot more

- Not enough chatter initiated with community members by the group as a whole (not enough people or ineffective communication?)

- We don't experiment with what quality means in general and for specific groups

- focus groups

- test pilot proposals (feature usage)

- Need better tracking for bugzilla components (all opened bugs, resolved <different states>, verified over time)

- Community members have a hard time finding information on day-to-day tasks we do

- We don't know enough about the under-the-cover aspects of everyone's projects and it causes confusion

- Each project has its own strengths and weaknesses and the quality of the project has to be assessed on its own

- We should know a little bit more of the touch points in the code to write more focused tests and bugs (MozMill)

Juanb

- Maintenance Release Testing

- Bug verifications are important in as much as they lead us to explore paths not considered in the bug fix. These are most useful when they lead to re-opening a bug, or opening a new, related bug because the problem was not fixed or because it wasn't fixed completely.

- We should stop doing super-extensive update checks. Using a pairwise testing approach, such that we don't spend more than a half hour per stage (betatest, releasetest, etc.), should be enough. Traditionally we have been testing many paths because in the past we did not have update verification scripts as part of the build process. Once this script is able to test complete update paths randomly, we will have even less need to check these. The most important paths are the most recent.

- We should go to beta as soon as we spot-check builds, as opposed to going through smoketests, BFTs, and l10n first. This will make the builds available to many users, increasing the chance they will catch something we will not even look at while going through the usual test suites. While the builds are being used by beta users, we could continue our usual tests.

- We should have something that indicates to us where to focus our testing, based on the bug fixes for a particular release. It could be a human or an automated system that goes through the release bugs' content and comes up with a list of areas to look at. Then, when we have the ability to create customized test suites/runs, we will be able to create a test run more suitable for that release, which will increase the chance of catching a regression.

- As a follow up to the above note, we should not have to run BFTs every release, but focused testing based on changes.

- We should do everything we can to make it easy for volunteers to jump in and help, at any point during any of the stages of release testing, and we should be constantly communicating to the community the timelines for our testing. I have several ideas for this :)
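A rough sketch of the pairwise idea for update checks mentioned above, in Python. The parameter names and values (platforms, locales, source versions, channels) are made up for illustration, and the greedy selection is just one simple way to build an all-pairs set, not a description of any existing tool:

```python
from itertools import combinations, product

# Hypothetical update-test parameters; the real matrix would differ.
PARAMS = {
    "platform": ["win", "mac", "linux"],
    "locale": ["en-US", "de", "ja"],
    "from_version": ["previous", "two_back"],
    "channel": ["betatest", "releasetest"],
}


def pairwise_cases(params):
    """Greedily pick test cases until every pair of values is covered once."""
    names = list(params)

    # Every (parameter=value, parameter=value) pair that must appear somewhere.
    uncovered = set()
    for a, b in combinations(names, 2):
        for va, vb in product(params[a], params[b]):
            uncovered.add(((a, va), (b, vb)))

    # The full matrix, which is what we want to avoid running by hand.
    candidates = [
        dict(zip(names, combo))
        for combo in product(*(params[n] for n in names))
    ]

    def covers(case, pair):
        (a, va), (b, vb) = pair
        return case[a] == va and case[b] == vb

    chosen = []
    while uncovered:
        # Take the case that covers the most still-uncovered pairs.
        best = max(
            candidates,
            key=lambda case: sum(covers(case, p) for p in uncovered),
        )
        chosen.append(best)
        uncovered = {p for p in uncovered if not covers(best, p)}
    return chosen


cases = pairwise_cases(PARAMS)
for case in cases:
    print(case)
print(len(cases), "cases instead of the full matrix of 36")
```

With these hypothetical values the full matrix has 36 combinations. The pairwise set is considerably smaller but still exercises every pair of values at least once, which is the trade-off that keeps each stage short.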

- Major Release Testing

- Feature completion should not be the only criterion for release. In the planning stages of major releases, all the teams committed to it should create release criteria that are acceptable to those involved and which can help us decide whether something meets the balance between features and quality. This should include any rough measurements of crashiness and stability and ways to compare them to previous releases. How crashy could the application be, based on whatever metrics we have available, and still feel comfortable for us to release? How many locales should we ship with: fewer than before, the same as before, more? How many beta users should we have, and for how long should we let them exercise the candidate builds, before we feel comfortable shipping? What percentage of popular add-ons should be compatible before it is ok to ship? How long should we allow ourselves to message the coming of a release before we say go? What should be the release criteria of less popular platforms, and to what extent could these affect our releasing to major platforms?

- We, together with developers, should do everything we can to make it easy for volunteers with a wide range of skills to help us out in triaging, testing, developing, promoting, and caring for a feature.

- We should invest a great portion of our time triaging bugs and testing features, and we should be working on new features from the beginning, establishing a relationship with the developers working on it so they know who to turn to when they need help with their stuff.

- Along the lines of working with developers and community, we should feel comfortable asking them to provide testing scenarios and verification steps that almost anyone can follow.

- More thoughts ...

Ctalbert

- QA should be actively involved in blocker nomination triage. That can serve as a forum to talk to developers about what is going into the product and what is not. We were involved with that in 3.5, but we were not actively involved. We should make the time to study the bugs and form good opinions on what should and should not go into the product.

- More thoughts...not enough time...

Timr

- Make sure we spend time with developers to understand what is important to them to work on, where they are writing tests, and where we should create tests. We can monitor and track tests for functional coverage. We should be able to articulate what we are doing and where we are focusing based on this.

- Measure and make more visible how many tests are available for or are going in with a feature or bug fix.

Murali

- I want QA to have better empirical evidence tracking to see that we are making a difference in the quality.

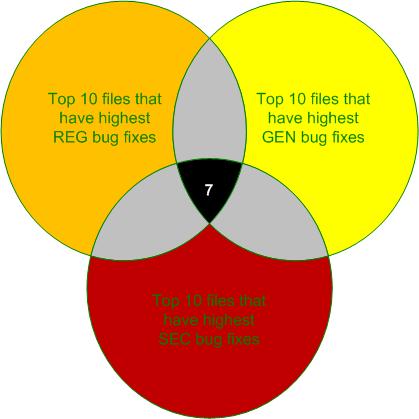

- For example: as of today, if I stack rank all the source files in Firefox in descending order based on number of general bug fixes, regression bug fixes, or security bug fixes, I see a lot of intersection in the files across all three criteria.

- As of today, if I pick the TOP 10 files that have SEC bug fixes in them, the TOP 10 files that have REG bug fixes in them, and the TOP 10 files that have GEN bug fixes in them, the Venn diagram above shows the current situation.

- The union of all three criteria is a total of 15 files.

- The intersection is SEVEN files.

- Now, let's take these files and work with development to deep dive into these SRC files.

- After a month, if we take a snapshot of the fixes that went in during the entire month, and check the top 10 files for each criterion and their intersection, that should ideally not be the same as the set we found in the previous month.

- If, over a month-to-month study of many cycles, the same set of files keeps showing up as the fix leaders of the pack, then we have not succeeded in containing the bugginess.

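A minimal sketch of the month-over-month check described above, in Python. The input format (a list of `(file, category)` pairs, one per landed fix), the file names, and the function names are assumptions for illustration; the real data would have to come from checkin and bug history:

```python
from collections import Counter

# Hypothetical fix data: one (source file, bug category) pair per landed fix.
last_month = [
    ("file_a.cpp", "SEC"), ("file_a.cpp", "SEC"), ("file_b.cpp", "SEC"),
    ("file_a.cpp", "REG"), ("file_a.cpp", "REG"), ("file_c.cpp", "REG"),
    ("file_a.cpp", "GEN"), ("file_a.cpp", "GEN"), ("file_b.cpp", "GEN"),
]
this_month = [
    ("file_a.cpp", "SEC"), ("file_a.cpp", "SEC"), ("file_d.cpp", "SEC"),
    ("file_a.cpp", "REG"), ("file_a.cpp", "REG"), ("file_d.cpp", "REG"),
    ("file_a.cpp", "GEN"), ("file_a.cpp", "GEN"), ("file_d.cpp", "GEN"),
]


def top_files(fixes, category, n=10):
    """Return the n files with the most fixes in one category."""
    counts = Counter(path for path, cat in fixes if cat == category)
    return {path for path, _ in counts.most_common(n)}


def hotspot_overlap(fixes, categories=("SEC", "REG", "GEN"), n=10):
    """Return (union, intersection) of the top-n file sets per category."""
    tops = [top_files(fixes, cat, n) for cat in categories]
    return set.union(*tops), set.intersection(*tops)


def persistence(previous, current):
    """Fraction of last period's hotspot files that are still hotspots."""
    if not previous:
        return 0.0
    return len(previous & current) / len(previous)


# n=2 only because the sample data is tiny; the notes above use the top 10.
prev_union, prev_common = hotspot_overlap(last_month, n=2)
cur_union, cur_common = hotspot_overlap(this_month, n=2)

print("last month union/intersection:", sorted(prev_union), sorted(prev_common))
print("this month union/intersection:", sorted(cur_union), sorted(cur_common))
print("persistence of hotspots:", persistence(prev_common, cur_common))
```

A persistence value that stays close to 1.0 across many cycles would mean the same files keep leading the pack, i.e. the bugginess has not been contained.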

StephenD

- Need to work more closely with developers, to understand the scope of bugs -- do this early for the main features for each release

- Increase automated coverage for core features, to free up time to test manually (ad-hoc)

- Ask development and product managers to look/talk over your current coverage

Henrik

- We need a central place people can use to send and view reports. That will allow us to start a collaboration between all active users

- Having an ongoing review cycle for tests to land in the repository

- Tests should absolutely be runnable with the current Mozmill release. There should not be a need to clone the Mozmill repository. Users can get annoyed.

- Reducing the amount of external content to lower the number of failures due to website changes. With that we can work on real failures and bugs.

- Running tests on a daily basis (smoketests/bft/update) to add more trust and catch regressions earlier

- Detailed project documentation (overview, roadmap, APIs, ...) and details of ongoing projects

- Only run Mozmill tests which are not broken due to any known bug of Firefox. It will reduce the noise of broken tests.

- Making current status more prominent and talking about it in regular meetings (testdev, dev meeting?)

- Get attention of developers for bugs found with Mozmill

- Getting more contributors on different platforms

- Actively use the Mozmill list to keep people up to date

Discussion Notes

- How can we be more impactful and vocal in a project?

- being more analytical in nature

- Murali: analysis of the files. Taking the common files across different analyses: how can we do a common analysis across these common bugfix files? (eg. intersection of security bugs, regression bugs, general bugs)

- Tracy: doing less manual grind, trust more of our automation test passes

- Developers live in Bugzilla; if they see us working in their area more, they can continue to catch that

- How can we quantify quality? How do we get a feel for where quality is at? Giving our product a measurable goal will help us change/adjust our process down the road.

- QA to be more part of the decision process. Example, if we knew we had a high # of bugs left to be fixed, is it really ready for RC quality?

- Aakash: Advertise more on quality to the different outlets we can find (eg. social networking; we are not initiating enough chatter in newsgroups). What exactly does advertising quality to the community mean?

- Explaining more to users how to use quality channels, awareness of what goes on with the mozilla project, showing charts and graphs of why firefox is better in quality now, advertising feedback tools

- Using more bug dashboards to work out which bug queries are the right ones to trend

- Community still has a hard time finding out what to do with a project. We advertise projects, but not necessarily what they can do.

- We don't know what others are doing on a project basis. We know the update on what's going on now, but we don't see the known issues in any depth

- We need to anticipate better strengths and weaknesses of earlier projects like weave and mobile (lack of historical data)

- Juan: make it easier for anyone to follow what projects and tasks we want people to help with (a template, a spreadsheet, detailed explicit instructions)

- time to reassess how we test maintenance releases. Doing BFTs every cycle is not the best use of our time.

- Develop a way to look at bugs landing in release, find a place in litmus to just run the latest bugfixes that have landed. (focused testing)

- (eg. parse the bug and component, and see which bugs are related to it. This way break down the related components (eg. tab browsing), and try to narrow down the component testing) We need better guesstimates.

- did more focused testing around areas we don't often touch (eg. security)

- Timr: spend your time really talking to developers, picking where the risks are

- it's easy to just re-run the same tests. The key thing is to work with developers to see where they are making changes, and to create more testcases there

- like the crash community, we know there are more problematic websites out there, but is there no way to determine what those sites are?

- QA to get involved back into triage meetings, actually look at those bugs. start asking questions on those bugs. push for those on 3.7 community triage meetings. And for mobile also.

- We don't have a good grasp of how many testcases exist and how much they cover. Having a way to measure how many unit tests devs have, how many functional tests we have... can we work toward that?
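The focused-testing idea from Juan's notes above (look at the bugs landing in a release and group them by component) could start as something as small as the Python sketch below. The bug records, IDs, and component names are hypothetical; in practice they would have to come from a Bugzilla query for the release:

```python
from collections import Counter

# Hypothetical list of bugs fixed in one release (IDs are placeholders).
fixed_bugs = [
    {"id": 1, "component": "Tabbed Browser"},
    {"id": 2, "component": "Layout"},
    {"id": 3, "component": "Tabbed Browser"},
    {"id": 4, "component": "Security"},
    {"id": 5, "component": "Layout"},
    {"id": 6, "component": "Tabbed Browser"},
]


def focus_areas(bugs, top=3):
    """Rank components by how many fixes landed in them for this release."""
    counts = Counter(bug["component"] for bug in bugs)
    return counts.most_common(top)


# Components with the most landed fixes are the first candidates
# for a focused test run instead of a full BFT pass.
for component, fix_count in focus_areas(fixed_bugs, top=2):
    print(component, fix_count)
```

A raw fix count is only a first guesstimate. Weighting by regression history or by how rarely an area gets tested would be natural next steps, but those weights are not defined anywhere in these notes.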

Draft 2 (Summarized)

Internal Communication

- Continued active conversation with development, through bug triage and feature test planning/updates, in large or small groups. Really asking what the overall impact of the bugfix is.

- Trending the way we look at regression bugs during the beta release period. Determining how many bugs are community finds/mozilla finds.

Products

- run automation frequently and trust results. spend more time on focused testing

- For 3.next releases, Spot check builds, and reduce smoketest/bfts/update paths. more pairwise testing

- Security builds: trust automated smoketests/bfts/updates/l10n, and devote manual coverage to spot checks; focus areas; website testing

Community

- constantly communicate the timelines of our projects

- An easy and feasible way for volunteers to hop in and join a project.

- Understanding and experimenting with how other groups and projects measure quality. Eg. focus groups, website usage

- more attention, more mentoring, more direction