Services/F1/Server/Architecture

Goals

- provide a scalable architecture to serve up to 100M users

- make sure the server is immune against third-party failures (Twitter is down)

- isolate parts that need to be specifically secured in our infrastructure

Expected Load

Based on the AMO ADU (active daily users), we have 17k daily users (as of March 21) and 1600 shares per day, which translates to 0.094 requests per user per day.

Extrapolated to 100M users, that is about 9,400,000 requests per day, or roughly 109 requests per second.

The architecture we're building should therefore support at least this load. 200 RPS seems a good goal.

Since calls are made to third-party servers, there is an incompressible amount of time spent there. One request can last up to 3 seconds, so to support 200 RPS we need to handle a real load of 600 simultaneous clients (200 requests per second × 3 seconds each).

With an asynchronous architecture the RPS increases, since the client makes at least one more request per transaction, but the number of simultaneous clients will be very low.
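The arithmetic behind these figures can be reproduced with a few lines of Python. This is only a sketch of the estimate; all input values come from the text above, and the variable names are illustrative.

```python
# Figures quoted in the text above.
adu = 17_000                  # active daily users (AMO, March 21)
shares_per_day = 1_600        # daily shares seen in the logs
target_users = 100_000_000    # scalability goal
seconds_per_day = 24 * 60 * 60

requests_per_user_per_day = shares_per_day / adu
requests_per_day = requests_per_user_per_day * target_users
average_rps = requests_per_day / seconds_per_day

# Design target and worst-case request duration from the text.
target_rps = 200
max_request_seconds = 3

# Little's law: requests in flight = arrival rate * time spent in the system.
simultaneous_clients = target_rps * max_request_seconds

print(f"{requests_per_user_per_day:.3f} requests per user per day")
print(f"{requests_per_day:,.0f} requests per day")
print(f"{average_rps:.0f} requests per second on average")
print(f"{simultaneous_clients} simultaneous clients at {target_rps} RPS")
```

The text rounds the per-user rate to 0.094 before scaling, which is why it quotes 9,400,000 requests per day rather than the slightly higher unrounded value.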

data points

The ADU is pulled from AMO; the other data comes from log analysis in Splunk.

- ADU (March 21): 17K

- Shares per day: 1600

- Shares per IP address per day: 2 (very stable number)

- Twitter: 47%

- Facebook: 36%

- Gmail: 17%

- Others: <1%

- Error Rate (1): 0.04%

(1) Errors range from expired OAuth tokens or invalid recipient email addresses for Gmail to Twitter outages.

Share Times:

These are fairly stable.

- Twitter: 300-400ms, peak: 700ms

- Facebook: 800-1500ms, peak: 2300ms

- Gmail SMTP: 2500-3500ms, peak: 7600ms
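Combining the service mix with the typical share times gives a rough traffic-weighted average. The sketch below assumes that the midpoint of each quoted range is representative and ignores the "Others" bucket (<1%); neither assumption comes from the log analysis itself.

```python
# Share of traffic per service, from the data points above.
traffic_share = {"twitter": 0.47, "facebook": 0.36, "gmail": 0.17}

# Typical share times in milliseconds. The (low, high) ranges are from the
# text; using their midpoint as the "typical" value is an assumption.
typical_range_ms = {
    "twitter": (300, 400),
    "facebook": (800, 1500),
    "gmail": (2500, 3500),
}


def midpoint(bounds):
    low, high = bounds
    return (low + high) / 2


# Traffic-weighted mean share time across the three services.
mean_ms = sum(
    traffic_share[name] * midpoint(typical_range_ms[name])
    for name in traffic_share
)

# Average number of requests in flight at the 200 RPS design target.
target_rps = 200
in_flight = target_rps * mean_ms / 1000

print(f"mean share time: {mean_ms:.0f} ms")
print(f"average requests in flight at {target_rps} RPS: {in_flight:.0f}")
```

Under those assumptions the mean share time is about 1.1 seconds, so 200 RPS would keep roughly 218 requests in flight on average, well under the 600 derived from the 3-second worst case.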

data size

On average, a user transfers 16 bytes of data per day through our servers. If a queue stores this data, that is 1.6 GB (about 1.5 GiB) per day for 100M users.
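The storage estimate is a single multiplication; the short sketch below shows it in both decimal and binary units, since the two differ slightly at this scale.

```python
bytes_per_user_per_day = 16     # average from the text
target_users = 100_000_000      # scalability goal

total_bytes = bytes_per_user_per_day * target_users

gigabytes = total_bytes / 10**9   # decimal gigabytes (GB)
gibibytes = total_bytes / 2**30   # binary gibibytes (GiB)

print(f"{total_bytes:,} bytes per day")
print(f"{gigabytes:.2f} GB or {gibibytes:.2f} GiB per day")
```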

quick stress test

Gozer ran a quick stress test of the system using Grinder, which produced [these results].

Synchronous vs Asynchronous

Synchronous:

- ++ fast to set up (uses the existing app)

- + can evolve to an async version later (by adding a GET /result)

- - need to keep connections open

- -- any service failure ends up with a 503 to the client

Asynchronous:

- ++ short service downtimes are transparent to the client

- + no need to keep connections open

- (+) (potentially) allow smart requests (batches) over services

- - more traffic (polling)

- - more pieces in the chain: more work to tweak and stabilize the architecture

- -- longer to do - requires much more coding, more tweaking

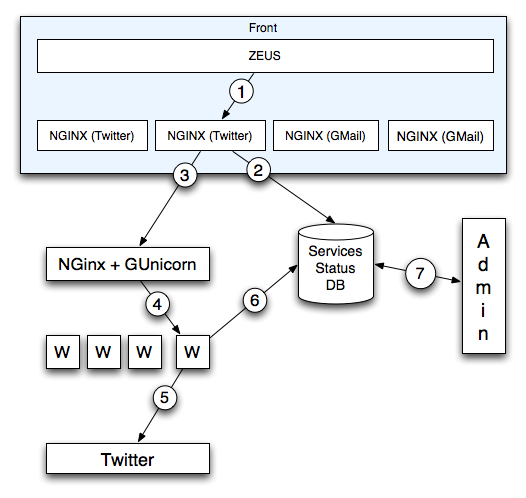

Synchronous Architecture

The synchronous architecture is composed of two layers:

- The front layer, which proxies calls to the back layer. Every request is routed to a back server.

- The back layer, which calls the third-party service and returns the response synchronously.

Both the front and back layers interact with the Services Status DB, a key/value store global to the cluster that indicates the status of each service.

Detailed transaction

1. Zeus uses an X-Target-Service header that indicates which service is going to be called. For each service there are at least two servers that can be load balanced to receive requests. Zeus uses a round-robin strategy.

2. The front server checks in the Services Status DB whether the service is up, down or broken. If it's down, it immediately returns a 503 + Retry-After. For each service there is a threshold on the ratio value below which the service is considered unreliable (i.e. broken). The Retry-After value is provided by the Services Status DB.

3. If the service is up, it proxies the request to one back-end server that is in charge of the service. There can be several back-end servers and the front server uses a round-robin strategy.

4. The back-end server proxies to the Gunicorn server, which picks a worker.

5. The worker does the job and returns the result.

6. The worker pings the Services Status DB to indicate whether the request was a success or a failure.

7. An admin application lets Ops view the current status of the services, and also shut down a specific service if needed.
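Steps 2 and 3 above can be sketched as a small in-memory model. This is a minimal illustration, not the actual implementation: `ServiceStatus`, `FrontServer`, the good/(good + bad) success ratio, the 0.5 threshold, and the choice to answer 503 for a broken service as well as a down one are all assumptions made for the example.

```python
import itertools
from dataclasses import dataclass


@dataclass
class ServiceStatus:
    """Hypothetical record kept in the Services Status DB for one service."""
    good: int = 0            # successful calls reported by workers
    bad: int = 0             # failed calls reported by workers
    disabled: bool = False   # set by Ops through the admin application
    retry_after: int = 30    # seconds; illustrative value

    def state(self, threshold):
        if self.disabled:
            return "down"
        total = self.good + self.bad
        # Assumption: "broken" means the success ratio fell below the threshold.
        if total and self.good / total < threshold:
            return "broken"
        return "up"


class FrontServer:
    def __init__(self, status_db, backends, threshold=0.5):
        self.status_db = status_db    # service name -> ServiceStatus
        # One round-robin iterator over the back-end servers of each service.
        self.backends = {
            name: itertools.cycle(servers) for name, servers in backends.items()
        }
        self.threshold = threshold

    def handle(self, headers, body):
        service = headers["X-Target-Service"]
        status = self.status_db.get(service, ServiceStatus())
        if status.state(self.threshold) != "up":
            # Fail fast instead of tying up a back-end worker.
            return 503, {"Retry-After": str(status.retry_after)}, b""
        backend = next(self.backends[service])
        return backend(body)


def make_backend(name):
    """Stand-in for a back-end server: echoes the body and names itself."""
    def backend(body):
        return 200, {"X-Backend": name}, body
    return backend


status_db = {
    "twitter": ServiceStatus(good=95, bad=5),
    "gmail": ServiceStatus(disabled=True, retry_after=120),
}
front = FrontServer(
    status_db,
    {
        "twitter": [make_backend("back1"), make_backend("back2")],
        "gmail": [make_backend("back3")],
    },
)

first = front.handle({"X-Target-Service": "twitter"}, b"hello")
second = front.handle({"X-Target-Service": "twitter"}, b"hello")
refused = front.handle({"X-Target-Service": "gmail"}, b"hello")
```

Here the two Twitter requests alternate between the two back-end servers, while the Gmail request is refused without reaching a back-end because Ops disabled the service.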

Services Status DB

See https://wiki.mozilla.org/Services/F1/Server/ServicesStatusDB

Failures

1. Each service has at least two front servers. If they are all down, Zeus returns a 503 + Retry-After

2. Each front server asks the Services Status DB for the status of the service. If the DB is unreachable, it sends the request to a back-end server anyway. If the DB returns a value that indicates that the service is down, it returns a 503 + Retry-After.

3. Each front server has at least two back-end servers to proxy requests to. If they are all down, it returns a 503 + Retry-After

4. The back-end server passes the request to a worker. If the worker fails to reach the service after a given time, it returns a 503 + Retry-After and reports the status to the Services Status DB.

5. If the worker cannot reach the Services Status DB, it ignores the failure.

6. If the worker crashes while processing the request, a 500 is returned and the Services Status DB is pinged.
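Failure cases 4 to 6 can be illustrated with a small wrapper around the worker's job. This is a sketch under assumptions: `run_worker`, `call_service` and `report_status` are hypothetical names, a service timeout is modelled as a `TimeoutError`, a worker crash as any other exception caught by the wrapper, and an unreachable Services Status DB as a `ConnectionError`.

```python
def run_worker(call_service, report_status, retry_after=30):
    """Run one request and return (status_code, headers, body)."""
    try:
        body = call_service()
        outcome, response = "success", (200, {}, body)
    except TimeoutError:
        # Case 4: the third-party service did not answer in time.
        outcome = "failure"
        response = (503, {"Retry-After": str(retry_after)}, b"")
    except Exception:
        # Case 6: the job itself crashed.
        outcome, response = "failure", (500, {}, b"")

    try:
        report_status(outcome)
    except ConnectionError:
        # Case 5: the status DB is unreachable, which must not fail the request.
        pass
    return response


reports = []


def ok():
    return b"sent"


def too_slow():
    raise TimeoutError("service did not answer in time")


def buggy():
    raise ValueError("unexpected payload")


def status_db_down(outcome):
    raise ConnectionError("status DB unreachable")


print(run_worker(ok, reports.append))
print(run_worker(too_slow, reports.append))
print(run_worker(buggy, reports.append))
print(run_worker(ok, status_db_down))
```

The key design point is that reporting to the status DB happens after the response is decided, so a DB outage never changes what the client receives.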

Security

The API keys for each service are stored in the back-end layer only. The front-end layer, which is accessible from outside, does not have the keys and just passes the requests along.

Long polling

The synchronous architecture keeps the connection open during the whole transaction. The front and back-end web servers both hold requests open until they complete.

The Gunicorn server uses asynchronous workers based on gevent, which provides cooperative sockets. The immediate benefit is the ability to handle a large number of simultaneous I/O-bound requests.
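As an illustration, a Gunicorn configuration file selecting the gevent worker class could look like the fragment below. The setting names are standard Gunicorn settings, but the values are hypothetical and would need tuning against real load tests.

```python
# gunicorn.conf.py (illustrative values, not the deployed configuration)

# Use gevent-based workers so that a worker blocked on a third-party
# call yields to other requests. Requires the gevent package.
worker_class = "gevent"

# Number of worker processes.
workers = 4

# Maximum number of simultaneous clients handled by each worker.
worker_connections = 1000
```

With these values the server could in principle hold 4,000 open connections, comfortably above the 600 simultaneous clients estimated earlier.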

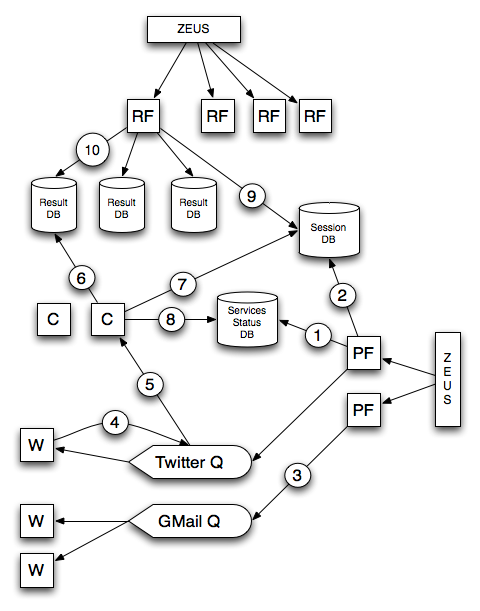

Asynchronous Architecture

Big Picture

- The Post office Front server (PF) receives a send request.

- The request is pushed into a queue.

- A worker (W) picks up the job and pushes the result into a key/value store.

- The client retrieves the result via the Receiver Front server (RF).

Detailed transaction

1. PF checks the status of a service by looking at the Services Status DB. Each service has a (GR / BR) ratio stored in that DB, where GR is the number of good responses and BR is the number of bad responses. What counts as a "good" or "bad" response is at the workers' discretion.

Given a threshold, PF can decide to return a 503 immediately, together with a Retry-After header.

2. PF creates a unique Session ID for the transaction and stores it into the Session DB.

3. PF pushes the work to be done, together with the session ID, into the right queue. It then returns a 202 code to the client.

4. A worker picks up the job and executes it. Once it gets the result, it pushes it into a response queue.

5. A Consumer picks the jobs that are done in the response queue.

6. The consumer selects a Result DB server by using a simple modulo-based shard algorithm. It pushes the result into the selected Result DB.

7. The consumer updates the Session DB by writing the Result DB server ID associated with the Session ID. Once this is done, the job is removed from the queue.

8. The consumer updates the Services Status DB with the status (G or F) provided by the worker.

9. When the user asks for the result via RF, RF looks up in the Session DB which Result DB holds the stored result.

10. The RF then picks the result and returns it.
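Steps 2 to 10 above can be sketched end to end with in-memory stand-ins for the queues and databases. This is a toy model under assumptions: the function names, the use of CRC32 for the modulo-based shard choice, the three Result DBs, and the 202 and 404 answers from RF for pending or unknown sessions are all illustrative. The status check of step 1 is left out for brevity.

```python
import queue
import uuid
import zlib

NUM_RESULT_DBS = 3
session_db = {}                                    # session id -> Result DB index
result_dbs = [{} for _ in range(NUM_RESULT_DBS)]   # one store per result server
job_queue = queue.Queue()
response_queue = queue.Queue()
status_db = {"good": 0, "bad": 0}


def post_office_front(payload):
    """Steps 2-3: create a session, enqueue the job, answer 202."""
    session_id = uuid.uuid4().hex
    session_db[session_id] = None          # None means "result not ready yet"
    job_queue.put((session_id, payload))
    return 202, session_id


def worker(send):
    """Step 4: run one job and push its outcome to the response queue."""
    session_id, payload = job_queue.get()
    try:
        outcome = ("G", send(payload))
    except Exception as exc:
        outcome = ("F", str(exc))
    response_queue.put((session_id, outcome))


def consumer():
    """Steps 5-8: store the result, record where it lives, update the status."""
    session_id, (status, result) = response_queue.get()
    shard = zlib.crc32(session_id.encode()) % NUM_RESULT_DBS
    result_dbs[shard][session_id] = (status, result)
    session_db[session_id] = shard
    status_db["good" if status == "G" else "bad"] += 1


def receiver_front(session_id):
    """Steps 9-10: find the Result DB through the Session DB, return the result."""
    if session_id not in session_db:
        return 404, None
    shard = session_db[session_id]
    if shard is None:
        return 202, None                   # still being processed
    return 200, result_dbs[shard][session_id]


code, session_id = post_office_front("hello world")
pending = receiver_front(session_id)       # asked too early
worker(lambda payload: payload.upper())    # stand-in for the third-party call
consumer()
done = receiver_front(session_id)
print(code, pending, done)
```

Because the shard index is written to the Session DB, RF never has to recompute the shard function, so the number of Result DB servers can change without breaking lookups for results that are already stored.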

Databases

The Session DB and Services Status DB are key/value stores global to the cluster and replicated in several places. They can be eventually consistent.

The Result DB is a key/value store local to a single server. It holds the result for a specific transaction.

The queues provide a publish/subscribe pattern, where each worker picks up jobs to be executed on a specific service.

Failure Scenarios and persistency

The general idea is that a transaction can be retried manually by the user if there's an issue in our system. However, our system should prevent clients from sending more work if a third-party service is down.

- if PF cannot reach one of the following, a 503 + Retry-After is sent back: the Services Status DB, the Session DB, or the queue

- if the queue crashes, the data stored should not be lost. Workers should be able to resume their subscriptions

- The job should be left in the queue until the transaction is over

- a worker that picks a job marks it as being processed

- if a worker crashes while doing the job, the marker should decay after a TTL so another worker can pick up the job

- if a consumer cannot reach the Result DB, the job stays in the queue and eventually goes away after a TTL

- if a consumer cannot reach the Session DB, the job stays in the queue and eventually goes away after a TTL

- if a consumer cannot reach the Services Status DB, the failure is ignored
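The "being processed" marker with a TTL described in the list above resembles the lease (or visibility timeout) mechanism found in several queue systems. The sketch below is a simplified single-queue model under assumptions: `LeaseQueue`, its method names and both TTL values are hypothetical, and persistence across queue crashes is not modelled.

```python
import time


class LeaseQueue:
    """In-memory queue whose jobs stay stored until acknowledged."""

    def __init__(self, lease_ttl, job_ttl, clock=time.monotonic):
        self.lease_ttl = lease_ttl   # seconds a "being processed" marker lasts
        self.job_ttl = job_ttl       # seconds before an unfinished job is dropped
        self.clock = clock
        self.jobs = {}               # job id -> [payload, created_at, lease_expiry]

    def put(self, job_id, payload):
        self.jobs[job_id] = [payload, self.clock(), 0.0]

    def claim(self):
        """Return (job_id, payload) for an available job, or None."""
        now = self.clock()
        for job_id, job in list(self.jobs.items()):
            payload, created_at, lease_expiry = job
            if now - created_at >= self.job_ttl:
                del self.jobs[job_id]            # the job outlived its TTL
            elif lease_expiry <= now:
                job[2] = now + self.lease_ttl    # mark as being processed
                return job_id, payload
        return None

    def ack(self, job_id):
        """The transaction is over: remove the job for good."""
        self.jobs.pop(job_id, None)


# A fake clock makes the behaviour easy to follow without sleeping.
now = [0.0]
jobs = LeaseQueue(lease_ttl=10, job_ttl=60, clock=lambda: now[0])
jobs.put("job-1", "share on twitter")

first_claim = jobs.claim()     # a worker takes the job
second_claim = jobs.claim()    # nothing available while the marker is valid
now[0] = 11                    # the first worker crashed; its marker expired
retry_claim = jobs.claim()     # another worker can pick up the same job
jobs.ack("job-1")              # result stored, job removed
```

Keeping the job in the queue until it is acknowledged is what lets a second worker recover from a crash, at the cost of possibly executing the same job twice.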

XXX

Security

XXX explains the level of security of each piece -- who holds the app key, the tokens, etc

OAuth Dance

XXX