
Data Takeover

Bringing new value, transparency, and power to the people through control and ownership.

The Problem to Solve

With little value, little understanding, and little usability of their data, people have little incentive to demand control over it.

Our data, on its own, has little value.

But when combined, as a collective, our patterns and trends create the insights and model training data that innovation may need, especially for pressing problems in complex systems like health, transportation, climate, and government. While we must narrow the kinds of data collection and use that harm individuals and the public, there must also be a way to use data collections for good.

Today’s data sets are created under opaque and often exploitative conditions, making them deeply flawed.

Inferences and labels are produced by exploited individuals from a narrow demographic group, with no checks or protections against bias and exclusion.

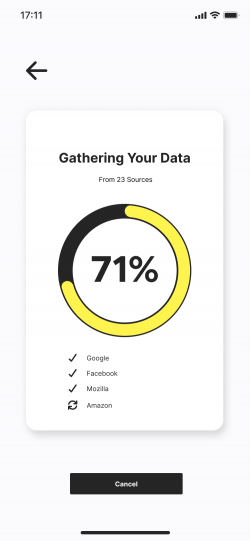

Requesting personal data is a right, but the process is designed to protect the current system and to be as user-unfriendly as possible.

Even though the CCPA and GDPR give many people the right to request their personal data, it is almost impossible to grasp who owns which parts of your data and how that shapes your private and public life. When requested data is shared back, it is usually very complicated and rarely interoperable or portable.

Overall Approach

Give people power over their faceted identities to reduce the data risk they’re exposed to.

By giving people visibility into and control over their collected data, we can help them claim their ownership and take back power from major data aggregators and brokers.

Create a transparent data supply chain. In a post-data-breach world, people should have clarity and transparency around how, where, and why their data is used. Open up the black box so people can see how their experiences and choices are shaping, and are shaped by, machine learning.

Create ‘value’ from data requests.

Right now, people can request their data, but they have no way to make sense of it or find new value in it. As more legislation like the CCPA and GDPR comes into play, people will need this capability if policy is to make good on its promise.

Tactics to Explore

Allow people to collectively contribute to open datasets, understand algorithmic impacts on our lives with new transparency, and eventually create personal data storage and access applications.

By curating and standardizing common datasets, we can build the platforms and services that make this possible.

Assumptions to Test

If we can bring new value, transparency, and power to the people through control and ownership, we can...

Reduce Exposure… By finding value in open, collective datasets.

Reduce Exclusion… By creating demand for unbiased data with provenance and other details, and by helping companies without troves of data have access to good, collective sets.

Reduce Exploitation… By shining a light on algorithmic impact on our lives.

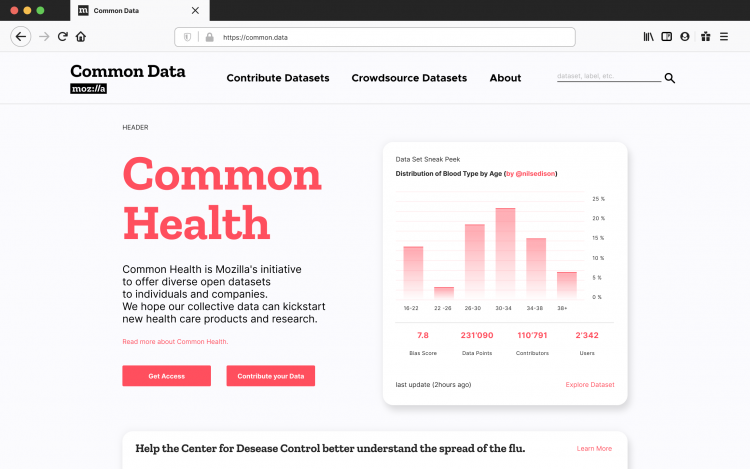

Phase 1: Diving In: Collectively Owned Open Data

Imagine an option where datasets are no longer treated like confidential business assets, but as dynamic, accessible, transparent resources for everyone, from students to start-ups, from NGOs to Coca-Cola.

AI-driven services could prioritize people’s control through a high-quality, ‘ethically sourced’ data system, making data less biased. Access could then be licensed on a usage basis for academics, small businesses, and corporations. The increased transparency these datasets offer would give people, including legislators, the information and power needed to demand change.

What problem does it solve?

For users, it opens up the black box so people can see how their experiences and choices are shaping, and are shaped by, machine learning. Opt-in data collectives also provide a foundation for making data from CCPA and GDPR requests usable.

For companies, which often lack the internal capabilities to do much with the troves of data they gather, and for whom outright owning this data creates risk, the data is often unrefined and low quality, and therefore perpetuates bias. Collectives could instead provide higher-quality, ‘ethically sourced’ data on a ‘need to know’ basis.

How does it solve it?

For companies and other organizations, a B2B product like Common Voice, with dynamic and genuinely representative datasets, is far more useful.

For the user, services that show how their lives at the individual and collective level are shaped by algorithms could be built on top of these data collectives. These insights could inform future choices and effective regulation as well as spur further innovation.

With whom might we work?

- Pre-series B startups who lack adequate ‘ethical’ data.

- Companies that are trying to solve this data infrastructure and capabilities problem, like Databricks and Digi.me.

- Academic researchers and government.

- Groups of people who would find immediate value in sharing such data (e.g. people with mental health issues who are being ‘redlined’ for insurance coverage)

- Institute for Local Self Reliance (US focus)

How could we start?

- Enable Firefox users to send CCPA and GDPR requests from within the browser and share their data in a privacy-preserving collective. We could start with 'lead users' willing to experiment.

- The GDPR and CCPA mean people can obtain all sorts of data about themselves. What could we help them do with this data, and how could they find value in it? E.g., data from Uber drivers. In which verticals is there the most opportunity?

- Enable Firefox users to opt-in and share their browsing data with select researchers who can analyze how their digital lives are shaped by machine learning ("Pioneer v2")

- Evaluate and prioritize where there is a public benefit and business need for clean, unbiased datasets that could be crowdsourced. For example, where are there questionable business practices in which collective ownership could help change the dynamics or provide parallel ‘oversight’? (e.g. Uber drivers and their data) Expand the Common Voice model and direct CCPA and GDPR requests in those areas.

- Evaluate the technical and business needs around collating, cleaning, and curating existing open data sets.

- Sponsor competitions to make the ‘unknown’ known and actionable. How could we help the average person understand who has what data about them and make taking appropriate action—and seeing the resulting benefits—convenient and easy?

- Investigate how to incentivize contribution.

- What might we learn from Netscape’s DMOZ directory project? And how might we work with our communities (e.g. Mozilla “maven” users) to create and share recommendations for devs to use?

- How might we inject more risk into the system for data holders by linking Firefox Monitor data breach alerts to actual legal action that victims can take?

- How can this concept support decentralized data?

- What might collective consent look like in this digital concept? Relationship to digital signing?

- Might WASM be a useful tool for data mining in this more ethical and just model?

- (Related to all Explorations) A cross-org, cross-functional deep dive into how we might leverage Firefox Accounts (FxA) as a starting point for giving control back to users.

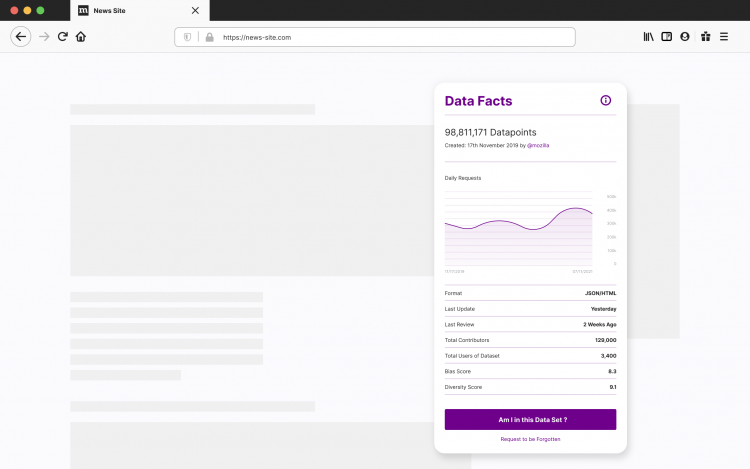

Phase 2: Building Momentum: Unboxing the Black Box

Imagine a way to make the algorithmic impacts on our lives transparent. Through customer demand, regulation, and procurement, this new knowledge could drive a movement for less data collection, ‘ethically sourced’ data, and changes elsewhere in the ‘AI stack.’ Dataset users could apply quality ratings, including for lack of bias and fraud, and add recommendations or refinements to the dataset description—each one labeled like food packaging nutrition details.
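The ‘nutrition label’ idea can be made concrete as a small data structure that carries provenance alongside user-submitted ratings and refinements. The Python below is a minimal sketch under stated assumptions: the class names (`DatasetLabel`, `Rating`), the field names, the rating categories, and the 1 to 5 scale are hypothetical illustrations, not an existing standard or a Mozilla design.

```python
from dataclasses import dataclass, field
from statistics import mean


# Hypothetical schema: every name and field here is an illustrative assumption.
@dataclass
class Rating:
    rater: str      # the dataset user submitting the rating
    category: str   # e.g. "lack_of_bias" or "lack_of_fraud"
    score: int      # assumed scale: 1 (poor) to 5 (excellent)


@dataclass
class DatasetLabel:
    name: str
    source: str                 # where the data came from (provenance)
    collection_method: str      # e.g. "opt-in contribution"
    permitted_uses: list[str]
    ratings: list[Rating] = field(default_factory=list)
    refinements: list[str] = field(default_factory=list)  # suggested description edits

    def add_rating(self, rating: Rating) -> None:
        # Reject scores outside the assumed 1-5 scale.
        if not 1 <= rating.score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings.append(rating)

    def summary(self) -> dict[str, float]:
        """Average score per category, like the rows on a food nutrition label."""
        by_category: dict[str, list[int]] = {}
        for r in self.ratings:
            by_category.setdefault(r.category, []).append(r.score)
        return {cat: round(mean(scores), 2) for cat, scores in sorted(by_category.items())}


# Usage with made-up example values.
label = DatasetLabel(
    name="voice-clips-en",
    source="opt-in contributors",
    collection_method="opt-in contribution",
    permitted_uses=["academic research", "small business"],
)
label.add_rating(Rating("lab-a", "lack_of_bias", 4))
label.add_rating(Rating("startup-b", "lack_of_bias", 3))
label.add_rating(Rating("lab-a", "lack_of_fraud", 5))
print(label.summary())  # {'lack_of_bias': 3.5, 'lack_of_fraud': 5}
```

Keeping ratings as raw records rather than a single stored score means the label can always be recomputed and audited, which fits the transparency goal; how raters are verified is left open here.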

What problem does it solve?

In a post-data-breach world, users get clarity and transparency around how, where, and why their data is used. According to Pew, just 9% of Americans believe they have “a lot of control” over the information that is collected about them, while 74% say it’s important to be in control of who can get information about them.

How does it solve it?

It begins to create a data supply chain that works to the public’s benefit by creating demand for unbiased data with provenance. Licensing around the datasets (and its enforcement) can drive better behaviour by the companies using the data.

With whom might we work?

‘Explainable AI’ practitioners and advocates (e.g., companies like IBM or Stitch Fix that are building tech that quantifies the value derived from data and where it shows up). Publishers, brands, and companies that work on ad attribution.

How could we start?

- Build out ‘ethically sourced’ datasets for developers working with voice technologies. How might we combine our vision for an open voice tech stack with such datasets, starting with Common Voice? How might we use this to learn which data labels people and developers care about (e.g., source, or where the data is being used)?

- Work with Firefox ‘lead users’ and researchers to create a way for browsing behaviour to be shared. In combination with other data sources, analyze the impact of AI on their lives as well as the data risk to select companies. Use this as a benchmark against which to show the benefits of a lean-data, provenance-based system.

- Evaluate what a ratings system for ‘ethically sourced’ data might look like.

- How might we use blockchain/tokens to prove and enforce provenance?

- Explore how we could anonymize data in a way that maintains quality yet allows for provenance monitoring.

- How might we repurpose ad-blocking tech to detect bad data behaviour and even provide rigour to a data quality ratings system?

- Begin using our policy strength to require transparent ‘data provenance’ in AI-driven areas that are crucial to public welfare, either because of the level of damage or benefit they could bring.
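The blockchain/tokens question rests on a simpler primitive that may be worth prototyping first: a hash chain, in which each provenance entry commits to the hash of the entry before it, so any rewrite of history becomes detectable. The Python below is a minimal sketch assuming a single log keeper and pseudonymous actor IDs; `ProvenanceLog`, `record`, and `verify` are hypothetical names, and there is no consensus, token, or anonymization layer.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Hypothetical append-only log of events in a dataset's life."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, dataset: str, event: str, actor: str) -> dict:
        # Link the new entry to the hash of the previous one.
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"dataset": dataset, "event": event, "actor": actor, "prev": prev}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link back to the previous entry.
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


# Usage with made-up, pseudonymous example values.
log = ProvenanceLog()
log.record("voice-clips-en", "contributed", "volunteer-123")
log.record("voice-clips-en", "licensed", "startup-b")
print(log.verify())  # True
log.entries[0]["actor"] = "someone-else"  # tamper with history
print(log.verify())  # False
```

A chain like this only makes tampering evident; a log keeper with full control could still recompute the whole chain, which is the gap a distributed ledger or independently published checkpoints would need to close.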

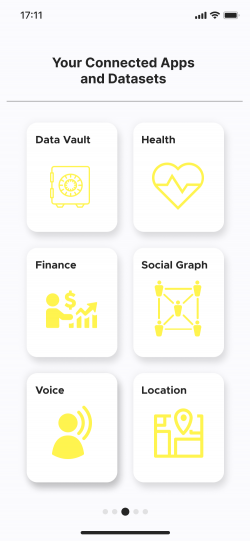

Phase 3: Reaching Our Destination: Personal Data Storage and Applications

Imagine a portable, encrypted and interoperable platform from which data could be accessed, stored on- or offline, and requested from 3rd parties. New products and services could be created based upon data owned and managed by users, at the individual or collective level. People could create their own social graph, run algorithms locally on health data, analyze their own data and telemetry.

What problem does it solve?

This would take power away from major data brokers and aggregators. Most of the more than 4,000 data brokers operating today engage in systematic dark patterns around the 500 million consumers worldwide whose data they harvest.

How does it solve it?

For the user, individuals and collectives effectively become data brokers and disrupt the power imbalance. Mozilla could become the GitHub for user- and collective-controlled data brokering and monetize the positive externalities by building services on top, or by allowing others to do so.

With whom might we work?

On the tech side, the blockchain and broader distributed ledger community, which has solved an analogous problem elsewhere, such as in finance.

How could we start?

- Drive standardization of how PII is shared in response to CCPA and GDPR requests.

- Create a centralized way to request personal data from a distributed, federated source. How might we do this while supporting local control of the data?

- Work with marginalized communities to understand what applications would help their lives. Prioritize these applications.

- Launch challenges—or work with existing grand challenges, like MIT Solve—to drive app development around things that actually matter to the world, not what matters to Silicon Valley VCs.

Comments, Stay Informed + Get Involved

Email: state-of-the-internet@mozilla.com

Join #stateoftheinternet on Slack (Staff + NDA Contributors)
