Foundation/AI: Difference between revisions

(→2020 OKRs: removed table, converted to bulleted list.) |

(→Trustworthy AI Brief V0.9: replaced with readme text and opening of mark's blog.) |

||

| Line 12: | Line 12: | ||

<div style="display:block;-moz-border-radius:10px;background-color:#b7b9fa;padding:20px;margin-top:20px;"> | <div style="display:block;-moz-border-radius:10px;background-color:#b7b9fa;padding:20px;margin-top:20px;"> | ||

<div style="display:block;-moz-border-radius:10px;background-color:#FFFFFF;padding:20px;margin-top:20px;"> | <div style="display:block;-moz-border-radius:10px;background-color:#FFFFFF;padding:20px;margin-top:20px;"> | ||

= Trustworthy AI | = Background: Mozilla and Trustworthy AI = | ||

'' | ''Downloadable versions of previous issue briefs are available here: [https://mzl.la/AIIssueBrief https://mzl.la/AIIssueBrief], [https://mzl.la/IssueBriefv01 v0.1], [https://drive.google.com/file/d/1o8bK5qmMYzABk9aEO21bjW3_y1vKuXgB/view?usp=sharing v0.6], and [https://mzl.la/IssueBriefV061 v0.61].'' | ||

In 2019, Mozilla Foundation decided that a significant portion of its internet health programs would focus on AI topics. We launched that work a little over a year ago, with a post arguing that: [https://marksurman.commons.ca/2019/03/06/mozillaaiupdate/ if we want a healthy internet -- and a healthy digital society -- we need to make sure AI is trustworthy]. AI, and the large pools of data that fuel it, are central to how computing works today. If we want apps, social networks, online stores and digital government to serve us as people -- and as citizens -- we need to make sure the way we build with AI has things like privacy and fairness built in from the get go. | |||

Since writing that post, a number of us at Mozilla -- along with literally hundreds of partners and collaborators -- have been exploring the questions: What do we really mean by ‘trustworthy AI’? And, what do we want to do about it? | |||

'''How do we collaboratively make trustworthy AI a reality?''' | |||

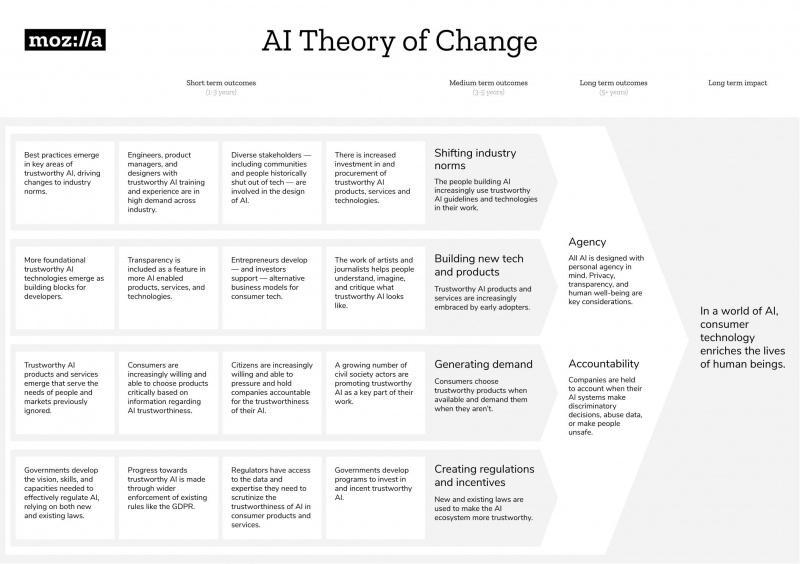

We think part of the answer lies in collaborating and gathering input. In May 2020, we launched a request for comment on v0.9 of Mozilla’s Trustworthy AI Whitepaper -- and on the accompanying theory of change (see below) that outlines the things we think need to happen. | |||

'' What is trustworthy AI and why? '' | |||

We have chosen to use the term AI because it is a term that resonates with a broad audience, is used extensively by industry and policymakers, and is currently at the center of critical debate about the future of technology. However, we acknowledge that the term has come to represent a broad range of fuzzy, abstract ideas. Mozilla’s definition of AI includes everything from algorithms and automation to complex, responsive machine learning systems and the social actors involved in maintaining those systems. | |||

Mozilla is working towards what we call trustworthy AI, a term used by the European High Level Expert Group on AI. '''Mozilla defines trustworthy AI as AI that is demonstrably worthy of trust. Privacy, transparency, and human well-being are key considerations and there is accountability for harms.''' | |||

Mozilla’s | Mozilla’s theory of change (below) is a detailed map for arriving at more trustworthy AI. It focuses on AI in consumer technology: general purpose internet products and services aimed at a wide audience. This includes products and services from social platforms, apps, and search engines, to e-commerce and ride sharing technologies, to smart home devices, voice assistants, and wearables. | ||

AI | |||

'' About Mozilla '' | '' About Mozilla '' | ||

Mozilla | The ‘trustworthy AI’ activities outlined in the white paper are primarily a part of the movement activities housed at the Mozilla Foundation — efforts to work with allies around the world to build momentum for a healthier digital world. These include: thought leadership efforts like the Internet Health Report and the annual Mozilla Festival, fellowships and awards for technologists, policymakers, researchers and artists, and advocacy to mobilize public awareness and demand for more responsible tech products. Mozilla’s roots are as a collaborative, community driven organization. | ||

Mozilla’s roots are as a collaborative, community driven organization. We are constantly looking for | Mozilla’s roots are as a collaborative, community driven organization. We are constantly looking for | ||

allies and collaborators to work with on our trustworthy AI efforts. | allies and collaborators to work with on our trustworthy AI efforts. | ||

For more on Mozilla’s values, see: [https://www.mozilla.org/en-US/about/manifesto/]. Our Trustworthy AI | For more on Mozilla’s values, see: [https://www.mozilla.org/en-US/about/manifesto/]. Our Trustworthy AI | ||

| Line 160: | Line 51: | ||

and building an internet that enriches the lives of individual human beings (principles 3). | and building an internet that enriches the lives of individual human beings (principles 3). | ||

For more on Trustworthy AI programs, see [https://wiki.mozilla.org/Foundation/AI https://wiki.mozilla.org/Foundation/AI] | |||

<div style="display:block;-moz-border-radius:10px;background-color:#666666;padding:20px;margin-top:20px;"> | <div style="display:block;-moz-border-radius:10px;background-color:#666666;padding:20px;margin-top:20px;"> | ||

Revision as of 16:00, 12 May 2020

In 2019, Mozilla Foundation decided that a significant portion of its internet health programs would focus on AI topics. This wiki provides an overview of the issue as we see it, our theory of change and Mozilla's programmatic pursuits for 2020. Above all, it opens the door to collaboration from others.

Watch our January 2020 All Hands Plenary for more information.

Background: Mozilla and Trustworthy AI

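Downloadable versions of previous issue briefs are available here: https://mzl.la/AIIssueBrief, v0.1 (https://mzl.la/IssueBriefv01), v0.6 (https://drive.google.com/file/d/1o8bK5qmMYzABk9aEO21bjW3_y1vKuXgB/view?usp=sharing), and v0.61 (https://mzl.la/IssueBriefV061).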

In 2019, Mozilla Foundation decided that a significant portion of its internet health programs would focus on AI topics. We launched that work a little over a year ago, with a post (https://marksurman.commons.ca/2019/03/06/mozillaaiupdate/) arguing that if we want a healthy internet -- and a healthy digital society -- we need to make sure AI is trustworthy. AI, and the large pools of data that fuel it, are central to how computing works today. If we want apps, social networks, online stores and digital government to serve us as people -- and as citizens -- we need to make sure the way we build with AI has things like privacy and fairness built in from the get-go.

Since writing that post, a number of us at Mozilla -- along with literally hundreds of partners and collaborators -- have been exploring the questions: What do we really mean by ‘trustworthy AI’? And, what do we want to do about it?

How do we collaboratively make trustworthy AI a reality?

We think part of the answer lies in collaborating and gathering input. In May 2020, we launched a request for comment on v0.9 of Mozilla’s Trustworthy AI Whitepaper -- and on the accompanying theory of change (see below) that outlines the things we think need to happen.

What is trustworthy AI and why?

We have chosen to use the term AI because it is a term that resonates with a broad audience, is used extensively by industry and policymakers, and is currently at the center of critical debate about the future of technology. However, we acknowledge that the term has come to represent a broad range of fuzzy, abstract ideas. Mozilla’s definition of AI includes everything from algorithms and automation to complex, responsive machine learning systems and the social actors involved in maintaining those systems.

Mozilla is working towards what we call trustworthy AI, a term used by the European High Level Expert Group on AI. Mozilla defines trustworthy AI as AI that is demonstrably worthy of trust. Privacy, transparency, and human well-being are key considerations and there is accountability for harms.

Mozilla’s theory of change (below) is a detailed map for arriving at more trustworthy AI. It focuses on AI in consumer technology: general purpose internet products and services aimed at a wide audience. This includes products and services from social platforms, apps, and search engines, to e-commerce and ride sharing technologies, to smart home devices, voice assistants, and wearables.

About Mozilla

The ‘trustworthy AI’ activities outlined in the white paper are primarily a part of the movement activities housed at the Mozilla Foundation -- efforts to work with allies around the world to build momentum for a healthier digital world. These include thought leadership efforts like the Internet Health Report and the annual Mozilla Festival; fellowships and awards for technologists, policymakers, researchers and artists; and advocacy to mobilize public awareness and demand for more responsible tech products.

Mozilla’s roots are as a collaborative, community-driven organization. We are constantly looking for allies and collaborators to work with on our trustworthy AI efforts.

For more on Mozilla’s values, see: https://www.mozilla.org/en-US/about/manifesto/. Our Trustworthy AI goals framework builds on key manifesto principles including agency (principle 5), transparency (principle 8) and building an internet that enriches the lives of individual human beings (principle 3).

For more on Trustworthy AI programs, see https://wiki.mozilla.org/Foundation/AI

Theory of Change

The Theory of Change update will enable Mozilla & our allies to take both coordinated and decentralized action in a shared direction, towards collective impact on trustworthy AI.

It seeks to define:

- Tangible changes in the world we and others will pursue (aka long term outcomes)

- Strategies that we and others might use to pursue these outcomes

- Results we will hold ourselves accountable to

Many people have tried to come up with the right word to describe what 'good AI' looks like -- ethical, responsible, healthy.

The term we find most useful is 'trustworthy AI', as used by the European High Level Expert Group on AI. Mozilla's simple definition is:

"AI that is demonstrably worthy of trust. Privacy, transparency and human well being are key design considerations - and there is accountability for any harms that may be caused. This applies not just to AI systems themselves, but also the deployment and results of such systems."

We plan to use this term extensively, including in our theory of change and strategy work.

2020 OKRs

MoFo 2020 OKRs [draft - March 27, 2020]

The following outlines the organization wide objectives and key results (OKRs) for Mozilla Foundation for 2020.

Theory of change

These objectives have been developed as part of a year-long strategy process that included the creation of a multi-year theory of change for Mozilla’s trustworthy AI work. The majority of objectives are tied directly to one or more short-term (1-3 year) outcomes in the theory of change.

Partnerships

Mozilla Foundation’s overall focus is on growing the movement of organizations around the world committed to building a healthier internet. A key assumption behind this work is that Mozilla maintains a small staff that is skilled at partnering, with most of its resources going into networking and supporting individuals and organizations within the movement. The 2020 OKRs include a strong focus on deepening our partnership practice.

Below is a bulleted list of our OKRs. You can read more about them here.

1. Thought Leadership

Short Term Outcome: Clear "Trustworthy AI" guidelines emerge, leading to new and widely accepted industry norms.

2020 Objective: Test out our theory of change in ways that both give momentum to other orgs taking concrete action on trustworthy AI and establish Mozilla as a credible thought leader.

Key Results:

- Publish a whitepaper theory of change (H1)

- 250 people and organizations participate in mapping to show who is working on key elements of trustworthy AI and offer feedback on the whitepaper

- 25 collaborations with partners working on concrete projects that align with short term outcomes in the theory of change

2. Data Stewardship

Short Term Outcome: More foundational trustworthy AI technologies emerge as building blocks for developers (e.g. data trusts, edge data, data commons).

2020 Objective: Increase the number of data stewardship innovations that can accelerate the growth of trustworthy AI.

Key Results:

- $3 million raised to support bold, multi-year, cross movement initiatives on data stewardship as an indicator of growing philanthropic support in this area.

- 10 awards or fellowships for prototypes or other concrete exploration re: data stewardship.

- 4 concentric “networks of practice” utilize Mozilla-housed Data x Lab

3. Consumer Power

Short Term Outcome: Citizens are increasingly willing and able to pressure and hold companies accountable for the trustworthiness of their AI.

2020 Objective: Mobilize an influential consumer audience using pivotal moments to pressure companies to make ‘consumer AI’ more trustworthy.

Key Results:

- 3m page views to ‘trustworthy AI’ content on Mozilla channels (MoFo website, social media, YouTube, etc.).

- 50k new subscribers drawn from sources (partnerships, contextual advertising, etc.) oriented towards people ages 18-35.

- 25k people share information with us (stories, browsing data, etc.) in order to gather evidence about how AI currently works and what changes are needed.

4. Movement Building

Short Term Outcome: A growing number of civil society actors are promoting trustworthy AI as a key part of their work.

2020 Objective: Partner with diverse movements to deepen intersections between their primary issues and internet health, including trustworthy AI, so that we increase shared purpose.

Key Results:

- 30% increase in partners with whom we have jointly published, launched, or hosted something that includes shared approaches to their issues and internet health (e.g. shared language, methodologies, resources or events).

- 75% of partners from these diverse movements report deepening intersection between their issues and internet health/AI.

- 4 new partnerships in the Global South report deepened intersection between their work and ours.

Timeline

Trustworthy AI fits within the big picture internet health issues that we have been tackling collectively over the past few years. And, over the last 18 months we've been collaboratively working to make this effort crisper.

Below is the paper trail of these efforts. Together, these documents tell the story of how Mozilla got to this goal and why.

Existing MoFo Theory of Change (January 2018)

- Mozilla's long-term strategy for making the internet a healthier place. The new theory of change shared above builds on this earlier work.

Impact goal summary (November 2018)

- Summarizes our recommendation to Mozilla's Board of Directors on why the impact goal focus should be 'better machine decision making' (now, trustworthy AI).

Better machine decision making issue brief (November 2018)

- Describes how Mozilla understands the issue of machine decision making and the beginning of a roadmap on areas for improvement to get us to 'better'.

Slowing Down, Asking Questions, Looking Ahead (November 2018)

- This blog summarizes the two resources above -- the 'why' of trustworthy AI and how we got here.

Mozilla, AI and internet health: an update (March 2019)

- This blog post draws direct connections between our movement building theory of change and how trustworthy AI fits into that. It answers the question: how will we shape the agenda, rally citizens and connect leaders around trustworthy AI?

Why AI + consumer tech? (April 2019)

- In April 2019, we narrowed in on consumer technology as the key area where Mozilla can have the biggest impact in the AI field.

Consider this: AI and Internet Health (May 2019)

- This blog explores the aspects of consumer technology that Mozilla should focus on. The list had been narrowed to: accountability; agency; rights; and open source.

Update: Digging Deeper on ‘Trustworthy AI’ (August 2019)

- Here, we share our long term outcomes and long term trustworthy AI goal. We had landed on agency and accountability as our outcomes and "in a world of AI, consumer technology enriches the lives of human beings" as our goal.

Privacy, Pandemics and the AI Era (March 2020)

- This blog post explores the connection between the COVID-19 pandemic and the technological solutions being proposed in response. These issues are central to the long-term impact of AI.

Privacy Norms and the Pandemic (April 2020)

- Similar to the post above, here we explore the long term data governance implications of technology deployment during the pandemic.

You can read more about the background for this project here.