Accessibility/Video a11y requirements

Accessibility for Web audio & video is an interesting beast. It is mainly understood as the need to extend content with functionality that gives people with varying disabilities (in particular deaf or blind people) access to Web content.

However, such functionality is not only useful to disabled people: it quickly becomes very useful to people who are not generally regarded as "disabled", e.g. subtitles for internationalisation or for use in loud environments, or the "mis"-use of captions for deep search and direct access to subparts of a video (see, for example, the use cases on the WHATWG wiki).

Therefore, the analysis of requirements for accessibility cannot stop at the requirements for disabled people, but will need to go further.

The aim here is to analyse as many requirements as possible for attaching additional information to audio & video files for diverse purposes. From these, we can generalise a framework for attaching such additional information, in particular to Ogg files/streams. As a specialisation of this framework, we can eventually give solutions for captions and audio annotations in particular.

As an alternative to embedding some of this information into Ogg files, it is possible to keep it in a separate file and just refer to it as related to the a/v file. However, when a/v is received inside a Web browser (or, more generally, a Web client), we do not want to open an arbitrary number of connections to a Web server to receive an a/v stream. The aim is to receive exactly one stream of data and to have this stream flexibly include more or less additional information beyond the pure audio or video data. Also, video a11y should be the task of the video author, not of the Web page author.

How to include a given data type into Ogg generally follows straightforwardly from the description of that data type, so a suggestion is included for each type below.

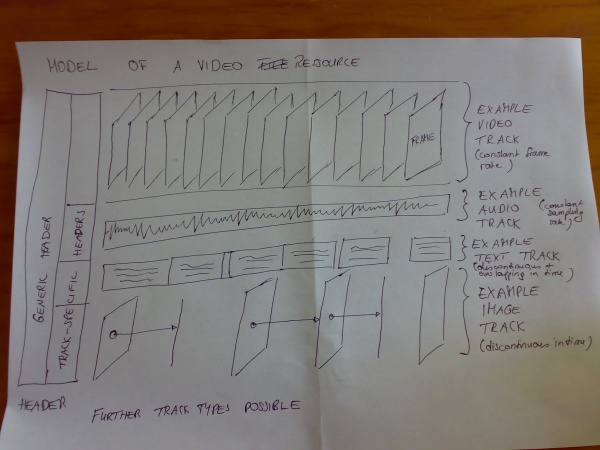

Additional Data tracks required for audio & video

Requirements for hearing-impaired people

Hearing-impaired people need a visual or haptic replacement for the auditive content of an audio or video file.

The following channels are available to replace the auditive content of an audio or video file:

- textual representation: text (or braille, which is a text display)

- visual representation: sign-language

The following are actual technologies that can provide such channels for audio & video files:

Burned-in ("open") captions

- caption text comprises the spoken content of an audio/video file plus a transcription of non-speech audio (speaker names, music, sound effects)

- open captions are caption text that is irreversibly merged into the original video frames in such a way that all viewers have to watch them

- open captions are in use today mostly for providing open subtitles on TV

Discussion:

- (-) open captions are restrictive because they are just pixels in the video and the actual caption text is not directly accessible other than through OCR

- (-) open captions can only be viewed and not (easily) be used for other purposes such as braille or search indexing

- (-) in a digital world where the distribution of additional information can easily be achieved without destroying the format of the original data (both: video and text in this case), such a transfer of captions is not preferred

- (-) no flexibility to turn off the captions

- (-) you can have only one set of open captions per video track (e.g. one video per language)

- (-) doesn't usually play nice with video

- (+) no special software needs to be implemented to enable display of open captions in a video player

MULTIPLEX INTO OGG: not necessary, since it is part of the video track

Textual (closed) captions

- closed captions are caption text that can be activated/deactivated to be displayed on top of the video

- while there is a multitude of methods to encode closed captions into TV signals, we will here refer to closed captions only as a textual representation of captions relating to a digital video file

Discussion:

- (+) textual captions can be accessed as a textual representation of the video and used for purposes that go beyond the on-screen display, e.g. for display as braille or search purposes

- (+) textual captions can be internationalised and given in multiple languages

- (-) textual captions combine the spoken content with descriptions of music and sound effects and with metadata such as speaker names in a single representation, which makes these kinds of information semantically difficult to distinguish

- (-) being extra data that accompanies a video or audio file, a player needs to be specifically adapted to support display and handling of this kind of data

MULTIPLEX INTO OGG: as extra timed text track(s)
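To make "timed text track" concrete from a player's point of view, here is a minimal sketch assuming each caption is a cue with a start time, an end time (in seconds) and its text. The `Cue` class and `active_cues` function are hypothetical names for illustration, not part of any Ogg specification. Deciding what to display at a playback position then becomes a search over cues sorted by start time.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    """One cue of a hypothetical timed text track; times are in seconds."""
    start: float
    end: float
    text: str


def active_cues(cues: list[Cue], t: float) -> list[Cue]:
    """Return the cues to display at playback time t.

    Assumes `cues` is sorted by start time and that a cue is shown for
    start <= t < end. Cues may overlap, e.g. a speaker's line and a
    sound-effect description shown at the same time.
    """
    starts = [c.start for c in cues]
    # Only cues that start at or before t can be active.
    candidates = cues[: bisect_right(starts, t)]
    return [c for c in candidates if t < c.end]
```

Because the track is plain text, the same cues can also be fed to a braille display or a search indexer, which is exactly the advantage listed above.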

Bitmap (closed) captions

- sometimes closed captions are represented as a sequence of transparent bitmaps which are overlaid onto the video at given time offsets to display the closed captions (sometimes used on DVDs)

Discussion:

- (-) like open captions, bitmap captions are restrictive because they are just pixels in the video and the actual caption text is not directly accessible other than through OCR

- (-) bitmap captions can only be viewed and not (easily) be used for other purposes such as braille or search indexing

- (+) bitmap captions can be internationalised and given in multiple languages

- (+) you can have total control over the appearance of the text (e.g. move it to where it doesn't interfere with viewing, add font styles with no need for player support, etc.)

- (-) in a digital world where the distribution of additional information can easily be achieved without destroying the format of the original data (both: video and text in this case), such a transfer of captions is not preferred

- (+) compared to open captions, bitmap captions can actually be selectively turned on and off

- (-) being extra data that accompanies a video or audio file, a player needs to be specifically adapted to support display and handling of this kind of data

MULTIPLEX INTO OGG: probably as extra timed bitmap track(s) (or extra video track)

Sign-language

- a sign language is a set of gestures, body movements, facial expressions and mouth movements used to communicate between people

- a sign language is defined for a specific localised community, e.g. American Sign Language (ASL), British Sign Language (BSL)

- there is a large number of different sign languages "spoken" around the world

- a sign language does not typically correspond to the spoken language of the same geographic area (e.g. ASL and BSL are totally unrelated languages, even though English is spoken in both the US and the UK)

- there is typically no simple means to automatically translate a spoken-language word into a sign-language representation, because sign languages typically work more on ideas and concepts than on letters and words; in addition, multiple things can be communicated in one sign that may take multiple sentences to communicate in a spoken language

- for most sign languages there is no written representation available; people instead use the written form of their local spoken language for written communication

- multiple sign-language tracks are necessary to cover internationalisation of sign-language

Discussion:

- (-) sign languages can generally only be represented through a visual recording of a person performing the language

- (-) automated analysis, transcription, or translation between text and sign-language is generally not possible

- (-) being extra data that accompanies a video or audio file, a player needs to be specifically adapted to support display and handling of this kind of data

MULTIPLEX INTO OGG: as extra video tracks, one per sign-language

Requirements for visually impaired people

Visually impaired people need a replacement of the image content of a video to allow them to follow the display of a video or any other image-based content (e.g. an image track with slides).

The following channels are available to replace the visual content of an audio or video file:

- haptic representation: braille text

- aural representation: auditive descriptions, text-to-speech (TTS) screen-reader

The following are actual technologies that can provide such channels for audio & video files:

Auditive audio descriptions

- auditive descriptions are descriptions of everything that happens only visually in a video; this includes e.g. scenery, people & objects appearing/disappearing/moving, facial expressions, weather

Discussion:

- (-) an auditive description of the visual scene is restrictive because the description is spoken and not directly accessible other than through speech recognition

- (-) auditive descriptions can only be listened to and not (easily) be used for other purposes such as braille or search indexing

- (-) it can be problematic to synchronise the auditive description with the actual timeline of the video, because the auditive description may sometimes take longer to play than the break the original video provides for it

- (-) in a digital world where the distribution of additional information can easily be achieved without destroying the format of the original data (both: text description and the video sync in this case), such a transfer of scene descriptions is not preferred

- (+) it is possible to play back the auditive description on a different audio channel from the rest of the video's audio and to speed it up separately

- (-) being extra data that accompanies a video file, a player needs to be specifically adapted to support playback and handling of this kind of data

MULTIPLEX INTO OGG: as extra audio track, with breaks edited into the original a/v file (preferably Speex encoded, since it is a pure speech track)
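The synchronisation problem and the "editing of breaks" can be made concrete with a small calculation: wherever a spoken description is longer than the natural pause it is placed in, the original a/v file must be extended (e.g. by holding a frame) by the difference. This is a minimal sketch assuming each description is placed at the start of a known pause; `Description`, `pause_extensions` and `total_added_time` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Description:
    """A spoken audio description placed into a pause of the original a/v."""
    at: float        # position of the pause in the original timeline (s)
    pause: float     # length of the natural pause in the original (s)
    duration: float  # length of the spoken description (s)


def pause_extensions(descs: list[Description]) -> list[tuple[float, float]]:
    """Return (original position, extra seconds) for every pause that is too short."""
    return [(d.at, d.duration - d.pause) for d in descs if d.duration > d.pause]


def total_added_time(descs: list[Description]) -> float:
    """Total time by which the edited a/v file becomes longer."""
    return sum(extra for _, extra in pause_extensions(descs))
```

Any caption cues after an extended pause would have to be shifted by the same amounts, which is one reason why this editing is best done by the video author.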

Textual audio descriptions

- textual representation of an audio description

Discussion:

- (+) a textual scene description can be made accessible in a multitude of ways, e.g. through a TTS & screenreader, through braille

- (+) it is possible to play back the description (e.g. through TTS) on a different audio channel from the rest of the video's audio and to speed it up separately

- (+) the textual scene description can be used for other purposes, such as search indexing

- (+) the textual scene description together with captions may coincide in many ways with the annotation needs of archives

- (-) the textual scene description may be too unstructured to be usable as a semantic representation of video

- (-) being extra data that accompanies a video file, a player needs to be specifically adapted to support playback and handling of this kind of data

MULTIPLEX INTO OGG: as extra timed text track

Requirements for internationalisation

- subtitles:
  - transcription of what is being said, in different languages
  - same options as open / closed text / closed bitmap captions above

Recommendation: closed text as timed text track

- dubbed audio tracks:
  - translation of what is being said into different languages, as audio

MULTIPLEX INTO OGG: as extra audio tracks (could be Speex, if the sound background is kept in a separate file and both are played back at the same time)

Requirements for entertainment

- karaoke:
  - remove the sung words (vocals) from the audio track and add a timed text track with the lyrics
  - similar to subtitles, with style information

MULTIPLEX INTO OGG: as timed text track

Requirements for archiving

- metadata & semantic annotations:
  - structured annotations that describe the scene and semantics
  - similar to audio descriptions, but tend to be structured into different fields

MULTIPLEX INTO OGG: as timed text track

- story board:
  - sequence of images that represent the original way in which the video was scripted
  - if it is possible to align it with the resulting video, it provides insights into the video planning and production
  - if it cannot be time-aligned with the video, it should be referenced separately (text with URL on the Web page)

MULTIPLEX INTO OGG: as timed image track

- transcript:
  - textual description of everything that happens in the video
  - basically similar to what captions and audio annotations provide together
  - it is probably better to keep the different types of annotations separate as far as possible and to call the complete set of textual annotations available for an audio/video file its "transcript"
  - if it cannot be time-aligned with the video, it should be referenced separately (text with URL on the Web page)

MULTIPLEX INTO OGG: as timed text track

- script:
  - original description of the video for production purposes
  - if it is possible to align it with the resulting video, it provides insights into the video planning and production
  - if it cannot be time-aligned with the video, it should be referenced separately (text with URL on the Web page)

MULTIPLEX INTO OGG: as timed text track

Functionalities required around additional data tracks & audio / video

Access to text from Web page

- Any text multiplexed inside an audio / video file should be exposed to the Web page, so that live region semantics can be used, i.e. assistive technologies are notified when new text appears.

Solutions:

- One way to get the text into the Web page is through inclusion into the DOM as subelements of the <video> or <audio> element.

- Another way would be to provide a JavaScript API for the text elements.
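For live region semantics, the relevant event is not which text is currently showing but which text has just appeared. This is a minimal sketch assuming cues are `(start, end, text)` tuples and that the player reports consecutive playback positions; `new_cues` is a hypothetical helper, and how its result reaches the page (a DOM live region or a JavaScript event) is left open, as in the solutions above.

```python
from typing import List, Tuple

# Hypothetical cue representation: (start seconds, end seconds, text).
TimedText = Tuple[float, float, str]


def new_cues(cues: List[TimedText], prev_t: float, t: float) -> List[str]:
    """Return the texts of cues that started in [prev_t, t).

    Called with consecutive playback positions, the half-open intervals
    never overlap, so every cue is announced exactly once during normal
    forward playback. After a seek, a player would instead announce the
    cues active at the new position.
    """
    return [text for start, _end, text in cues if prev_t <= start < t]
```

An assistive technology would then only be told about "[phone rings]" once, when it first appears, rather than on every playback tick.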

Specification needs

- Timed text tracks need to:
  - specify their primary language at the start (e.g. lang="en")
  - mark any sections given in a different language as such

- Audio tracks should:
  - specify their primary language in the header

- Sign language video tracks should:
  - specify their primary language in the header

- Hyperlinks should:
  - specify the language of the document they link to (hreflang)
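Once every track declares its primary language, a player can choose the track that best matches the user's preferred languages. This is a minimal sketch assuming BCP 47 style tags, as used by `lang` and `hreflang` (e.g. "en", "en-AU"; sign languages have their own ISO 639-3 codes such as "ase" for ASL and "bfi" for BSL), matched by RFC 4647 basic filtering without the `*` wildcard. The dictionary layout of a track and the function names are hypothetical.

```python
from typing import Dict, List, Optional


def lang_matches(lang_range: str, tag: str) -> bool:
    """RFC 4647 basic filtering without the '*' wildcard (case-insensitive)."""
    r, t = lang_range.lower(), tag.lower()
    return t == r or t.startswith(r + "-")


def pick_track(tracks: List[Dict[str, str]],
               preferences: List[str]) -> Optional[Dict[str, str]]:
    """Return the first track matching the user's languages, in preference order."""
    for pref in preferences:
        for track in tracks:
            if lang_matches(pref, track["lang"]):
                return track
    return None
```

With preferences `["fr", "en"]`, an "en-AU" caption track is chosen because "en" is a prefix of "en-AU" at a subtag boundary, whereas a range like "e" matches nothing.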

Access to structure of video / audio from Web page

- Similarly to access to the text, it is important to be able to access the structure of the multiplexed text through the Web page.

- This is of particular importance for blind users who cannot use the mouse to jump to arbitrary offsets in a video but need to rely on tabbing.

- Video (in particular video that has audio descriptions) needs to have a structure that can be accessed via tabbing through it, to allow (blind) people to directly jump to areas of interest

Discussion:

- (+) providing video with a structure for direct access to fragments is useful not just to blind people

- (+) audio should also be able to be structured in this way

Solutions:

- Both options, the DOM and a JavaScript API, are possible.
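Tab-based navigation through a video's structure reduces to finding the next or previous section boundary relative to the current playback position. This is a minimal sketch assuming the structure is available as a sorted list of section start times in seconds (e.g. derived from a timed text track); `next_section` and `previous_section` are hypothetical names, and binding them to Tab and Shift+Tab is an assumed design choice.

```python
from bisect import bisect_left, bisect_right
from typing import List, Optional


def next_section(starts: List[float], t: float) -> Optional[float]:
    """Start of the first section strictly after position t (e.g. on Tab)."""
    i = bisect_right(starts, t)
    return starts[i] if i < len(starts) else None


def previous_section(starts: List[float], t: float) -> Optional[float]:
    """Start of the last section strictly before position t (e.g. on Shift+Tab)."""
    i = bisect_left(starts, t)
    return starts[i - 1] if i > 0 else None
```

The same lookup works unchanged for audio-only files, matching the point above that audio should be structurable in the same way.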

Text Display

- Text available in association with a video should not be hidden from the user but made available.

- Text that is displayed in a Web page needs to be stylable.

Solutions:

- Define default display mechanisms for the different types of text.

- Define for the different text types which are open by default, and which can be toggled on.

- For text that can be toggled on, default icons need to be specified.

- Use style sheet mechanisms and style tags for displayable text.

Dynamic content selection

- The most suitable content for a video / audio file should be made available to the user in accordance with the user's abilities and preferences.

- This includes the ability to have the user agent ask the Web server about the available content tracks for a video.

- This also includes the ability to have the user agent tell a Web server the specific preference settings of a user, e.g. the suppression of video content for a blind user, so the Web server can adapt the content and provide only the tracks that the user prefers.

- Another example where content may need to be dynamically extended with a11y text: a caption author may provide captions for a video that they do not host, and their server could multiplex the two together and deliver them to a user agent.

Solution:

- The Web server needs to be able to dynamically compose a video from its constituent tracks according to a user agent's request. The different tracks may come from different Web servers.

- There needs to be a protocol (possibly an HTTP extension) that allows user agent and Web server to communicate about the composition.

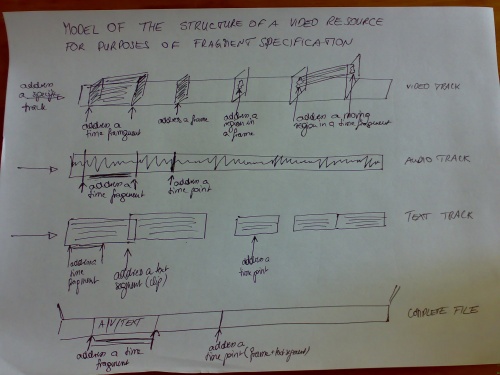
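Before the server can multiplex anything, it has to decide which tracks to include for a given user. This is a minimal sketch of that selection step only, assuming a hypothetical user profile with `blind` and `deaf` flags and a list of languages; the track kinds, the profile fields and the way a user agent would transmit the profile are all assumptions, not an existing HTTP extension. Language matching here is a plain exact comparison for brevity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Track:
    kind: str  # hypothetical kinds: "video", "audio", "captions", "sign", "audiodesc"
    lang: str  # primary language from the track header; "" if not applicable


@dataclass
class Profile:
    """Hypothetical preference settings a user agent might send to the server."""
    blind: bool = False
    deaf: bool = False
    langs: List[str] = field(default_factory=lambda: ["en"])


def select_tracks(tracks: List[Track], user: Profile) -> List[Track]:
    """Choose the tracks the server should multiplex into one stream for `user`."""
    wanted = {"audio"}
    if user.blind:
        # Suppress the video and add audio descriptions instead.
        wanted.add("audiodesc")
    else:
        wanted.add("video")
    if user.deaf:
        wanted |= {"captions", "sign"}
    return [tr for tr in tracks
            if tr.kind in wanted and (tr.lang == "" or tr.lang in user.langs)]
```

The result is exactly one stream per user, in line with the goal stated at the top of this page of avoiding multiple connections per a/v resource.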

Direct access to fragments

- In search results, in particular search results on large audio / video files, the results need to point to the fragments that actually contain the content that relates to the query.

- This makes content more accessible to any user, including disabled users.

- When the fragment is selected for playback, it should only include the fragment and not the full video.

Solution:

- A URI mechanism to address the fragment is required (see Media Fragments WG at W3C).

- A Web server needs to be able to deliver just the subpart of a video / audio that is requested (see Metavid for an example).
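On the URI side, the temporal dimension of Media Fragments looks like `video.ogv#t=10,20`. This is a minimal sketch that extracts the requested time range, assuming only the NPT time format (plain seconds, mm:ss or hh:mm:ss, optionally prefixed with `npt:`); the SMPTE and wall-clock formats and the spatial/track dimensions are not handled, and input validation is deliberately loose. The function names are hypothetical.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qsl, urlsplit


def parse_npt(value: str) -> float:
    """Parse an NPT time given as seconds ("95.5"), mm:ss or hh:mm:ss."""
    seconds = 0.0
    for part in value.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def temporal_fragment(uri: str) -> Optional[Tuple[float, Optional[float]]]:
    """Return (start, end) in seconds from a '#t=' fragment, or None if absent.

    end is None for an open-ended range ("t=30" means from 30 s to the end);
    a missing start ("t=,20") means from the beginning.
    """
    for name, value in parse_qsl(urlsplit(uri).fragment):
        if name != "t":
            continue
        if value.startswith("npt:"):
            value = value[len("npt:"):]
        start_s, _, end_s = value.partition(",")
        start = parse_npt(start_s) if start_s else 0.0
        end = parse_npt(end_s) if end_s else None
        return start, end
    return None
```

This covers only the addressing half; actually delivering the subpart would additionally require the server to locate the corresponding data in the Ogg stream, as Metavid does.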

Hyperlinking out of audio / video

- The power of the Web comes from hyperlinks between resources; data that has no outgoing hyperlinks is essentially dead data on the Web.

- Hyperlinks from within a/v to other Web resources allow users of varying abilities to follow links to related information and return for further viewing.

- Imagine being a blind user navigating a Web of audio files: hyperlinks would greatly improve the usability of the Web for blind users, who are still relatively underrepresented on the Web because of the predominantly visual nature of Web browsing.

Solution:

- One option is to enable the inclusion of <a href ..> into all timed text. The problem with that approach is that if the text is displayed as e.g. a caption inside a video, it appears and disappears too quickly for people to select and activate the link (even if they use 'tab' to get there).

- An alternative is to enable the inclusion of a hyperlink for a fragment of time and to indicate in some special way that a hyperlink is present (see e.g. CMML for an example).
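The second option can be sketched as links attached to time ranges rather than to individual caption lines, so a link stays selectable for the whole range it covers. This is a minimal sketch assuming each link has a start, an end and a target, loosely in the spirit of a CMML clip carrying an anchor; `TimedLink`, `links_at` and the optional `linger` grace period (an assumed design choice to give keyboard users extra time) are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TimedLink:
    """A hyperlink attached to a time range of the a/v file (times in seconds)."""
    start: float
    end: float
    href: str
    label: str


def links_at(links: List[TimedLink], t: float, linger: float = 0.0) -> List[TimedLink]:
    """Links a player should present as selectable at playback time t.

    `linger` keeps a link selectable for a few extra seconds after its
    range ends, so keyboard users have time to tab to it and activate it.
    """
    return [ln for ln in links if ln.start <= t < ln.end + linger]
```

With `linger=2.0`, a link whose range ends at 10 s is still offered at 11 s, addressing the problem that caption-embedded links disappear too quickly to be activated.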