Firefox OS Performance Automation

Goals

- Provide developers with timely information regarding the performance of their latest code changes

- Provide product and quality owners with enough information to know whether products will be acceptable

- Give peace of mind when Firefox OS performs well

- Inspire appropriate urgency when Firefox OS does not

Tools

Tests

Instrumented

- Launch Latency

- Relaunch Latency

- Frame Rate

- Frame Uniformity

- Checkerboarding

- Memory Consumption

- Battery Life

- Power Consumption

- Keyboard Invocation Latency

- Key Click Latency

- Data Consumption

Development Strategy

Overview

A performance automation metric consists of a type of measurement applied to a series of test cases.

Depending on the metric, these may be one basic test scenario applied to a number of apps (such as launch latency testing) or a number of distinctly designed test cases (such as scenarios for memory or power consumption testing).
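As an illustration of that structure, the Python sketch below models a metric as one measurement function applied to a list of test cases. All names here (`PerfTestCase`, `Metric`, `measure`, `run`) are hypothetical and are not part of any existing Firefox OS test harness. The stub measurement returns dummy numbers and only stands in for real instrumentation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PerfTestCase:
    """One test case, e.g. a single app for launch latency (hypothetical)."""
    name: str


@dataclass
class Metric:
    """A type of measurement applied to a series of test cases (hypothetical)."""
    name: str
    measure: Callable[[PerfTestCase], float]
    cases: List[PerfTestCase] = field(default_factory=list)

    def run(self) -> Dict[str, float]:
        # The same measurement is applied to every case in the series.
        return {case.name: self.measure(case) for case in self.cases}


# Usage with a stub measurement; a real harness would launch each app and time it.
stub_values = {"Clock": 1.0, "Settings": 2.0}  # dummy numbers, not real results
launch_latency = Metric(
    name="launch_latency",
    measure=lambda case: stub_values[case.name],
    cases=[PerfTestCase("Clock"), PerfTestCase("Settings")],
)
print(launch_latency.run())  # {'Clock': 1.0, 'Settings': 2.0}
```

A scenario-per-case metric such as memory consumption would fit the same shape by giving each `PerfTestCase` its own setup and steps instead of sharing one scenario.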

The workflow for developing a performance automation test case must be more structured than a standard unit-testing or functional-testing development effort. The reasons are:

- There are many moving parts to pull together for a single test, and often different owners for the moving parts.

- The tests are sometimes more invasive to the system-under-test than a functional test would be. This requires extreme caution; tests can break functionality or even skew their own results.

- Performance tests can be maintenance-intensive. Permanent instrumentation can also make the tested code harder to maintain. It's important that the test be worth the risk and cost.

- It's very easy to measure the wrong thing, get reasonable looking results, and trust a faulty test or receive false alerts.

- It's very easy to measure the right thing but not control the environment correctly, and trust a faulty test or receive false alerts.

- Performance bugs are hugely expensive to triage, diagnose, and fix; false alerts have significant costs.

- Once a test is running, people tend to stop looking for the problems themselves. Tests that fail to alert when they should let performance issues slip into the field and lower the perceived quality of our product.

- If performance tests prove untrustworthy, the metric as a whole may be written off, and even after the tests are repaired their results may be treated as low priority.

In short, performance tests are a mission-critical system, and require a mission-critical approach. They must be correct, and their behavioral and statistical characteristics need to be well-known. The consequences of having bad performance tests can be worse than the consequences of having no test at all.

Our workflow is described as a series of intermediate deliverables. Reviews for validity and result repeatability are placed inline with the other deliverables to ensure that each test brings positive value to Firefox OS.

With only a couple of exceptions, each deliverable depends on the previous one being completed before it can be started.

Deliverables

- Design
  - Detailed description of the test case's setup needs and setup procedure, the test procedure, execution details, and the results to deliver. This might cover a common scenario shared by many cases, or be written per case.

- Validity Review
  - Review by test stakeholders of whether the test case design meets requirements and will measure the right thing. Tests that measure the wrong thing can still give repeatable results, so it is important to review the initial design.

- Workload
  - Data (a fixture) to be preloaded into the system under test prior to execution. There are default workloads described on MDN, but some tests may need special-case workloads to be developed.

- Instrumentation
  - Code either injected into or permanently added to Firefox OS or its applications so that automation can monitor performance details. Some tests do not need this.

- On-Demand Test
  - A test that a developer can run from the command line, on the try server, or by some other means to test an arbitrary set of code.

- Results Review
  - Review by test stakeholders of a set of results from the implemented test. We sanity-check whether the results look valid (a frame rate of 70 fps on a 60 Hz screen would fail this) and whether they are repeatable (reasonably tightly grouped, assuming no code changes). This is also where we determine or finalize an estimate of test precision, based on real results.

- Published Results
  - Automated results published to a dashboard or similar display. This usually also implies having an automated triggering and execution mechanism (nightly, on-commit, etc.) to generate them.

- Documentation
  - Finalized documentation of the test case and its usage on this wiki and/or MDN.
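The Results Review checks can be sketched in Python as follows, assuming frame-rate results from several runs of the same build. The helper `review_frame_rates`, the 60 Hz default, and the 5% coefficient-of-variation threshold standing in for "reasonably grouped" are illustrative assumptions, not established Firefox OS criteria. The standard error of the mean is used here as one common way to estimate precision.

```python
import statistics
from typing import List


def review_frame_rates(samples_fps: List[float], refresh_hz: float = 60.0,
                       max_cv: float = 0.05) -> dict:
    """Sanity-check repeated frame-rate results (hypothetical helper).

    valid: no run may report more frames per second than the screen refreshes.
    repeatable: coefficient of variation (stdev / mean) is at most max_cv,
        an assumed threshold for "reasonably grouped".
    std_error: standard error of the mean, an estimate of test precision.
    """
    if len(samples_fps) < 2:
        raise ValueError("need at least two runs to judge repeatability")
    mean = statistics.mean(samples_fps)
    stdev = statistics.stdev(samples_fps)  # sample standard deviation
    return {
        "valid": max(samples_fps) <= refresh_hz,
        "repeatable": stdev / mean <= max_cv,
        "mean": mean,
        "std_error": stdev / len(samples_fps) ** 0.5,
    }


print(review_frame_rates([58.0, 59.0, 60.0])["valid"])  # True
print(review_frame_rates([58.0, 70.0, 59.0])["valid"])  # False: 70 fps > 60 Hz
```

With runs of 58, 59, and 60 fps the set is valid and repeatable with a mean of 59.0 fps; a single 70 fps run on a 60 Hz screen marks the whole set invalid, matching the example in the Results Review description.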

Illustration

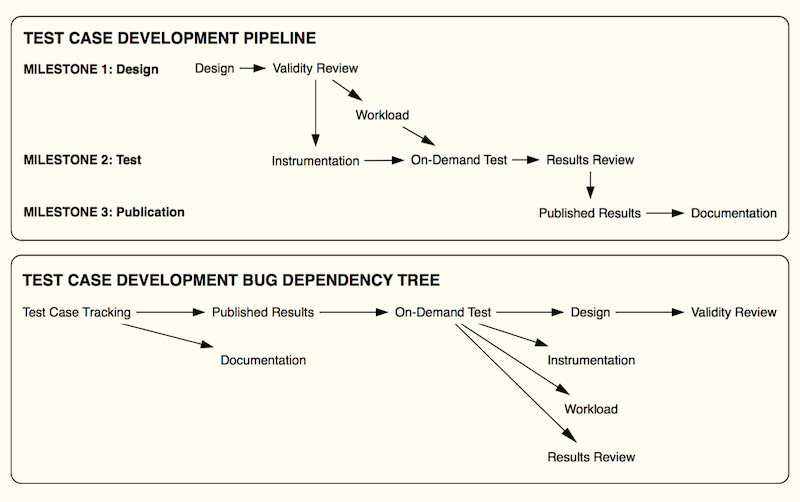

Below are two graphs illustrating the general workflow.

The first is the general order of operations of developing a performance test case, described in the above deliverables. It is split into three milestones. Milestones each result in a final deliverable, and should be each completed as a whole within a single development effort. However, they can be pipelined or freezered on a milestone-by-milestone basis.

The second is a basic bug dependency tree for a performance test case, assuming one bug per deliverable (including reviews) and that all deliverables are necessary.
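The bug dependency tree can be sketched as a directed graph using Python's standard `graphlib` module (Python 3.9+). This sketch assumes a strictly linear chain in the order the deliverables are listed, ignoring the couple of exceptions mentioned earlier, and the helper names (`build_dependencies`, `ready_bugs`) are hypothetical rather than part of any Bugzilla tooling.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# One bug per deliverable, in the order listed above (assumed linear chain).
DELIVERABLES = [
    "Design", "Validity Review", "Workload", "Instrumentation",
    "On-Demand Test", "Results Review", "Published Results", "Documentation",
]


def build_dependencies(order: List[str]) -> Dict[str, Set[str]]:
    # Map each bug to the set of bugs blocking it: here, just the previous one.
    return {item: ({order[i - 1]} if i else set()) for i, item in enumerate(order)}


def ready_bugs(deps: Dict[str, Set[str]], done: Set[str]) -> List[str]:
    # A bug can be started once every bug blocking it is resolved.
    return [bug for bug, blockers in deps.items()
            if bug not in done and blockers <= done]


deps = build_dependencies(DELIVERABLES)
print(list(TopologicalSorter(deps).static_order()))  # same order as DELIVERABLES
print(ready_bugs(deps, {"Design"}))  # ['Validity Review']
```

Modeling the tree as a graph rather than a plain list leaves room for the exceptions: a deliverable that can start early would simply list fewer blockers in `deps`.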