State Of The Internet/Surveillance Economy

Contents

- 1 Problem Statement

- 1.1 The Surveillance Economy is about more than just privacy. It’s about power.

- 1.2 The Surveillance Economy goes beyond the browser.

- 1.3 The information asymmetry underpinning the Surveillance Economy disables collective action—by design.

- 1.4 Reactionary legislation has shaped one vision for the Information Economy: A Surveillance Economy.

- 2 Research + Community Input

- 3 The Problems

- 4 Towards a Positive Vision

- 5 Three Explorations

- 6 Further Reading

- 7 Stay Informed + Get Involved

Problem Statement

We have little real choice in our digital lives other than to acquiesce to systematic data collection and surveillance by corporations.* Digital experiences are optimized to extract as much data as possible about us, our environment, and our behavior, while giving us almost no opportunity to understand what data is being collected, who has access to it, and how it’s being used to shape our behavior and opportunities. The surveillance economy gives the watchers “unprecedented ... power… distinguished by extreme concentrations of knowledge and no democratic oversight.” (Shoshana Zuboff). Yet these watchers are also sloppy and negligent, permitting their systems to be breached and gamed in ways that cause enormous harm to people, businesses, and society.

Information technologies, from the printing press to radio and television, have always created new social behaviors. But what’s different about today’s information technologies is that they don’t just broadcast to us, they interact with us. They monitor us in order to provoke specific responses: a click, a purchase, a feeling, a vote, an action. In other words, information technologies have gone from watching our behavior to shaping it. This behavioral manipulation is the engine of the Surveillance Economy, and data is its fuel.

“Google discovered that we’re less valuable than others’ bets on our future behavior. This changed everything.” - Shoshana Zuboff

The Surveillance Economy is about more than just privacy. It’s about power.

Privacy is, indeed, a way for users to regain some control. But privacy is just a salve, not a solve for the dynamics below the tip of the iceberg. With so many parties rallying around privacy today, we need to shine a light on the fact that people have been made powerless.

“Is it privacy we should care for? Or is it the feeling of powerlessness?” - Jess Mitchell, Researcher, Inclusive Design Research Centre, OCAD

“It's interesting how quickly people are willing to surrender power for convenience.” - Douglas Rushkoff, Media Theorist and Author

“Making consent more effective is incommensurate with the scope of the problem.” - Ben Wizner, Director of Speech, Privacy & Technology Project, ACLU

The Surveillance Economy goes beyond the browser.

A “data rush” is attracting new players from environments beyond traditional technology. They’re making use of masses of data and building ‘smart’ tools to collect even more. From smart cars to smart cities, from fitness trackers to toasters, the Internet is now far beyond the browser. These new technologies are Trojan Horses for always-on data collection and manipulation.

“To keep people safe online, we have to be outside of the browser.” - Peter Dolanjski, Director of Firefox Security & Privacy Products, Mozilla

“The older generation is thinking of the Internet as that thing that's on the computer. The younger generation is thinking of it as this stuff that's on my phone, it's in my purse, and then I'm walking around with it. The next generation is going to have it embedded in everything they do. God knows if there will be a browser, because you just move through the world. And in some ways, the more embedded it is in your environment, the less people perceive of it as surveillance.” - Douglas Rushkoff, Media Theorist and Author

The information asymmetry underpinning the Surveillance Economy disables collective action—by design.

There is a huge asymmetry of capacity and information between Google and its 1 billion unique users. This leaves little space for collective sense-making, organizing, and action. Making matters worse, algorithms continuously erode our sense of collective cohesion, further fragmenting our power against corporate hegemony.

“When you get this level of concentrated economic power, it very often leads to concentrated political power and these sort of very unhealthy marriages between state actors and corporations.” - Denise Hearn, Author, The Myth of Capitalism

“They know more about me than I know about them. It’s hard to have a fair or productive interaction with me when there is an extreme information asymmetry.” - Mozilla employee

Reactionary legislation has shaped one vision for the Information Economy: A Surveillance Economy.

Today, innovation still happens faster than legislation can keep up with, which often means regulators find themselves reacting to situations instead of proactively laying out the rules. From GDPR to CCPA, these policies react to the status quo, offering marginal protections at best and causing unintended consequences at worst.

“The masters of the Surveillance Economy persuaded regulators early on, and still now, that innovation is the most important value and that it’s too early for regulation. This left the space unregulated and shaped one version of the Internet—a commercial one.” - Ben Wizner, Director of Speech, Privacy & Technology Project, ACLU

How can Mozilla, working with our communities and partners, find a path forward that takes advantage of the remarkable new insights of ‘big data’ while protecting individuals and the public?

*We recognize that corporate and government surveillance are intertwined in often problematic ways. However, for this project -- at least at this stage -- we looked only at the corporate aspect and its impact on people.

Research + Community Input

To explore different dimensions of the Surveillance Economy, we started from comments and thoughts shared by employees and community members through employee workshops and MozFest.

We then spoke to over 45 people* representing a wide range of perspectives on this space — from policy wonks, economic theorists, and heads of government to Mozilla product managers, community members, Mozilla Fellows, and diversity, equity, and inclusion activists. We asked each to help us understand the State of the Surveillance Economy: What is it? How did we get here? Why is it so insidious? How, exactly, does it hurt people and companies? And, most importantly, what can we do about it?

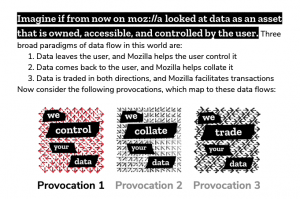

Working from some of our early findings, we created three design provocations and shared them with Mozilla community members and employees for voting and feedback. Mozilla has a remarkable asset in our diverse communities, so including their voices in this conversation was critical to our learning. These 'edge case' departures gave us more insight into which directions resonated most, and helped bring in new thinking too.

In parallel, we ran traditional (but limited) market research, built a graph and clustered topics from a Wikipedia crawl to help ensure subject breadth, and generally immersed ourselves in the world of surveillance.

Finally, we incorporated employee and community feedback from All Hands into the final suggested Explorations.

* We did not speak to many 'end users' as part of our inquiry, due to scope and timing limitations. However, the human voice will be critical for the next phase around each Exploration.

The Problems

Through discussion, research and workshops, we distilled three big problems that we felt lie at the heart of the Surveillance Economy:

- Exposed: The burden of protection is put on people—who can’t see or feel the threat.

- Excluded: Big companies are hoarding troves of data and smothering competition.

- Exploited: The only thing not surveilled is how people’s data is used.

Understanding and addressing these problems guided our creation of the Explorations.

Exposed: The burden of protection is put on people—who can’t see or feel the threat.

Opting out is not an option

To engage with the Internet, you have to be willing to give up data. It’s all in or all out, with little control over what is shared in different contexts.

Privacy is not user friendly

It’s clunky and requires continuous time, attention and knowledge to set up and maintain.

This pain is perpetuated by:

Corporations don’t see themselves as responsible

The ideology of Silicon Valley is that technology is neutral, so neither it nor the people who make it are accountable for designing with people’s privacy in mind.

Always more, and always on

While people have low visibility of corporate surveillance on their lives, corporations are striving to have ever more visibility of people: more touch points, more environments, and more data.

Little power to the people

People have become atomized customers, stripped of the collective power to demand new protections from corporations.

A mismatched market

People want convenience. Corporations want surplus. Companies supply the convenience people demand, extracting as much data as possible in return. It’s not a fair or transparent exchange.

- “As designers we aim for ease-of-use and don't think about opt-in/out.” - Caroline Sinders, Mozilla Community Member

- “People really don't know how to solve this—it's like the tsunami has already started, and we can't roll back the wave.” - Denise Hearn, Author, The Myth of Capitalism

- “Right now, the choice is binary; opt in or opt out. We need more tools, practices, products, to empower people to take more action.” - Katharina Borchert, Chief Innovation Officer, Mozilla

- “Don't ask me if I want a cookie when I'm reaching for the cookie jar.” - Cory Doctorow, Sci-Fi Author, Special Advisor, EFF

- “It's interesting how quickly people are willing to surrender power for convenience… Most algorithms are there to divide us, because an atomized person is a better customer.” - Douglas Rushkoff, Media Theorist and Author

- “There has been a violation of fiduciary structure. Lawyers and doctors have it —when you have privileged information, there is an asymmetry of power, and the law says these people can’t manipulate people based on it to buy services from you they don’t need, for example. Companies shouldn’t be able to collect data, which is the privileged information of the modern era, without a fiduciary responsibility for its use.” - Daniel Schmachtenberger, Civilization Theorist, Center for Humane Technology

- “Google doesn't need to spy on you; you're intentionally giving them your data.” - Eric Rescorla, CTO, Firefox

- “The way we design user interfaces can have a profound impact on the privacy of a user’s data. It should be easy for users to make choices that protect their data privacy. But all too often, big tech companies instead design their products to manipulate users into surrendering their data privacy. These methods are often called ‘Dark Patterns.’” - Alexis Hancock, Staff Technologist, EFF

Excluded: Big companies are hoarding troves of data and smothering competition

Barriers to entry for disadvantaged players

Monopolies of power and data are killing viable competition and innovation from alternative players. Making matters worse, the cost of compliance cripples small, medium, and even large businesses.

Discriminatory decision-making

Decisions based on biased data or algorithms, along with different value ascribed to data produced by different segments of the population, create an exclusionary and discriminatory reality.

This pain is perpetuated by:

Winners take all (the data)

Early entrants have access to the most data and users. This rigs the game in their favor and creates insurmountable barriers to entry for businesses of all shapes and sizes. Monopolies rule, and true innovation and viable competition are killed.

Data lakes over lean data

The bigger the data hoard, the bigger the value might be, even if we don’t know how to realize that value today. But just because companies are collecting huge quantities of data doesn’t mean it will be used for the right ends.

Walled gardens over public parks

Walled gardens don’t allow interoperability, keeping people stuck inside the garden and other players out.

Garbage in, garbage out

With no collection standards and many incentives to extract as much data as possible, data quality is low and provenance information is scarce. This means decisions about people’s lives are not based on sound empirical data.

- “It's not just a problem of collection now, but of how people use the stockpiles of data they have.” - Ben Wizner, Director of Speech, Privacy & Technology Project, ACLU

- “The default is: Collect everything, then we'll figure out how to monetize it later.” - David Bryant, Mozilla Fellow

- “I worry we are collecting data because it’s cheap. People think it's a pile of snow, but it's actually a pile of yellow snow. It's not clean.” - Jess Mitchell, Researcher, Inclusive Design Research Centre, OCAD

- “With a ‘collect it all’ approach, you're not creating the clean data sets you need for AI and ML models.” - Jochai Ben-Avie, Head of International Policy, Mozilla

Exploited: The only thing not surveilled is how people’s data is used.

Invisible practices enable invisible human exploitation

Whether someone is a user or a Mechanical Turk worker, their exploitation is invisible and many are unknowingly exposed to risk.

New data businesses are “accidentally” exploitative

As new or transforming companies start to participate in a digital-first world, they have no idea where to start when it comes to data practices. They can either design their own, or rely on surveillant tech products from the big players.

Advertisers and publishers held hostage by ad tech

There are few ad exchange alternatives.

This pain is perpetuated by:

Opacity enables risky business

The data supply chain is hidden by design, and the algorithms involved are often black boxes. Invisible practices give big companies cover for shady behavior, from ad fraud to data breaches, and let them sidestep conversations about ethics or regulation.

No knowledge means no power

With the excuse that it’s “too complicated to understand,” companies’ lack of transparency fuels a debilitating information asymmetry: since people don’t know what’s happening, they can’t demand different practices (like they have for other exploitative supply chains).

Little value means little care

Though people create the raw resource of data, they have no way to realize its value. Only companies can convert the raw data resource people produce into something of value.

- “The big change that I've seen in the last few years is that it has become possible for companies to gain market share by emphasizing privacy.” - Arvind Narayanan, Computer Scientist; Associate Professor, Princeton University

- “How are people supposed to be incentivized to care about the economics of their data, when their data has no economic value?” - David Gehring, Mozilla Fellow; Distributed Media Lab, CEO

- “‘Black box’ is more of a lack of understandability, because we haven't figured out how to explain things to people; it is not a technical solve, it's a very human thing, like standardization, traceability of decisions, etc.” - Rumman Chowdhury, Managing Director, Accenture AI

- “This whole economy is built on a false premise–targeted advertising is a scam.” - Mark Surman, Executive Director, Mozilla Foundation

- “A real market crash will force brands to pull back ad budget and they will realize that this will not change conversions and a lot of middle men will die overnight.” - Augustine Fou

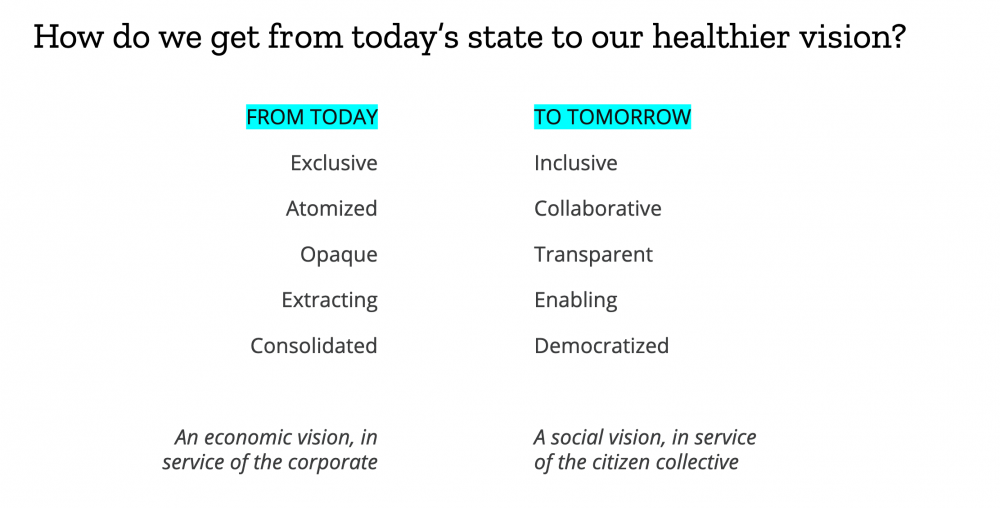

Towards a Positive Vision

The world needs a vision for a healthier alternative to the Surveillance Economy, and people want Mozilla to help define and create a more favorable future.

- “We need to organize the Internet around new values...Mozilla has these values: privacy, civil society, user control, along with a creative and active legal team.” - Ben Wizner, Director of Speech, Privacy & Technology Project, ACLU

At an early employee workshop in Berlin, someone commented that we lack a positive vision for a 'datified' society. What might that be?

Rather than an exposing, excluding, and exploiting system, we imagine an alternative to the Surveillance Economy.

Not an economy based on exploiting the individual, but a society in service of the collective.

A datified society is not built on manipulating the individual but on empowering its citizens.

This society consists of individuals, businesses, public groups, private social circles, and collectives.

Its citizens are part of a dialogue, an open agreement between one another, their governance, their economy, and their society at large.

Data is their connective thread, currency, and the evidence of their value exchanges.

- “We are not going to move out of this. Data will be a core point of the future value equation. So, how might we have an information economy and create value through AI and data, without being exploitative?” - Mark Surman, Executive Director, Mozilla Foundation

- “Technology made radical asymmetry and the Surveillance Economy possible. But social technology representing the collective can realign this asymmetry. Mozilla can help do this.” - Daniel Schmachtenberger, Civilization Theorist, Center for Humane Technology

- “The world needs Mozilla right now.” - Alan Davidson, VP of Global Policy, Trust and Security, Mozilla

- “This is not a digital revolution, it's a digital renaissance—and a renaissance means the things that were repressed come back...I feel like Mozilla shouldn't just be a Web browser, but a portal to the Internet's functionality. I hope it could somehow expose the Internet under there.” - Douglas Rushkoff, Media Theorist and Author

- “This is one of the biggest and most important things we can be working on right now. Mozilla is a player that matters in this.” - Anonymous

Three Explorations

What is an Exploration?

Explorations are designed to inspire big thinking about how Mozilla can change the game around the Surveillance Economy. Each includes steps we might pursue to create a more positive future. These suggestions are not the only steps, and perhaps not ultimately the right ones. But they will help us ‘learn by doing’ and ‘learn by caring’ — a core principle of this project.

We defined three Explorations that we think could transform the power imbalances of the Surveillance Economy. Each Exploration opportunity was derived from research, conversations with employees and experts, and analysis of where we think Mozilla’s power can have a disproportionate impact. Each is described in three phases: first, actions to help us dive in and test our assumptions about how we might have bolder, broader impact; then, building momentum; and finally, reaching our destination. These phases are suggestive, meaning they are not bound to any fixed timeline. They are intended to convey what the Exploration's ultimate goal would feel like, and the likely steps and transformations needed to reach it.

The three Explorations are described in detail at the following links:

Powering-up Consent and Identity

Changing the consent conversation from “control over your privacy” to “power over your identity.”

Bringing new value, transparency, and power to the people through control and ownership.

Productizing our healthier data practices and infrastructure and galvanizing a ‘better data’ movement.

Employee + Community Sentiment on the Explorations at All Hands

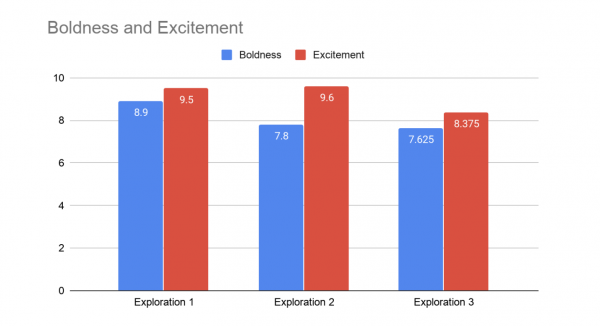

In keeping with the State of the Internet project's goal of understanding how we might have bolder, broader, and bigger impact in our mission, we asked those who walked through the Surveillance Economy Exploration exhibit at the January 2020 Mozilla All Hands to weigh in on which Explorations felt bold and which excited them most. Although participation from this self-selected group was low, all three Explorations scored high in both categories.

Further Reading

There is a lot of fantastic documentation and analysis of the surveillance economy, too much for us to keep updated here. However, here are a few of our favorites:

- Shoshana Zuboff, The Age of Surveillance Capitalism (book) + our IRL podcast.

- Mozilla's Internet Health Report.

- Kashmir Hill, “I Got Access to My Secret Consumer Score. Now You Can Get Yours Too” (paywall)

- New York Times' series The Privacy Project

- Mitchell Baker’s interview with Cheddar about rebuilding consumer trust in the internet

- Follow writings and presentations from Mozilla Fellows

Stay Informed + Get Involved

Email: state-of-the-internet@mozilla.com

Join #stateoftheinternet on Slack (Staff + NDA Contributors)