BMO/ElasticSearch/History

History

Martin Best started the Bugzilla Anthropology Project, which initiated a need for dashboarding the vast repository of information contained in Bugzilla. Metrics has setup an ElasticSearch cluster with all the historical meta data on the bugs in Bugzilla. This includes data on security bugs. Mozilla now has views of this data

Existing Code

We would like to increment on existing ETL. Unfortunately, the complexity of the installation may reduce the number of interested community members. There are six languages involved:

- Manual Process - Running a few command line functions to setup the index, and redirect the alias pointer when done

- bash - For the main ETL loop: Grouping the bugs into 10K groups

- Spoon - A visual programming tool to connect ETL code snippets, data sources, and data sinks

- SQL - To pull data from Bugzilla database directly

- Javascript - Used to write convert the fine-grained delta objects into bug version snapshot records

- Java - Used to push the bug version records into ElasticSearch cluster

New Design

Two Clusters: One Public and One Private

The new ETL will manage two separate clusters:

- Private - The first is like the original; containing non-identifying information on all bugs, including security bugs. This cluster will remain behind Mozilla's LDAP.

- Public - The second is new, and will include all information (including comments) on public bugs. It will be made available to the public for analysis.

Justification for Python

I believe a Python re-implementation of the existing code benefits community involvement by making the installation procedure simpler, and benefits the ongoing enhancement to code base by enabling unit and functional testing:

- The manual process only exists because the outer loop of ETL is in bash, and it is difficult to perform a dynamic state analysis to fully automate the process. A full Python version will make it easier to probe the existing index state and setup indexes and aliases automatically.

- Kettle (Spoon) is a 700Meg piece of software that is specialized for ETL jobs. Many of it's features are not used in this project. The record-level functions provided by this tool must be learnt to fully interpret the other languages' code.

- The heavy lifting is being done with the main Javascript routine. This can be converted, line-by-line to Python.

- SQL can be imported, without change, to the Python version

- The Java code to push rows to ES is significantly simpler in Python, and easier to maintain

- The current design has no debugging facilities, the Python version will allow stepping through code and easily inspecting intermediate states

- The Python version works better with source control; Spoon uses single-large-XML files which are not split by functional group (but admittedly are well behaved with source control)

- Be able to add tests, with a familiar test framework, and verify correctness

Update (23 October 2013)

The Python ETL is a now functional enough for use. The various servers for the public facing bugs has yet to be setup.

This project took more effort than expected. Here are some of the complications that slowed down development. Please keep in mind I only had a couple months of Python before doing this conversion, feel free to take pleasure at my ignorance:

ETL Issues

- Python and Javascript property access is different enough to cause a multitude of bugs when just performing naive conversion: For example, converting Javascript if (!a.b){ ... } to Python if not a["b"]: .... can emit key exceptions and simply take the wrong path when dealing with empty sets.

- Alias analysis is error prone: Users' email addresses can be changed, and there is no record of those changes. The bug activity table has recorded changes for emails that "apparently" do not exist. Well, they do exist, but are aliased. The old ETL used reviews to do some matching. The new version uses the CC lists which have more information. The problem is fundamental corruption in the history caused by (possible) direct poking of the database. This corruption must be mitigated with fuzzy logic.

- It took a while to build up a library of tests that could be used to verify future changes. More tests => more test code => more bugs in test code => more bugs found in production code => more tests. Sometimes it seemed endless.

Python Issues

- Python is slow. Python speed comes from the C libraries it uses, spending time in the Python interpreter is a bad idea. For example, going through the characters in all strings to check for invalid Unicode turned a slow program into an unusable one. The solution was to find a builtin library that did the work for me (or would raise an exception if the conditions were false). This ETL program has significant data structure transformations that can only be done in Python. The solution was to use the PyPy interpreter.

- PyPy does not work well with C libraries. The C libraries had to be removed in favor of pure Python versions of the same. This was not too hard, except when it came to JSON libraries

- JSON generation is slow: The built-in JSON emitter used generators to convert data structures to a JSON string, but the PyPy optimizer is terrible at analyzing generator code. Furthermore, the JSON libraries available to CPython are incredibly fast (Ujson is by almost 2 orders of magnitude faster!) This made the PyPy version appear inferior despite the speed up in the ETL portion of the code. Part of the solution was to use PyPy's own JSON emitter, but also realize PyPy's default JSON emitter (no pretty printing, no sub-classing, etc) has Ujson speeds. The fastest solution I found so far, is to copy the data structure (with sets, Decimal, and other special types) to one with simple dicts, lists and floats and pass it to the default PyPy JSON emitter[1].

- Python has old-school, unintuitive, routine names (strftime, mktime, randrange, etc) these take time to find, and time to confirm there isn't a better library that should be used instead. I opted to add a facade to most of them to re-envowel their names, and isolate myself from the risk of using the wrong lib (or have it behave in unexpected ways).

- Python2.7 strings are confusing: str() can be either Latin1 or UTF8 encoded, but without any typing to indicate which encoding is used. There are also unicode() strings, which look like strings until you try to compare them: "é" != u"é"

- Multithreading was necessary so we can handle multiple network requests at one time, while keeping the code easy to read. Python's threading library is still immature: It has no high level threading constructs to deal with common use cases in an environment that raises exceptions.

- Python2.7 has no exception chaining - added it

In the end we have a high speed ETL solution that is easy to install and execute. There are plenty of improvements that can be made, and definitely in the area of more threads and more multiple processes. But those can wait while we deploy.

SecReview (20 November 2013)

Introduction

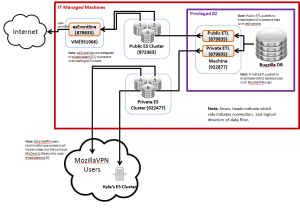

Public Bugzilla data in a publicly accessible ElasticSearch cluster!

- Overview https://wiki.mozilla.org/Auto-tools/Projects/PublicES

- Architecture

- PowerPoint https://bugzilla.mozilla.org/attachment.cgi?id=828667

- png version [2]

- Code https://github.com/klahnakoski/Bugzilla-ETL/tree/bzAliases

- History https://wiki.mozilla.org/index.php?title=Bugzilla_Anthropology/2013-01-29

Goal of Feature, what is trying to be achieved (problem solved, use cases, etc)

- Provide public fast cache of BZ data to:

- demonstrate current work

- allow community to build tools

- allow community to analyze trends, patterns

What solutions/approaches were considered other than the proposed solution?

- Tried to publicize the existing ES cluster information (private bugs with no comments or summary), but there was concern the CC list may reveal the bug's security category (https://bugzilla.mozilla.org/show_bug.cgi?id=823303)

- Using the BZ-API directly requires sophisticated caching, which appears to stall attempts at making snappy dashboards.

Why was this solution chosen?

- ElasticSearch is very fast

- Direct DB access leverages existing code

- Direct DB access puts no load on Bugzilla app

- Proven to work with business intelligence queries, which demand fast aggregate data over thousands of bugs (https://wiki.mozilla.org/Bugzilla_Anthropology/2013-01-29)

Any security threats already considered in the design and why?

- Private bug data leaking into public cluster

- ElasticSearch was not meant for direct public access, proxy added (https://bugzilla.mozilla.org/show_bug.cgi?id=879833)

Update (January 9th, 2014)

Over this past couple of months security reviews have been completed, and the suggested enhancements have been implemented.

ETL Highlights

Alias Analysis

All bugs have a carbon copy (CC) list of users that are mailed when the bug changes. The historical record of this list is kept as a list of added and removed email addresses, with timestamps of course. An issue arises when the user changes their email address: The next change in the historical record will refer to the new email address, and not the old, looking something like this:

| Time | Removed | Added | Resulting CC List |

| Jan 2nd | klahnakoski@mozilla.com | klahnakoski@mozilla.com | |

| Jan 3rd | mcote@mozilla.com | klahnakoski@mozilla.com, mcote@mozilla.com | |

| Jan 4th | kyle@lahnakoski.com | mcote@mozilla.com |

As humans, we know what happened here: Kyle (me) changed his email address (somewhere between Jan2nd and Jan4th), and then removed himself from the CC list for the bug. The ETL script has no such domain knowledge, and simply sees an inconsistency. A naive rebuilding of the CC list history would have to assume kyle@lahnakoski.com was in the CC list since the beginning (which is a legitimate situation, but uncommon, for the first snapshot of a bug). In aggregate, with all these mismatches, the naive rebuilding of historical record resulted in concluding many bugs started with long CC lists, that were eventually paired down over time to what currently exists. This pattern is quite opposite of reality; where a bug starts with usually few people and the list grows.

I implemented an alias analysis that uses the inconsistency in the history, specifically klahnakoski@mozilla.com was added to the CC (+1) but does not exist in the current bug state (+0). mcote@mozilla.com was added (+1) and exists (+1), so the logic is consistent. We must conclude removal is kyle@lahnakoski.com (-1) matches to addition of klahnakoski@mozilla.com (+1) to zero effect (0). Really, we are solving a simple equation:

+ k1 + m - k2 == m => k1 - k2 == 0 => k2 == k1

More complex cases involving simply more equations and more unknowns: Then we solve the system of equations. Lucky for us this is not as hard as algebra class because we have many more equations than we have variables to solve for: Finding a solution does not require all equations, and this helps us with the problem of corrupt history.

Corrupt history? - Yes, the older Bugzilla history is slightly corrupted, which can effect the solutions to these equations. To mitigate this problem, the solutions go through a voting stage to help determine what is the truth. In the case of this alias analysis: I found at least three systems of equations where a solution is found, and that same solution has been found twice as often as any other, then I know there's enough evidence to conclude I have the right solution (one email address matches another).

With alias analysis done, the CC list history looks like they should: Small CC list at the start of a bug's life, growing over time. The alias mapping that results is also used to match review flags (which is another story).

Proving Correctness

Bugzilla contains some bugs that should not be made public. These include bugs with specific security concerns, but also infrastructure specific details and other sensitive items. It is important that these do not leak. Making unit and functional tests is not enough because they can only test the known unknowns. The unknown unknowns are inevitable in any code with reasonable complexity and you can not test for those explicitly. Instead I want to perform the easier task of testing against invariants:

- Private bugs should not be in public cluster

- Private comments should not be in public cluster

- Private attachments should not be in public cluster

To check these tautologies I require datasource that knows what is "private" -- the private cluster has this! It is a simple matter of writing queries that ask for all private entities and check if any are in the public cluster. These queries are computationally expensive because they scan the whole datastore, but the queries are simple enough to be proven correct. Furthermore, these queries can be run outside the main ETL program, allowing us to phase out these expensive checks when we are confident the ETL code is correct.

Reflections (February 5th 2014)

My biggest mistakes were in time estimation. Generally, I underestimated communication/coordination overhead, which can be significant:

- Security reviews take time - The security team was fast and responsive, but it still took time to schedule meetings, and fix the design.

- Not much gets done during holidays - The holidays are for family and good food, not for production deployment. Assume nothing will get done for two weeks.

- Production deployment takes time - The IT group responded quickly, but it took time to setup the app, the machines and the configurations. This can be a couple of weeks at least, much of it communication delay and context switching overhead.

- Debugging production code is hard - If something goes wrong in production, it can be hard to debug.

- Inspecting code for possible bugs that match the problem's signature,

- making a test to validate,

- adding more logging in case you fixed some other bug instead,

- running the battery of tests, and

- scheduling IT to push the next version

- all takes time; a couple of days at least. Compare this to development: where you can squash a bug in an hour.

Another issue, mentioned above, is the extra effort in adding tests to the code-base: More tests => more test code => more bugs in test code => more bugs found in production code => more tests. A very good thing for sure, but I should have recalled from my past that tests account for 2/3 of coding effort. I should have tried to estimate the effort to rewrite the existing code, then doubled it to get an estimate for writing the testing code. I had lazily glossed over that non-trivial amount of effort.

Finally, Python has idiosyncrasies, and the bugs caused by utf8/latin1 were the most time consuming.