TestEngineering/Performance/Sheriffing/Workflow

Context

Performance sheriffs, along with the standard by-the-book definition, are responsible for checking for performance regressions on a daily basis. This is done by reviewing alerts from our performance tests on Perfherder. Any time a test or tests exceed the set threshold for its framework, one or multiple alerts will be generated. The goal of the sheriff is to identify the commit or revision responsible for the regression, and file a bug with a :needinfo flag for the author(s) of that commit or revision.

How to Become a Sheriff

To become a sheriff(and have sheriffing permissions) you will need to file 2 bugs to have sheriffing actions allowed in treeherder.

1) Request to be added to the treeherder sheriff group

- Add your name in the title and in the description add your ldap email address in the linked bug

2) Add yourself to the perf_sheriff group

- Add name and ldap email in title and email in the description of the linked bug

Once both bugs are completed check that you can do sheriffing actions. You should be able to now update alerts through the ability to add notes and tags to alerts.

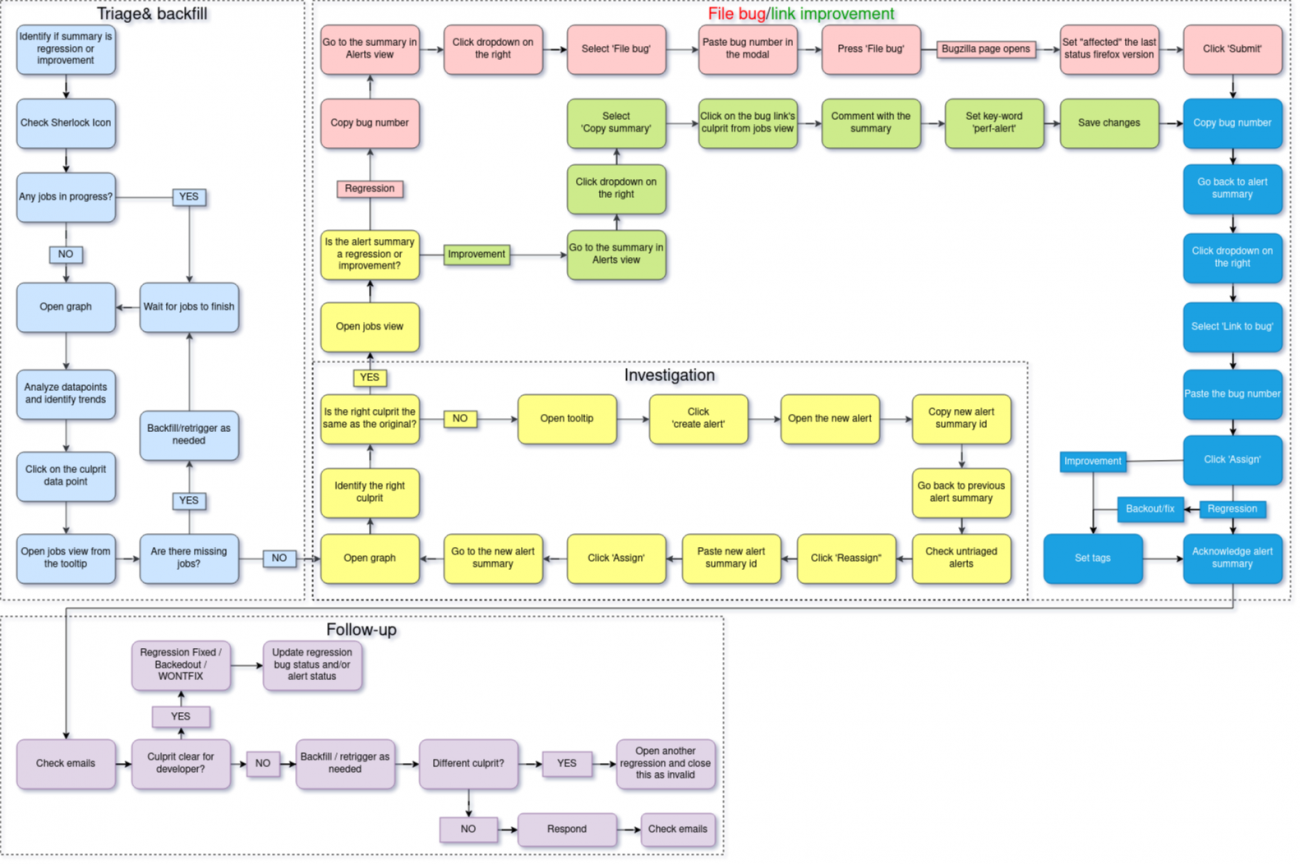

Flowchart

app.diagrams.net link: https://drive.google.com/file/d/1Hpg9AjKTA2jx413Ly4imJWwM_gQHtLU3/view

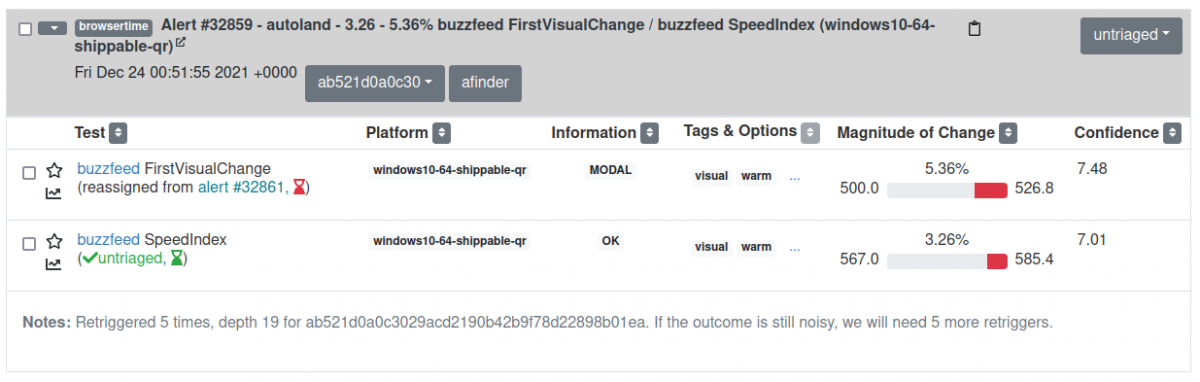

Filtering and reading the alerts

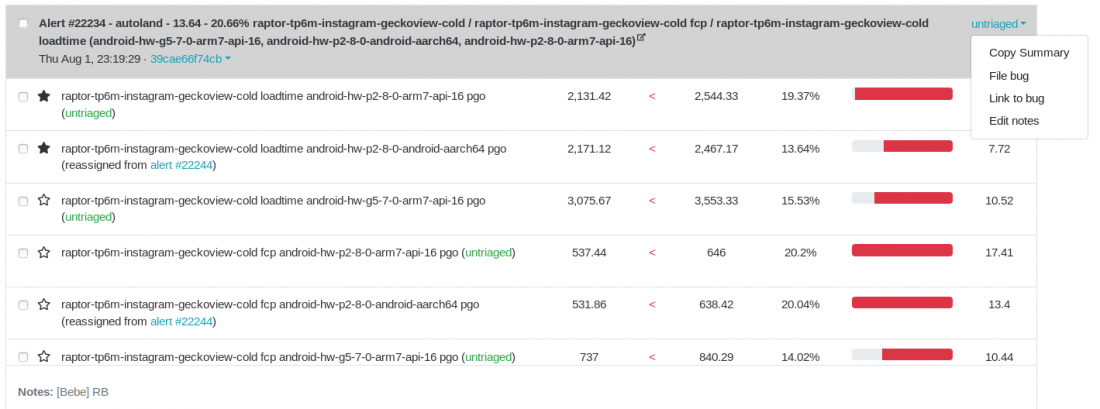

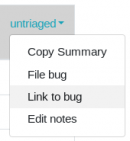

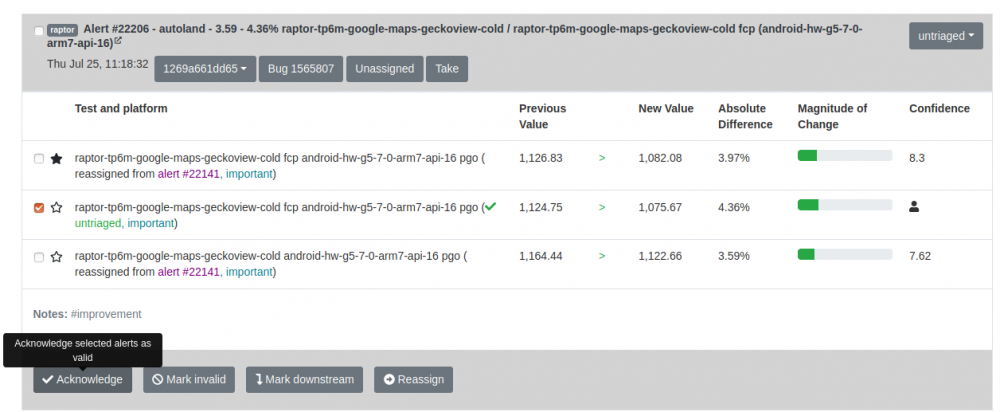

First thing after accessing the Perfherder alerts page is to make sure the filter is set to show the correct alerts you need to sheriff. The new alerts can be found in untriaged.

![]()

The Hide downstream/reassigned/invalid button is meant to reduce the visual pollution in case you don’t want to see the downstream/reassigned/invalid alerts. My alerts button will show only alerts that are assigned to you.

The alerts are grouped by Summaries*.

The tests:

- may run on different platforms (e.g. Windows, Ubuntu, android, etc.)

- can share the same suite (e.g. tp6m)

- share the same framework (e.g. raptor, talos): if a particular commit trigger alerts from multiple frameworks, there will be different summaries for every framework.

- measure various metrics (e.g. FCP, loadtime), but not all of the metrics trigger alerts

*By the book, an alert is one item of the summary, but we can refer also to a summary as an alert, depending on the context.

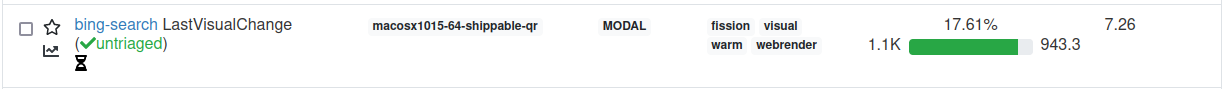

Though you can see in the summary items references to those namings, like below.

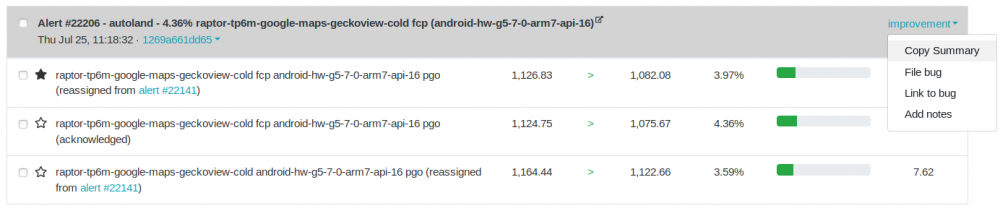

Ideally, the intent of every patch landed to the mozilla repositories is to cause improvements, but in the real world it doesn’t happen like that. An alert summary can contain improvements, regressions or both.

- the improvements are marked with green

- and the regressions are marked with red.

How to investigate an alert

Before doing any investigation, you have to assign the alert to yourself by clicking the ![]() button and pressing Enter after. You should see your usename in-place

button and pressing Enter after. You should see your usename in-place

![]() .

.

Golden rules

As golden rules, when you are not sure about the culprit of the graph you're investigating:

- zoom in until you can easily distinguish the datapoints

- retrigger more if the graph is too unstable for the data you have

- when the graph is too noisy and zooming in only makes it harder: zoom out, choose a larger timeframe (30 days, 60 days, etc), and then zoom back in slowly, following the limit line of the values in the before half of the graph until you find the datapoint that changes the trend.

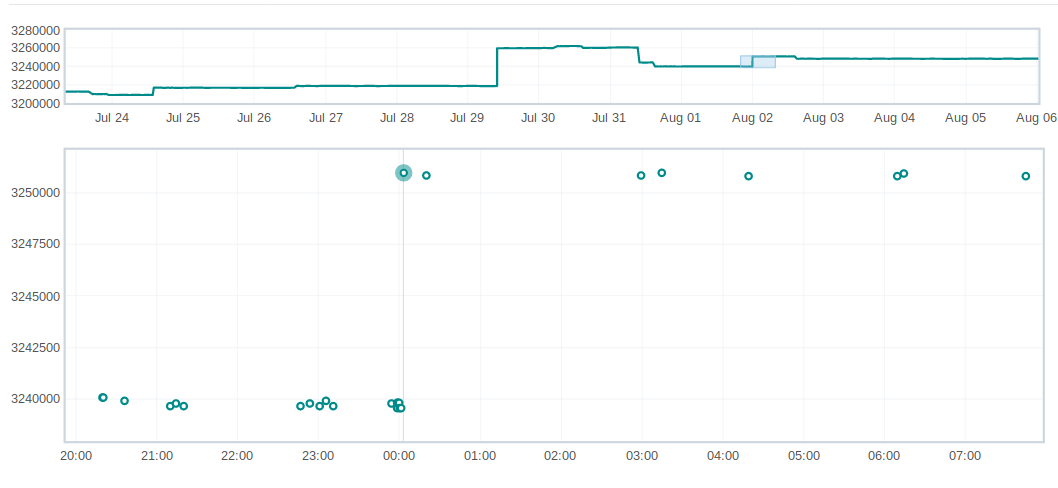

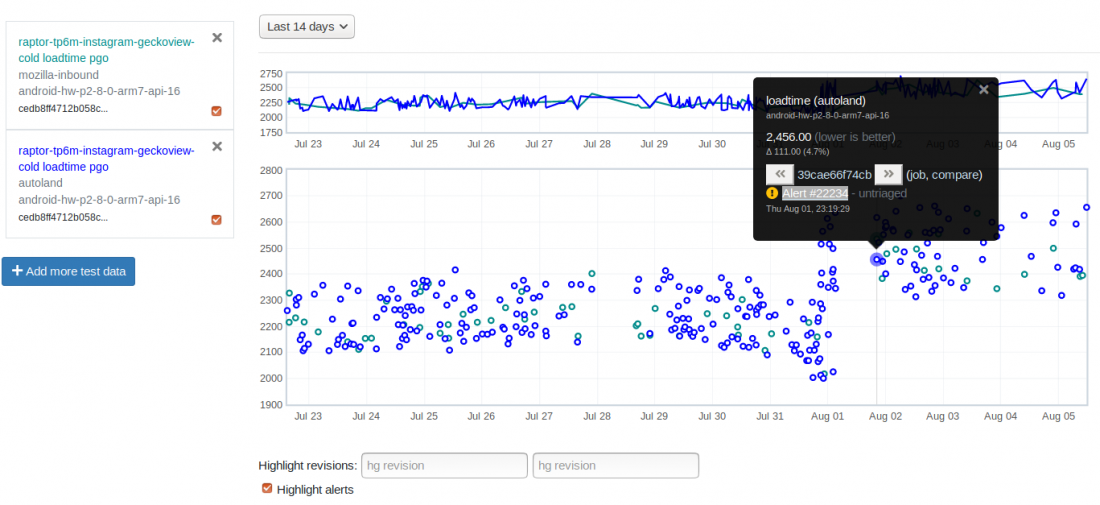

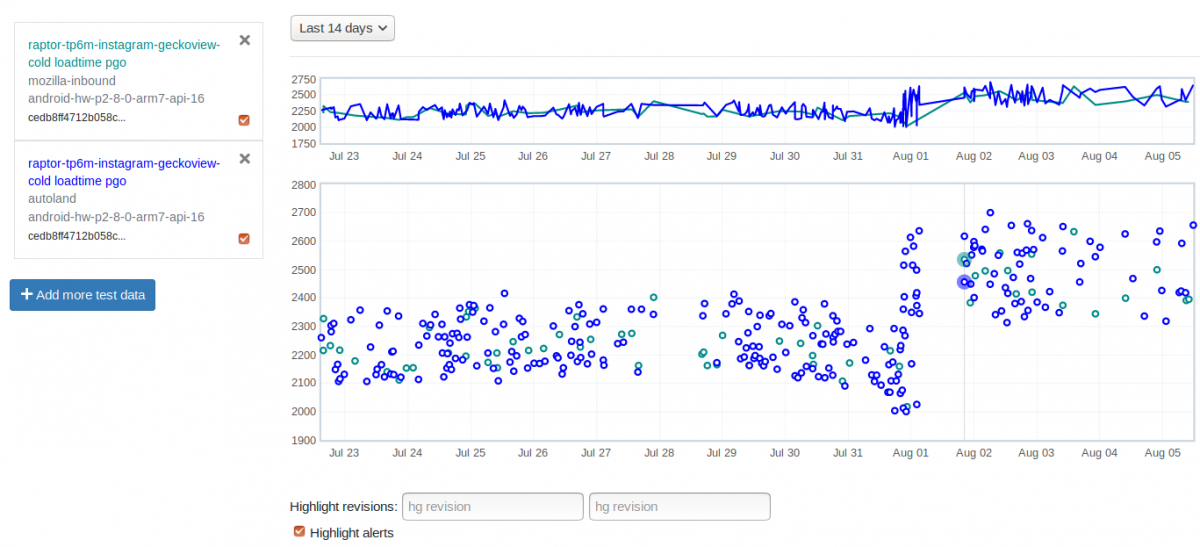

Reading the graph

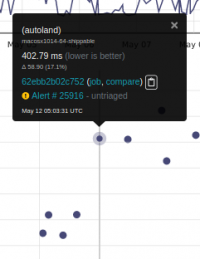

To read the graph of a certain alert, you just need to put the mouse over it and click on the graph link that appears:

Starring it ![]() you make sure you know which alert you read when come back to the summary.

you make sure you know which alert you read when come back to the summary.

The graph will show with a thin vertical line all the alerts associated with the test, so you need to make sure you’re looking at the right one by hovering or clicking on the datapoint. If the datapoint of the improvement/regression is not clear you might want to:

- zoom by drawing a rectangle over the desired area

- zoom out by clicking on the top graph

- extend the timeframe of the graph using the dropbox on the top of the page.

If the commit of the improvement/regression is not clear, take the desired action (usually Retrigger/Backfill) and make sure you write down in the notes of the alert (Add/Edit notes) your name and what you did, so you or another colleague know what’s happening next time the alert is sheriffed. The pattern is: [yourname] comments. We use to leave most recent comments first so we can easily read them when we come back.

TODO: list categories of graph - stable, noisy (noisy with bigger or smaller trend change), modal, invalid.

Retrigger/Backfill

Depending on the test, the jobs are ran once in several revisions or on every revision. The vast majority runs once in several revision, so almost every time you need to do a backfill between the first bad and last good job in order to determine for sure the culprit, by clicking on the job link from the tooltip of the regression data-point.

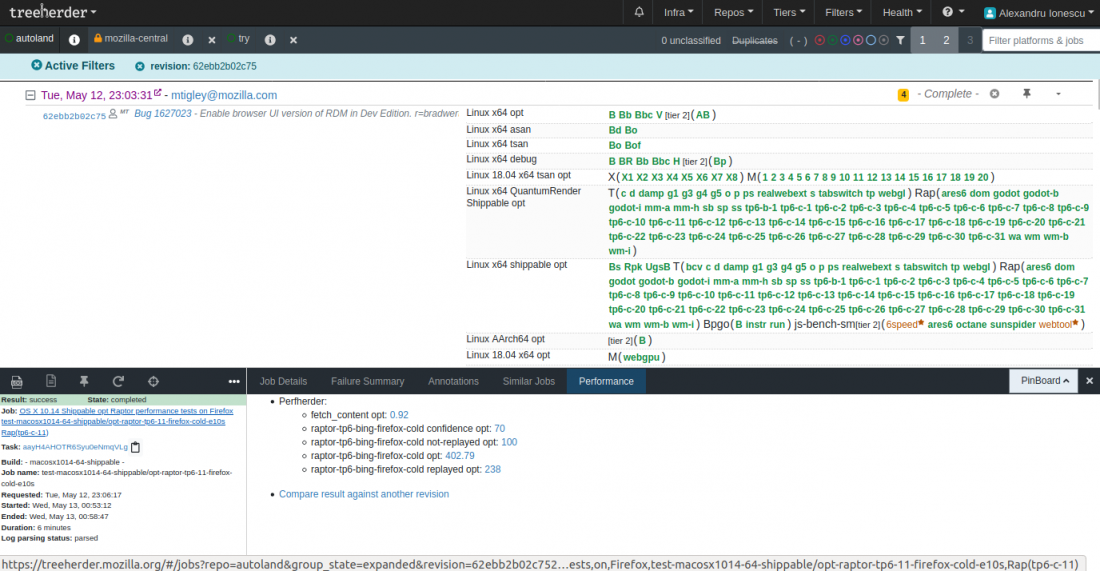

A new page with the list of jobs corresponding to the datapoint will appear, then click on the link next to Job at the lower left section of the page to narrow down the jobs you see just to the one that caused the regression.

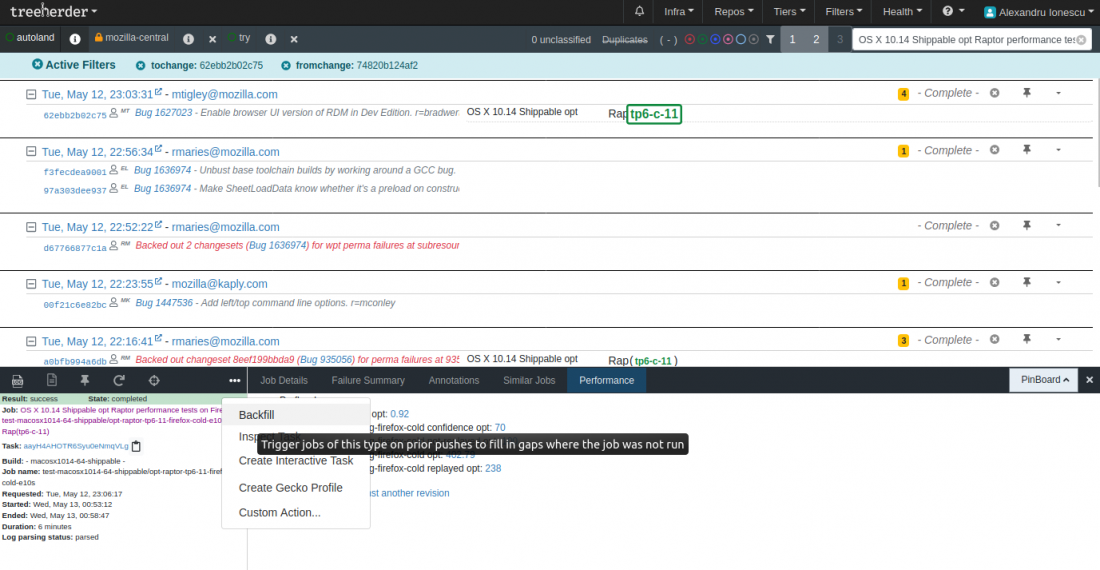

You're now seeing just the job from the revision that caused the regression. Now you need to see the previous jobs in order to identify how many revisions you need to backfill, by clicking on the number in the image below, usually the lowest is chosen

![]()

Having the top job selected, you now need to trigger the backfill action. Usually, the sheriffs chose the quick one (Backfill button) but with Custom action... you can chose how many revisions you want to go deep or how many retriggers per revision you want. There are also available other types of actions the sheriffs team don't use.

After triggering the action you have to see the confirmation message at the top left of the screen which shouldn't appear later than 5-7 seconds. If you don't see that you risk coming back and not have the desired jobs trigerred, meaning you lose investigation time.

![]()

After the jobs are finished running, you have jobs triggered on successive revisions and you can continue looking for the culprit.

Finding the culprit

- A clear improvement/regression appears usually when there is easily noticeable difference between two adjacent data points:

- There are cases when the difference is much less noticeable and the data of the test is more unstable, and some retriggers are necessary in order to determine the interval for the test data and compare it between several adjacent tests:

- There are cases when there's a revision(s) gap between the data points in the graph, and a little investigation might save us manual bisection:

https://mozilla.hosted.panopto.com/Panopto/Pages/Viewer.aspx?id=6f5cd956-d656-488c-bc91-b085009703c1

- A less fortunate situation is when the test is unstable, there are gaps in graph when the tests didn’t run for various reasons and the regression/improvement is almost impossible to be determined. If the investigation takes more than 5 business days it’s recommended to ask for help, if you haven’t already:

- Ask the other sheriffs in your team.

- See if there were situations like this in the past and find out how they were treated (there’s a high change there were).

- Search for the test framework in individual module ownership page or just search for what you want in mozilla wiki.

- Try to reach the framework owner you find in individual module ownership page by Mozilla's chat app, email or other method handy for you.

- Mozilla dashboard might also be helpful.

- If you still don’t figure it out, ask your team lead.

The investigation might end up opening a bug without knowing the specific commit that caused the regression and asking for help from most relevant people you found about.

A less common case of regression/improvement is when the graph is pretty clear about the culprit but the patch contains changes unrelated to the platform(s) targeted by the alert. For example, if the patch is just modifying configuration stuff for mobile platforms and the alert targets only desktop platforms, it might be an error somewhere. The recommendation is to ask in the culprit bug about what might be missing before opening the regression/linking the improvement to it.

Handling alerts

When an alert is created, it is not necessarily caused by a bug. It can be also caused by the instability/noise of the test or by other causes that are unrelated to the repo code, like CI setup.

A bug associated with an alert needs to have the perf-alert keyword. The regression bugs have that automatically when the File bug button is pressed, but for the bugs that are not regression bugs needs to be added manually.

Thresholds

There are different thresholds above which the alerts are considered regressions and they vary depending on the framework:

- AWSY >= 0,25%

- Build metrics installer size >= 100kb

- talos, raptor, Build metrics >= 2%

Regressions vs improvements

Whilst the difference between regressions and improvements is self-explanatory, after acknowledging the alert:

- the regressions go through multiple statuses until the final resolution

- the improvements have the only status of "improvement"

Identifying the culprit

If the revision that caused the regression is clear, then what is left to do is identify the culprit bug. There are several situations here:

- If the revision contains changes only from one bug, you need to open a regression bug for that.

- If the revision contains changes from several bugs but you are familiar with the test and you know which of the bugs caused the regression, open a bug for that one.

- If the revision contains changes from several bugs (usually a merge from one of the other repos), you need to do a bisection in order to identify the causing bug.

Harness alerts

Harness alerts are usually caused by re-recordings or changes to code from testing/raptor component changing the baseline. If the regression is assumed, then link the culprit directly to the alert, close as WONTFIX and add the "harness" tag. Otherwise, open a regression bug.

Baseline Changes

Baseline changes are hard to identify, but we have some questions we can ask ourselves and with other people on the team to help determine if we are dealing with a real regression or a baseline change:

1) Should the patch that caused a change in the baseline metrics because we are aiming for testing in a different environment?

If yes, then the new baseline may be accepted. As the conditions for testing have changed we have to expect that some things will change. Take for instance adding a blank profile to the conditioned profile changes, as this is testing in a different environment we expect things will change

2) Is the patch changing something that shouldn't cause a shift in metrics?

If yes, then do not accept the new baseline immediately, do an analysis and discuss with other sheriffs to get second opinions.

3) Does the patch change the test itself?

If yes, a new baseline may be accepted depending on the changes. If the changes are not functional, then we shouldn’t accept a new baseline.

4) Are test recordings changing?

If yes, then a new baseline should be accepted after checking to make sure that the page that is being tested is different from the previous recording. If they aren’t, then the differences should be investigated further - it’s possible that they still may be a valid baseline change even if the page is the same, as non-visual changes may be different.

5) Any other cases that do not fall in these categories should be discussed with the team for second opinions and with patch authors if needed.

Backout/regression-fix alerts

These alerts are caused by backouts or fixes of regressions and the associated tags are regression-backedout respectively regression-fix. The status of regressions can be changed to WONTFIX.

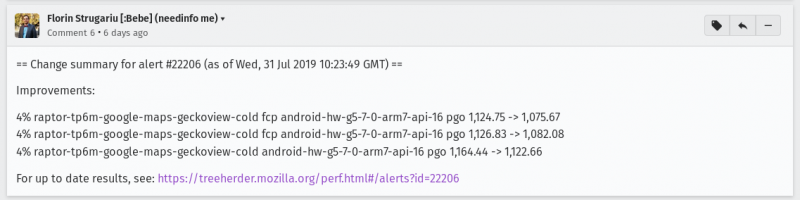

For alerts caused by backout the process is to reply to the backout comment with the summary of the alert and adding the perf-alert keyword.

Infra alerts

What is an infra alert

The regressions caused by infra changes are probably the most difficult to identify. Excepting the case when the infra change is announced and known of, an infra regression is usually most likely to be detected by the sheriff after all the suspect commits/bugs were removed from the list.

Anything that doesn’t depend on the repo code is considered to be part of the CI infrastructure, so it’s not dependent on the code state on a certain point in history/graph. For example, if the farm devices were updated with changed OS images, no matter which datapoint from history is (re)triggered, it will run on the current image. So, if the changes of the OS image don’t have the desired effect (improvement - this is always the intent), the retriggers will reveal a regression between the old datapoint(s) and the new ones of the same commit.

Looking at the graph below, it is obvious that until Apr 17 the graph is constantly around 1800-2000 and after that it dropped to 1200-1400. The backfill didn't reveal the culprit so we retriggered a while back. The retriggers were in the interval of the improvement and we can also see that there are many yellow vertical lines that mark the infra changes.

For an easy follow-up, there’s a changelog containing the changes related to the infrastructure that is very useful when the investigation is leading to this kind of regression: https://changelog.dev.mozaws.net/

How to handle infra alerts

Infra alerts became more frequent starting sometime in 2022, but the causes are still unknown for the moment. They usually come up in pairs of regression-improvement. When a regression comes up:

- it has to be tagged with the infra tag and move it to investigating queue

As soon as it comes back, if it is an improvement:

- it has to be tagged with the infra, regression-fix, improvement tags and mark it as WONTFIX

OR if the alert contains regression alerts:

- it has to be tagged with the infra, regression-fix' tags and mark it as WONTFIX

as well as the regression alert

- has to be changed the status to FIXED

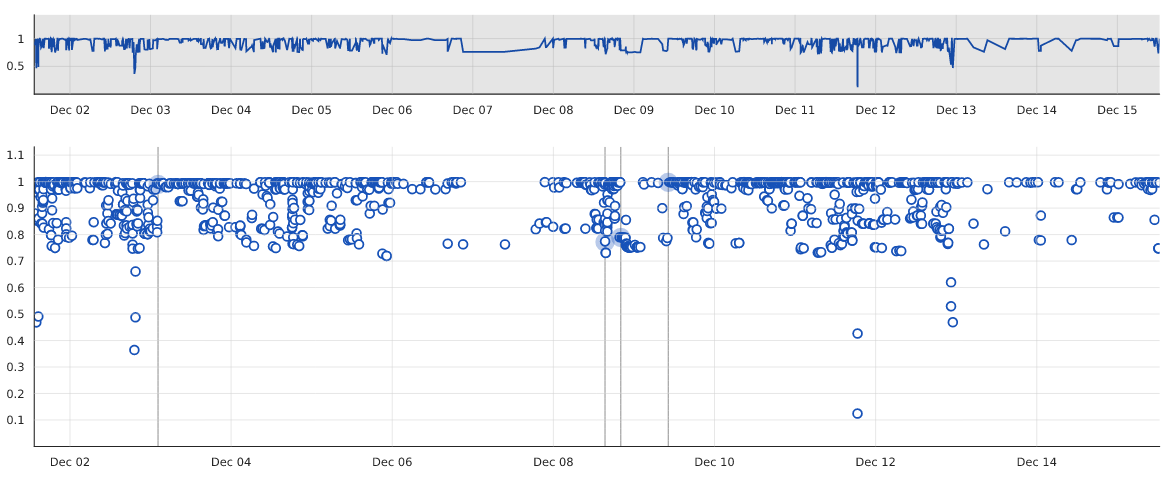

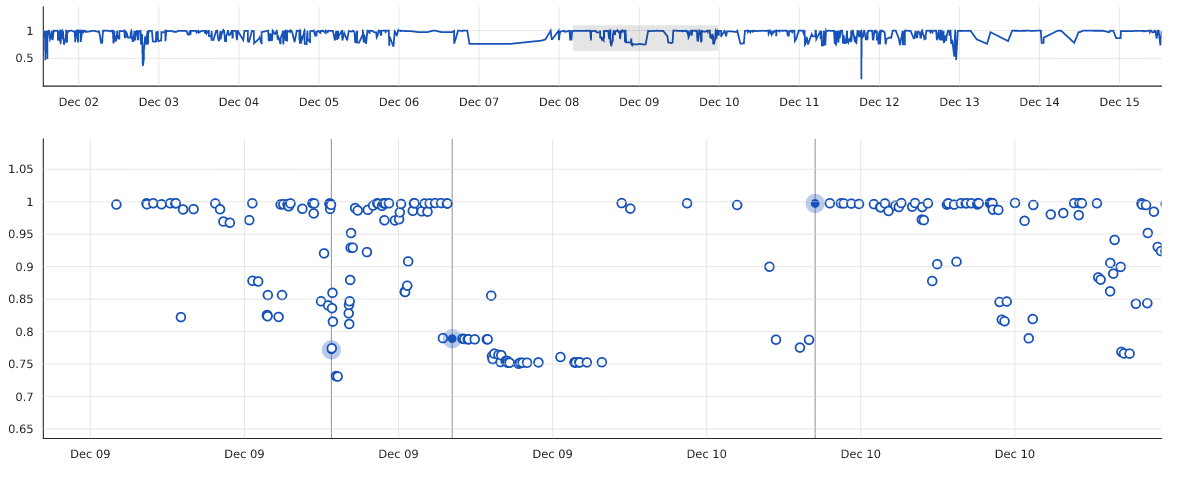

Invalid alerts

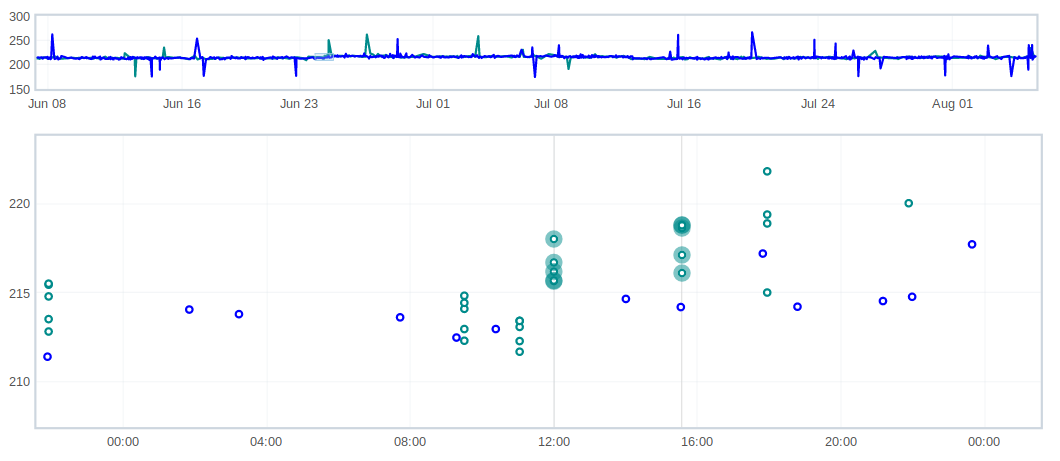

Invalid regressions usually (but not only) happen when the test results are very unstable. A useful tip of finding invalid regressions is looking at graph’s history for a pattern in the evolution of the datapoints.

In the graph below, the regression appeared around Dec 9 and as you can see, there a pattern of vary predominantly between 0.7 and 1. If you click on first highlighted datapoint (around Dec 3), you’ll see that its alert is marked as invalid.

Attention, though, that despite this graph has a wide varying interval, most of the datapoint are concentrated around value 1. This is the case of the alert around Dec 9, after which the stabilized itself around the regression’s value (0.75 - 0.8). This is a real regression!

A particular case are sccache hit rate tests. Most often, those alerts are invalid, but is the hit rate drops stay stays low for at least 12-24h then a regression bug should be open.

Handling Regressions

There are two different approaches to handling regressions:

- filing a regression bug actual regressions

- letting the author of the culprit know that their patch(es) caused a regression when we know that it will be accepted (backout, regression-fix, harness)

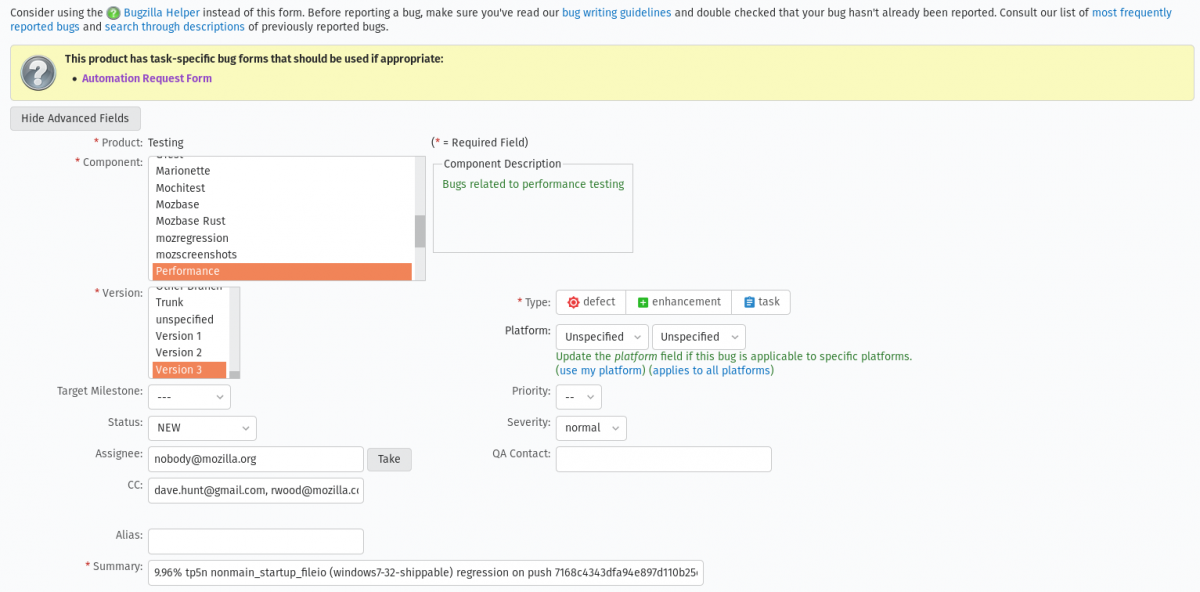

Filing a regression bug

Note: To file a bug from Perfherder you are required to be authenticated.

Steps to file regression bug:

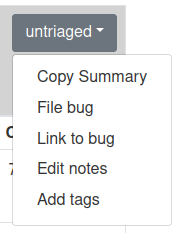

1. Click on the Untriaged status in the upper right side of the alert summary.

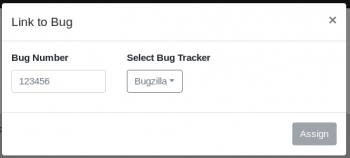

2. Next select the File bug option and enter the bug number from the bug that caused the regression. You will be redirected to bugzilla.mozilla.org.

3. In bugzilla scroll down and click on Set bug flags and set to affected the last status-firefox version that appears in the list.

4. Click on the Submit Bug button from the bottom of the page.

Manually filling a regression bug

Now that you know the causing bug, you need to make sure that there isn’t already a bug open for this, by searching for regressions just like following up on regressions but clearing the Search by People > Reporter field.

If there is no regression bug open, you need to open one:

The new page should look like this:

Most important fields when filing a regression bug are:

- Type: Defect

- Keywords: perf, perf-alert, regression - will be automatically filled

- Blocks: indicate the next release of the firefox and is a meta bug used to keep track of the regressions associated with a specific release

- Regressed by: the number of the bug that caused the regression (note: the bug number appears strikedthrough if it is closed)

- Request information from: the assignee of the bug

- CC: here usually goes at least the assignee, reporter and triage owner

- tracking-flag will appear at the bottom of the page after you click on Set bug flags and you have to set to affected the last status-firefox version that appears in the list, in this case status-firefox80

- Product and Component: are automatically filled in the Enter bug page, so you need to save the bug with the details so far and click edit to modify those. They have to be the same as in the original bug

After you finished with the regression bug you need to link it to the summary and change the status of the alert to acknowledged.

Next you have to follow the comments of the bug so you make sure it’s closed, ideally before the next Firefox release.

Handling improvements

Unlike for regressions, when you have identified an improvement, there's no need to open a bug. Just notify the bug assignee via a comment and add the 'perf-alert' keyword.

Valid improvements

This time you just need copy the summary, paste it as “Congrats” to the bug causing it and update the status of the summary:

You also need to add the 'perf-alert' keyword, to indicate there is a performance alert associated with this bug.

Note: If by mistake you left a comment that is actually invalid, just add to the comment the tag obsolete.

Attention! The summary could contain alerts reassigned from other alerts. You have to tick the box next to each untriaged alert and change its status to Acknowledge.

Ticking the box next to the alert summary and resetting it will UNLINK the reassigned alerts and you don’t want to do that!

Improvements treated as regression

Depending on the test, a high magnitude improvement (> 80%) should be treated more carefully. While a 100% improvement for a pageload test is impossible (the site never loads in an instant), over 80% is very rare and might imply that the test isn't loading what it should (an error page which is likely to contain much less code than the actual website).

Invalid improvements

Here you can apply the same logic as for invalid regressions, the difference is that the unstable graph evolution triggered an alert while the value changed in the sense of an improvement.

Updating alerts’ status

After finding the culprit (and done the necessary actions for the improvement/regression), you have to:

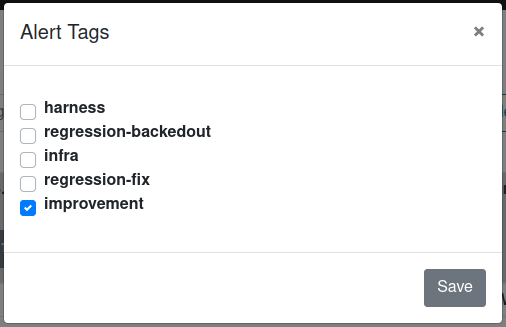

Add notes tag

TODO: update screenshots with tags modal

Update the notes tag if it fits to one of the following:

Improvements

- harness - patches that updated that harness and caused improvements

- regression-backedout - patches backed out due to causing regressions

- applies to the alerts linked to bugs that backed out a regression NOT to alerts that are linked to regression bugs

- infra - improvements caused by infra changes (changes not related to repository code)

- regression-fix - patches fixing a reported regression bug

- applies to the alerts linked to bugs that fixed a regression NOT to alerts that are linked to regression bugs

- improvements - patches causing improvements

Regressions

- harness - patches that updated that harness and caused regressions

- regression-backedout - patches backed out due to causing regressions

- applies to the alerts linked to bugs that backed out a regression NOT to alerts that are linked to regression bugs

- infra - regressions caused by infra changes (changes not related to repository code)

- regression-fix - patches fixing a reported regression bug

- applies to the alerts linked to bugs that fixed a regression NOT to alerts that are linked to regression bugs

- improvement-backedout - regression caused by backing out an earlier improvement

Move alerts from untriaged queue

After the revision that caused the alert was identified move alerts from untriaged queue as follows:

- if the alert is a valid improvement/regression and you linked a bug for it, change to

- if the alert is an invalid improvement/regression, change to

- if the alert is a downstream of an improvement/regression, change to

- if the improvement/regression happened earlier/later on the same repo, change to

Follow-up on regressions

TODO: suggested email filter, followup on comments and needinfos, update alerts status when the regression bug closes.

Questioned regressions

The regression identified from the graph can be inaccurate for several reasons. When the author of the culprit patch/bug doesn't agree that their code caused the regression, we usually take another look over the alert. What can the sheriff do is the following (but not necessary limited to):

- retrigger/backfill the jobs around the regression, especially when the graph is noisy and the regression is not very clear. The sheriff is used to read the graph and can see something clear while the author of the patch doesn't have that skill.

- it is possible that the alert contains 2 very close or neighbor regressions. If the alert contains different tests in terms of naming, it is possible that they are caused by different revisions and need to be confirmed/infirmed by some retriggers/backfills<br/

- sometimes, despite the graph is clear for the sheriff, the patch can contain "static" code (comments, documentation updates). In this case, other cause might be infra change. But be careful, the developer doesn't have to know what the patch does, so sometimes those are caught only if the developer questions the regression

Regressions with no activity

Regression bugs with no activity for three days should be:

- responding to open questions to sheriffs, or

- added the [qf] whiteboard entry

You can follow up on all the open regression bugs created by you.

Special Cases

Multiple Bug IDs on the same push

Regressions

If the push that has been identified as the culprit has multiple revisions and bug ids linked to it, the sheriff can fill in the "Regressed By" field for each of the bugs with the value of the filed bug for the associated alert.

If there's a regression-fix, regression-backedout or improvement-backedout bug to be linked to the alert but the revision contains multiple bugs, link the most relevant bug to the alert. If you're unsure, ni? the author to tell which of the bugs they think caused the alert.

Improvements

The general approach for improvements is to leave needinfos to the authors to ask for help in identifiying the corresponding bug.

Sheriffing Firefox-Android Alerts

The firefox-android repo (which contains both Fenix and Focus) also produces alerts at a lower cadence (~6 days after a change is triggered).

Sheriffing this branch is the same as autoland in that you'll need to do backfills on the firefox-android branch to determine a culprit commit. Once you find a culprit commit, then file a bug in Bugzilla as usual, and needinfo the author of the commit.

If you find a commit that has no clear author such as this one, then you'll have one of two options depending on the type of commit it is:

- If the commit message mentions GeckoView or something related to mozilla-central, then needinfo Android engineering manager Bryan Clark [:bclark] in the bug for the alert.

- If the commit message doesn't mention GeckoView and has no clear author, like this commit, then needinfo Android engineering manager Joe Mahon [:jmahon] in the bug for the alert.

Check to see if there might be an associated GeckoView alert that was produced on autoland when there's an alert produced on firefox-android. If there was, then you can associated the two alert bugs with a See Also entry.

Lastly, backfilling on the mozilla-central side when a change is detected in a GeckoView commit is done with try runs. You can find instructions for that here.

How to triage a firefox-android alert

1. Read the graph, check for job gaps (browsertime & build_metrics):

- click on the graph icon next to an alert item (usually the highest magnitude)

a thin vertical line will show for all the alerts associated with the test, you need to make sure you’re looking at the right one by hovering or * clicking on the datapoint

- click on the job link to go to treeherder job view

- make sure you’re seeing the jobs from current revision back and look for gaps

2. Trigger the jobs in the revisions in the gap (browsertime & build_metrics)

- For every revision in the gap click Add new jobs and trigger the needed job manually

3. Find the responsible push that caused the regression

- If the regression is caused by a mozilla-central push, then you have to take the build id (Update GeckoView (Nightly) to 121.0.20231106094018.) and search for the revision in Buildhub

- Build-metrics: file a generic performance regression

- Browsertime: it should be an autoland alert to mark as downstream for

4. If it’s caused by a firefox-android push then the process of filling a regression bug should be straight-forward - the default one from documentation. (browsertime & build_metrics)

Special case

There’s a special case that happens very very rare when code from geckoview build (mozilla-central) doesn’t affect geckoview pageload but affects fenix (firefox-android). It is the case for alert 40028. In this case there’s no other option than bisection on mozilla-central with fenix build. The steps are:

- Follow bisection workflow from documentation

- Checkout to the previous commit of the suspect one

- Download the fenix-android-all fenix-nightlySim(B) build: target.armeabi-v7a.apk (artifacts)

- Add it to the code using the command: ./mach try perf --browsertime-upload-apk path/to//target.armeabi-v7a.apk

- Commit hg commit -m="fenix apk"

- Push to try the pageload job for geckoview Android 11.0 Samsung A51 AArch64 Shippable

- Repeat steps 1-6 for pushing to try the suspected revision and then compare the results to try.

Alerts caused by modifying release flags (EARLY_BETA_OR_EARLIER) / bumping the Firefox version

These alerts are generated on mozilla-beta and have no bugs associated with the revisions:

- Check the milestone version by looking into the changeset details.

- Check if there was already a bugzilla bug created for this particualr milestone version.

- If not, then clone the following bug https://bugzilla.mozilla.org/show_bug.cgi?id=1879080.

- Leave a comment with the alert summary you were investigating.

Comment 0 could include the following information:

There were some alerts generated on mozilla-beta, that have no bugs associated with the revisions.

Alert: https://treeherder.mozilla.org/perf.html#/alerts?id=<alert#number>

Pushlog: https://hg.mozilla.org/releases/mozilla-beta/pushloghtml?fromchange=<rev>&tochange=<rev>

Changeset Details: https://hg.mozilla.org/releases/mozilla-beta/rev/<rev>

A few notes to consider:

- for mozilla-beta alerts, we normally search for downstreams on autoland

- most of the time, when a change is pushed to autoland, it is also pushed to mozilla-central during the same day

- on mozilla-beta the change is merged after ~ 6 weeks

build_metrics special cases

- decision alerts usually are just spikes and not relevant, which is why we are setting them as invalid. In the case that, by looking at the coresponding graph, the regression wasn't improved / fixed, then check with the other sheriffs in the Sheriffs of Performance channel on Element since it might be a cause of concern.

- instrumented in the Tags & Options column should be set as invalid. Alerting will be deactivated for these tests in the future.

Regression which was already backedout

If we identify a regression alert summary and that particular changeset was already backout then the safe approach would be to file a bug by following the usual workflow, but mention in the comment0 that this was already backedout and there's no action item for the author. This bug is only created for tracking purposes / being able to acknowledge the change summary of the alert.

Jobs are failing to run

In this case we usually resort to bisection.

Invalid comments

If by mistake you left a comment that is actually invalid, just add to the comment the tag obsolete.